Anvil Composable Subsystem

New usage patterns have emerged in research computing that depend on the availability of custom services such as notebooks, databases, elastic software stacks, and science gateways alongside traditional batch HPC. The Anvil Composable Subsystem is a Kubernetes based private cloud managed with Rancher that provides a platform for creating composable infrastructure on demand. This cloud-style flexibility provides researchers the ability to self-deploy and manage persistent services to complement HPC workflows and run container-based data analysis tools and applications.

Concepts

Containers & Images

Image - An image is a read-only package that bundles an application you want to run together with the libraries, dependencies, and tools required for its successful execution. It is typically built from a simple text file of build instructions, such as a Dockerfile. Images are immutable, meaning they do not hold state or application data. Images represent a software environment at a specific point in time and provide an easy way to share applications across various environments. Images can be built from scratch or downloaded from various repositories on the internet. Additionally, many software vendors now provide container images alongside traditional installation packages like Windows .exe and Linux rpm/deb.

Container - A container is the run-time environment constructed from an image when it is executed or run in a container runtime. Containers allow the user to attach various resources such as network and volumes in order to move and store data. Containers are similar to virtual machines in that they can be attached to when a process is running and have arbitrary commands executed that affect the running instance. However, unlike virtual machines, containers are more lightweight and portable allowing for easy sharing and collaboration as they run identically in all environments.

Tags - Tags are a way of organizing similar image files together for ease of use. You might see several versions of an image represented using various tags. For example, we might be building a new container to serve web pages using our favorite web server: nginx. If we search for the nginx container on the Docker Hub image repository, we see that many options, or tags, are available for the official nginx container.

The most common tags you will see are typically :latest and :number, where number refers to the most recent few versions of the software releases. In this example we can see several tags refer to the same image: 1.21.1, mainline, 1, 1.21, and latest all reference the same image, while the 1.20.1, stable, and 1.20 tags all reference a common but different image.

In this case we likely want the nginx image with either the latest or 1.21.1 tag, represented as nginx:latest and nginx:1.21.1 respectively.

Container Security - Containers enable fast developer velocity and ease compatibility through great portability, but the speed and ease of use come at some cost. In particular, it is important that anyone following container-driven development practices has a well-established plan for approaching container and environment security (see Best Practices).

Container Registries - Container registries act as large repositories of images, containers, tools and surrounding software to enable easy use of pre-made container software bundles. Container registries can be public or private, and several can be used together for projects. Docker Hub is one of the largest public repositories available, and you will find many official software images present on it. You need a user account to avoid being rate limited by Docker Hub. A private container registry based on Harbor is also available to use on Anvil.

Docker Hub - Docker Hub is one of the largest container image registries that exists and is well known and widely used in the container community. It serves as an official location for many popular software container images. Container image repositories serve as a way to facilitate sharing of pre-made container images that are “ready for use.” Always pay attention to who is publishing particular images and verify that you are utilizing containers built only from reliable sources.

Harbor - Harbor is an open source registry for Kubernetes artifacts. It provides private image storage and enforces container security through vulnerability scanning, and it provides RBAC (role-based access control) to assist with user permissions. Harbor is a registry similar to Docker Hub; however, it gives users the ability to create private repositories. You can use it to store your private images, to keep copies of common resources like base OS images from Docker Hub, and to ensure your containers are reasonably secure from common known vulnerabilities.

Container Runtime Concepts

Docker Desktop - Docker Desktop is an application for your Mac / Windows machine that will allow you to build and run containers on your local computer. Docker Desktop serves as a container environment and enables much of the functionality of containers on whatever machine you are currently using. This allows for great flexibility: you can develop and test containers directly on your laptop and deploy them with little to no modification.

Volumes - Volumes provide us with a method to create persistent data that is generated and consumed by one or more containers. For Docker this might be a folder on your laptop, while on a large Kubernetes cluster this might be many SSD drives and spinning disk trays. Any data that is collected and manipulated by a container that we want to keep between container restarts needs to be written to a volume in order to remain available for later use.

Container Orchestration Concepts

Container Orchestration - Container orchestration broadly means the automation of much of the lifecycle management procedures surrounding the usage of containers. Specifically, it refers to the software being used to manage those procedures. As containers have seen mass adoption and development in the last decade, they are now being used to power massive environments, and several options have emerged to manage the lifecycle of containers. One of the industry-leading options is Kubernetes, a software project descended from an internal container orchestrator at Google and open sourced in 2014.

Kubernetes (K8s) - Kubernetes (often abbreviated as "K8s") is a platform providing container orchestration functionality. It was open sourced by Google around a decade ago and has seen widespread adoption and development in the ensuing years. K8s is the software that provides the core functionality of the Anvil Composable Subsystem by managing the complete lifecycle of containers. Additionally it provides the following functions: service discovery and load balancing, storage orchestration, secret and configuration management. The Kubernetes cluster can be accessed via the Rancher UI or the kubectl command line tool.

Rancher - Rancher is “a complete software stack for teams adopting containers,” as described by its website. It can be thought of as a wrapper around Kubernetes, providing an additional set of tools to help operate the K8s cluster efficiently and additional functionality that does not exist in Kubernetes itself. Two examples of the added functionality are the Rancher UI, which provides an easy-to-use graphical interface in a browser, and Rancher projects, a concept that allows for multi-tenancy within the cluster. Users can interact directly with Rancher using either the Rancher UI or Rancher CLI to deploy and manage workloads on the Anvil Composable Subsystem.

Rancher UI - The Rancher UI is a web based graphical interface to use the Anvil Composable Subsystem from anywhere.

Rancher CLI - The Rancher CLI provides a convenient text based toolkit to interact with the cluster. The binary can be downloaded from the link on the right hand side of the footer in the Rancher UI. After you download the Rancher CLI, you need to provide the two pieces of configuration it requires:

- Your Rancher Server URL, which is used to connect to Rancher Server.

- An API Bearer Token, which is used to authenticate with Rancher. See Creating an API Key.

After setting up the Rancher CLI you can issue rancher --help to view the full range of options available.

Kubectl - Kubectl is a text based tool for working with the underlying Anvil Kubernetes cluster. In order to take advantage of kubectl you will either need to set up a Kubeconfig File or use the built in kubectl shell in the Rancher UI. You can learn more about kubectl and how to download the kubectl file here.

Storage - Storage is utilized to provide persistent data storage between container deployments. Ceph provides access to block, object, and shared file storage. File storage provides an interface to access data in a file and folder hierarchy similar to NTFS or NFS. Block storage is a flexible type of storage that allows for snapshotting and is good for database workloads and generic container storage. Object storage is also provided by Ceph and features a REST-based bucket interface with S3 and Swift compatibility.

Access

How to Access the Anvil Composable Subsystem via the Rancher UI, the command line (kubectl) and the Anvil Harbor registry.

Rancher

Logging in to Rancher

The Anvil Composable Subsystem Rancher interface can be accessed via a web browser at https://composable.anvil.rcac.purdue.edu. Log in by choosing "log in with shibboleth" and using your ACCESS credentials at the ACCESS login screen.

kubectl

Configuring local kubectl access with Kubeconfig file

kubectl can be installed and run on your local machine to perform various actions against the Kubernetes cluster using the API server.

These tools authenticate to Kubernetes using information stored in a kubeconfig file.

To authenticate to the Anvil cluster you can download a kubeconfig file that is generated by Rancher as well as the kubectl tool binary.

- From anywhere in the Rancher UI navigate to the cluster dashboard by hovering over the box to the right of the cattle and selecting anvil under the "Clusters" banner.

- Click on kubeconfig file at the top right

  - Click copy to clipboard

- Create a hidden folder called .kube in your home directory

- Copy the contents of your kubeconfig file from step 2 to a file called config in the newly created .kube directory

- You can now issue commands using kubectl against the Anvil Rancher cluster

  - To look at the current config settings we just set, use

    kubectl config view

  - Now let’s list the available resource types present in the API with

    kubectl api-resources

- To see more options of kubectl, review the cheatsheet found on Kubernetes' kubectl cheatsheet.
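The steps that create the .kube folder and its config file can also be done from a terminal. The following is a minimal sketch, assuming the kubeconfig text copied from Rancher was first pasted into a local file; the function name install_kubeconfig and the example file path are hypothetical and not part of any Anvil or Rancher tooling.

```shell
# Hypothetical helper: install a kubeconfig file saved from the Rancher UI.
# $1 = path to the saved kubeconfig text (assumed to exist)
# $2 = target directory, defaults to ~/.kube
install_kubeconfig() {
  src="$1"
  kube_dir="${2:-$HOME/.kube}"
  if [ ! -f "$src" ]; then
    echo "kubeconfig not found: $src" >&2
    return 1
  fi
  mkdir -p "$kube_dir"             # create the hidden .kube folder if missing
  cp "$src" "$kube_dir/config"     # note: overwrites any existing config file
  chmod 600 "$kube_dir/config"     # the file holds a token, keep it private
}

# Example usage (the path is an assumption):
#   install_kubeconfig "$HOME/Downloads/anvil.yaml"
#   kubectl config view
```

Because the copy overwrites an existing ~/.kube/config, back that file up first if you already use kubectl with other clusters.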

Accessing kubectl in the Rancher web UI

You can launch a kubectl command window from within the Rancher UI by selecting the Launch kubectl button to the left of the Kubeconfig File button. This will deploy a container in the cluster with kubectl installed and give you an interactive window to use the command from.

Harbor

Logging into the Anvil Registry UI with ACCESS credentials

Harbor is configured to use ACCESS as an OpenID Connect (OIDC) authentication provider. This allows you to log in using your ACCESS credentials.

To log in to the Harbor registry using your ACCESS credentials:

- Navigate to https://registry.anvil.rcac.purdue.edu in your favorite web browser.

- Click the Login via OIDC Provider button.

  - This redirects you to the ACCESS account for authentication.

- If this is the first time that you are logging in to Harbor with OIDC, specify a user name for Harbor to associate with your OIDC username.

  - This is the user name by which you are identified in Harbor, which is used when adding you to projects, assigning roles, and so on. If the username is already taken, you are prompted to choose another one.

- After the OIDC provider has authenticated you, you are redirected back to the Anvil Harbor Registry.

Workloads

Deploy a Workload

- Using the top right dropdown select the Project or Namespace you wish to deploy to.

- Using the far left menu navigate to Workload

- Click Create at the top right

- Select the appropriate Deployment Type for your use case

- Select Namespace if not already done from step 1

- Set a unique Name for your deployment, e.g. "myapp"

- Set Container Image. Ensure you're using the Anvil registry for personal images or the Anvil registry docker-hub cache when pulling public docker-hub specific images, e.g.:

  registry.anvil.rcac.purdue.edu/my-registry/myimage:tag or registry.anvil.rcac.purdue.edu/docker-hub-cache/library/image:tag

- Click Create

Wait a couple minutes while your application is deployed. The “does not have minimum availability” message is expected, but waiting more than 5 minutes for your workload to deploy typically indicates a problem. You can check for errors by clicking your workload name (e.g. "myapp"), then the lower button on the right side of your deployed pod and selecting View Logs.

If all goes well, you will see an Active status for your deployment

You can then interact with your deployed container on the command line by clicking the button with three dots on the right side of the screen and choosing "Execute Shell"

Services

Service

A Service is an abstract way to expose an application running on Pods as a network service. This allows the networking and application to be logically decoupled so state changes in either the application itself or the network connecting application components do not need to be tracked individually by all portions of an application.

Service resources

In Kubernetes, a Service is an abstraction which defines a logical set of Pods and a policy by which to access them (sometimes this pattern is called a micro-service). The set of Pods targeted by a Service is usually determined by a Pod selector, but can also be defined other ways.

Publishing Services (ServiceTypes)

For some parts of your application you may want to expose a Service onto an external IP address, one that is outside of your cluster.

Kubernetes ServiceTypes allow you to specify what kind of Service you want. The default is ClusterIP.

- ClusterIP: Exposes the Service on a cluster-internal IP. Choosing this value makes the Service only reachable from within the cluster. This is the default ServiceType.

- NodePort: Exposes the Service on each Node’s IP at a static port (the NodePort). A ClusterIP Service, to which the NodePort Service routes, is automatically created. You’ll be able to contact the NodePort Service, from outside the cluster, by requesting <NodeIP>:<NodePort>.

- LoadBalancer: Exposes the Service externally using Anvil's load balancer. NodePort and ClusterIP Services, to which the external load balancer routes, are automatically created. Users can create LoadBalancer resources using a private IP pool on Purdue's network with the annotation metallb.universe.tf/address-pool: anvil-private-pool. Public LoadBalancer IPs are available via request only.

  You can see an example of exposing a workload using the LoadBalancer type on Anvil in the examples section.

- ExternalName: Maps the Service to the contents of the externalName field (e.g. foo.bar.example.com), by returning a CNAME record with its value. No proxying of any kind is set up.

Ingress

An Ingress is an API object that manages external access to the services in a cluster, typically HTTP/HTTPS. An Ingress is not a ServiceType, but rather brings external traffic into the cluster and then passes it to an Ingress Controller to be routed to the correct location. Ingress may provide load balancing, SSL termination and name-based virtual hosting. Traffic routing is controlled by rules defined on the Ingress resource.

You can see an example of a service being exposed with an Ingress on Anvil in the examples section.

Ingress Controller

Anvil provides the nginx ingress controller configured to facilitate SSL termination and automatic DNS name generation under the anvilcloud.rcac.purdue.edu subdomain.

Kubernetes provides additional information about Ingress Controllers in the official documentation.

Registry

Accessing the Anvil Composable Registry

The Anvil registry uses Harbor, an open source registry, to manage containers and artifacts. It can be accessed at the following URL: https://registry.anvil.rcac.purdue.edu

Using the Anvil Registry Docker Hub Cache

It’s advised that you use the Docker Hub cache within Anvil to pull images for deployments. There’s a limit to how many images Docker Hub will allow to be pulled in a 24 hour period, which Anvil can reach depending on user activity. This means that if you’re trying to deploy a workload, or have a currently deployed workload that needs to be migrated, restarted, or upgraded, there’s a chance it will fail.

To bypass this, use the Anvil cache url registry.anvil.rcac.purdue.edu/docker-hub-cache/ in your image names

For example, to pull a notebook from jupyterhub’s Docker Hub repo, e.g. jupyter/tensorflow-notebook:latest, the image name for pulling it from the Anvil cache would look like this: registry.anvil.rcac.purdue.edu/docker-hub-cache/jupyter/tensorflow-notebook:latest
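The rewrite from a Docker Hub image name to its Anvil cache equivalent can be sketched as a small shell function. This is an illustration only: the function name anvil_cache_image is hypothetical, and the handling of official images (adding the library/ prefix) is inferred from the docker-hub-cache/library/... examples used elsewhere in this guide.

```shell
# Hypothetical helper: print the Anvil Docker Hub cache name for an image.
anvil_cache_image() {
  image="$1"
  case "$image" in
    */*) ;;                         # already namespaced, e.g. jupyter/tensorflow-notebook
    *)   image="library/$image" ;;  # official images live under library/
  esac
  printf '%s\n' "registry.anvil.rcac.purdue.edu/docker-hub-cache/$image"
}

# Example usage:
#   docker pull "$(anvil_cache_image jupyter/tensorflow-notebook:latest)"
```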

Using OIDC from the Docker or Helm CLI

After you have authenticated via OIDC and logged into the Harbor interface for the first time, you can use the Docker or Helm CLI to access Harbor.

The Docker and Helm CLIs cannot handle redirection for OIDC, so Harbor provides a CLI secret for use when logging in from Docker or Helm.

- Log in to Harbor with an OIDC user account.

- Click your username at the top of the screen and select User Profile.

- Click the clipboard icon to copy the CLI secret associated with your account.

- Optionally click the … icon in your user profile to display buttons for automatically generating or manually creating a new CLI secret.

  - A user can only have one CLI secret, so when a new secret is generated or created, the old one becomes invalid.

- If you generated a new CLI secret, click the clipboard icon to copy it.

You can now use your CLI secret as the password when logging in to Harbor from the Docker or Helm CLI.

docker login -u <username> -p <cli secret> registry.anvil.rcac.purdue.edu

Creating a Harbor Registry

- Using a browser, log in to https://registry.anvil.rcac.purdue.edu with your ACCESS account username and password

- From the main page click create project; this will act as your registry

- Fill in a name and select whether you want the project to be public or private

- Click ok to create and finalize

Tagging and Pushing Images to Your Harbor Registry

- Tag your image

  $ docker tag my-image:tag registry.anvil.rcac.purdue.edu/project-registry/my-image:tag

- Log in to the Anvil registry via command line

  $ docker login registry.anvil.rcac.purdue.edu

- Push your image to your project registry

  $ docker push registry.anvil.rcac.purdue.edu/project-registry/my-image:tag

Creating a Robot Account for a Private Registry

A robot account and token can be used to authenticate to your registry in place of having to supply or store your private credentials on multi-tenant cloud environments like Rancher/Anvil.

- Navigate to your project after logging into https://registry.anvil.rcac.purdue.edu

- Navigate to the Robot Accounts tab and click New Robot Account

- Fill out the form

  - Name your robot account

  - Select account expiration if any, select never to make permanent

  - Customize what permissions you wish the account to have

  - Click Add

- Copy your information

  - Your robot’s account name will be something longer than what you specified. Since this is a multi-tenant registry, Harbor does this to avoid unrelated project owners creating a similarly named robot account

  - Export your token as JSON or copy it to a clipboard

Adding Your Private Registry to Rancher

- From your project navigate to Resources > secrets

- Navigate to the Registry Credentials tab and click Add Registry

- Fill out the form

  - Give a name to the Registry secret (this is an arbitrary name)

  - Select whether or not the registry will be available to all or a single namespace

  - Select address as “custom” and provide “registry.anvil.rcac.purdue.edu”

  - Enter your robot account’s long name, e.g. robot$my-registry+robot, as the Username

  - Enter your robot account’s token as the password

  - Click Save

External Harbor Documentation

- You can learn more about the Harbor project on the official website: https://goharbor.io/

Storage

Storage is utilized to provide persistent data storage between container deployments and comes in a few options on Anvil.

The Ceph software is used to provide block, filesystem and object storage on the Anvil composable cluster. File storage provides an interface to access data in a file and folder hierarchy similar to NTFS or NFS. Block storage is a flexible type of storage that allows for snapshotting and is good for database workloads and generic container storage. Object storage is ideal for large unstructured data and features a REST based API providing an S3 compatible endpoint that can be utilized by the preexisting ecosystem of S3 client tools.

Storage Classes

Anvil Composable provides two different storage classes based on the access characteristics that workloads need.

- anvil-block - Block storage based on SSDs that can be accessed by a single node (Single-Node Read/Write).

- anvil-filesystem - File storage based on SSDs that can be accessed by multiple nodes (Many-Node Read/Write or Many-Node Read-Only)

Provisioning Block and Filesystem Storage for use in deployments

Block and Filesystem storage can both be provisioned in a similar way.

- While deploying a Workload, select the Volumes drop down and click Add Volume…

- Select “Add a new persistent volume (claim)”

- Set a unique volume name, e.g. “<username>-volume”

- Select a Storage Class. The default storage class is Ceph for this Kubernetes cluster

- Request an amount of storage in Gigabytes

- Click Define

- Provide a Mount Point for the persistent volume, e.g. /data
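For users who prefer kubectl, roughly the same claim can be expressed as a PersistentVolumeClaim manifest. The sketch below is an approximation of the UI steps, not an official Anvil template: the claim name my-volume, the file name pvc.yaml, and the 5Gi size are placeholders, while anvil-block and its single-node access mode come from the storage classes described in this guide.

```shell
# Write a PersistentVolumeClaim manifest to pvc.yaml (file name is arbitrary).
cat > pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-volume                # placeholder claim name
spec:
  accessModes:
    - ReadWriteOnce              # Single-Node Read/Write, matches anvil-block
  storageClassName: anvil-block  # use anvil-filesystem with ReadWriteMany for shared access
  resources:
    requests:
      storage: 5Gi               # placeholder size
EOF

# Apply it to your namespace (replace the placeholder before running):
#   kubectl -n <namespace> apply -f pvc.yaml
```

The mount point is not part of the claim itself; it is set on the workload that uses the claim.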

Backup Strategies

Developers using the Anvil Composable Platform should have a backup strategy in place to ensure that their data is safe and can be recovered in case of a disaster. Below is a list of methods that can be used to back up data on Persistent Volume Claims.

Copying Files to and from a Container

The kubectl cp command can be used to copy files into or out of a running container.

# get pod id you want to copy to/from

kubectl -n <namespace> get pods

# copy a file from local filesystem to remote pod

kubectl cp /tmp/myfile <namespace>/<pod>:/tmp/myfile

# copy a file from remote pod to local filesystem

kubectl cp <namespace>/<pod>:/tmp/myfile /tmp/myfile

This method requires the tar executable to be present in your container, which is usually the case with Linux images. More info can be found in the kubectl docs.

Copying Directories from a Container

The kubectl cp command can also be used to recursively copy entire directories to local storage or places like Data Depot.

# get pod id you want to copy to/from

kubectl -n <namespace> get pods

# copy a directory from remote pod to local filesystem

kubectl cp <namespace>/<pod>:/pvcdirectory /localstorage

Backing up a Database from a Container

The kubectl exec command can be used to create a backup or dump of a database and save it to a local directory. For instance, to back up a MySQL database with kubectl, run the following commands from a local workstation or cluster frontend.

# get pod id of your database pod

kubectl -n <namespace> get pods

# run mysqldump in the remote pod and redirect the output to local storage

kubectl -n <namespace> exec <pod> -- mysqldump --user=<username> --password=<password> my_database > my_database_dump.sql

Backups using common Linux tools

If your container has the OpenSSH client or rsync packages installed, you can use the kubectl exec command to copy or synchronize data to another storage location.

# get pod id of your pod

kubectl -n <namespace> get pods

# run scp to transfer data from the pod to a remote storage location

kubectl -n <namespace> exec <pod> -- scp -r /data username@anvil.rcac.purdue.edu:~/backup

Automating Backups

Kubernetes CronJob resources can be used with the commands above to create an automated backup solution. For more information, refer to the Kubernetes documentation.
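As one possible shape for such a job, the sketch below writes a CronJob manifest that runs mysqldump nightly and stores the dump on a persistent volume. Everything specific in it is an assumption made for illustration: the names db-backup, my-database, db-credentials, and backup-volume, the 02:00 schedule, and the choice of the mysql:8.0 image from the Docker Hub cache. It also assumes the database is reachable inside the cluster through a Service and that the Secret and PersistentVolumeClaim already exist.

```shell
# Write a CronJob manifest to backup-cronjob.yaml (all names are placeholders).
cat > backup-cronjob.yaml <<'EOF'
apiVersion: batch/v1
kind: CronJob
metadata:
  name: db-backup
spec:
  schedule: "0 2 * * *"              # every day at 02:00
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
            - name: backup
              image: registry.anvil.rcac.purdue.edu/docker-hub-cache/library/mysql:8.0
              command: ["/bin/sh", "-c"]
              args:
                - >-
                  mysqldump --host="$DB_HOST" --user="$DB_USER"
                  --password="$DB_PASSWORD" my_database
                  > /backup/my_database_dump.sql
              env:
                - name: DB_HOST
                  value: my-database   # assumed Service name of the database
                - name: DB_USER
                  valueFrom:
                    secretKeyRef: {name: db-credentials, key: username}
                - name: DB_PASSWORD
                  valueFrom:
                    secretKeyRef: {name: db-credentials, key: password}
              volumeMounts:
                - name: backup
                  mountPath: /backup
          volumes:
            - name: backup
              persistentVolumeClaim:
                claimName: backup-volume   # assumed existing claim
EOF

# Apply it to your namespace (replace the placeholder before running):
#   kubectl -n <namespace> apply -f backup-cronjob.yaml
```

Each run overwrites the previous dump file; add a date to the file name if you want to keep several generations.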

Accessing object storage externally from local machine using Cyberduck

Cyberduck is a free server and cloud storage browser that can be used to access the public S3 endpoint provided by Anvil.

- Launch Cyberduck

- Click + Open Connection at the top of the UI.

- Select S3 from the dropdown menu

- Fill in Server, Access Key ID and Secret Access Key fields

- Click Connect

- You can now right click to bring up a menu of actions that can be performed against the storage endpoint

Further information about using Cyberduck can be found on the Cyberduck documentation site.

Examples

Examples of deploying a database with persistent storage and making it available on the network and deploying a webserver using a self-assigned URL.

Database

Deploy a postgis Database

- Select your Project from the top right dropdown

- Using the far left menu, select Workload

- Click Create at the top right

- Select the appropriate Deployment Type for your use case; here we will select and use Deployment

- Fill out the form

  - Select Namespace

  - Give arbitrary Name

  - Set Container Image to the postgis Docker image: registry.anvil.rcac.purdue.edu/docker-hub-cache/postgis/postgis:latest

  - Set the postgres user password

    - Select the Add Variable button under the Environment Variables section

    - Fill in the fields Variable Name and Value so that we have a variable POSTGRES_PASSWORD = <some password>

  - Create a persistent volume for your database

    - Select the Storage tab from within the current form on the left hand side

    - Select Add Volume and choose Create Persistent Volume Claim

    - Give arbitrary Name

    - Select Single-Node Read/Write

    - Select appropriate Storage Class from the dropdown and give Capacity in GiB, e.g. 5

    - Provide the default postgres data directory as a Mount Point for the persistent volume: /var/lib/postgresql/data

    - Set Sub Path to data

  - Set resource CPU limitations

    - Select Resources tab on the left within the current form

    - Under the CPU Reservation box fill in 2000. This ensures that Kubernetes will only schedule your workload to nodes that have that resource amount available, guaranteeing your application has 2 CPU cores to utilize

    - Under the CPU Limit box also fill in 2000. This ensures that your workload cannot exceed or utilize more than 2 CPU cores. This helps resource quota management on the project level.

  - Setup Pod Label

    - Select Labels & Annotations on the left side of the current form

    - Select Add Label under the Pod Labels section

    - Give arbitrary unique key and value you can remember later when creating Services and other resources, e.g. Key: my-db Value: postgis

- Select Create to launch the postgis database

Wait a couple minutes while your persistent volume is created and the postgis container is deployed. The “does not have minimum availability” message is expected, but waiting more than 5 minutes for your workload to deploy typically indicates a problem. You can check for errors by clicking your workload name (e.g. "mydb"), then the lower button on the right side of your deployed pod and selecting View Logs. If all goes well, you will see an Active status for your deployment.
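The form fields used for the postgis workload map fairly directly onto a Kubernetes Deployment manifest. The sketch below is an approximation of what such a manifest could look like, not the exact object Rancher generates. The names mydb, mydb-volume, and postgis-credentials are placeholders, the claim is assumed to exist already, and the password is read from a Secret here instead of being typed in as a plain variable.

```shell
# Write a Deployment manifest to postgis-deployment.yaml (names are placeholders).
cat > postgis-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mydb
spec:
  replicas: 1
  selector:
    matchLabels:
      my-db: postgis                 # must match the pod label below
  template:
    metadata:
      labels:
        my-db: postgis               # pod label used later by the Service selector
    spec:
      containers:
        - name: postgis
          image: registry.anvil.rcac.purdue.edu/docker-hub-cache/postgis/postgis:latest
          env:
            - name: POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: postgis-credentials   # assumed existing Secret
                  key: password
          resources:
            requests:
              cpu: 2000m             # CPU Reservation: 2 cores
            limits:
              cpu: 2000m             # CPU Limit: 2 cores
          volumeMounts:
            - name: data
              mountPath: /var/lib/postgresql/data
              subPath: data
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: mydb-volume   # assumed existing claim
EOF

# Create the Secret and apply the manifest (replace placeholders before running):
#   kubectl -n <namespace> create secret generic postgis-credentials --from-literal=password=<some password>
#   kubectl -n <namespace> apply -f postgis-deployment.yaml
```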

Expose the Database to external clients

Use a LoadBalancer service to automatically assign an IP address on a private Purdue network and open the postgres port (5432). A DNS name will automatically be configured for your service as <servicename>.<namespace>.anvilcloud.rcac.purdue.edu.

- Using the far left menu, navigate to Service Discovery > Services

- Select Create at the top right

- Select Load Balancer

- Fill out the form

  - Ensure to select the namespace where you deployed the postgis database

  - Give a Name to your Service. Remember that your final DNS name when the service creates will be in the format of <servicename>.<namespace>.anvilcloud.rcac.purdue.edu

  - Fill in Listening Port and Target Port with the postgis default port 5432

  - Select the Selectors tab within the current form

    - Fill in Key and Value with the label values you created during the Setup Pod Label step from earlier, e.g. Key: my-db Value: postgis

    - IMPORTANT: The yellow bar will turn green if your key-value pair matches the pod label you set during the "Setup Pod Label" deployment step above. If you don't see a green bar with a matching Pod, your LoadBalancer will not work.

  - Select the Labels & Annotations tab within the current form

    - Select Add Annotation

    - To deploy to a Purdue Private Address Range fill in Key: metallb.universe.tf/address-pool Value: anvil-private-pool

    - To deploy to a Public Address Range fill in Key: metallb.universe.tf/address-pool Value: anvil-public-pool

Kubernetes will now automatically assign you an IP address from the Anvil Cloud private IP pool. You can check the IP address by hovering over the “5432/tcp” link on the Service Discovery page or by viewing your service via kubectl on a terminal.

$ kubectl -n <namespace> get services

Verify your DNS record was created:

$ host <servicename>.<namespace>.anvilcloud.rcac.purdue.edu
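The same LoadBalancer Service can be described in a manifest and applied with kubectl. This is a sketch under assumptions: the Service name my-postgis and the file name are placeholders, and the selector reuses the example pod label my-db: postgis from the deployment steps. The annotation and port come from the steps above.

```shell
# Write a LoadBalancer Service manifest to postgis-service.yaml.
cat > postgis-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: my-postgis                   # placeholder, becomes <servicename> in the DNS name
  annotations:
    metallb.universe.tf/address-pool: anvil-private-pool
spec:
  type: LoadBalancer
  selector:
    my-db: postgis                   # must match the pod label on the database pod
  ports:
    - name: postgres
      protocol: TCP
      port: 5432                     # Listening Port
      targetPort: 5432               # Target Port
EOF

# Apply it and check the assigned address (replace the placeholder before running):
#   kubectl -n <namespace> apply -f postgis-service.yaml
#   kubectl -n <namespace> get services
```

If the selector does not match any pod label, the Service is still created but no traffic reaches the database, which corresponds to the yellow bar warning in the Rancher form.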

Web Server

Nginx Deployment

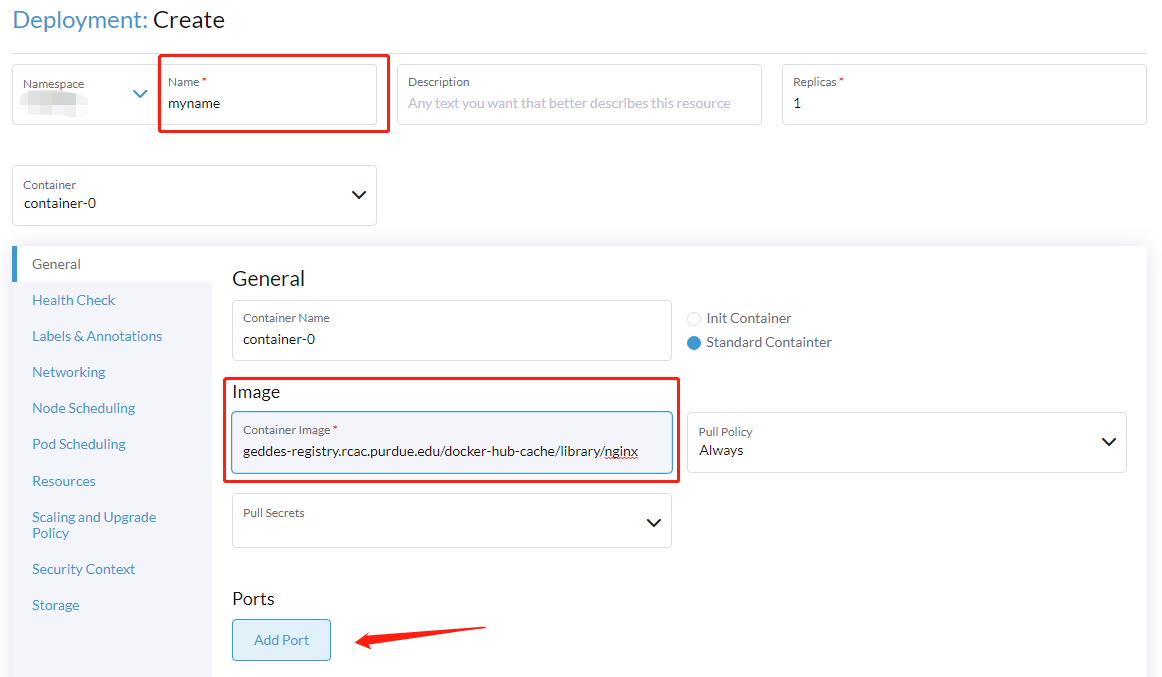

- Select your Project from the top right dropdown

- Using the far left menu, select Workload

- Click Create at the top right

- Select the appropriate Deployment Type for your use case, here we will select and use Deployment

- Fill out the form

- Select Namespace

- Give arbitrary Name

- Set Container Image to the nginx Docker image: registry.anvil.rcac.purdue.edu/docker-hub-cache/library/nginx

- Create a Cluster IP service to point our externally accessible ingress to later

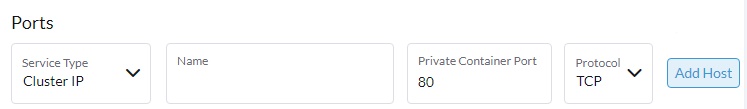

- Click Add Port

- Click Service Type and with the dropdown select Cluster IP

- In the Private Container Port box type 80

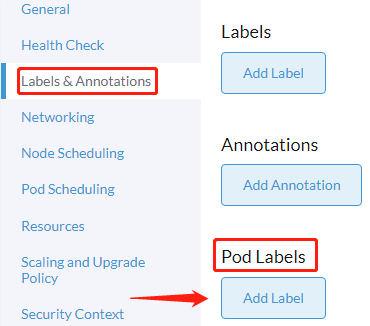

- Setup Pod Label

- Select Labels & Annotations on the left side of the current form

- Select Add Label under the Pod Labels section

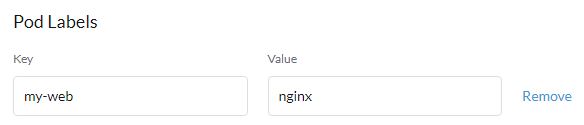

- Give arbitrary unique key and value you can remember later when creating Services and other resources, e.g. Key: my-web Value: nginx

- Click Create

Wait a couple minutes while your application is deployed. The “does not have minimum availability” message is expected, but waiting more than 5 minutes for your workload to deploy typically indicates a problem. You can check for errors by clicking your workload name (e.g. "mywebserver"), then using the vertical ellipsis on the right hand side of your deployed pod and selecting View Logs.

If all goes well, you will see an Active status for your deployment.

Expose the web server to external clients via an Ingress

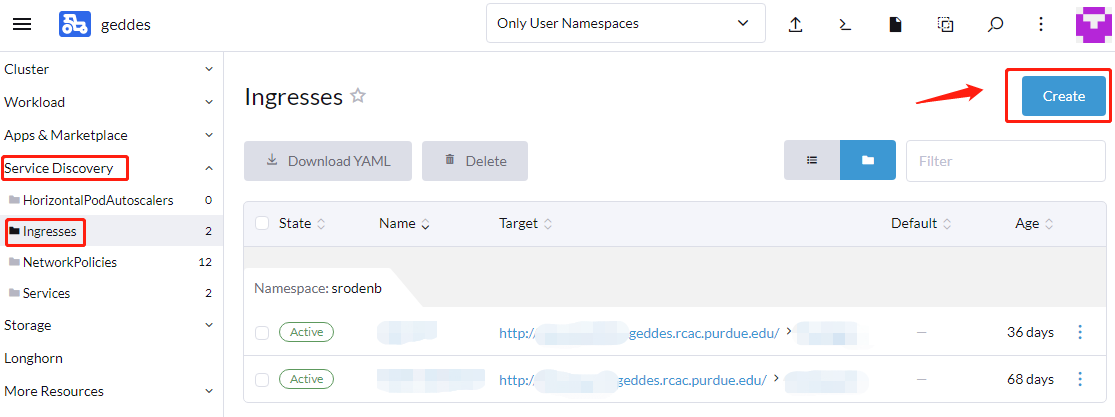

- Using the far left menu, navigate to Service Discovery > Ingresses and select Create at the top right

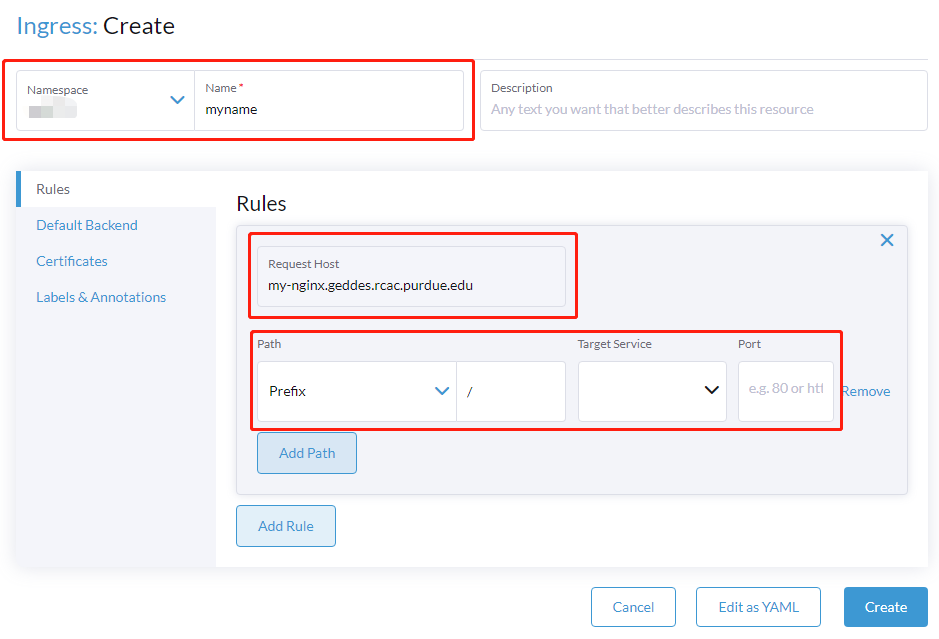

- Fill out the form

- Ensure to select the namespace where you deployed the nginx

- Give an arbitrary Name

- Under Request Host give the url you want for your web application, e.g. my-nginx.anvilcloud.rcac.purdue.edu

- Fill in the value Path > Prefix as /

- Use the Target Service and Port dropdowns to select the service you created during the Nginx Deployment section

- Click Create
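The Ingress created through the form corresponds roughly to the manifest below. Treat it as a sketch: the Ingress name and the backend Service name my-nginx are placeholders for whatever you named the Cluster IP service during the Nginx Deployment section, and no ingress class is set on the assumption that the cluster's default nginx ingress controller picks it up.

```shell
# Write an Ingress manifest to nginx-ingress.yaml (names are placeholders).
cat > nginx-ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-nginx
spec:
  rules:
    - host: my-nginx.anvilcloud.rcac.purdue.edu   # Request Host
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-nginx       # assumed name of the Cluster IP service
                port:
                  number: 80         # container port exposed by the service
EOF

# Apply it to your namespace (replace the placeholder before running):
#   kubectl -n <namespace> apply -f nginx-ingress.yaml
#   kubectl -n <namespace> get ingress
```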