Gautschi supercomputer in full production and available for faculty use

Gautschi, the most powerful supercomputer to date at Purdue University’s Rosen Center for Advanced Computing (RCAC), has completed its early user program and entered full production. It is now available for faculty use.

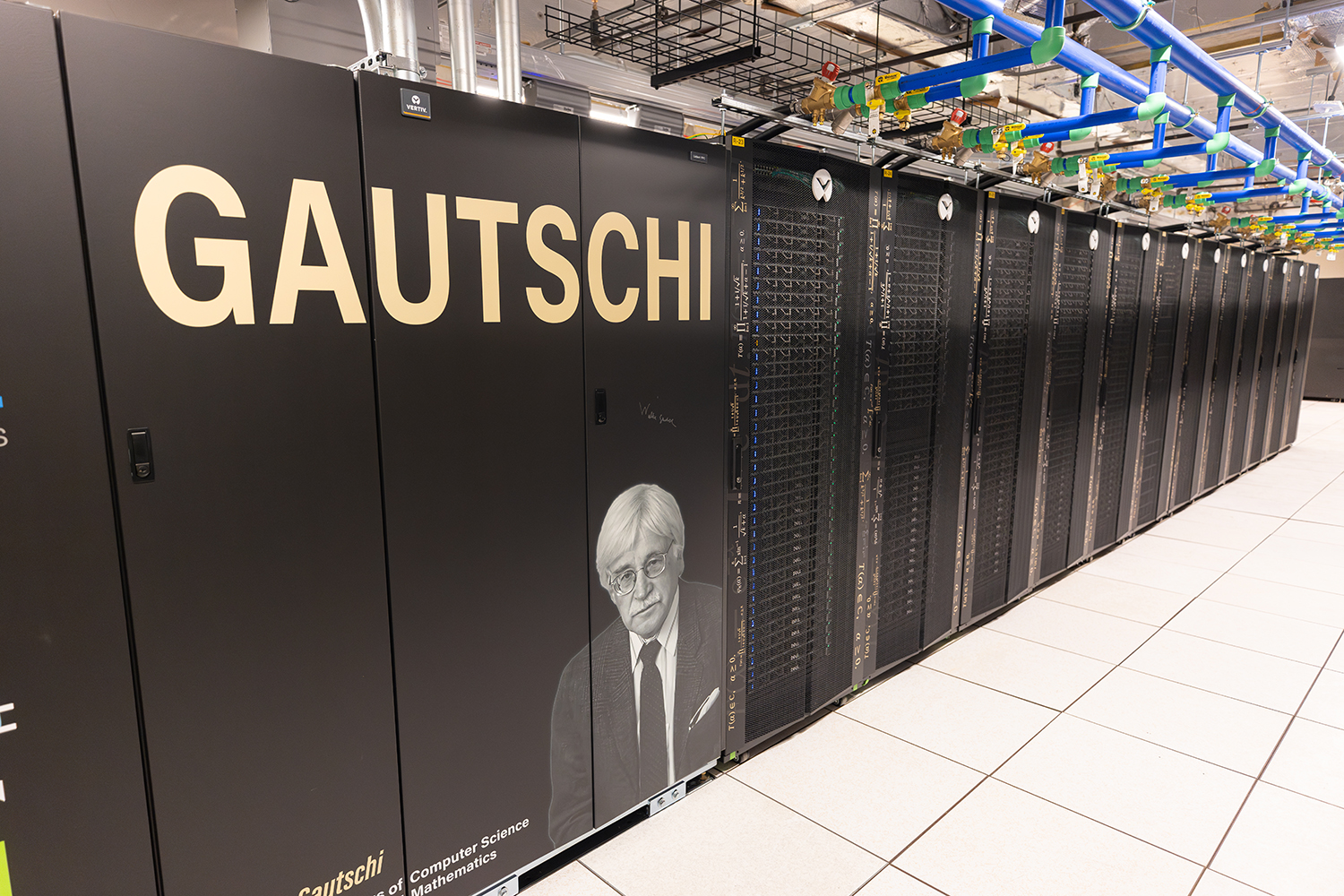

The Gautschi cluster features cutting-edge technology for traditional physics-based modeling and simulation, while also offering Nvidia's top-tier solutions for artificial intelligence (AI) applications. With support from Purdue Computes and the Institute for Physical AI (IPAI), the cluster was built in late 2024 through a partnership with Dell, AMD, and Nvidia. It is named in honor of Walter Gautschi, Professor Emeritus of Computer Science and Professor Emeritus of Mathematics at Purdue University.

Gautschi consists of two partitions: a traditional high-performance computing (HPC) partition built around next-generation CPUs, and a dedicated AI partition containing Nvidia H100 SXM GPUs.

In total, the Gautschi cluster consists of:

- 338 Dell compute nodes with two 96-core AMD Epyc “Genoa” processors (192 cores per node) and 384 GB of memory,

- Six large-memory nodes, each with 1.5 TB of memory,

- Six AI inference nodes, each with two Nvidia L40S GPUs, and

- 20 Dell PowerEdge XE9680 compute nodes, each with two 56-core Intel Xeon Platinum 8480+ CPUs and eight Nvidia H100 SXM GPUs with 80 GB of HBM3 memory each. Each GPU has its own non-blocking 400 Gbps NDR link.
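The per-node figures in the list above can be rolled up into cluster-wide totals, which can help when comparing Gautschi with other systems. The short Python sketch below does only that arithmetic, using the counts stated in this article. It is an illustration, not an official RCAC inventory: CPU core counts for the large-memory and AI inference nodes are not listed here, so they are left out, and the constant and function names are hypothetical.

```python
# Cluster-wide totals derived from the node list in this article.
# Only counts stated in the article are used; CPU core counts for the
# large-memory and AI inference nodes are not given, so they are excluded.

CPU_NODES = 338
CORES_PER_CPU_NODE = 2 * 96     # two 96-core AMD Epyc "Genoa" processors

L40S_NODES = 6
L40S_PER_NODE = 2               # two Nvidia L40S GPUs per inference node

H100_NODES = 20
H100_PER_NODE = 8               # eight Nvidia H100 SXM GPUs per XE9680 node
HBM3_PER_H100_GB = 80           # 80 GB of HBM3 per H100
CORES_PER_H100_NODE = 2 * 56    # two 56-core Intel Xeon Platinum 8480+ CPUs


def cluster_totals() -> dict[str, int]:
    """Return aggregate counts for the node types listed in the article."""
    return {
        "cpu_node_cores": CPU_NODES * CORES_PER_CPU_NODE,
        "h100_node_cores": H100_NODES * CORES_PER_H100_NODE,
        "l40s_gpus": L40S_NODES * L40S_PER_NODE,
        "h100_gpus": H100_NODES * H100_PER_NODE,
        "h100_hbm3_gb": H100_NODES * H100_PER_NODE * HBM3_PER_H100_GB,
    }


if __name__ == "__main__":
    # Print each total with thousands separators.
    for name, value in cluster_totals().items():
        print(f"{name}: {value:,}")
```

Running it reports 64,896 cores across the 338 CPU nodes, 160 H100 GPUs with 12,800 GB of combined HBM3 memory, and 12 L40S GPUs.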

Gautschi debuted at number 157 on the Top500 list of the world’s most powerful supercomputers and at number 43 on the Green500 list of the most energy-efficient supercomputers. It is currently one of the top seven academic systems in the US and the fifth most powerful campus-serving cluster.

So far, 29 research groups have been onboarded and successfully run workflows on Gautschi through an Early User Program (EUP). Their work ranges from modeling protein structures for drug discovery and building a generative AI model that simulates the effects of drug molecules on cultured cells, to running density functional theory (DFT) simulations of solid-state crystal materials, building language models and mid-scale computer vision models, and developing large tomographic reconstruction software. The EUP gave these users early access to the cluster to help identify and fix potential issues with the new system before it entered production. This broad cross-section of Purdue researchers used over 130,000 GPU hours (nearly 15 years of GPU time) during the 11-week EUP, a volume of work that would have cost millions of dollars if purchased from commercial providers. Here are some of the things users said about Gautschi in a post-EUP survey:

- “The GPUs were, of course, exceptional, especially for generative AI applications.”

- “Having GPU and CPU partitions in the same cluster was extremely helpful for some workloads. No more shuttling data back and forth between Negishi and Gilbreth!”

- “For GPU, it was about 2-3 times faster for the problems I was running.”

- “All flash Lustre was really great performance. Also, it allows us to place 5x more file count in the scratch, which enables me to do some calculations that cannot be done in different clusters.”

- “Having access to more GPUs at once definitely was helpful to improve my code.”

- “Since we are using state-of-the-art techniques like flow-matching generative AI, our model sizes are usually of the order of 1-10 billion parameters. Access to Gautschi helped make this project possible since the H100 chip is one of the only systems that allows us to train models at this scale.”

Gautschi is now part of Purdue’s community cluster program, which is celebrating its 21st anniversary and serves more than 261 active partners from 66 departments across all three Purdue campuses. Last year, 73% of Purdue’s sponsored research awards went to faculty who use HPC. As with all Purdue Community Cluster systems, RCAC offers world-class support for Gautschi users, including new staff experts with real-world experience in AI applications who can help users optimize their workflows and run their jobs quickly and efficiently on the new system.

“Having a major resource like Gautschi will allow Purdue researchers to quickly accelerate their Physical AI workflows, and serve as a way to quickly differentiate Purdue as we recruit faculty for Purdue Computes and identify Dream Hires. This system is the fastest that we’ve ever operated here at Purdue, and is an extremely cost-effective way to deliver AI compute to researchers across the University,” says Preston Smith, Executive Director of the Rosen Center for Advanced Computing and Director of Computing Infrastructure for IPAI.

Researchers at Purdue can purchase capacity in Gautschi through the RCAC cluster orders website. To promote the use of AI workflows and help Purdue lead in AI research, IPAI is currently offering a matching program for Gautschi-AI: for each GPU purchased (up to 8 GPUs), IPAI will match a one-year allocation of one GPU. To take advantage of the matching program, researchers only need to submit a written description of their project, and of how it relates to physical AI, along with their purchase order.

Because AI usage patterns require unique features and optimizations, Gautschi-AI will use an updated resource accounting model that differs from the one used for previous community clusters and for Gautschi’s CPU partition. For details on the Gautschi-AI partition and its allocation model, please read our Gautschi-AI Overview article.

To learn more about the Gautschi cluster or other RCAC resources, please contact rcac-help@purdue.edu.

Written by: Jonathan Poole, poole43@purdue.edu