Anvil enters year three of production

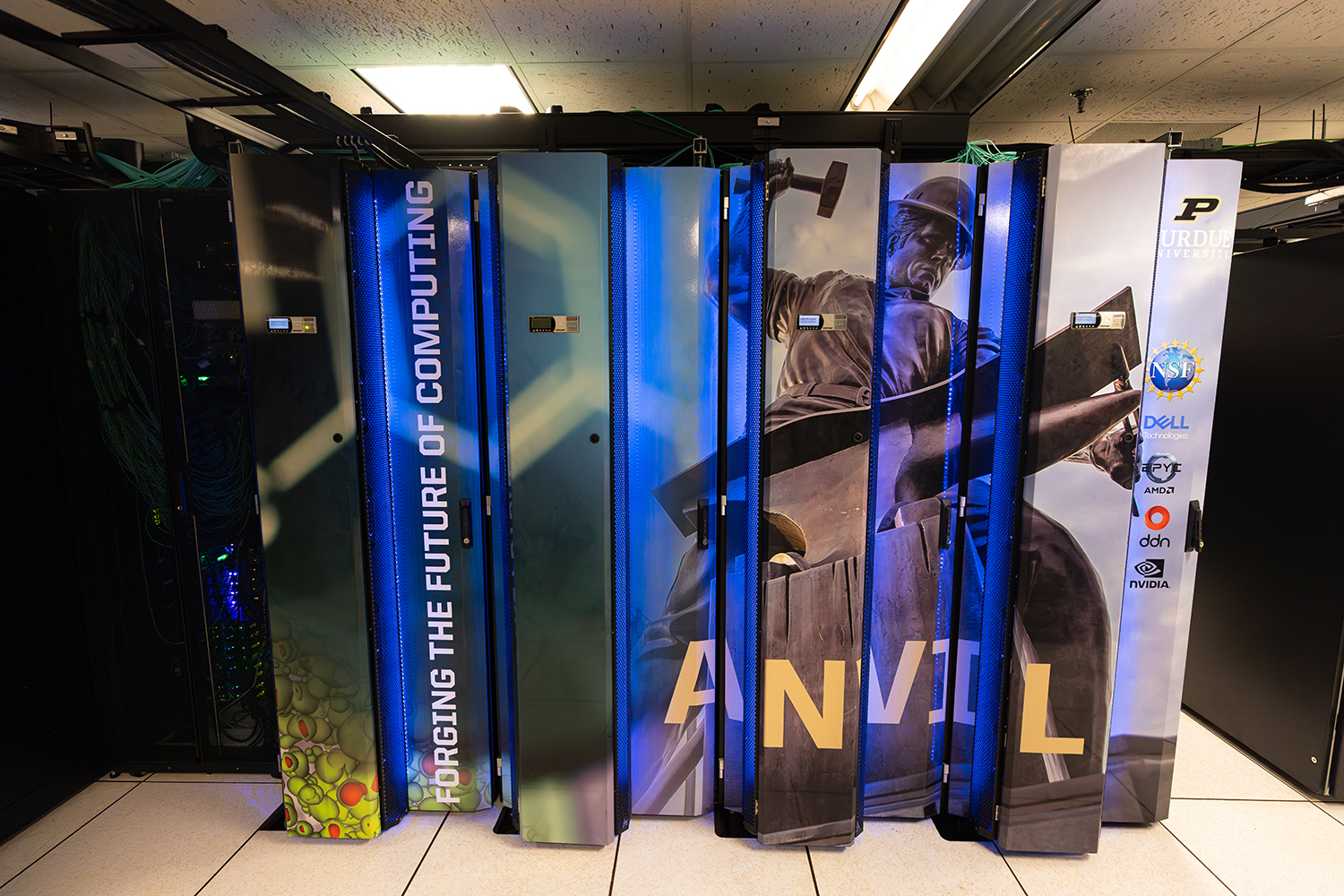

Anvil, Purdue’s most powerful supercomputer, continues its pursuit of excellence in HPC as it enters its third year of operations. Funded by a $10 million acquisition grant from the National Science Foundation (NSF), Anvil began early user operations in November 2021 and entered production operations in February 2022. After two years online, Anvil has more than proven its value. The supercomputer has been used to help nearly 6,000 researchers push the boundaries of scientific exploration in a variety of fields, including artificial intelligence, astrophysics, climatology, and nanotechnology.

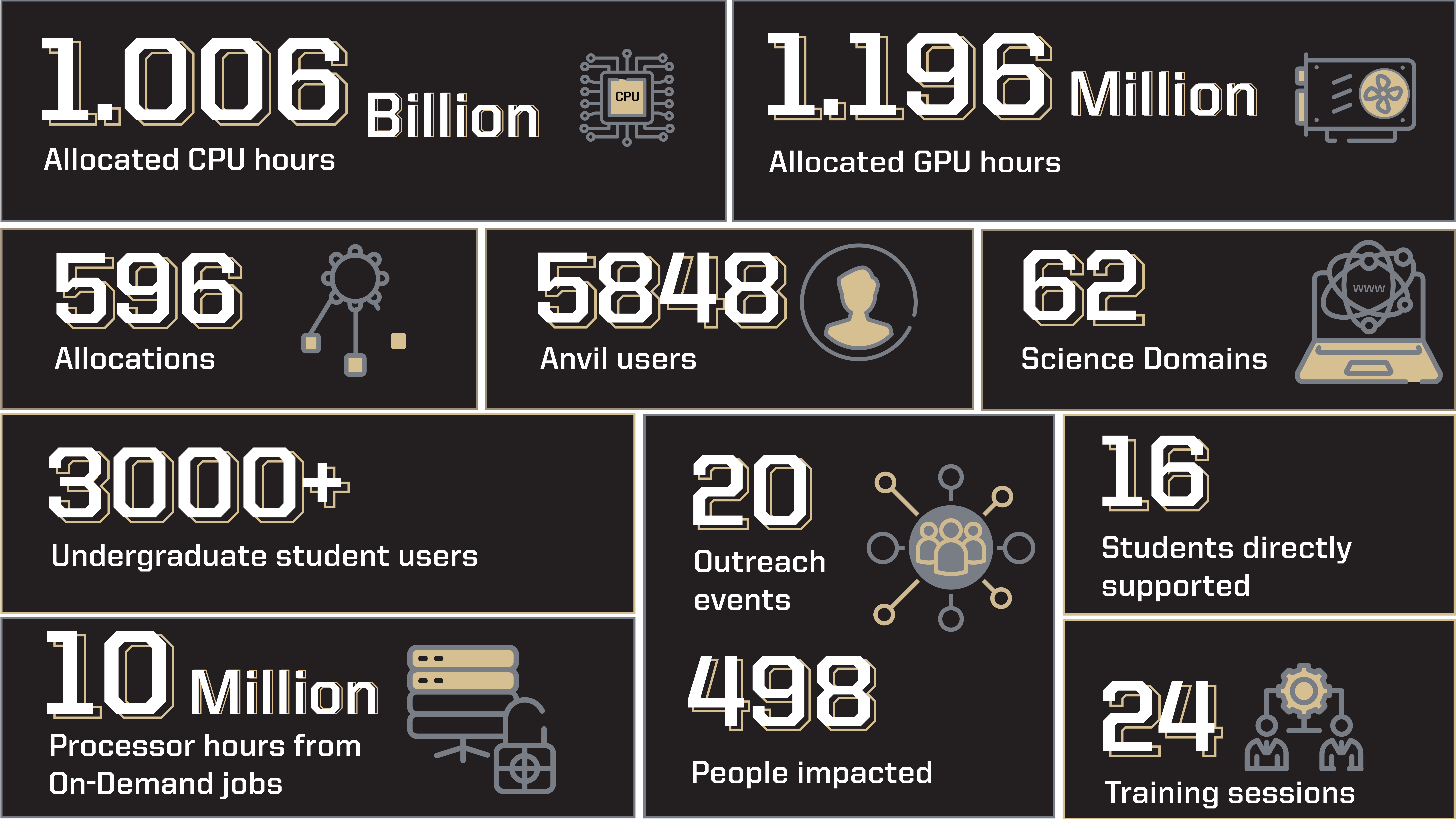

Anvil at a Glance—Two Years of Operations

Over the past two years, Anvil has had a significant impact on scientific research and student development. With nearly 6,000 total users thus far, of which over 3,000 were undergraduate students, Anvil is not only helping meet the growing need for high-performance computing (HPC) within the realms of research, but also actively assisting with the development of cyberinfrastructure professionals of tomorrow. Overall, Anvil has allowed users access to 1.006 billion CPU hours and 1.196 million GPU hours, supporting research across 62 diverse scientific domains. In 2023 alone, 72 research publications cited Anvil usage. Aside from the supercomputer itself, the Anvil team has been hard at work promoting the benefits of HPC and ensuring the nation has a workforce trained in the use, operation, and support of advanced cyberinfrastructure. In the two years of operations, the Anvil team has participated in 20 outreach events and conducted 24 training sessions, with more on the horizon. These training sessions are designed to deliver working knowledge of HPC systems and teach users how to get the most out of their research time on Anvil. The team also provided hands-on training to students through initiatives such as the Anvil Summer REU program, which allowed the students to gain much-needed knowledge and experience in the field of HPC.

Anvil Tech Specs

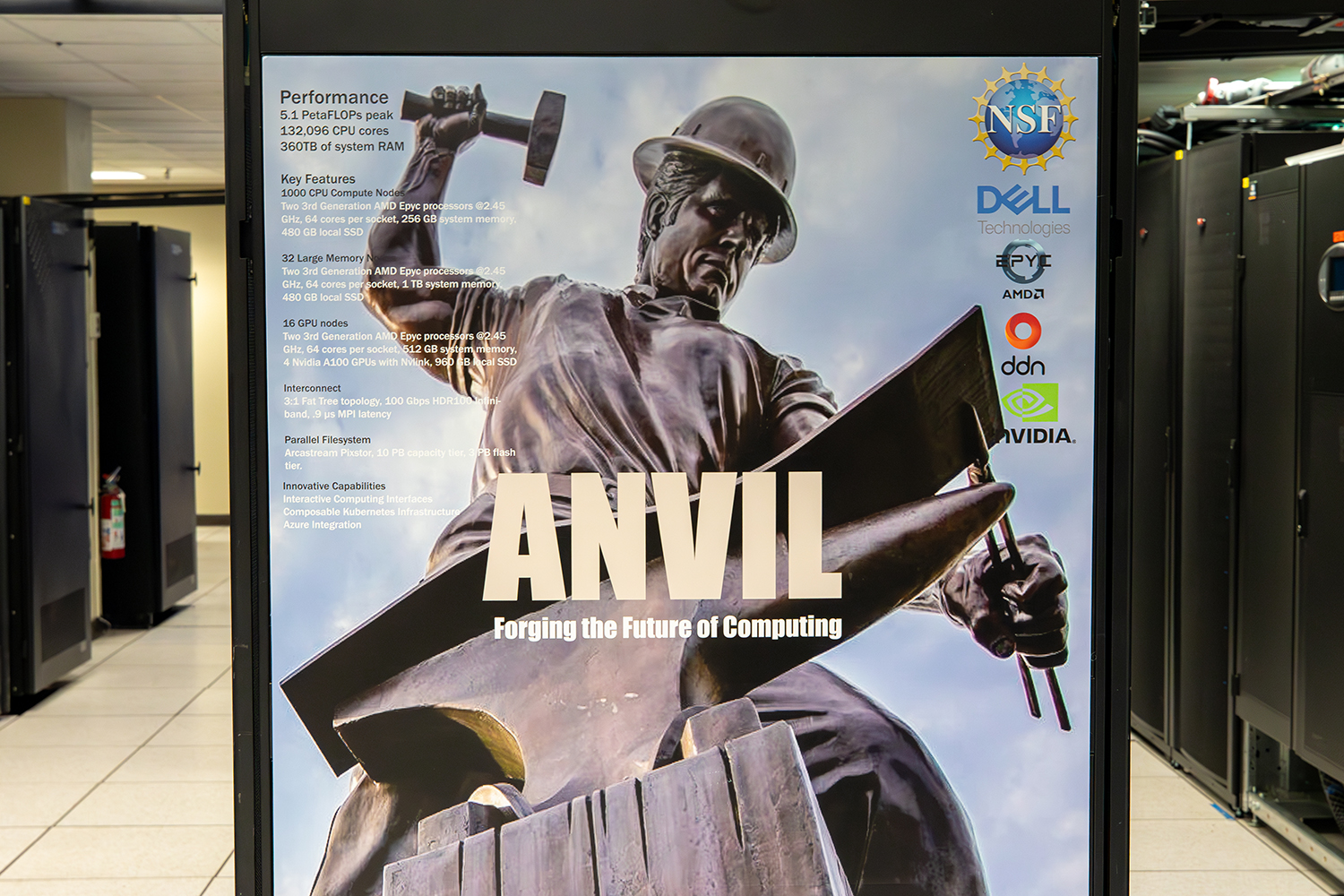

Anvil is a supercomputer deployed by Purdue’s Rosen Center for Advanced Computing (RCAC) in partnership with Dell and AMD. The system was created to significantly increase the computing capacity available to users of the NSF’s Advanced Cyberinfrastructure Coordination Ecosystem: Services and Support (ACCESS), a program that serves tens of thousands of researchers across the United States. The system consists of 1,000 Dell compute nodes, each with two 64-core third-generation AMD EPYC processors, and will deliver over 1 billion CPU core hours to ACCESS every year. Anvil's nodes are interconnected with 100 Gbps NVIDIA Quantum HDR InfiniBand. The supercomputer ecosystem also includes 32 large memory nodes, with 1 TB of RAM per node, and 16 GPU nodes, each with four NVIDIA A100 Tensor Core GPUs. These GPU nodes provide an additional 1.5 PF of single-precision performance to support machine learning and artificial intelligence applications.
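As a rough sanity check on the "over 1 billion CPU core hours" figure, the arithmetic can be sketched from the node counts above. The node, socket, and core counts come from the specifications in this article; the 365-day year, the availability parameter, and the function names are illustrative assumptions rather than published operational figures.

```python
# Back-of-the-envelope estimate of yearly CPU core-hour capacity.
# Hardware counts are taken from the article; the 365-day year and the
# availability fraction are simplifying assumptions for illustration.

NODES = 1_000            # Dell compute nodes (from the article)
SOCKETS_PER_NODE = 2     # two processors per node (from the article)
CORES_PER_SOCKET = 64    # 64-core AMD EPYC (from the article)
HOURS_PER_YEAR = 365 * 24  # assumption: non-leap year


def total_cores() -> int:
    """Total CPU cores across the standard compute nodes."""
    return NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET


def yearly_core_hours(availability: float = 1.0) -> float:
    """Core hours per year at a given availability fraction (hypothetical knob)."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return total_cores() * HOURS_PER_YEAR * availability


if __name__ == "__main__":
    print(f"Total cores: {total_cores():,}")
    print(f"Core hours per year at full availability: {yearly_core_hours():,.0f}")
    print(f"Core hours per year at 90% availability: {yearly_core_hours(0.9):,.0f}")
```

Under these assumptions the standard compute nodes alone supply roughly 1.12 billion core hours per year, so the quoted figure still holds if about a tenth of the capacity is unavailable because of maintenance or other reservations.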

In 2023, GPU capabilities were added to the Anvil Composable Subsystem of the Anvil supercomputer. The Anvil Composable Subsystem hosts eight composable nodes, each with 64 cores and 512 GB of RAM, and a composable GPU node, with 4 NVIDIA A100 80GB GPUs. The Anvil Composable Subsystem is a Kubernetes-based private cloud managed with Rancher that provides a platform for creating composable infrastructure on demand. This cloud-style flexibility allows researchers to self-deploy and manage persistent services to complement HPC workflows and run container-based data analysis tools and applications. The composable subsystem is intended for non-traditional workloads, such as science gateways and databases, and the addition of the composable GPU node supports tasks such as AI inference services and model hosting.

Enabling science through advanced computing

Because of its configuration, Anvil is able to reach a peak processing speed of 5.3 petaFLOPS, making it one of the most powerful academic supercomputers in the US. When it debuted, the Anvil supercomputer was listed as number 143 on the Top500 list of the world’s most powerful supercomputers. This processing speed and power has allowed researchers to save hours on computations and simulations, enabling innovative scientific research and discovery. The highlights given below are but a few of the hundreds of use cases stemming from Anvil:

1) Scientists from the collaborative Simulating eXtreme Spacetimes (SXS) research group used (and still use) Purdue’s Anvil supercomputer to explore the physics of cataclysmic space-time events and help shed light on the nature of one of the Universe’s fundamental forces: gravity. Vijay Varma, Assistant Professor in the Department of Mathematics at the University of Massachusetts, Dartmouth, and Nils Deppe, Assistant Professor of Physics at Cornell University, are computational astrophysicists. The two use Anvil to help develop, test, and run state-of-the-art numerical relativity codes that make high-accuracy gravitational wave predictions for Laser Interferometer Gravitational-Wave Observatory (LIGO) signals. The Anvil supercomputer is indispensable to them for their research, and the group is pleased not only with the performance of the computer, but also with the support they received from the Anvil staff:

“The team at Anvil has been absolutely terrific in working with us to resolve any issues we had when we started,” says Deppe. “At this point, everything is just smooth sailing. So a huge shoutout to the team for working really hard and being flexible with our needs. We run huge simulations that take several months, so we need to keep the data around for a long time. Most supercomputers have a frequent purge policy, so I’m extremely grateful for the team figuring out how to let us store data for longer.”

Varma added, “Indeed, we definitely couldn't have done so many simulations without the special allocation of scratch storage for the group; that was extremely important.”

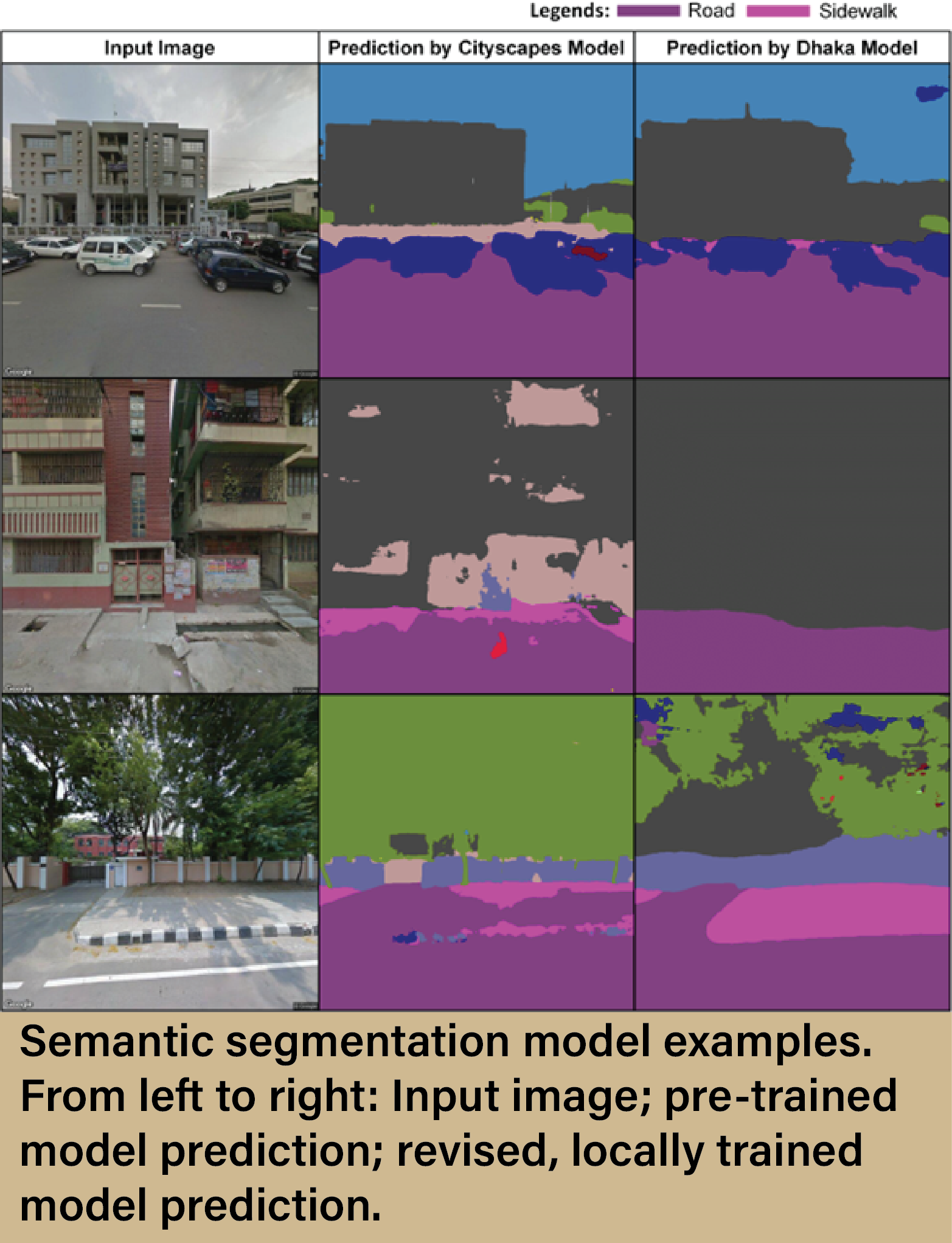

2) Omar Faruqe Hamim is a Graduate Research Assistant at the Lyles School of Civil Engineering at Purdue University. He recently used Anvil to conduct research involving advanced computer vision techniques and machine learning algorithms, a subset of artificial intelligence (AI). Hamim worked alongside Surendra Reddy Kancharla, a postdoctoral researcher, and under the supervision of Professor Satish Ukkusuri. Together, the team successfully created a deep learning architecture-based semantic segmentation model for mapping roads and sidewalks in developing countries from remotely sensed, open-source data. They were then able to automate the process of inventorying sidewalks by creating sidewalk maps of the study area on a neighborhood scale, based on the output from the developed model. All of this helps develop pedestrian infrastructure by enabling a relatively quick and efficient method of creating and maintaining a sidewalk inventory that local authorities can use for project planning. Hamim and his team were thrilled with what they could accomplish on Anvil:

“So we used HR net plus OCR,” says Hamim, “that's the semantic segmentation model. It was state-of-the-art at the time of doing the research, but the problem with that model was that it had millions of parameters and we needed a large number of GPUs to use it. So Professor Ukkusuri contacted Rajesh [Kalyanam, Senior Research Scientist at RCAC] and he helped us get set up on Anvil. We trained our model there and were able to reduce our computational time while also increasing the pixel size of our images. In our case, the images were 640 x 640 pixels, but on our lab computer we were only able to use a random pixel count of 128 x 128. On Anvil we were able to capture the whole image, which increased the performance of our model. So without the GPUs on Anvil, I would not have been able to train the model completely.”

3) Dr. Marco Giometto is an Assistant Professor in the Civil Engineering and Engineering Mechanics Department at Columbia University, as well as the head of Columbia University’s Environmental Flow Physics Lab (EFPL). He and the rest of the EFPL group use supercomputers to conduct research that focuses on the study of flow phenomena involving turbulence, heat transfer, and evaporation, specifically within the atmospheric boundary layer (the bottom layer of the atmosphere, which is in contact with the surface of the earth). Though the specifics of each research project can be quite different, the group’s overall goal is to advance the understanding of, and the ability to model, turbulent transport in the atmosphere. The group knew they would need a very powerful supercomputer to model turbulence, as it is a multiscale phenomenon, so they turned to Anvil. The group initially requested 7 million core hours on Anvil, which they quickly burned through. They have since extended their allocation request and received approval for nearly 35 million CPU core hours, all on the Anvil system. According to Dr. Giometto, two unexpected benefits of using Anvil were the queue times and the maximum allowable simulation time. On other systems the group has used in the past, the maximum time allowed per simulation was two days, after which they would be placed back in a long queue to wait until their next turn. This was not the case with Anvil, as the maximum simulation time was four days, and the queue was very short.

“With Anvil,” says Dr. Giometto, “one nice thing was that it was not oversubscribed, and it had a four-day simulation duration. And these, I think, enabled us to close on a project that we wouldn’t have been able to close on otherwise.”

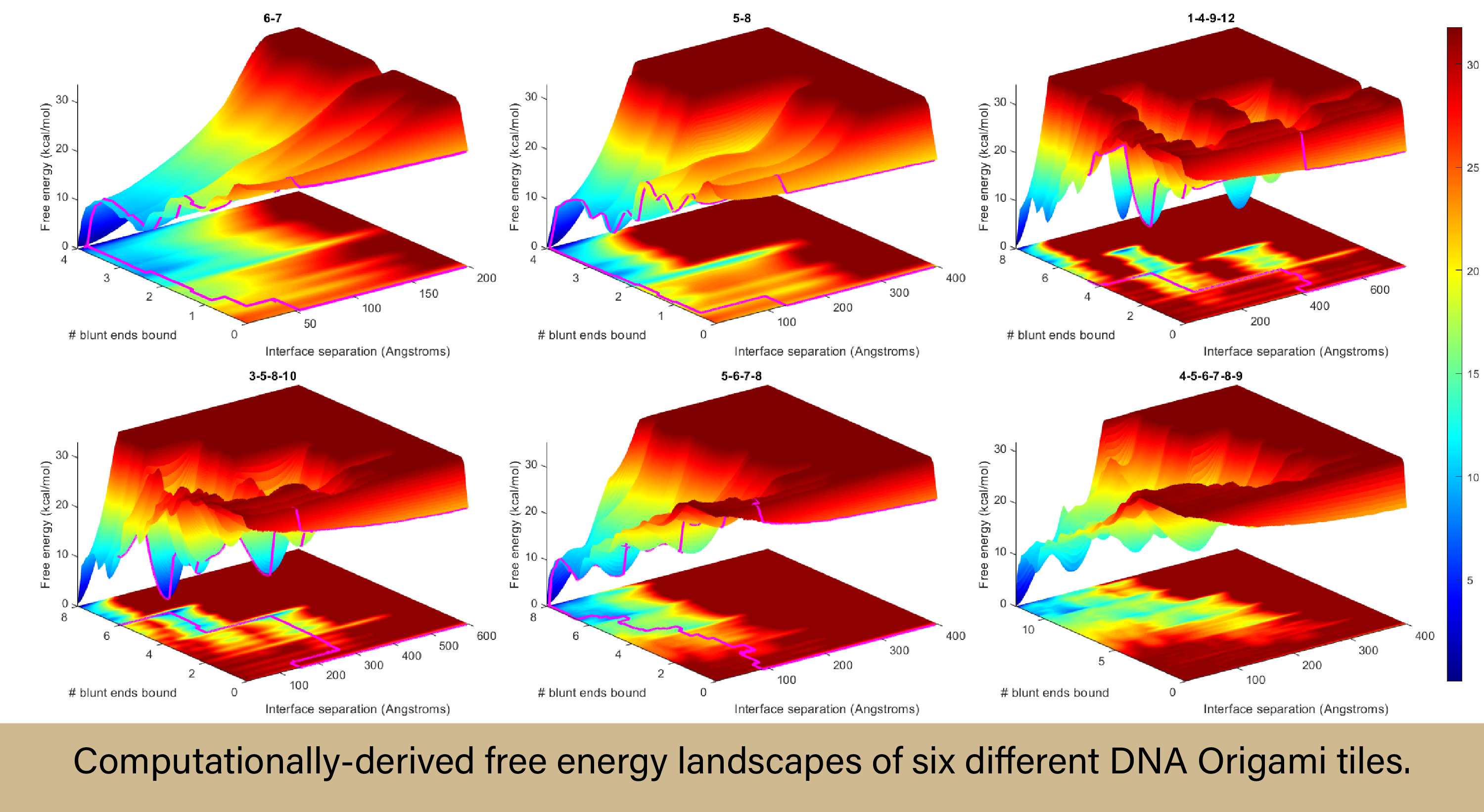

4) Pranav Sharma is an Associate Researcher in the Biological and Soft Materials Modeling Lab at Duke University. He is using Anvil to develop and run a coarse-grained molecular model of nanoparticles in order to reverse-engineer a phenomenon called self-assembly, wherein nanoparticles spontaneously organize into higher-level structures due to intermolecular forces. Specifically, Sharma focused his project on DNA Origami, an emerging nanotechnology that takes advantage of the phenomenon of self-assembly as well as the properties inherent in DNA structures, with the ultimate goal of deepening the understanding of these structures and eventually leading to the development of a new nanoscale manufacturing paradigm. Sharma is no stranger to HPC, and he found that while Anvil had tremendous power and speed to offer for his simulations, the more exciting aspect of Purdue’s supercomputer was its reliability:

“Anvil is great,” says Sharma. “I’ve used many supercomputers, and Anvil has performed more reliably than many of these other systems. Often, I would leave a calculation running and come back to find something had gone wrong, only to run it the exact same way and have it work perfectly, but on Anvil, this was never an issue. I was able to just leave it running, and that’s the best thing you can ask for.”

Training and Education Impact

Aside from enabling groundbreaking research across multiple fields of science, Anvil is being used as a tool to develop the future workforce in computing. From professional training and workshops to hands-on learning experiences for students, Anvil is helping to forge the next generation of researchers and cyberinfrastructure professionals.

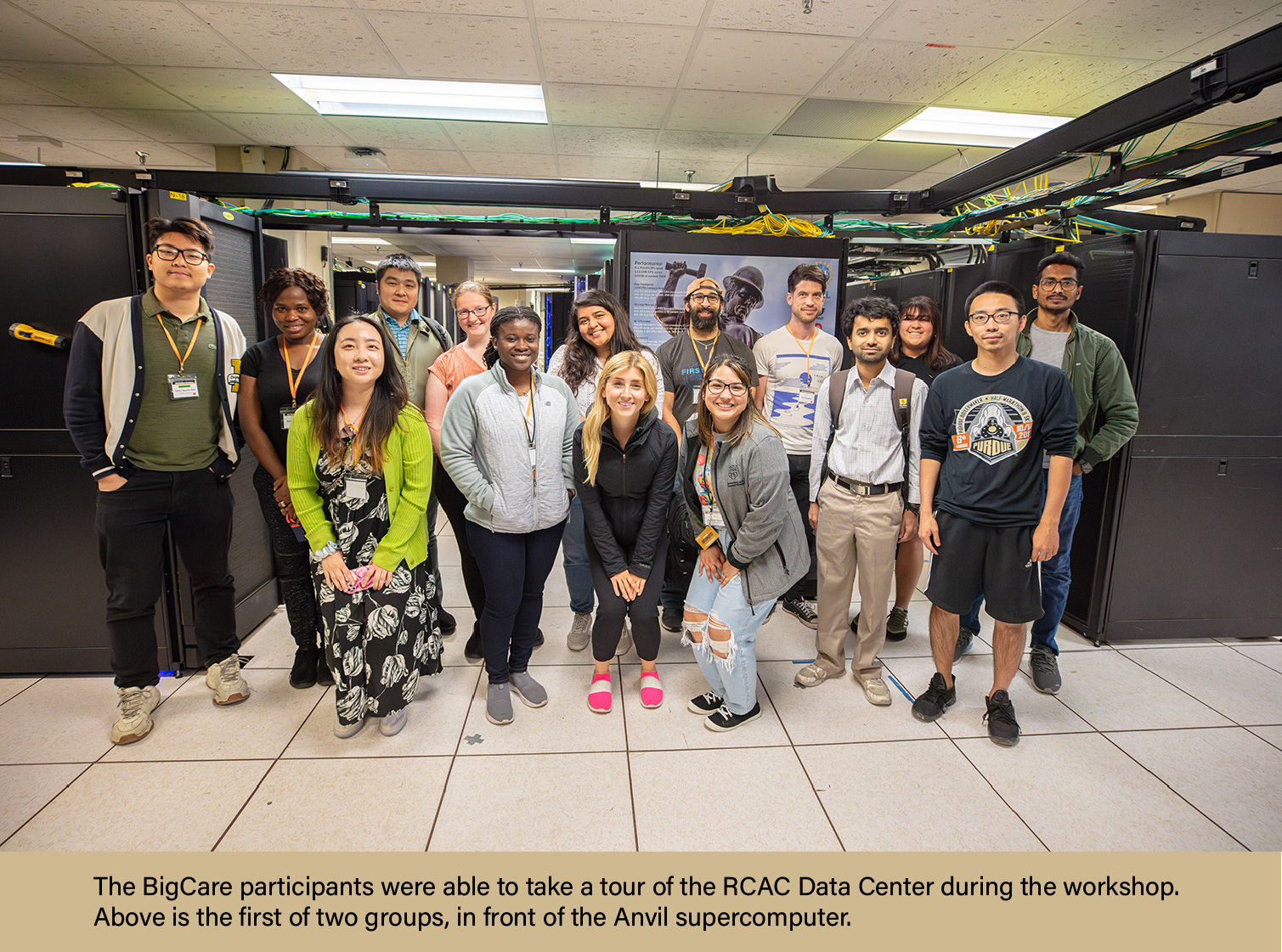

One major training and educational impact made by Anvil involved supporting the 2023 NIH BigCare Workshop. The BigCare Workshop was a National Cancer Institute-funded biomedical data analysis workshop designed to train cancer researchers on how to visualize, analyze, manage, and integrate large amounts of data in cancer studies. Dr. Min Zhang, the principal investigator on the NCI-funded project, taught the workshop participants the skills needed to analyze their research data, while Anvil provided an HPC environment that had a very low barrier to entry, ensuring that non-HPC professionals could quickly and easily complete their research without having to become experts in computing.

“We don’t want to turn everyone into a computer scientist, because they have more important things to do,” says Zhang. “Previously, we had to teach users the front end, back end, command lines, all this kind of stuff, and now it’s all gone! Life is so much easier. And everyone was so excited that they wanted to take Anvil to their own institution. Some of them would even say, ‘We do have HPC, we do have cloud, but it’s not as user-friendly as Anvil.’”

Anvil was so helpful for the workshop that Zhang intends to renew it as the resource for supporting BigCare for the foreseeable future. “It definitely made our workshop run much better and much smoother, and attracts many more researchers. So I think we will carry on this collaboration for not only next year, but the next five years. The cancer researchers will benefit a lot from the Anvil computing environment.”

Anvil also supported roughly 1,800 students in a national data science experiential learning and research program known as The Data Mine. The goal of The Data Mine is to foster faculty-industry partnerships and enable the adoption of cutting-edge technologies. The course introduces students of all levels and majors to concepts of data science and coding skills for research. The students then partner with outside companies for a year to work on real-world analytic problems. Anvil provided 1 million CPU hours for the program and allowed the students to manage extensive research datasets, thanks to the supercomputer’s large capacity.

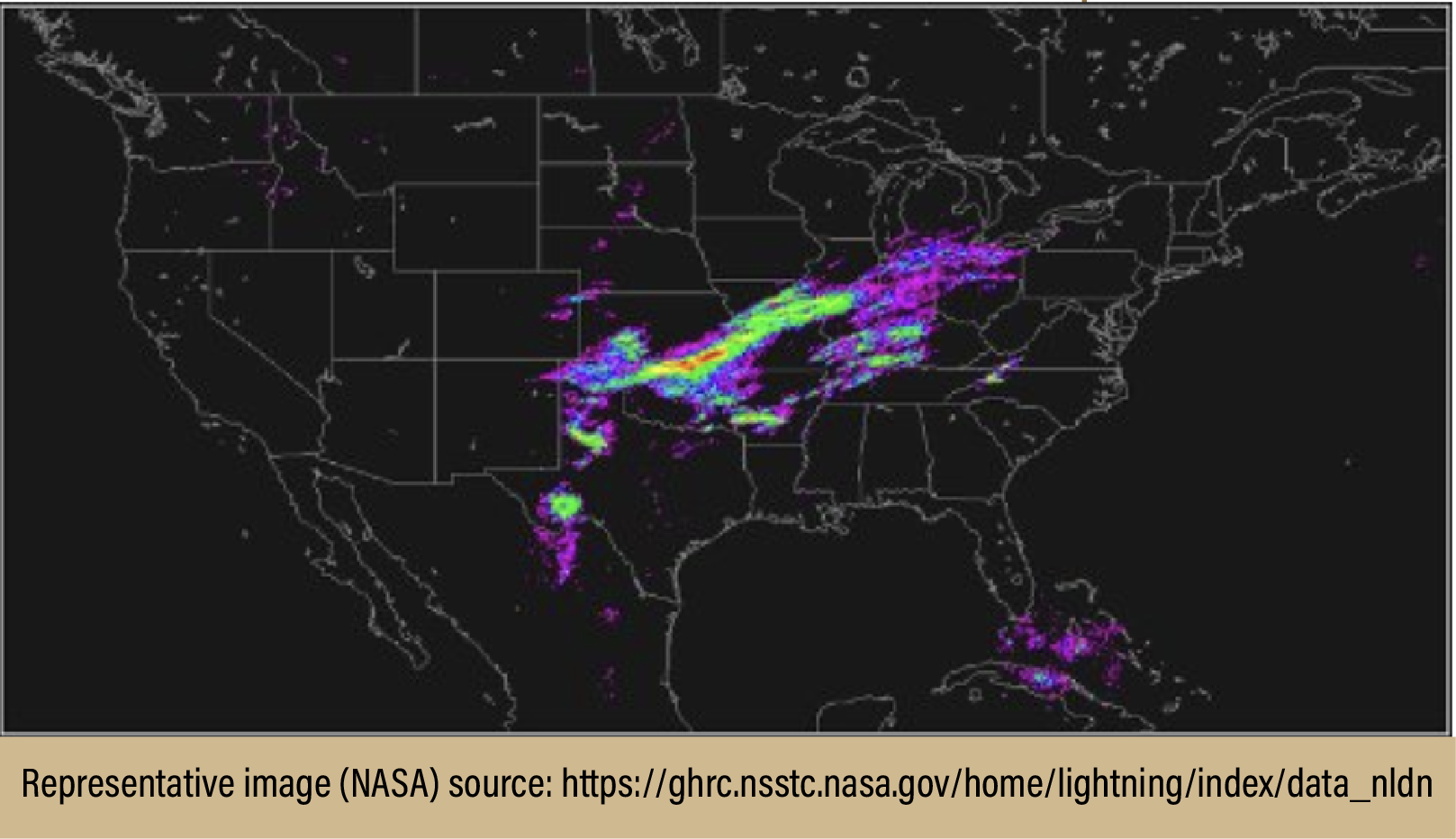

Anvil has been busy enabling research experience programs for undergraduates as well. One such program involved five students from the University of Illinois at Urbana-Champaign (U of I) who took part in a semester-long research experience program to gain practical knowledge of data analytics and statistical research using common HPC resources. Daniel Ries, a Principal Data Scientist at Sandia National Laboratories, led the project, teaching undergraduates in statistics and computer science the skills they will need once they enter the workforce. According to Ries, the intent behind this research experience was multifaceted: he wanted to use a real-world research problem to teach students how to scope, execute, and refine a project and draw conclusions from it, apply knowledge gained from coursework to actual research, and, importantly, create reproducible research on a common computing platform. By obtaining access to the Anvil supercomputer, the students were able to accomplish all of this and more.

“Getting the students on Anvil was not only a benefit, you know, in terms of reproducibility, but in terms of what these students will be doing when they either go to grad school or get a full-time job in the data science world,” says Ries. “Most of the work that’s done at a company, at a research lab, in academia—the computing is done on servers. You don’t do computing on your own laptop or your own computer anymore. Just given the scale of models, the scale of data, it’s very common to have to get used to working in a server environment, a Linux environment, things like that, and I don’t think actually any of the students had experience with that. So it actually turned out to be a very good, I think, experience for them.”

Not only did the students get HPC experience in an actual research application, but the research itself had practical implications. The group focused on a mode of predictive modeling known as “nowcasting.” With nowcasting, a research team is looking to predict weather conditions in the near future based on conditions in the very recent past. As part of this project, the undergraduate students set out to build three different predictive models that could determine where lightning would strike in the next 15 to 60 minutes, a type of nowcasting that is immensely useful across multiple sectors. Using data collected from multiple sources, the students were able to develop two traditional statistical models and a third model based on U-Net deep learning. The two traditional models, while typically not memory or computationally expensive, benefitted from the use of Anvil due to the sheer size of the data sets. The U-Net model was trained on the Anvil GPUs, saving the team an enormous amount of time (30-60 minutes per training run versus a day or more without). By the end of the semester, the students had successfully developed all three models.

Another research experience that Anvil supported was RCAC’s very own Anvil Research Experience for Undergraduates (REU) Summer 2023 program. The 2023 Anvil REU program saw five students from across the nation gather at Purdue’s campus in West Lafayette, Indiana, for 11 weeks to learn about HPC and work on projects related to the operations of the Anvil supercomputer. Eight members of RCAC’s staff provided mentorship to the five students throughout the summer, helping them to complete four separate Anvil-enhancing projects. The student participants of the program were:

- Ved Arora, Data Science & Analytics major from Case Western Reserve University in Ohio

- Nayeli Gurrola, Computer Science major from the University of Texas Rio Grande Valley

- Oluwatumininu Oguntola, Computer Science major from the University of North Carolina at Chapel Hill

- Henry Olson, Computer Science and Cybersecurity double major from Purdue University Northwest

- Aneesh Chakravarthula, Computer Science major from Purdue University

By summer’s end, the students had made fantastic progress: they completed their projects, learned technical and people skills they will need when in the workforce, and gained an in-depth understanding of the world of HPC. Each of the four projects is still being used by the Anvil team in some capacity to help advance the system, and two of the students were even able to produce a conference publication from their work, which they presented at the 2023 International Conference for High-Performance Computing, Networking, Storage, and Analysis (SC23).

Industry Partnerships

Anvil’s second year of production saw the introduction of its Industry Partnership program. This program allows industry users to utilize the Anvil supercomputer for their business needs at a fraction of the cost charged by private HPC providers. Examples of current Industry Partnership users, as well as projects under discussion, include:

- Smart building technology company (Kubernetes GPU workloads)

- Non-profit engaged in clean energy research (I/O intensive workloads)

- High-resolution weather prediction company (large geospatial datasets)

- AI-driven platform for airport power infrastructure management for electric aircraft

- Electromagnetic propulsion systems

- Generative AI for personalized content

- Company working on cancer detection technology using blood tests

- Life sciences diagnostics company

- Technology company aimed at early detection of TBI and cognitive impairment

To learn more about the Industry Partnership program, please visit: https://www.rcac.purdue.edu/industry

Looking to the Future

With artificial intelligence (AI) becoming more and more prominent in society, it is clear that HPC systems will need to assist not only with studying AI, but also with efficiently using AI for the advancement of science and technology. The Anvil team has recognized that need and has responded by becoming an official resource for the newly launched National Artificial Intelligence Research Resource (NAIRR) Pilot. The NAIRR is an NSF project aimed at creating a national infrastructure that connects U.S. researchers to responsible and trustworthy AI resources. The NAIRR will also provide these researchers equitable access to the data, software, training, computational, and educational resources needed to advance research, discovery, and innovation within the field of AI. By being part of the NAIRR Pilot program, Anvil will directly support research projects that focus on testing and validating AI systems, improving model performance, increasing the interpretability and privacy of learned models, reducing vulnerability to attacks, and assuring that AI functionality aligns with societal values and obeys safety guarantees.

“We are very excited to take part in such an important effort and help provide the nation with advanced AI computing resources,” says Rosen Center Chief Scientist Carol Song, principal investigator and project director for Anvil. “Anvil was intended to lower barriers to applications of high-performance computing, with AI being a key workload. Joining the NAIRR, the Anvil team will bring together Purdue’s long history of supporting advanced computing with the experts being assembled on our campus to work on strategic initiatives, including Purdue Computes and the new Institute for Physical AI.”

The Anvil team also intends to continue its pursuit of excellence in HPC education throughout production year three. This summer’s Anvil REU program will see an increase from five undergraduate students to eight. These eight students will gain hands-on HPC experience while working to complete four separate, real-world application projects. The Anvil team will also be hosting two summer camp courses for high-school students. The first course, titled “CyberSafe Heroes,” will take place from June 16th through June 21st, 2024, and will focus on creating interest in and preparing students for careers in cybersecurity. The second, titled “Code Explorers,” will occur the following week, and will teach coding languages and introduce data analysis and visualization techniques to the students. Alongside these direct student interactions, Anvil will continue to provide world-class training sessions surrounding all things HPC. Many of the upcoming training sessions will focus on AI and data analysis applications, and how best to use them in a shared computing environment.

Other goals for the upcoming year include:

- Continue to drive for adoption of all Anvil capabilities

- Operations and collaboration with ACCESS

- Expand software and tools

- Expand user support and training

- Hardware expansions (GPUs/storage)

- Sustain and broaden outreach

- Increase Industry engagement

“Anvil has established itself as a major HPC resource to the national research community,” says Preston Smith, Executive Director for the Rosen Center for Advanced Computing and co-PI on the Anvil project. “After two years in production, we are pleased with everything Anvil has enabled thus far, whether it be the science conducted on the machine or the training and education opportunities it has provided. Looking ahead to year three, our goal is to continue to innovate, helping expand the boundaries of scientific discovery, while still providing world-class support and education for researchers nationwide. With our inclusion as a resource for the NAIRR Pilot, we are looking forward to the new challenges in the upcoming year.”

Anvil is funded under NSF award number 2005632. The Anvil executive team includes Carol Song (PI), Preston Smith (Co-PI), Rajesh Kalyanam (Co-PI), and Arman Pazouki (Co-PI). Researchers may request access to Anvil via the ACCESS allocations process.

Written by: Jonathan Poole, poole43@purdue.edu