Worldwide particle physics experiment relies on Geddes cluster

Purdue is a large contributor of computing power to a major particle physics experiment, which relies on the Geddes community cluster at the Rosen Center for Advanced Computing (RCAC), among others, to process and analyze large datasets in real time.

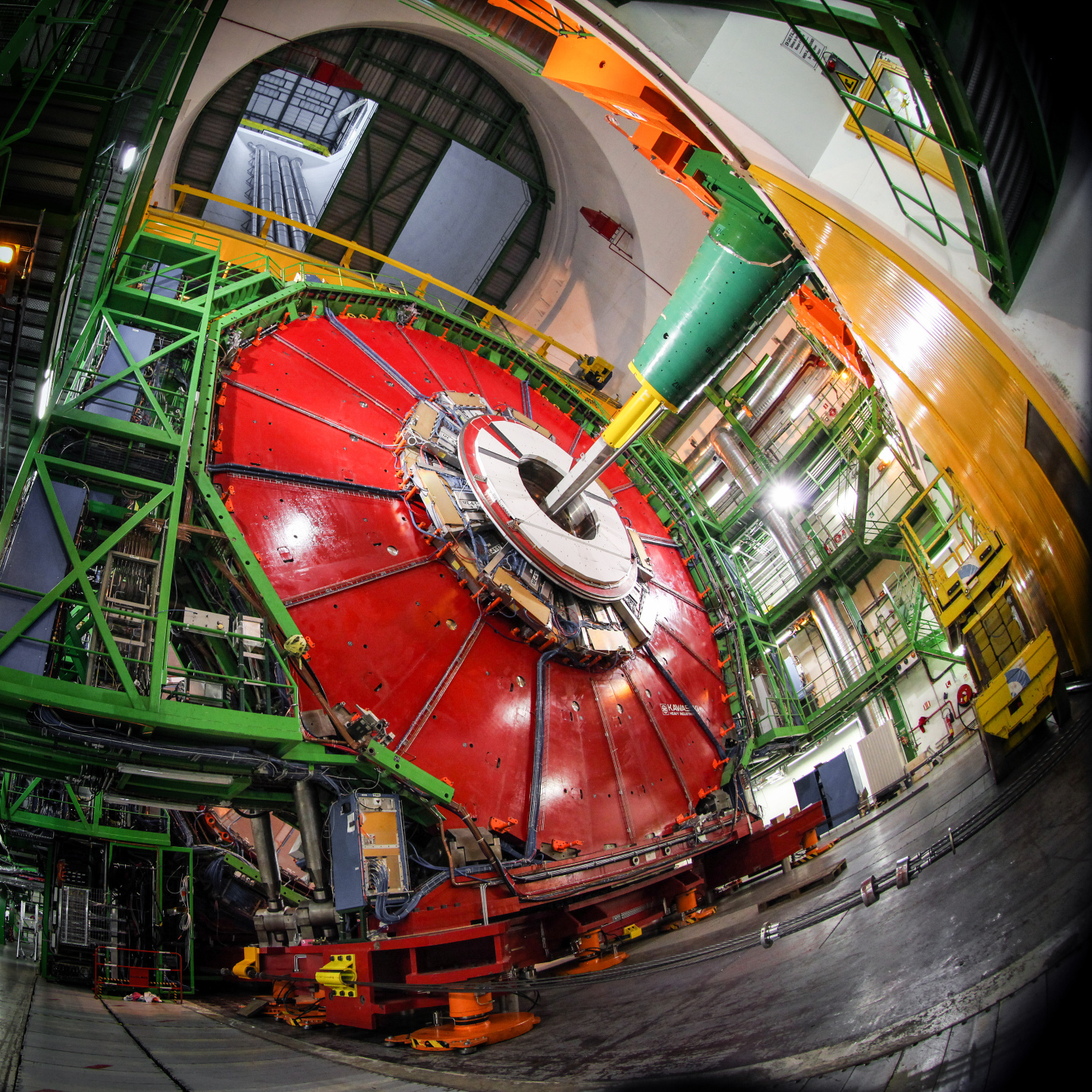

The Compact Muon Solenoid (CMS) experiment is a collaboration of more than 4,000 physicists, engineers, and students from 57 countries and regions who study data from the CMS detector at the Large Hadron Collider, the world’s largest and most powerful particle accelerator.

The CMS detector is a giant, high-speed, high-resolution camera capable of capturing proton-proton collisions and the individual particles ejected from them. By identifying and analyzing the particles produced in these collisions, scientists measure various properties of each collision and look for possible footprints of dark matter and other hypothesized phenomena.

The experiment’s name refers to the giant solenoid magnet at the core of the detector, which produces a field about 100,000 times stronger than the Earth’s magnetic field and is used to deflect charged particles.
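To give a rough sense of what that deflection means in practice, the sketch below uses the standard textbook relation between a charged particle’s transverse momentum and the radius of its curved path in a uniform magnetic field. The field value of 3.8 tesla and the function name `bending_radius_m` are assumptions made for illustration and do not come from the CMS software.

```python
# Sketch: radius of curvature of a charged particle in a uniform magnetic field.
# Uses the standard relation p_T [GeV/c] ≈ 0.2998 * |q| * B [T] * r [m].

CMS_FIELD_TESLA = 3.8  # assumption: nominal CMS solenoid field, not given in the article


def bending_radius_m(pt_gev: float, b_tesla: float = CMS_FIELD_TESLA, charge: int = 1) -> float:
    """Return the bending radius in metres for a given transverse momentum."""
    if pt_gev <= 0 or b_tesla <= 0 or charge == 0:
        raise ValueError("momentum and field must be positive, charge must be nonzero")
    return pt_gev / (0.2998 * abs(charge) * b_tesla)


if __name__ == "__main__":
    for pt in (1.0, 10.0, 100.0):  # illustrative momenta in GeV/c
        print(f"pT = {pt:6.1f} GeV/c -> r = {bending_radius_m(pt):7.2f} m")
```

Low-momentum particles curl tightly while high-momentum particles travel in nearly straight lines, so measuring the curvature of a track is one way to estimate the particle’s momentum.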

Purdue hosts several CMS research groups working on a wide range of topics, including searches for new physics phenomena, measurements of particle properties, algorithm development, manufacturing of detector components, machine learning, computing, and much more.

To analyze large datasets in a reasonable time, CMS distributes the processing across 170 computing sites located in 42 countries via the Worldwide LHC Computing Grid (WLCG). WLCG has ~1.4 million cores and ~1.5 exabytes of storage in total. A large part of these resources is hosted at Purdue.

CMS uses these computing resources to run various pattern recognition algorithms to identify particles in raw data and measure their properties, filter the events for further analysis, and apply sophisticated methods to improve the precision of the analysis.

At Purdue, these types of processing jobs depend on the Geddes cluster. Among U.S.-based data centers, Purdue is one of the largest contributors of computing power for CMS.

“At CMS, we engage in some of the most advanced experimental science, aiming to uncover the mysteries of the universe, such as the nature of dark matter. The Geddes cluster gives us the opportunity to leverage cutting-edge computing technologies, paired with excellent user support,” says Dmitry Kondratyev, one of the researchers working on the experiment.

With the rise of Kubernetes and cloud-native technologies in the last few years, there have been many impressive developments in computing infrastructure, which offer new ways to manage computing resources.

Using the Geddes cluster, researchers can access the latest Kubernetes functionality locally, with their own hardware, customized software and infrastructure, as well as close user support.

“The exceptional resilience and scalability of Kubernetes, along with the power of GPUs to accelerate AI algorithms, allow us to push the boundaries of scientific computing like never before,” adds Kondratyev.

Geddes is unique among RCAC’s community clusters for its design, which is optimized for composable, cloud-like workflows that are complementary to the batch applications run on other community clusters.

Funded by the National Science Foundation under grant OAC-2018926, Geddes consists of Dell compute nodes, each with two 64-core AMD Epyc 'Rome' processors (128 cores per node).

Future plans for the Geddes cluster include distributed training of machine learning algorithms and GitOps approaches to project development.

To learn more about the Geddes cluster and other RCAC resources, contact rcac-help@purdue.edu.