Recent GPU expansions to Gilbreth cluster increase capacity by 46%

After significantly expanding GPU computing capabilities in fall 2022, the Rosen Center for Advanced Computing (RCAC) has added 104 new NVIDIA A100 GPUs to the Gilbreth community cluster. Built on Dell PowerEdge R7525 compute nodes, each with 0.5 TB of RAM, two NVIDIA A100 Tensor Core GPUs, and 100 Gbps HDR InfiniBand, this expansion increases the GPU capacity of the cluster by 46%.

The new GPUs went into production in April 2023 and bring Gilbreth to an aggregate peak performance of 32 single-precision petaFLOPS (one petaFLOPS is one quadrillion floating-point operations per second), doubling Gilbreth's AI performance.

Over the last year, Gilbreth has supported 98 principal investigators from 25 departments, enabling research in fields ranging from computer science and electrical and computer engineering to healthcare, energetics, and materials science.

The added resources are part of RCAC's ongoing investment in supporting researchers who perform AI and machine learning work. Along with the additional hardware, RCAC has full-time research scientists with AI and machine learning expertise who offer training opportunities and are available to partner with faculty on proposals.

“Since the summer of 2022, continued investment by Purdue IT has allowed RCAC to grow our GPU capacity available to Purdue researchers and students from 104 to 331 after this latest expansion – a two-and-a-half times increase,” said Preston Smith, Executive Director for RCAC in Purdue IT.

“This investment in resources, and pricing structures that are better than do-it-yourself options will ensure that Purdue faculty have access to cutting-edge resources to ensure their competitiveness, while also benefitting from the community cluster program’s professional maintenance and scientific support to ensure that their research is appropriately safeguarded, and they can focus on scientific outcomes instead of technology problems.”

GPUs are well suited to AI and machine learning because they break complex tasks into many smaller subtasks and perform those subtasks in parallel. This allows researchers to get more done in less time than they could with the same investment in traditional CPUs.

As the 2023 winner of Purdue's Arden J. Bement Award for recent outstanding accomplishments in pure and applied sciences and engineering, Jay Gore, the Reilly University Chair Professor of Mechanical Engineering, recently spoke to a campus audience on the use of big data, machine learning, and augmented intelligence techniques to model the output and efficiency of steam generators and the power plants that use them.

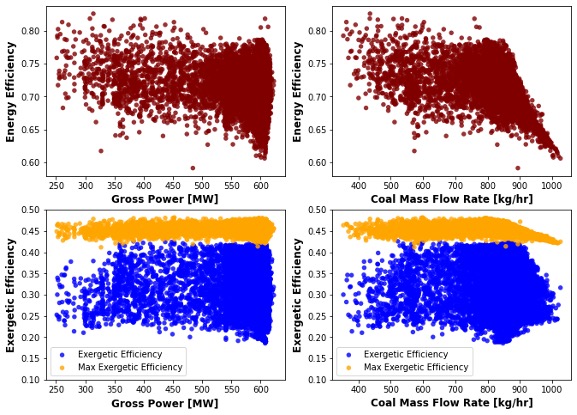

Although they were designed to produce steady output, coal-fired power plants are increasingly forced to operate under fluctuating demand, "cycling" power generation up and down as electrical needs rise and fall. Cycling, however, creates significant maintenance costs and affects the service life of power plant equipment.

Using supercomputers, Gore's graduate students, including Veeraraghava Raju Hasti, Abhishek Navarkar, and Elihu Deneke, developed an artificial neural network (ANN) that analyzed 10 years of power plant data to produce a model estimating the properties to which steam generator components are subjected during cycling operations.

This use of "physical AI" allows power plant operators to optimize parameters including pressures, temperatures, flow rates, and compositions during operations, minimizing the waste, pollutants, costs, and risks created by cycling. Gore also discussed work by his graduate students Luke Dillard, Rathziel Roncancio, and Mehmed Ulcay that uses big data, machine learning, and augmented intelligence to minimize overheating and blowout risks in gas turbine engines.

The new A100 Tensor Core GPUs are offered under a pricing model similar to that of CPU-based community cluster systems: researchers can purchase per-GPU units through either a one-time charge covering five years or an annual subscription. The economies of scale of community clusters allow Gilbreth GPUs to be priced more competitively than do-it-yourself GPU solutions, and the existing inventory provides immediate access to nodes without a lengthy procurement process.

As on other community clusters, researchers will have a queue specific to their lab and standby access to unused nodes. Access to Gilbreth can be purchased through the RCAC cluster orders website. The new GPUs were procured through support from Purdue IT as part of the community cluster program's lifecycle replacement plan.

To learn more about Gilbreth or other Research Computing resources, contact rcac-help@purdue.edu.