Data Depot User Guide

The Data Depot is a high-capacity, fast, reliable and secure data storage service designed, configured and operated for the needs of Purdue researchers in any field and shareable with both on-campus and off-campus collaborators.

Data Depot Overview

As with the community clusters, research labs will be able to easily purchase capacity in the Data Depot through the Data Depot Purchase page on this site. For more information, please contact us.

Data Depot Features

The Data Depot offers research groups in need of centralized data storage unique features and benefits:

- Available

  To any Purdue research group, as a purchase in increments of 1 TB at a competitive annual price. You may also request a 100 GB trial space free of charge. Participation in the Community Cluster program is not required.

- Accessible

  - As a Windows or Mac OS X network drive on personal and lab computers on campus.

  - Directly on Community Cluster nodes.

  - From other universities or labs through Globus.

- Capable

  The Data Depot facilitates joint work on shared files across your research group, avoiding the need for numerous copies of datasets across individuals' home or scratch directories. It is an ideal place to store group applications, tools, scripts, and documents.

- Controllable Access

  Access management is under your direct control. Unix groups can be created for your group and staff can assist you in setting appropriate permissions to allow exactly the access you want and prevent any you do not. Easily manage who has access through a simple web application, the same application used to manage access to Community Cluster queues.

- Data Retention

  All data kept in the Data Depot remains owned by the research group's lead faculty. When researchers or students leave your group, any files left in their home directories may become difficult to recover. Files kept in Data Depot remain with the research group, unaffected by turnover, and could head off potentially difficult disputes.

- Never Purged

  The Data Depot is never subject to purging.

- Reliable

  The Data Depot is redundant and protected against hardware failures and accidental deletion. All data is mirrored at two different sites on campus to provide for greater reliability and to protect against physical disasters.

- Restricted Data

  The Data Depot is suitable for non-HIPAA human subjects data. See the Data Depot FAQ for a data security statement for your IRB documentation. The Data Depot is not approved for regulated data, including HIPAA, ePHI, FISMA, or ITAR data.

Data Depot Hardware Details

The Data Depot uses an enterprise-class GPFS storage solution with an initial total capacity of over 2 PB. This storage is redundant and reliable, features regular snapshots, and is globally available on all RCAC systems. The Data Depot is non-purged space suitable for tasks such as sharing data, editing files, developing and building software, and many other uses. Built on Data Direct Networks' SFA12k storage platform, the Data Depot has redundant storage arrays in multiple campus datacenters for maximum availability.

While the Data Depot will scale well for most uses, we recommend that you continue to use each cluster's parallel scratch filesystem as high-performance working space (scratch) for running jobs.

File Storage and Transfer

Learn more about file storage and transfer for Data Depot.

Archive and Compression

There are several options for archiving and compressing groups of files or directories. The most commonly used options are:

tar

See the official documentation for tar for more information.

Saves many files together into a single archive file, and restores individual files from the archive. Includes automatic archive compression/decompression options and special features for incremental and full backups.

Examples:

(list contents of archive somefile.tar)

$ tar tvf somefile.tar

(extract contents of somefile.tar)

$ tar xvf somefile.tar

(extract contents of gzipped archive somefile.tar.gz)

$ tar xzvf somefile.tar.gz

(extract contents of bzip2 archive somefile.tar.bz2)

$ tar xjvf somefile.tar.bz2

(archive all ".c" files in current directory into one archive file)

$ tar cvf somefile.tar *.c

(archive and gzip-compress all files in a directory into one archive file)

$ tar czvf somefile.tar.gz somedirectory/

(archive and bzip2-compress all files in a directory into one archive file)

$ tar cjvf somefile.tar.bz2 somedirectory/

Other arguments for tar can be explored by using the man tar command.
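As a minimal sketch of how the create, list, and extract commands above fit together, the following round trip archives a directory, lists the archive, restores it elsewhere, and compares the result. The directory and file names are hypothetical, and everything runs in a temporary directory so no real data is touched.

```shell
# Work in a throwaway directory so nothing real is touched.
workdir=$(mktemp -d)
cd "$workdir"

# Hypothetical sample data standing in for a results directory.
mkdir -p results
printf 'alpha\n' > results/a.txt
printf 'beta\n' > results/b.txt

# Archive and gzip-compress the directory.
tar czf results.tar.gz results/

# List the archive contents without extracting.
listing=$(tar tzf results.tar.gz)
echo "$listing"

# Extract into a separate directory and compare against the originals.
mkdir restored
tar xzf results.tar.gz -C restored
if diff -r results restored/results > /dev/null; then
    roundtrip=ok
else
    roundtrip=mismatch
fi
echo "round trip: $roundtrip"
```

Checking an archive this way before deleting the originals is a cheap safeguard, especially for data that will be moved to long-term storage.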

gzip

The standard compression system for all GNU software.

Examples:

(compress file somefile - also removes uncompressed file)

$ gzip somefile

(uncompress file somefile.gz - also removes compressed file)

$ gunzip somefile.gz
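Because gzip and gunzip remove their input file by default, it can be useful to keep the original until the compressed copy has been checked. The sketch below is one way to do that, using the -c (write to standard output) and -t (test integrity) options. The file name and contents are hypothetical.

```shell
workdir=$(mktemp -d)
cd "$workdir"

# Hypothetical input file with repetitive content.
i=0
while [ "$i" -lt 200 ]; do
    echo "sample measurement line"
    i=$((i + 1))
done > somefile

# -c writes the compressed stream to stdout, so somefile is left in place.
gzip -c somefile > somefile.gz

# -t tests the integrity of the compressed file without extracting it.
integrity=failed
if gzip -t somefile.gz; then
    integrity=ok
fi

# Decompress to stdout and confirm the content matches the original.
if gunzip -c somefile.gz | cmp -s - somefile; then
    match=yes
else
    match=no
fi
echo "integrity: $integrity, match: $match"
```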

bzip2

See the official documentation for bzip2 for more information.

Strong, lossless data compressor based on the Burrows-Wheeler transform. Stronger compression than gzip.

Examples:

(compress file somefile - also removes uncompressed file)

$ bzip2 somefile

(uncompress file somefile.bz2 - also removes compressed file)

$ bunzip2 somefile.bz2

There are several other, less commonly used, options available as well:

- zip

- 7zip

- xz

Sharing Files from Data Depot

Data Depot supports several methods for file sharing. Use the links below to learn more about these methods.

Sharing Data with Globus

Data on any RCAC resource can be shared with other users within Purdue or with collaborators at other institutions. Globus allows convenient sharing of data with outside collaborators. Data can be shared with collaborators' personal computers or directly with many other computing resources at other institutions.

To share files, log in to https://transfer.rcac.purdue.edu, navigate to the endpoint (collection) of your choice, and follow the instructions in the Globus documentation on how to share data.

See also the RCAC Globus presentation.

Sharing static content from your Data Depot space via the WWW

Your research group can easily share static files (images, data, HTML) from your depot space via the WWW.

- Contact support to set up a "www" folder in your Data Depot space.

- Copy any files that you wish to share via the WWW into your Data Depot space's "www" folder.

- For example, cp /path/to/image.jpg /depot/mylab/www/, where mylab is your research group name.

- or upload to smb://datadepot.rcac.purdue.edu/depot/mylab/www, where mylab is your research group name.

- Your file is now accessible via your web browser at the URL https://www.datadepot.rcac.purdue.edu/mylab/image.jpg

Note that it is not possible to run web sites, dynamic content, interpreters (PHP, Perl, Python), or CGI scripts from this web site.
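The mapping between a file in the "www" folder and its public URL can be sketched as a small shell function. This is an illustration only: it uses the mylab placeholder from the example above, and it assumes that files in subdirectories of "www" map to URLs the same way, which this guide does not state.

```shell
# Assumed values taken from the example above; replace mylab with your group.
lab=mylab
www_root="/depot/$lab/www"
base_url="https://www.datadepot.rcac.purdue.edu/$lab"

# Print the public URL for a file stored under the www folder.
www_url() {
    path=$1
    case "$path" in
        "$www_root"/*)
            # Strip the www folder prefix to get the path relative to it.
            rel=${path#"$www_root"/}
            printf '%s/%s\n' "$base_url" "$rel"
            ;;
        *)
            echo "not under $www_root: $path" >&2
            return 1
            ;;
    esac
}

url=$(www_url "/depot/mylab/www/image.jpg")
echo "$url"
```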

File Transfer

Data Depot supports several methods for file transfer. Use the links below to learn more about these methods.

SCP

SCP (Secure CoPy) is a simple way of transferring files between two machines that use the SSH protocol. SCP is available as a protocol choice in some graphical file transfer programs and also as a command line program on most Linux, Unix, and Mac OS X systems. SCP can copy single files, but will also recursively copy directory contents if given a directory name.

After Aug 17, 2020, the community clusters will not support password-based authentication for login. Methods that can be used include two-factor authentication (Purdue Login) or SSH keys. If you do not have SSH keys installed, you would need to type your Purdue Login response into SCP's "Password" prompt.

Command-line usage:

You can transfer files both to and from Data Depot while initiating an SCP session on either some other computer or on Data Depot (in other words, directionality of connection and directionality of data flow are independent from each other). The scp command appears somewhat similar to the familiar cp command, with an extra user@host:file syntax to denote files and directories on a remote host. Either Data Depot or another computer can be a remote.

Example: Initiating SCP session on some other computer (i.e. you are on some other computer, connecting to Data Depot):

(transfer TO Data Depot)

(Individual files)

$ scp sourcefile myusername@data.rcac.purdue.edu:somedir/destinationfile

$ scp sourcefile myusername@data.rcac.purdue.edu:somedir/

(Recursive directory copy)

$ scp -pr sourcedirectory/ myusername@data.rcac.purdue.edu:somedir/

(transfer FROM Data Depot)

(Individual files)

$ scp myusername@data.rcac.purdue.edu:somedir/sourcefile destinationfile

$ scp myusername@data.rcac.purdue.edu:somedir/sourcefile somedir/

(Recursive directory copy)

$ scp -pr myusername@data.rcac.purdue.edu:sourcedirectory somedir/

The -p flag is optional. When used, it will cause the transfer to preserve file attributes and permissions. The -r flag is required for recursive transfers of entire directories.

Example: Initiating SCP session on Data Depot (i.e. you are on Data Depot, connecting to some other computer):

(transfer TO Data Depot)

(Individual files)

$ scp myusername@another.computer.example.com:sourcefile somedir/destinationfile

$ scp myusername@another.computer.example.com:sourcefile somedir/

(Recursive directory copy)

$ scp -pr myusername@another.computer.example.com:sourcedirectory/ somedir/

(transfer FROM Data Depot)

(Individual files)

$ scp somedir/sourcefile myusername@another.computer.example.com:destinationfile

$ scp somedir/sourcefile myusername@another.computer.example.com:somedir/

(Recursive directory copy)

$ scp -pr sourcedirectory myusername@another.computer.example.com:somedir/

The -p flag is optional. When used, it will cause the transfer to preserve file attributes and permissions. The -r flag is required for recursive transfers of entire directories.
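The user@host:file syntax is the part of scp that most often goes wrong in scripts. One possible approach is to build the remote specification in a single helper and print the resulting commands for review before running them. The sketch below does only that and never contacts a server. The helper name remote_spec is hypothetical, and the user name and paths are the placeholders used in this section.

```shell
# Assumed placeholders from the examples in this section.
depot_user=myusername
depot_host=data.rcac.purdue.edu

# Build the user@host:path form that scp uses for remote files.
remote_spec() {
    printf '%s@%s:%s' "$depot_user" "$depot_host" "$1"
}

# Compose the commands as strings and print them instead of running them.
upload_cmd="scp -pr sourcedirectory/ $(remote_spec somedir/)"
download_cmd="scp -pr $(remote_spec sourcedirectory) somedir/"
echo "$upload_cmd"
echo "$download_cmd"
```

Once the printed commands look right, they can be pasted into a terminal or run from the script directly.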

Software (SCP clients)

Linux and other Unix-like systems:

- The scp command-line program should already be installed.

Microsoft Windows:

- MobaXterm: free, full-featured, graphical Windows SSH, SCP, and SFTP client.

- The command-line scp program can be installed as part of Windows Subsystem for Linux (WSL) or Git-Bash.

Mac OS X:

- The scp command-line program should already be installed. You may start a local terminal window from "Applications->Utilities".

- Cyberduck is a full-featured and free graphical SFTP and SCP client.

Globus

Globus, previously known as Globus Online, is a powerful and easy to use file transfer service for transferring files virtually anywhere. It works within RCAC's various research storage systems; it connects between RCAC and remote research sites running Globus; and it connects research systems to personal systems. You may use Globus to connect to your home, scratch, and Fortress storage directories. Since Globus is web-based, it works on any operating system that is connected to the internet. The Globus Personal client is available on Windows, Linux, and Mac OS X. It is primarily used as a graphical means of transfer but it can also be used over the command line.

Globus Web:

- Navigate to https://transfer.rcac.purdue.edu

- Click "Proceed" to log in with your Purdue Career Account.

- On your first login it will ask to make a connection to a Globus account. Accept the conditions.

- Now you are at the main screen. Click "File Transfer", which will bring you to a two-panel interface (if you only see one panel, you can use the selector in the top-right corner to switch the view).

- You will need to select one collection and file path on one side as the source, and the second collection on the other as the destination. This can be one of several Purdue endpoints, or another University, or even your personal computer (see Personal Client section below).

The RCAC collections are as follows. A search for "Purdue" will give you several suggested results you can choose from, or you can give a more specific search.

- Research Data Depot: "Purdue Research Computing - Data Depot", a search for "Depot" should provide appropriate matches to choose from.

- Fortress: "Purdue Fortress HPSS Archive", a search for "Fortress" should provide appropriate matches to choose from.

From here, select a file or folder in either side of the two-pane window, and then use the arrows in the top-middle of the interface to instruct Globus to move files from one side to the other. You can transfer files in either direction. You will receive an email once the transfer is completed.

Globus Personal Client setup:

Globus Connect Personal is a small software tool you can install to make your own computer a Globus endpoint on its own. It is useful if you need to transfer files via Globus to and from your computer directly.

- On the "Collections" page from earlier, click "Get Globus Connect Personal", or download the version for your operating system from the Globus Connect Personal page.

- Name this particular personal system and follow the setup prompts to create your Globus Connect Personal endpoint.

- Your personal system is now available as a collection within the Globus transfer interface.

Globus Command Line:

Globus supports a command-line interface, allowing advanced automation of your transfers.

To use the recommended standalone Globus CLI application (the globus command):

- First time use: issue the globus login command and follow instructions for initial login.

- Commands for interfacing with the CLI can be found via Using the Command Line Interface, as well as the Globus CLI Examples pages.

Sharing Data with Outside Collaborators

Globus allows convenient sharing of data with outside collaborators. Data can be shared with collaborators' personal computers or directly with many other computing resources at other institutions. See the Globus documentation on how to share data.

For links to more information, please see the Globus Support page and the RCAC Globus presentation.

Windows Network Drive / SMB

SMB (Server Message Block), also known as CIFS, is an easy to use file transfer protocol that is useful for transferring files between RCAC systems and a desktop or laptop. You may use SMB to connect to your home, scratch, and Fortress storage directories. The SMB protocol is available on Windows, Linux, and Mac OS X. It is primarily used as a graphical means of transfer but it can also be used over the command line.

Note: to access Data Depot through SMB file sharing, you must be on a Purdue campus network or connected through VPN.

Windows:

- Windows 7: Click Windows menu > Computer, then click Map Network Drive in the top bar

- Windows 8 & 10: Tap the Windows key, type computer, select This PC, click Computer > Map Network Drive in the top bar

- In the folder location enter the following information and click Finish:

- To access your Data Depot directory, enter \\datadepot.rcac.purdue.edu\depot\mylab where mylab is your research group name. Use your career account login name and password when prompted. (You will not need to add ",push" nor use your Purdue Duo client.)

- Your Data Depot directory should now be mounted as a drive in the Computer window.

Mac OS X:

- In the Finder, click Go > Connect to Server

- In the Server Address enter the following information and click Connect:

- To access your Data Depot directory, enter smb://datadepot.rcac.purdue.edu/depot/mylab where mylab is your research group name. Use your career account login name and password when prompted. (You will not need to add ",push" nor use your Purdue Duo client.)

Linux:

- There are several graphical methods to connect in Linux depending on your desktop environment. Once you find out how to connect to a network server on your desktop environment, choose the Samba/SMB protocol and adapt the information from the Mac OS X section to connect.

- If you would like access via samba on the command line you may install smbclient which will give you FTP-like access and can be used as shown below. For all the possible ways to connect look at the Mac OS X instructions.

smbclient //datadepot.rcac.purdue.edu/depot/ -U myusername

cd mylab

- Note: Use your career account login name and password when prompted. (You will not need to add ",push" nor use your Purdue Duo client.)

FTP / SFTP

FTP is not supported on any research systems because it does not allow for secure transmission of data. Use SFTP instead, as described below.

SFTP (Secure File Transfer Protocol) is a reliable way of transferring files between two machines. SFTP is available as a protocol choice in some graphical file transfer programs and also as a command-line program on most Linux, Unix, and Mac OS X systems. SFTP has more features than SCP and allows for other operations on remote files, remote directory listing, and resuming interrupted transfers. Command-line SFTP cannot recursively copy directory contents; to do so, try using SCP or a graphical SFTP client.

After Aug 17, 2020, the community clusters will not support password-based authentication for login. Methods that can be used include two-factor authentication (Purdue Login) or SSH keys. If you do not have SSH keys installed, you would need to type your Purdue Login response into the SFTP's "Password" prompt.

Command-line usage

You can transfer files both to and from Data Depot while initiating an SFTP session on either some other computer or on Data Depot (in other words, directionality of connection and directionality of data flow are independent from each other). Once the connection is established, you use put or get subcommands between "local" and "remote" computers. Either Data Depot or another computer can be a remote.

Example: Initiating SFTP session on some other computer (i.e. you are on another computer, connecting to Data Depot):

$ sftp myusername@data.rcac.purdue.edu

(transfer TO Data Depot)

sftp> put sourcefile somedir/destinationfile

sftp> put -P sourcefile somedir/

(transfer FROM Data Depot)

sftp> get sourcefile somedir/destinationfile

sftp> get -P sourcefile somedir/

sftp> exit

The -P flag is optional. When used, it will cause the transfer to preserve file attributes and permissions.

Example: Initiating SFTP session on Data Depot (i.e. you are on Data Depot, connecting to some other computer):

$ sftp myusername@another.computer.example.com

(transfer TO Data Depot)

sftp> get sourcefile somedir/destinationfile

sftp> get -P sourcefile somedir/

(transfer FROM Data Depot)

sftp> put sourcefile somedir/destinationfile

sftp> put -P sourcefile somedir/

sftp> exit

The -P flag is optional. When used, it will cause the transfer to preserve file attributes and permissions.
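The put and get subcommands can also be collected in a batch file and passed to sftp with its -b option, which reads subcommands from a file instead of the prompt. The sketch below only writes the batch file and prints the command that would use it. It assumes SSH keys are already set up, since batch mode cannot answer an interactive login prompt, and the file name results.txt is hypothetical.

```shell
batchfile=$(mktemp)

# Each line is an sftp subcommand, exactly as typed at the sftp> prompt.
cat > "$batchfile" <<'EOF'
put sourcefile somedir/destinationfile
get somedir/results.txt results.txt
EOF

# Print the command that would run the batch; nothing is transferred here.
sftp_cmd="sftp -b $batchfile myusername@data.rcac.purdue.edu"
echo "$sftp_cmd"
cat "$batchfile"
```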

Software (SFTP clients)

Linux and other Unix-like systems:

- The sftp command-line program should already be installed.

Microsoft Windows:

- MobaXterm: free, full-featured, graphical Windows SSH, SCP, and SFTP client.

- The command-line sftp program can be installed as part of Windows Subsystem for Linux (WSL) or Git-Bash.

Mac OS X:

- The sftp command-line program should already be installed. You may start a local terminal window from "Applications->Utilities".

- Cyberduck is a full-featured and free graphical SFTP and SCP client.

Lost File Recovery

Data Depot is protected against accidental file deletion through a series of snapshots taken every night just after midnight. Each snapshot provides the state of your files at the time the snapshot was taken. It does so by storing only the files which have changed between snapshots. A file that has not changed between snapshots is only stored once but will appear in every snapshot. This is an efficient method of providing snapshots because the snapshot system does not have to store multiple copies of every file.

These snapshots are kept for a limited time at various intervals. RCAC keeps nightly snapshots for 7 days, weekly snapshots for 4 weeks, and monthly snapshots for 3 months. This means you will find snapshots from the last 7 nights, the last 4 Sundays, and the last 3 first of the months. Files are available going back between two and three months, depending on how long ago the last first of the month was. Snapshots beyond this are not kept.

Only files which have been saved during an overnight snapshot are recoverable. If you lose a file the same day you created it, the file is not recoverable because the snapshot system has not had a chance to save the file.

Snapshots are not a substitute for regular backups. It is the responsibility of the researchers to back up any important data to the Fortress Archive. Data Depot does protect against hardware failures or physical disasters through other means; however, these other means are also not substitutes for backups.

Files in scratch directories are not recoverable. Files in scratch directories are not backed up. If you accidentally delete a file, a disk crashes, or old files are purged, they cannot be restored.

Data Depot offers several ways for researchers to access snapshots of their files.

flost

If you know when you lost the file, the easiest way is to use the flost command. This tool is available from any RCAC resource. If you do not have access to a compute cluster, any Data Depot user may use an SSH client to connect to data.rcac.purdue.edu and run this command.

To run the tool you will need to specify the location where the lost file was with the -w argument:

$ flost -w /depot/mylab

Replace mylab with the name of your lab's Data Depot directory. If you know more specifically where the lost file was you may provide the full path to that directory.

This tool will prompt you for the date on which you lost the file or would like to recover the file from. If the tool finds an appropriate snapshot it will provide instructions on how to search for and recover the file.

If you are not sure what date you lost the file, you may try entering different dates into flost to find the file, or you may manually browse the snapshots as described below.

Manual Browsing

You may also search through the snapshots by hand on the Data Depot filesystem if you are not sure what date you lost the file or would like to browse by hand. Snapshots can be browsed from any RCAC resource. If you do not have access to a compute cluster, any Data Depot user may use an SSH client to connect to data.rcac.purdue.edu and browse from there. The snapshots are located at /depot/.snapshots on these resources.

You can also mount the snapshot directory over Samba (or SMB, CIFS) on Windows or Mac OS X. Mount (or map) the snapshot directory in the same way as you did for your main Data Depot space substituting the server name and path for \\datadepot.rcac.purdue.edu\depot\.winsnaps (Windows) or smb://datadepot.rcac.purdue.edu/depot/.winsnaps (Mac OS X).

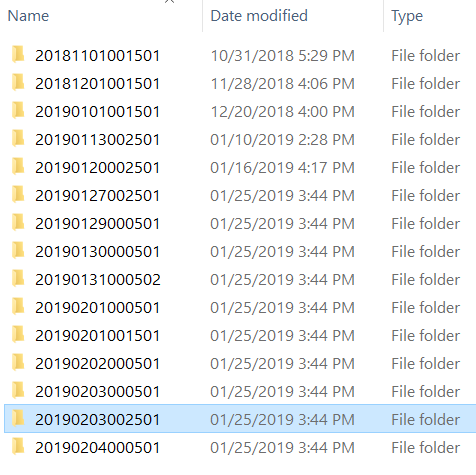

Once connected to the snapshot directory through SSH or Samba, you will see something similar to this:

[Screenshots: snapshot directory listing over SSH to data.rcac.purdue.edu; snapshot directory listing over a Samba mount on datadepot.rcac.purdue.edu]

Each of these directories is a snapshot of the entire Data Depot filesystem at the timestamp encoded into the directory name. The format for this timestamp is year, two digits for month, two digits for day, followed by the time of the day.

You may cd into any of these directories where you will find the entire Data Depot filesystem. Use cd to continue into your lab's Data Depot space and then you may browse the snapshot as normal.

If you are browsing these directories over a Samba network drive you can simply drag and drop the files over into your live Data Depot folder.

Once you find the file you are looking for, use cp to copy the file back into your lab's live Data Depot space. Do not attempt to modify files directly in the snapshot directories.
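The manual recovery steps can be rehearsed safely with a stand-in directory tree. The following sketch builds a fake snapshot layout in a temporary directory, searches it for a lost file, and copies one version back into the live space. The snapshot names snap_A and snap_B, the file notes.txt, and the mylab/data path are hypothetical; on RCAC systems the real snapshots are under /depot/.snapshots and are named by timestamp.

```shell
# Stand-in for /depot so the sketch runs anywhere; on RCAC systems use /depot.
depot=$(mktemp -d)

# Hypothetical snapshot directory names; real names encode the snapshot timestamp.
mkdir -p "$depot/.snapshots/snap_A/mylab/data" "$depot/.snapshots/snap_B/mylab/data"
mkdir -p "$depot/mylab/data"
echo "older version" > "$depot/.snapshots/snap_A/mylab/data/notes.txt"
echo "newer version" > "$depot/.snapshots/snap_B/mylab/data/notes.txt"

# List every snapshot copy of the lost file.
find "$depot/.snapshots" -path '*/mylab/data/notes.txt' -type f | sort

# Copy the chosen version back into the live space; -p keeps timestamps and mode.
cp -p "$depot/.snapshots/snap_B/mylab/data/notes.txt" "$depot/mylab/data/notes.txt"
restored=$(cat "$depot/mylab/data/notes.txt")
echo "restored: $restored"
```

Copying out of the snapshot, rather than editing in place, matches the guidance above not to modify files in the snapshot directories.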

Windows

If you use Data Depot through "network drives" on Windows you may recover lost files directly from within Windows:

- Open the folder that contained the lost file.

- Right click inside the window and select "Properties".

- Click on the "Previous Versions" tab.

- A list of snapshots will be displayed.

- Select the snapshot from which you wish to restore.

- In the new window, locate the file you wish to restore.

- Simply drag the file or folder to its correct location.

In the "Previous Versions" window the list contains two columns. The first column is the timestamp on which the snapshot was taken. The second column is the date on which the selected file or folder was last modified in that snapshot. This may give you some extra clues to which snapshot contains the version of the file you are looking for.

Mac OS X

Mac OS X does not provide any way to access the Data Depot snapshots directly. To access the snapshots there are two options: browse the snapshots by hand through a network drive mount or use an automated command-line based tool.

To browse the snapshots by hand, follow the directions outlined in the Manual Browsing section.

To use the automated command-line tool, log into a compute cluster or into the host data.rcac.purdue.edu (which is available to all Data Depot users) and use the flost tool. On Mac OS X you can use the built-in SSH terminal application to connect.

- Open the Applications folder from Finder.

- Navigate to the Utilities folder.

- Double click the Terminal application to open it.

- Type the following command when the terminal opens.

$ ssh myusername@data.rcac.purdue.edu

Replace myusername with your Purdue career account username and provide your password when prompted.

Once logged in use the flost tool as described above. The tool will guide you through the process and show you the commands necessary to retrieve your lost file.

Access Permissions & Directories

Data Depot is very flexible in the access permission models and directory structures it can support. New spaces on Data Depot are given a default access model and directory structure at setup time. This can be modified as needed to support your workflows.

Information follows about the default structure and common access models.

Default Configuration

This is what a default configuration looks like for a research group called "mylab":

/depot/mylab/

+--apps/

|

+--data/

|

+--etc/

| +--bashrc

| +--cshrc

|

(other subdirectories)

The /depot/mylab/ directory is the main top-level directory for all your research group storage. All files are to be kept within one of the subdirectories of this, based on your specific access requirements. These subdirectories will be created after consulting with you as to exactly what you need.

By default, the following subdirectories, with the following access and use models, will be created. All of these details can be changed to suit the particular needs of your research group.

- data/

  Intended for read and write use by a limited set of people chosen by the research group's managers.

  Restricted to not be readable or writable by anyone else.

  This is frequently used as an open space for storage of shared research data.

- apps/

  Intended for write use by a limited set of people chosen by the research group's managers.

  Restricted to not be writable by anyone else.

  Allows read and execute by anyone who has access to any cluster queues owned by the research group and anyone who has other file permissions granted by the research group (such as "data" access above).

  This is frequently used as a space for central management of shared research applications.

- etc/

  Intended for write use by a limited set of people chosen by the research group's managers (by default, the same as for "apps" above).

  Restricted to not be writable by anyone else.

  Allows read and execute by anyone who has access to any cluster queues owned by the research group and anyone who has other file permissions granted by the research group (such as "data" access above).

  This is frequently used as a space for central management of shared startup/login scripts, environment settings, aliases, etc.

- etc/bashrc, etc/cshrc

  Examples of group-wide shell startup files. Group members can source these from their own $HOME/.bashrc or $HOME/.cshrc files so they would automatically pick up changes to their environment needed to work with applications and data for the research group. There are more detailed instructions in these files on how to use them.

- Additional subdirectories can be created as needed in the top and/or any of the lower levels. Just contact support and we will be happy to figure out what will work best for your needs.
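As one possible way to source the group-wide etc/bashrc from a personal $HOME/.bashrc, the sketch below wraps the source step in a readability check so that login still works if the file is missing or the Data Depot is not mounted. The function name source_group_rc and the variable MYLAB_APPS are hypothetical; the instructions inside your group's own etc/bashrc take precedence over this sketch.

```shell
# Source a group-wide startup file only if it exists and is readable.
source_group_rc() {
    rc=$1
    if [ -r "$rc" ]; then
        . "$rc"
    fi
}

# In $HOME/.bashrc this would be: source_group_rc /depot/mylab/etc/bashrc
# Demonstration with a temporary stand-in file and a hypothetical variable.
demo_rc=$(mktemp)
echo 'export MYLAB_APPS=/depot/mylab/apps' > "$demo_rc"
source_group_rc "$demo_rc"
echo "MYLAB_APPS=$MYLAB_APPS"
```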

Common Access Permission Scenarios

Depending on your research group's specific needs and preferred way of sharing, there are various permission models your Data Depot can be designed to reflect. Here are some common scenarios for access:

- "We have privately shared data within the group and some software for use only by us and a few collaborators."

  Suggested implementation:

  Keep data in the data/ subdirectory and limit read and write access to select approved researchers.

  Keep applications (if any) in the apps/ subdirectory and limit write access to your developers and/or application stewards.

  Allow read/execute to apps/ by anyone in the larger research group with cluster queue access and approved collaborators.

- "We have privately shared data within the group and some software which is needed by all cluster users (not just our group or known collaborators)."

  Suggested implementation:

  Keep data in the data/ subdirectory and limit read and write access to select approved researchers.

  Keep applications (if any) in the apps/ subdirectory and limit write access to your developers and/or application stewards.

  Allow read/execute to apps/ by anyone at all by opening read/execute permissions on your base Data Depot directory.

- "We have a few different projects and only the PI and respective project members should have any access to files for each project."

  Suggested implementation:

  Create distinct subdirectories within your Data Depot base directory for each project and corresponding Unix groups for read/write access to each.

  Approve specific researchers for read and write access to only the projects they are working on.

Many variants and combinations of the above are also possible covering the range from "very restrictive" to "mostly open" in terms of both read and write access to each subdirectory within your Data Depot space. Your lab can sit down with our staff and explain your specific needs in human terms, and then we can help you implement those requirements in actual permissions and groups. Once the initial configuration is done, you will then be able to easily add or remove access for your people. If your needs change, just let us know and we can accommodate your new requirements as well.

Storage Access Unix Groups

To enable a wide variety of access permissions, users are assigned one or more auxiliary Unix groups. It is the combination of this Unix group membership and the r/w/x permission bits on subdirectories that allows fine-tuning of who can and cannot do what within specific areas of your Data Depot. The names of these Unix groups generally combine the name of your Data Depot root directory with the name of the subdirectory to which write access is being given. For example, write access to /depot/mylab/data/ is controlled by membership in the mylab-data Unix group.

There is also one Unix group which has the name of the base directory of your Data Depot, mylab. This group serves to limit read/execute access to your base /depot/mylab/ directory and also helps to define the read/execute permissions of some of the subdirectories within. This Unix group is composed of the union of the following:

- all members of your more specific Unix groups

- all users authorized to access any of your research group's cluster queues

- any other specific individuals you may have approved

Research group faculty and their designees may directly manage membership in these Unix groups, and by extension, the storage access permissions they grant, through the online web application.

Checking Your Group Membership

As a user you can check which groups you are a member of by issuing the groups command while logged into any RCAC resource. You can also look on the website at https://www.rcac.purdue.edu/account/groups.

$ groups

mylab mylab-apps mylab-data

If you have recently been added to a group, you need to log out and then back in again before the permission changes take effect.
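To check for one specific group rather than reading the full list, the membership test can be scripted. This is a small sketch using the standard `id` command; the helper name `in_group` is hypothetical, and `mylab-data` is the example group name used in this guide, so substitute your own.

```shell
# Report whether the current login session belongs to a given Unix group.
# in_group is a hypothetical helper name, not an RCAC-provided command.
in_group() {
    # id -nG prints the names of all groups for the current user on one line;
    # split them one per line and look for an exact, literal match.
    id -nG | tr ' ' '\n' | grep -Fqx -- "$1"
}

if in_group "mylab-data"; then
    echo "This session is in mylab-data."
else
    echo "This session is not in mylab-data; if you were just added, log out and back in."
fi
```

The check reflects the groups of the current login session, which is why a recent addition does not show up until you log in again. The sketch assumes group names contain no spaces.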

Frequently Asked Questions

Some common questions, errors, and problems are categorized below.

About Data Depot

Frequently asked questions about Data Depot.

Can you remove me from the Data Depot mailing list?

Your subscription to the Data Depot mailing list is tied to your account on Data Depot. If you are no longer using your account on Data Depot, you can delete it from the My Accounts page. Hover over the resource you wish to remove yourself from and click the red 'X' button. Your account and mailing list subscription will be removed overnight. Be sure to make a copy of any data you wish to keep first.

What sort of performance should I expect to and from the Data Depot?

| Access type | Large file, reading | Large file, writing | Many small files, reading | Many small files, writing |
|---|---|---|---|---|
| CIFS access, single client (GigE) | 102.1 MB/sec | 71.64 MB/sec | 12.43 MB/sec | 11.57 MB/sec |
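The rates in the table above can be turned into a rough transfer-time estimate. The sketch below assumes the rate is sustained for the whole transfer and treats 100 GB as 100000 MB; the function name `transfer_seconds` is hypothetical, and real transfers will vary with network load and file sizes.

```shell
# Estimate transfer time in whole seconds for a given size and sustained rate.
# Usage: transfer_seconds SIZE_MB RATE_MB_PER_SEC
transfer_seconds() {
    awk -v size="$1" -v rate="$2" 'BEGIN { printf "%.0f\n", size / rate }'
}

# 100 GB (taken as 100000 MB) at the large-file CIFS rates from the table.
echo "read:  $(transfer_seconds 100000 102.1) s"
echo "write: $(transfer_seconds 100000 71.64) s"
```

Under these assumptions a 100 GB large-file transfer over CIFS takes roughly 16 minutes to read and 23 minutes to write. The same data stored as many small files would take several times longer at the small-file rates.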

Is the Data Depot just a file server?

The Data Depot is a suite of file service tools, specifically targeted at the needs of an academic research lab. More than just the file service infrastructure and hardware, the Data Depot also encompasses self-service access management, permissions control, and file sharing with Globus.

Do I need to do anything to my firewall to access Data Depot?

No firewall changes are needed to access Data Depot. However, to access data through Network Drives (i.e., CIFS, "Z: Drive"), you must be on a Purdue campus network or connected through VPN.

What is the best way to mount Data Depot in my lab?

You can mount your Data Depot space via Network Drives / CIFS using your Purdue Career Account. NFS access may also be possible depending on your lab's environment. If you require NFS access, contact support.

How do Data Depot, Fortress, and PURR relate to each other?

The Data Depot, Fortress, and PURR are complementary parts of Purdue's infrastructure for working with research data. The Data Depot is designed for large, actively used, persistent research data; Fortress is intended for long-term, archival storage of data and results; and PURR is for management, curation, and long-term preservation of research data.

Data

Frequently asked questions about data and data management.

Can I store Export-controlled data on Data Depot?

The Data Depot is not approved for storing export-controlled data, including data subject to ITAR, FISMA, DFAR-7012, or NIST 800-171. Please contact the Export Control Office to discuss technology control plans and data storage appropriate for export-controlled projects.

Can I store HIPAA data on Data Depot?

The Data Depot is not approved for storing data covered by HIPAA. Please contact the HIPAA Compliance Office to discuss HIPAA-compliant data storage.

What do I need to do in order to store non-HIPAA human subjects data in the Data Depot?

Use the following IRB-approved text in your IRB documentation when describing your data safeguards, substituting the PI's name for "PROFESSORNAME":

Only individuals specifically approved by PROFESSORNAME may access project data in the Research Data Depot. All membership in the PROFESSORNAME group is authorized by the project PI(s) and/or designees. Purdue University has network firewalls and other security devices to protect the Research Data Depot infrastructure from outside the campus.

Purdue Career accounts have password security policies that enforce age and quality requirements.

Auditing is enabled on Research Data Depot fileservers to track login attempts, maintain logs, and generate reports of access attempts.

Can I share data with outside collaborators?

Yes! Globus allows convenient sharing of data with outside collaborators. Data can be shared with collaborators' personal computers or directly with many other computing resources at other institutions. See the Globus documentation on how to share data: