Gautschi User Guide


Overview of Gautschi

Gautschi is a Community Cluster optimized for communities running traditional, tightly-coupled science and engineering applications. The Gautschi Community Cluster is equipped with both CPU and GPU compute nodes, each designed for specific computational tasks. The cluster's Dell CPU compute nodes feature dual 96-core AMD EPYC "Genoa" processors, providing 192 cores and 384 GB of memory per node. Its H100 GPU compute nodes come with two 56-core Intel Xeon Platinum 8480+ processors, a total of 1031 GB of CPU memory, and eight NVIDIA H100 GPUs with 80 GB of memory each. All compute nodes have a 400 Gbps NDR InfiniBand interconnect and will remain in service through 2030.

Access to Gautschi is offered in units of 48 cores (one quarter of a CPU node) for CPU nodes, or in 5 GPU-year packages for GPU nodes. To purchase access to Gautschi today, go to the Cluster Access Purchase page. Please subscribe to our Community Cluster Program Mailing List to stay informed on the latest purchasing developments, or contact us via email at rcac-cluster-purchase@lists.purdue.edu if you have any questions.

Gautschi Namesake

Gautschi is named in honor of Dr. Walter Gautschi, Professor Emeritus of Computer Science and Mathematics at Purdue. More information about his life and impact on Purdue is available in the Biography of Gautschi.

Gautschi Specifications

Gautschi's standard CPU compute nodes (sub-cluster A) have 192 processing cores, 384 GB of memory, and 400 Gbps NDR InfiniBand interconnects. Each H100 GPU compute node (sub-cluster H) has eight NVIDIA H100 GPUs with 80 GB of GPU memory each, 112 processing cores, 1032 GB of CPU memory, and 400 Gbps NDR InfiniBand interconnects.

| Front-Ends | Number of Nodes | Processors per Node | Cores per Node | Memory per Node | Retires in |
|---|---|---|---|---|---|
| login00 to login07 | 8 | Two AMD EPYC 9654 96-Core Processor “Genoa” CPUs @ 2.4 GHz | 192 | 768 GB | 2030 |

| Sub-Cluster | Number of Nodes | Processors per Node | Cores per Node | Memory per Node | Retires in |
|---|---|---|---|---|---|
| A | 338 | Two AMD EPYC 9654 96-Core Processor “Genoa” CPUs @ 2.4 GHz | 192 | 384 GB | 2030 |
| B | 6 | Two AMD EPYC 9654 96-Core Processor “Genoa” CPUs @ 2.4 GHz | 192 | 1.5 TB | 2030 |
| G | 6 | Two AMD EPYC 9554 64-Core Processor “Genoa” CPUs @ 3.1 GHz, Two NVIDIA L40S GPUs (48 GB) | 128 | 384 GB | 2030 |
| H | 20 | Two Intel Xeon Platinum 8480+ 56-Core CPUs @ 3.8 GHz, Eight NVIDIA H100 GPUs (80 GB) | 112 | 1 TB | 2030 |

Gautschi nodes run Rocky Linux 9 and use Slurm (Simple Linux Utility for Resource Management) as the batch scheduler for resource and job management. Operating system patches are applied as security needs dictate.

On Gautschi, the following compiler and message-passing library are recommended for parallel code:

- GCC 14.1.0

- OpenMPI

Walter Gautschi

Professor Gautschi is Professor Emeritus of Computer Science and Mathematics in the Department of Computer Science at Purdue. Before coming to Purdue, Professor Gautschi did postdoctoral work as a Janggen-Pöhn Research Fellow at the National Institute of Applied Mathematics in Rome and at the Harvard Computation Laboratory. He also held positions at the National Bureau of Standards, American University, the Oak Ridge National Laboratory, and the University of Tennessee. Since coming to Purdue, he has been a Fulbright Scholar at the Technical University of Munich and has held visiting appointments at the University of Wisconsin, Argonne National Laboratory, the Wright-Patterson Air Force Base, ETH Zurich, the University of Padova, and the University of Basel.

He has been a Fulbright Lecturer, an ACM National Lecturer, and a SIAM Visiting Lecturer. He is, or has been, on the editorial boards of SIAM Journal on Mathematical Analysis, Numerische Mathematik, Calcolo, and Mathematics of Computation, and has served as a special editor for Linear Algebra and Its Applications. From 1984 to 1995, he was the managing editor of Mathematics of Computation and, since 1991, an honorary editor of Numerische Mathematik. In 2001, Professor Gautschi was elected a Corresponding Member of the Bavarian Academy of Sciences and Humanities and, in the same year, a Foreign Member of the Academy of Sciences of Turin.

Accounts on Gautschi

Obtaining an Account

To obtain an account, you must be part of a research group which has purchased access to Gautschi. Refer to the Accounts / Access page for more details on how to request access.

Outside Collaborators

A valid Purdue Career Account is required for access to any resource. If you do not currently have a valid Purdue Career Account, you must have a current Purdue faculty or staff member file a Request for Privileges (R4P) before you can proceed.

Logging In

To submit jobs on Gautschi, log in to the submission host gautschi.rcac.purdue.edu via SSH. This submission host is actually eight front-end hosts, login00.gautschi through login07.gautschi; each login to gautschi.rcac.purdue.edu is randomly assigned to one of them.

Purdue Login

SSH

- SSH to the cluster as usual.

- When asked for a password, type your password followed by ",push".

- Your Purdue Duo client will receive a notification to approve the login.

ThinLinc

- When asked for a password, type your password followed by ",push".

- Your Purdue Duo client will receive a notification to approve the login.

- The native ThinLinc client will prompt for Duo approval twice due to the way ThinLinc works.

- The native ThinLinc client also supports key-based authentication.

Passwords

Gautschi supports either Purdue two-factor authentication (Purdue Login) or SSH keys.

SSH Client Software

Secure Shell or SSH is a way of establishing a secure connection between two computers. It uses public-key cryptography to authenticate the user with the remote computer and to establish a secure connection. Its usual function involves logging in to a remote machine and executing commands. There are many SSH clients available for all operating systems:

Linux / Solaris / AIX / HP-UX / Unix:

- The ssh command is pre-installed. Log in using ssh myusername@gautschi.rcac.purdue.edu from a terminal.

Microsoft Windows:

- MobaXterm is a small, easy to use, full-featured SSH client. It includes X11 support for remote displays, SFTP capabilities, and limited SSH authentication forwarding for keys.

Mac OS X:

- The ssh command is pre-installed. You may start a local terminal window from "Applications->Utilities". Log in by typing the command ssh myusername@gautschi.rcac.purdue.edu.

When prompted for a password, enter your Purdue Career Account password followed by ",push". Your Purdue Duo client will then receive a notification to approve the login.

SSH Keys

General overview

To connect to Gautschi using SSH keys, you must follow three high-level steps:

- Generate a key pair consisting of a private and a public key on your local machine.

- Copy the public key to the cluster and append it to the $HOME/.ssh/authorized_keys file in your account.

- Test if you can SSH from your local computer to the cluster without using your Purdue password.

Detailed steps for different operating systems and SSH clients are given below.

Mac and Linux:

- Run ssh-keygen in a terminal on your local machine. You may supply a filename and a passphrase for protecting your private key, but neither is mandatory. To accept the default settings, press Enter without specifying a filename.

  Note: If you do not protect your private key with a passphrase, anyone with access to your computer could SSH to your account on Gautschi.

- By default, the key files will be stored in ~/.ssh/id_rsa and ~/.ssh/id_rsa.pub on your local machine. Recent OpenSSH releases generate an Ed25519 key by default instead, stored in ~/.ssh/id_ed25519 and ~/.ssh/id_ed25519.pub; if that is the case, substitute those file names in the commands in the following steps.

- Copy the contents of the public key into $HOME/.ssh/authorized_keys on the cluster with the following command. When asked for a password, type your password followed by ",push". Your Purdue Duo client will receive a notification to approve the login.

  ssh-copy-id -i ~/.ssh/id_rsa.pub myusername@gautschi.rcac.purdue.edu

  Note: use your actual Purdue account user name.

  If your system does not have the ssh-copy-id command, use this instead:

  cat ~/.ssh/id_rsa.pub | ssh myusername@gautschi.rcac.purdue.edu "mkdir -p ~/.ssh && chmod 700 ~/.ssh && cat >> ~/.ssh/authorized_keys"

- Test the new key by SSH-ing to the server. The login should now complete without asking for a password (if you set a passphrase, you will be asked for the passphrase instead).

- If the private key has a non-default name or location, you need to specify the key with:

  ssh -i my_private_key_name myusername@gautschi.rcac.purdue.edu
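If you use a key with a non-default name regularly, an OpenSSH client configuration entry saves typing the -i option every time. The shell function below is a minimal sketch that appends such an entry; the alias gautschi, the function name add_gautschi_host_alias, and the key path you pass in are illustrative choices, not something RCAC provides.

```shell
# Hypothetical helper: append a "gautschi" alias to an OpenSSH client config.
# Usage: add_gautschi_host_alias CONFIG_FILE USERNAME PRIVATE_KEY
add_gautschi_host_alias() {
  local config=$1 user=$2 key=$3

  # Create the directory if needed (a newly created final directory gets mode 700).
  mkdir -p -m 700 "$(dirname "$config")"

  # Refuse to add a second "Host gautschi" block.
  if [ -f "$config" ] && grep -qx 'Host gautschi' "$config"; then
    echo "Host gautschi already present in $config" >&2
    return 1
  fi

  {
    printf '\n'                                  # separate from any earlier entry
    printf 'Host gautschi\n'
    printf '    HostName gautschi.rcac.purdue.edu\n'
    printf '    User %s\n' "$user"
    printf '    IdentityFile "%s"\n' "$key"
    printf '    IdentitiesOnly yes\n'            # offer only this key to the server
  } >> "$config"

  # ssh rejects a config file that other users can write to.
  chmod 600 "$config"
}
```

For example, add_gautschi_host_alias ~/.ssh/config myusername ~/.ssh/my_private_key_name, after which ssh gautschi connects with that key. With OpenSSH 6.8 or newer, ssh -G gautschi prints the settings the client will actually use, which is a quick way to confirm the entry is picked up.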

Windows:

| Programs | Instructions |
|---|---|
| MobaXterm | Open a local terminal and follow Linux steps |
| Git Bash | Follow Linux steps |
| Windows 10 PowerShell | Follow Linux steps |
| Windows 10 Subsystem for Linux | Follow Linux steps |
| PuTTY | Follow steps below |

PuTTY:

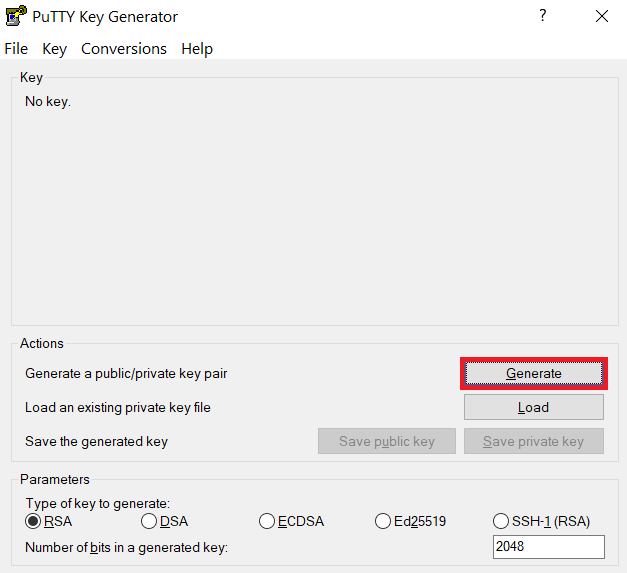

-

Launch PuTTYgen, keep the default key type (RSA) and length (2048-bits) and click Generate button.

The "Generate" button can be found under the "Actions" section of the PuTTY Key Generator interface. -

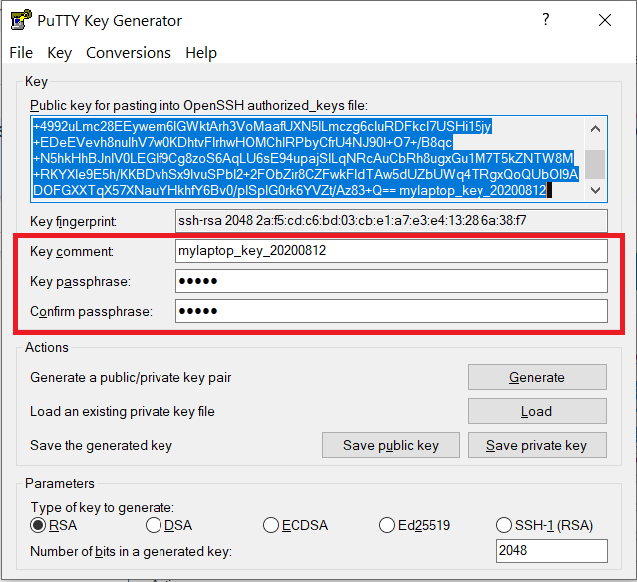

Once the key pair is generated:

Use the Save public key button to save the public key, e.g.

Documents\SSH_Keys\mylaptop_public_key.pubUse the Save private key button to save the private key, e.g.

Documents\SSH_Keys\mylaptop_private_key.ppk. When saving the private key, you can also choose a reminder comment, as well as an optional passphrase to protect your key, as shown in the image below. Note: If you do not protect your private key with a passphrase, anyone with access to your computer could SSH to your account on Gautschi.

The PuTTY Key Generator form has inputs for the Key passphrase and optional reminder comment. From the menu of PuTTYgen, use the "Conversion -> Export OpenSSH key" tool to convert the private key into openssh format, e.g.

Documents\SSH_Keys\mylaptop_private_key.opensshto be used later for Thinlinc. -

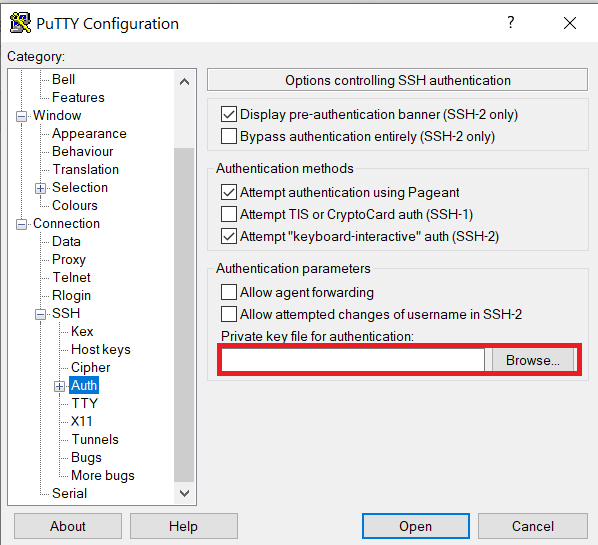

Configure PuTTY to use key-based authentication:

Launch PuTTY and navigate to "Connection -> SSH ->Auth" on the left panel, click Browse button under the "Authentication parameters" section and choose your private key, e.g. mylaptop_private_key.ppk

After clicking Connection -> SSH ->Auth panel, the "Browse" option can be found at the bottom of the resulting panel. Navigate back to "Session" on the left panel. Highlight "Default Settings" and click the "Save" button to ensure the change in place.

-

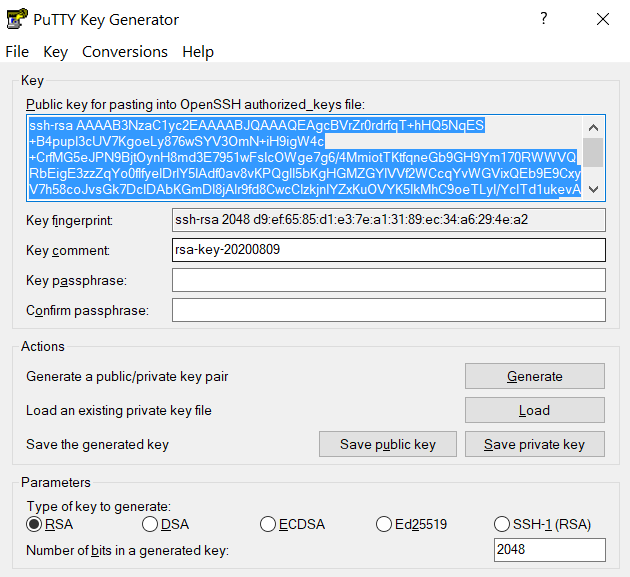

Connect to the cluster. When asked for a password, type your password followed by "

,push". Your Purdue Duo client will receive a notification to approve the login. Copy the contents of public key from PuTTYgen as shown below and paste it into$HOME/.ssh/authorized_keys. Please double-check that your text editor did not wrap or fold the pasted value (it should be one very long line).

The "Public key" will look like a long string of random letters and numbers in a text box at the top of the window. - Test by connecting to the cluster. If successful, you will not be prompted for a password or receive a Duo notification. If you protected your private key with a passphrase in step 2, you will instead be prompted to enter your chosen passphrase when connecting.
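Since the pasted key must stay on a single line, it can help to check authorized_keys on the cluster after editing it. The function below is a hypothetical helper (not an RCAC tool): it flags any non-comment line that does not contain a key type such as ssh-rsa followed by a base64 key blob. A key blob always begins with "AAAA", so the continuation lines left behind by a wrapped key are reported.

```shell
# Hypothetical helper: report lines in an authorized_keys file that do not
# look like a complete, single-line public key.
# Usage: check_authorized_keys [FILE]   (defaults to ~/.ssh/authorized_keys)
check_authorized_keys() {
  local file=${1:-$HOME/.ssh/authorized_keys}
  awk '
    /^[ \t]*$/ || /^[ \t]*#/ { next }   # skip blank lines and comments
    {
      ok = 0
      # A valid entry has a key type (ssh-rsa, ssh-ed25519, ecdsa-..., sk-...)
      # followed by a base64 key blob, which always begins with "AAAA".
      for (i = 1; i < NF; i++)
        if ($i ~ /^(ssh-|ecdsa-|sk-)/ && $(i + 1) ~ /^AAAA/) { ok = 1; break }
      if (!ok) {
        printf "line %d: does not look like a one-line public key\n", NR
        bad++
      }
    }
    END { exit (bad > 0) }   # non-zero status if any line was flagged
  ' "$file"
}
```

Run check_authorized_keys on a Gautschi front end with no argument to check $HOME/.ssh/authorized_keys; it prints nothing and returns 0 when every key is on one line.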

SSH X11 Forwarding

SSH supports tunneling of X11 (X-Windows). If you have an X11 server running on your local machine, you may use X11 applications on remote systems and have their graphical displays appear on your local machine. These X11 connections are tunneled and encrypted automatically by your SSH client.

Installing an X11 Server

To use X11, you will need to have a local X11 server running on your personal machine. Both free and commercial X11 servers are available for various operating systems.

Linux / Solaris / AIX / HP-UX / Unix:

- An X11 server is at the core of all graphical sessions. If you are logged in to a graphical environment on these operating systems, you are already running an X11 server.

- ThinLinc is an alternative to running an X11 server directly on your Linux computer. ThinLinc is a service that allows you to connect to a persistent remote graphical desktop session.

Microsoft Windows:

- ThinLinc is an alternative to running an X11 server directly on your Windows computer. ThinLinc is a service that allows you to connect to a persistent remote graphical desktop session.

- MobaXterm is a small, easy to use, full-featured SSH client. It includes X11 support for remote displays, SFTP capabilities, and limited SSH authentication forwarding for keys.

Mac OS X:

- X11 is available as an optional install on the Mac OS X install disks prior to 10.7/Lion. Run the installer, select the X11 option, and follow the instructions. For 10.7+ please download XQuartz.

- ThinLinc is an alternative to running an X11 server directly on your Mac computer. ThinLinc is a service that allows you to connect to a persistent remote graphical desktop session.

Enabling X11 Forwarding in your SSH Client

Once you are running an X11 server, you will need to enable X11 forwarding/tunneling in your SSH client:

- ssh: X11 tunneling is not always enabled by default. To be certain it is enabled, use ssh -Y.

- MobaXterm: Select "New session" and "SSH." Under "Advanced SSH Settings" check the box for X11 Forwarding.

SSH will set the remote environment variable $DISPLAY to "localhost:XX.YY" when this is working correctly. If you had previously set your $DISPLAY environment variable to your local IP or hostname, you must remove any set/export/setenv of this variable from your login scripts. The environment variable $DISPLAY must be left as SSH sets it, which is to a random local port address. Setting $DISPLAY to an IP or hostname will not work.
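A quick way to confirm that forwarding works is to inspect $DISPLAY after logging in with ssh -Y. The function below is a small sketch (the name check_x11_display is hypothetical) that accepts the localhost:XX.YY form SSH sets and warns about an unset or manually exported value.

```shell
# Hypothetical helper: check that $DISPLAY has the form SSH sets when X11
# forwarding works (localhost:XX.YY).
check_x11_display() {
  case "${DISPLAY:-}" in
    localhost:[0-9]*)
      echo "X11 forwarding looks active (DISPLAY=$DISPLAY)"
      ;;
    "")
      echo "DISPLAY is not set; reconnect with ssh -Y" >&2
      return 1
      ;;
    *)
      echo "DISPLAY=$DISPLAY does not look SSH-forwarded; remove any set/export/setenv of DISPLAY from your login scripts" >&2
      return 1
      ;;
  esac
}
```

Calling it in a fresh login session before starting a graphical application makes a stale DISPLAY setting from your login scripts easy to spot.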

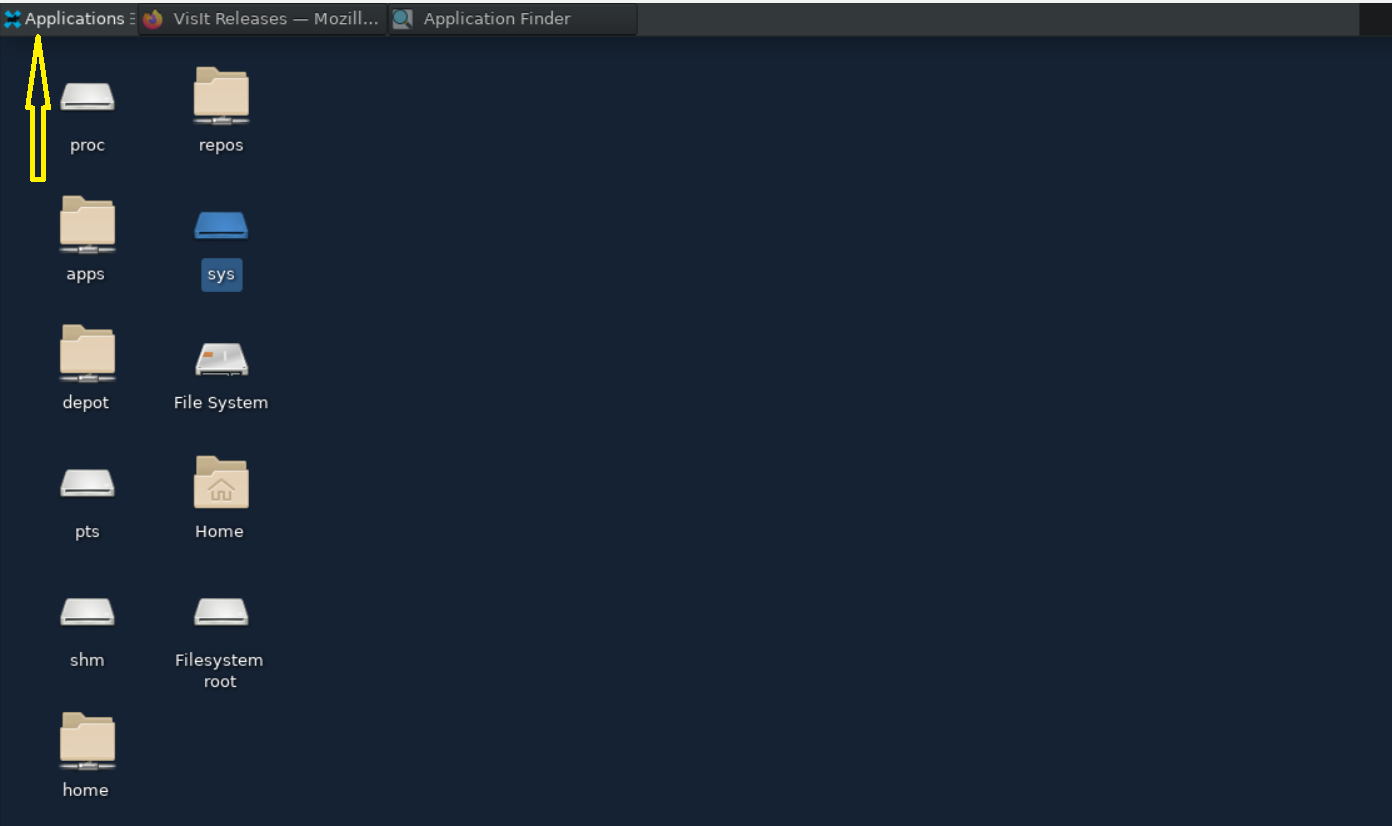

ThinLinc

RCAC provides Cendio's ThinLinc as an alternative to running an X11 server directly on your computer. It allows you to run graphical applications or graphical interactive jobs directly on Gautschi through a persistent remote graphical desktop session.

ThinLinc is a service that allows you to connect to a persistent remote graphical desktop session. This service works very well over a high latency, low bandwidth, or off-campus connection compared to running an X11 server locally. It is also very helpful for Windows users who do not have an easy to use local X11 server, as little to no set up is required on your computer.

There are two ways in which to use ThinLinc: preferably through the native client or through a web browser.

Installing the ThinLinc native client

The native ThinLinc client will offer the best experience especially over off-campus connections and is the recommended method for using ThinLinc. It is compatible with Windows, Mac OS X, and Linux.

- Download the ThinLinc client from the ThinLinc website.

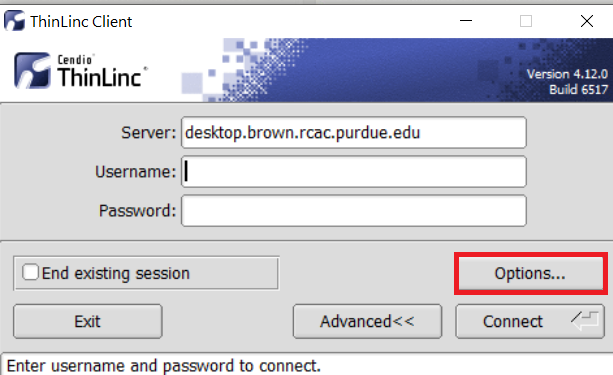

- Start the ThinLinc client on your computer.

- In the client's login window, use desktop.gautschi.rcac.purdue.edu as the Server. Use your Purdue Career Account username and password, but append ",push" to your password.

- Click the Connect button.

- Your Purdue Login Duo will receive a notification to approve your login.

- Continue to the following section on connecting to Gautschi from ThinLinc.

Using ThinLinc through your web browser

The ThinLinc service can be accessed from your web browser as an alternative to installing the native client. This option requires no setup and is a good choice on computers where you do not have privileges to install software. All that is required is an up-to-date web browser. Older versions of Internet Explorer may not work.

- Open a web browser and navigate to desktop.gautschi.rcac.purdue.edu.

- Log in with your Purdue Career Account username and password, but append ",push" to your password.

- You may safely proceed past any warning messages from your browser.

- Your Purdue Login Duo will receive a notification to approve your login.

- Continue to the following section on connecting to Gautschi from ThinLinc.

Connecting to Gautschi from ThinLinc

- Once logged in, you will be presented with a remote Linux desktop running directly on a cluster front-end.

- Open the terminal application on the remote desktop.

- Once logged in to the Gautschi head node, you may use graphical editors, debuggers, software like Matlab, or run graphical interactive jobs. For example, to test the X forwarding connection, issue the following command to launch the graphical editor gedit:

$ gedit

- This session will remain persistent even if you disconnect from the session. Any interactive jobs or applications you left running will continue running even if you are not connected to the session.

Tips for using ThinLinc native client

- To exit a full screen ThinLinc session press the F8 key on your keyboard (fn + F8 key for Mac users) and click to disconnect or exit full screen.

- Full screen mode can be disabled when connecting to a session by clicking the Options button and disabling full screen mode from the Screen tab.

Configure ThinLinc to use SSH Keys

- The web client does NOT support public-key authentication.

-

ThinLinc native client supports the use of an SSH key pair. For help generating and uploading keys to the cluster, see SSH Keys section in our user guide for details.

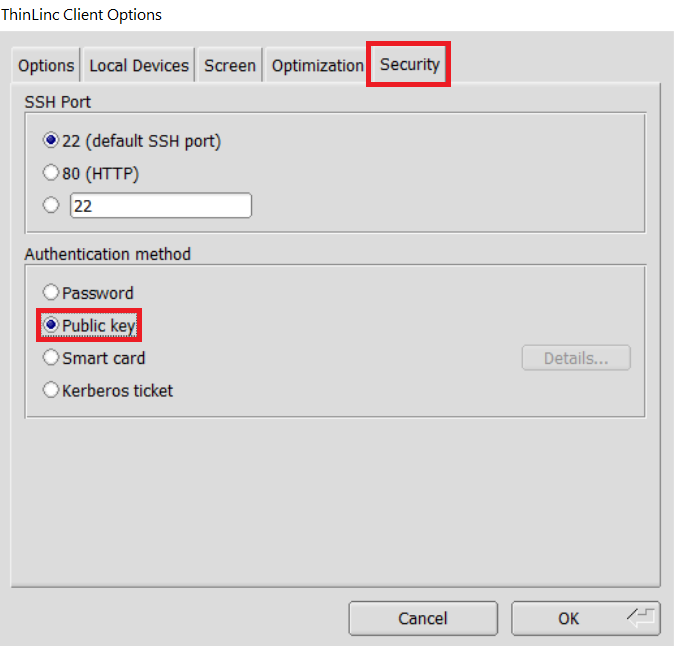

To set up SSH key authentication on the ThinLinc client:

-

Open the Options panel, and select Public key as your authentication method on the Security tab.

The "Options..." button in the ThinLinc Client can be found towards the bottom left, above the "Connect" button. -

In the options dialog, switch to the "Security" tab and select the "Public key" radio button:

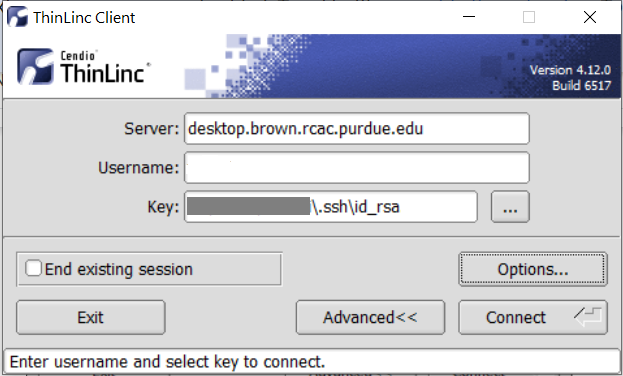

The "Security" tab found in the options dialog, will be the last of available tabs. The "Public key" option can be found in the "Authentication method" options group. - Click OK to return to the ThinLinc Client login window. You should now see a Key field in place of the Password field.

-

In the Key field, type the path to your locally stored private key or click the ... button to locate and select the key on your local system. Note: If PuTTY is used to generate the SSH Key pairs, please choose the private key in the openssh format.

The ThinLinc Client login window will now display key field instead of a password field.

-

Software

Environment module

Software catalog

Our clusters provide a number of software packages to users of the system via the module command.

Environment Management with the Module Command

The module command is the preferred method to manage your processing environment. With this command, you may load applications and compilers along with their libraries and paths. Modules are packages that you load and unload as needed.

Please use the module command and do not manually configure your environment, as staff may make changes to the specifics of various packages. If you use the module command to manage your environment, these changes will not be noticeable.

Hierarchy

Many modules have dependencies on other modules. For example, a particular openmpi module requires a specific version of the Intel compiler to be loaded. Often, these dependencies are not clear to users of the module, and there are many modules which may conflict. Arranging modules in a hierarchical fashion makes this dependency clear. This arrangement also helps make the software stack easy to understand - your view of the modules will not be cluttered with a bunch of conflicting packages.

Your default module view on Gautschi will include a set of compilers and a set of basic software that has no dependencies (such as Matlab and Fluent). To make software available that depends on a compiler, you must first load the compiler; the software that depends on it then becomes available to you. In this way, all the software you see when running module avail is compatible with each other.

Using the Hierarchy


To see what modules are available on this system by default:

$ module avail

To see which versions of a specific compiler are available on this system:

$ module avail gcc

$ module avail intel

To continue further into the hierarchy of modules, you will need to choose a compiler. As an example, if you are planning on using the Intel compiler you will first want to load the Intel compiler:

$ module load intel

With intel loaded, you can repeat the avail command, and at the bottom of the output you will see the section of additional software that the intel module provides:

$ module avail

Several of these new packages also provide additional software packages, such as MPI libraries. You can repeat the last two steps with one of the MPI packages such as openmpi and you will have a few more software packages available to you.

If you are looking for a specific software package and do not see it in your default view, the module command provides a search function for searching the entire hierarchy tree of modules without the need for you to manually load and avail on every module.

To search for a software package:

$ module spider openmpi

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

openmpi:

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Versions:

openmpi/4.1.6

openmpi/5.0.5

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

For detailed information about a specific "openmpi" package (including how to load the modules) use the module's full name.

Note that names that have a trailing (E) are extensions provided by other modules.

For example:

$ module spider openmpi/5.0.5

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

This searches for the openmpi software package. If you do not specify a version, you will be given a list of the versions available on the system. Select the version you wish to use and run spider on it to see how to access the module:

$ module spider openmpi/5.0.5

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

openmpi: openmpi/5.0.5

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

You will need to load all module(s) on any one of the lines below before the "openmpi/5.0.5" module is available to load.

gcc/11.4.1

gcc/14.1.0

Help:

An open source Message Passing Interface implementation. The Open MPI

Project is an open source Message Passing Interface implementation that

is developed and maintained by a consortium of academic, research, and

industry partners. Open MPI is therefore able to combine the expertise,

technologies, and resources from all across the High Performance

Computing community in order to build the best MPI library available.

Open MPI offers advantages for system and software vendors, application

developers and computer science researchers.

The output of this command will instruct you that you can load this module directly, or in the case of the above example, that you will need to first load a module or two. With the information provided with this command, you can now construct a load command to load a version of OpenMPI into your environment:

$ module load gcc/14.1.0 openmpi/5.0.5

Some user communities may maintain copies of their domain software for others to use. For example, the Purdue Bioinformatics Core provides a wide set of bioinformatics software for use by any user of RCAC clusters via the bioinfo module. The spider command will also search this repository of modules. If it finds a software package available in the bioinfo module repository, the spider command will instruct you to load the bioinfo module first.

Load / Unload a Module

All modules consist of both a name and a version number. When loading a module, you may use only the name to load the default version, or you may specify which version you wish to load.

For each cluster, RCAC makes a recommendation regarding the set of compiler, math library, and MPI library for parallel code. To load the recommended set:

$ module load rcac

To verify what you loaded:

$ module list

To load the default version of a specific compiler, choose one of the following commands:

$ module load gcc

$ module load intel

To load a specific version of a compiler, include the version number:

$ module load gcc/14.1.0

When running a job, you must load any relevant modules on the compute node(s) from within the job submission file. Loading modules on the front end before submitting your job makes the software available to your session on the front end, but not to your job submission script environment.
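As a sketch of loading modules inside the job rather than on the front end, the snippet below writes a minimal batch script that loads the GCC and OpenMPI versions from the module spider example. The file name myjob.sh, the program my_mpi_program, and the resource values are placeholders; the script deliberately leaves out the account, QOS, and partition, which Gautschi requires (see the Queues section).

```shell
# Write a minimal batch script; myjob.sh and my_mpi_program are placeholders.
cat > myjob.sh <<'EOF'
#!/bin/bash
#SBATCH --nodes=1
#SBATCH --ntasks=48
#SBATCH --time=00:30:00

# Load everything the program needs here, inside the job script;
# module purge first gives the job a predictable starting environment.
module purge
module load gcc/14.1.0 openmpi/5.0.5

# Launch the MPI program on the allocated tasks.
srun ./my_mpi_program
EOF
```

Submit it from a front end with sbatch, adding the account, QOS, and partition options, e.g. sbatch -A myaccount -q myqos -p mypartition myjob.sh (all three names hypothetical). squeue -u myusername then shows the job's state.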

To unload a compiler or software package you loaded previously:

$ module unload gcc

$ module unload intel

$ module unload matlab

To unload all currently loaded modules and reset your environment:

$ module purge

Show Module Details

To learn more about what a module does to your environment, you may use the module show command.

$ module show matlab

Here is an example showing what loading the default Matlab does to the processing environment:

-------------------------------------------------------------------------------------------------------------------------------------------

/opt/spack/modulefiles/Core/matlab/R2022a.lua:

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

whatis("Name : matlab")

whatis("Version : R2022a")

...

setenv("MATLAB_HOME","/apps/spack/gautschi/apps/matlab/R2022a-gcc-8.5.0-u54n6sa")

setenv("RCAC_MATLAB_ROOT","/apps/spack/gautschi/apps/matlab/R2022a-gcc-8.5.0-u54n6sa")

setenv("RCAC_MATLAB_VERSION","R2022a")

setenv("MATLAB","/apps/spack/gautschi/apps/matlab/R2022a-gcc-8.5.0-u54n6sa")

setenv("MLROOT","/apps/spack/gautschi/apps/matlab/R2022a-gcc-8.5.0-u54n6sa")

setenv("ARCH","glnxa64")

append_path("PATH","/apps/spack/gautschi/apps/matlab/R2022a-gcc-8.5.0-u54n6sa/bin/glnxa64:/apps/spack/gautschi/apps/matlab/R2019a-gcc-4.8.5-jg35hvf/bin")

append_path("CMAKE_PREFIX_PATH","/apps/spack/gautschi/apps/matlab/R2022a-gcc-8.5.0-u54n6sa/")

append_path("LD_LIBRARY_PATH","/apps/spack/gautschi/apps/matlab/R2022a-gcc-8.5.0-u54n6sa/runtime/glnxa64:/apps/spack/gautschi/apps/matlab/R2022a-gcc-8.5.0-u54n6sa/bin/glnxa64")

GPU modules

Gautschi uses a separate module branch for GPU applications. To use the GPU modules, first load the GPU module branch:

$ module load modtree/gpu

After loading modtree/gpu, you can use module spider to search for modules, or module load to add the desired module to your environment.
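Because modules have to be loaded inside the job submission file, a GPU job needs to switch to the GPU branch there as well. The sketch below writes such a script; the file name gpujob.sh, the --gpus-per-node=1 request, and the other resource values are assumptions to adapt, and the account, QOS, and partition still have to be supplied as described in the Queues section.

```shell
# Write a minimal GPU batch script; gpujob.sh and the resource values are placeholders.
cat > gpujob.sh <<'EOF'
#!/bin/bash
#SBATCH --nodes=1
#SBATCH --gpus-per-node=1
#SBATCH --time=00:30:00

# GPU software lives in a separate module branch, so switch to it first;
# then load the modules you found with "module spider".
module purge
module load modtree/gpu

# List the GPUs visible to this job.
nvidia-smi
EOF
```

nvidia-smi only serves to confirm that a GPU was allocated; replace it with your own program once the environment works.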


Running Jobs

There is one method for submitting jobs to Gautschi: use SLURM to submit jobs to a partition. SLURM performs job scheduling. Jobs may be any type of program. You may use either the batch or interactive mode to run your jobs. Use the batch mode for finished programs; use the interactive mode only for debugging.

In this section, you'll find a few pages describing the basics of creating and submitting SLURM jobs, as well as a number of example SLURM jobs that you may be able to adapt to your own needs.

Basics of SLURM Jobs

The Simple Linux Utility for Resource Management (SLURM) is a system providing job scheduling and job management on compute clusters. With SLURM, a user requests resources and submits a job to a queue. The system will then take jobs from queues, allocate the necessary nodes, and execute them.

Do NOT run large, long, multi-threaded, parallel, or CPU-intensive jobs on a front-end login host. All users share the front-end hosts, and running anything but the smallest test job will negatively impact everyone's ability to use Gautschi. Always use SLURM to submit your work as a job.

Submitting a Job

The main steps to submitting a job are:

Follow the links below for information on these steps, and other basic information about jobs. A number of example SLURM jobs are also available.

Queues

On Gautschi, the required options for job submission differ from those of some of the other community clusters you might have experience using. In general, every job submission will have four parts:

- The number and type of resources you want

- The account the resources should come out of

- The quality of service (QOS) this job expects from the resources

- The partition where the resources are located

If you have used other clusters, you will be familiar with the first item. If you have not, you can read about how to format the request on our job submission page. The rest of this page focuses on the last three items, as they are specific to Gautschi.

Accounts

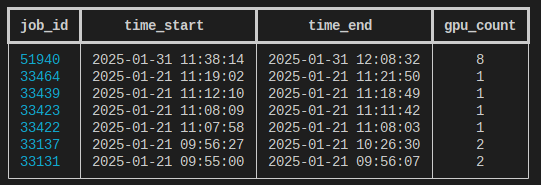

On the Gautschi community cluster, users will have access to one or more accounts, also known as queues. These accounts are dedicated to and named after each partner who has purchased access to the cluster, and they provide partners and their researchers with priority access to their portion of the cluster. These accounts can be thought of as bank accounts that contain the resources a group has purchased access to which may include some number of cores, GPU hours or both. To see the list of accounts that you have access to on Gautschi as well as the resources they contain, you can use the command "slist".

On previous clusters, you may have had access to several other special accounts like "standby", "highmem", "gpu", etc. On Gautschi, these modes of access still exist, but they are no longer accounts. Also, on Gautschi you must explicitly specify the account that you want to submit to using the "-A/--account=" option.

Quality of Service (QOS)

On Gautschi, we use a Slurm concept called a Quality of Service, or QOS. A QOS can be thought of as a tag on a job that tells the scheduler how that job should be treated with respect to limits, priority, etc. The cluster administrators define the available QOSes as well as the policies for how each QOS should be treated on the cluster. A toy example would be a QOS named "highpriority" whose policy is "no single user can have more than 200 jobs". There are two classes of QOSes, and a job can have both:

- Partition QOSes: A partition QOS is a tag that is automatically added to your job when you submit to a partition that defines a partition QOS.

- Job QOSes: A Job QOS is a tag that you explicitly give to a job using the option "-q/--qos=". By explicitly tagging your jobs this way, you can choose the policy that each one of your jobs should abide by. We will describe the policies for the available job QOSes in the partition section below.

As an extended metaphor, if we think of a job as a package that we need to have shipped to some destination, then the partition can be thought of as the carrier we decide to ship our package with. That carrier is going to have some company policies that dictate how you need to label/pack that package, and that company policy is like the partition QOS. It is the policy that is enforced for simply deciding to submit to a particular partition. The Job QOS can then be thought of as the various different types of shipping options that carrier might offer. You might pay extra to have that package shipped overnight or have tracking information for it or you may choose to pay less and have your package arrive as available. Once we decide to go with a particular carrier, we are subject to their company policy, but we may negotiate how they handle that package by choosing one of their available shipping options. In the same way, when you choose to submit to a partition, you are subject to the limits enforced by the partition QOS and you may be able to ask for your job to be handled a particular way by specifying the job QOS, but that option has to be offered by the partition.

For a job to use a Job QOS, three conditions must hold: the user submitting the job must have access to the QOS, the job's account must accept the QOS, and the job's partition must accept the QOS. Every user and every account on Gautschi has access to the following job QOSes:

- "normal": The "normal" QOS is the default job QOS on the cluster meaning if you do not explicitly list an alternative job QOS, your job will be tagged with this QOS. The policy for this QOS provides a high priority and does not add any additional limits.

- "standby": The "standby" QOS must be requested explicitly with the option "-q standby" or "--qos=standby". The policy for this QOS gives access to idle resources on the cluster. Jobs tagged with this QOS are "low priority" jobs and may run for at most four hours at a time; however, the resources these jobs use do not count against the resources in your account. For users of our previous clusters, this QOS replaces the old -A standby style of submission.

Some of these QOSes may not be available in every partition. Each of the partitions in the following section will enumerate which of these QOSes are allowed in the partition.

Partitions

On Gautschi, the various types of nodes on the cluster are organized into distinct partitions. This allows CPU and GPU nodes to be charged separately and differently. It also means that you must specify the desired partition as well as the account name in the job script. Each partition has its own limitations and a specific use case, described below.

CPU Partition

This partition contains the resources a group gains access to when it purchases CPU resources on Gautschi. It is made up of 336 Gautschi-A nodes. Each node has two Zen 4 AMD EPYC 9654 96-core processors (192 cores in total) and 384 GB of memory, giving more than 64,000 cores in the partition. Memory is allocated in proportion to your core request, at about 2 GB per requested core. To submit to this partition, use the option "-p cpu" or "--partition=cpu".

The purchasing model for this partition allows groups to purchase high-priority access to some number of cores. When an account uses resources in this partition by submitting a job tagged with the "normal" QOS, the cores used by that job are withdrawn from the account and deposited back into the account when the job terminates.

When using the CPU partition, jobs are tagged by the "normal" QOS by default, but they can be tagged with the "standby" QOS if explicitly submitted using the "-q standby" or "--qos=standby" option.

- Jobs tagged with the "normal" QOS are subject to the following policies:
  - Jobs have a high priority and should not need to wait very long before starting.
  - Any cores requested by these jobs are withdrawn from the account until the job terminates.
  - These jobs can run for up to two weeks at a time.

- Jobs tagged with the "standby" QOS are subject to the following policies:
  - Jobs have a low priority and there is no expectation of job start time. If the partition is very busy with jobs using the "normal" QOS or if you are requesting a very large job, then jobs using the "standby" QOS may take hours or days to start.
  - These jobs can use idle resources on the cluster and as such cores requested by these jobs are not withdrawn from the account to which they were submitted.
  - These jobs can run for up to four hours at a time.

Groups who purchased GPU resources on Gautschi can run jobs in this partition tagged with the "standby" QOS described above. However, they cannot submit jobs tagged with the "normal" QOS unless they have also purchased CPU resources on the cluster.

Available QOSes: "normal", "standby"

AI Partition

This partition contains the resources a group gains access to when it purchases GPU resources on Gautschi. It is made up of 20 Gautschi-H nodes. Each node has two Intel Xeon Platinum 8480+ 56-core processors and eight NVLinked H100s, giving 160 H100s in the partition. CPU memory is allocated in proportion to your core request, at about 9 GB per requested core. To submit to this partition, use the option "-p ai" or "--partition=ai".

The purchasing model for this partition allows groups to purchase access to GPUs. When a group purchases access to one GPU for a year, it is given 365*24 = 8,760 hours of GPU time on the partition. Tracking resources this way means that a group needing several GPUs for a project can use them at once without purchasing additional GPUs; it simply consumes its GPU hours faster. When an account uses resources in this partition, its balance of GPU hours is normally charged one GPU hour for each GPU the job uses per hour, tracked by the minute. Some QOSes described below charge usage at a lower rate.
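As a worked example of this accounting, the hypothetical bash helper below applies the rates stated in this section: 1 GPU hour per GPU per hour for the "normal" QOS, and 0.25 for the "preemptible" QOS listed below. The helper, gpu_hours_charged, is not an RCAC tool. It takes the run time in minutes, because usage is tracked by the minute. The scheduler determines the actual charges, so treat the results as an estimate only.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not an RCAC command): estimate the GPU hours charged
# to an account on the ai partition, using the per-QOS rates quoted above.
gpu_hours_charged() {
  local gpus=$1 minutes=$2 qos=$3 rate
  case "$qos" in
    normal)      rate=1 ;;     # 1 GPU hour per GPU per hour
    preemptible) rate=0.25 ;;  # 0.25 GPU hour per GPU per hour
    *) echo "unknown QOS: $qos" >&2; return 1 ;;
  esac
  # awk handles the fractional rate; usage is converted from minutes to hours.
  awk -v g="$gpus" -v m="$minutes" -v r="$rate" 'BEGIN { printf "%.2f\n", g * m / 60 * r }'
}

echo $((365 * 24))                    # one GPU-year of purchased time: 8760
gpu_hours_charged 4 1440 normal       # 4 GPUs for 24 hours: 96.00
gpu_hours_charged 4 1440 preemptible  # same job, preemptible QOS: 24.00
```

The same four-GPU, one-day job costs a quarter as much under "preemptible". This is the trade-off the QOS offers in exchange for the risk of cancellation.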

When using the AI partition, jobs are tagged by the "normal" QOS by default, but they can be tagged with the "preemptible" QOS if explicitly submitted using the "-q preemptible" or "--qos=preemptible" option.

- Jobs tagged with the "normal" QOS are subject to the following policies:
  - Jobs have a high priority and should not need to wait very long before starting.
  - The account the job is submitted to will be charged 1 GPU hour for each GPU this job uses per hour (this is tracked by the minute).
  - These jobs can run for up to two weeks at a time.

- Jobs tagged with the "preemptible" QOS are subject to the following policies:
  - Jobs have a low priority and there is no expectation of job start time. If the partition is very busy with jobs using the "normal" QOS or if you are requesting a very large job, then jobs using the "preemptible" QOS may take hours or days to start.
  - The account the job is submitted to will be charged 0.25 GPU hour for each GPU this job uses per hour (this is tracked by the minute). This effectively allows jobs to use 4x as many resources as a job tagged with the "normal" QOS for the same cost.
  - These jobs can run for up to two weeks at a time.
  - If there are not enough idle resources in this partition for a job tagged with the "normal" QOS to start, this job may be cancelled to make room for that job. It is therefore imperative to use checkpointing with this QOS.

Available QOSes: "normal", "preemptible"
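A "preemptible" job may be cancelled at any time, so it should be able to pick up where it left off when resubmitted. Below is a minimal sketch of a resumable job script. It assumes that your program writes its own checkpoint files ending in .ckpt into a checkpoint directory. The program name my_training_program and its --checkpoint-dir and --resume flags are hypothetical placeholders for your application's own options. If the job is cancelled, submitting the same script again resumes from the newest checkpoint.

```bash
#!/bin/bash
#SBATCH --account=accountname
#SBATCH --partition=ai
#SBATCH --qos=preemptible
#SBATCH --nodes=1
#SBATCH --gpus-per-node=1
#SBATCH --ntasks=14
#SBATCH --time=24:00:00

# Hypothetical checkpoint location; the program is assumed to write *.ckpt files here.
CKPT_DIR="$SLURM_SUBMIT_DIR/checkpoints"
mkdir -p "$CKPT_DIR"

# Pick the most recently modified checkpoint, if any exist.
LATEST=$(ls -t "$CKPT_DIR"/*.ckpt 2>/dev/null | head -n 1)

if [ -n "$LATEST" ]; then
    # Resume from the newest checkpoint (placeholder flags).
    ./my_training_program --checkpoint-dir "$CKPT_DIR" --resume "$LATEST"
else
    # No checkpoint yet: start from the beginning.
    ./my_training_program --checkpoint-dir "$CKPT_DIR"
fi
```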

Highmem Partition

This partition is made up of 6 Gautschi-B nodes, which have four times as much memory as a standard Gautschi-A node. All accounts on the cluster have access to this partition, to support work with higher memory requirements. Each node has two Zen 4 AMD EPYC 9654 96-core processors (192 cores in total) and 1.5 TB of memory. Memory is allocated in proportion to your core request, at about 8 GB per requested core. To submit to this partition, use the option "-p highmem" or "--partition=highmem".

When using the Highmem partition, jobs are tagged with the "normal" QOS by default. It is the only QOS available for this partition, so there is no need to specify one. Jobs are also tagged with a highmem partition QOS that enforces the following policies:

- There is no expectation of job start time. These nodes are a shared resource, given as a bonus to groups that purchase high-priority access to resources on Gautschi.

- You can have 2 jobs running in this partition at once.

- You can have 8 jobs submitted to this partition at once.

- Your jobs must use more than 48 of the 192 cores on the node. At about 8 GB per core, a job using 48 or fewer cores would receive at most about 384 GB of memory, which fits on a standard Gautschi-A node.

- These jobs can run for up to 24 hours at a time.

Available QOSes: "normal"

Profiling Partition

This partition is made up of 2 Gautschi-A nodes with hardware performance counters enabled. These counters let profiling applications such as AMD uProf track CPU performance events, such as L3 cache events and speculative execution misses. Because the counters give greater visibility into the execution of every process on a node, a job can only use these nodes if it uses the entire node. To submit to this partition, use the option "-p profiling" or "--partition=profiling".

When using the profiling partition, jobs are tagged with the "normal" QOS by default. It is the only QOS available for this partition, so there is no need to specify one. Jobs are also tagged with a profiling partition QOS that enforces the following policies:

- There is no expectation of job start time. These nodes are a shared resource, given as a bonus to groups that purchase high-priority access to resources on Gautschi.

- You can have 1 job running in this partition at once.

- Even if your job runs only a single process on one of these nodes, you must request that entire node when submitting to this partition.

- These jobs can run for up to 24 hours at a time.

This partition is reserved for groups profiling their applications, and we monitor it to make sure it is not simply being used for extra compute.

Available QOSes: "normal"

Smallgpu Partition

This partition is made up of 6 Gautschi-G nodes. Each node has two NVIDIA L40s, two Zen 4 AMD EPYC 9554 64-core processors (128 cores in total), and 384 GB of memory. Memory is allocated in proportion to your core request, at about 3 GB per requested core. Request cores in proportion to the number of GPUs you use in this partition. For example, if you only need one of the two GPUs, request half of the node's cores. To submit to this partition, use the option "-p smallgpu" or "--partition=smallgpu".

When using the smallgpu partition, jobs are tagged with the "normal" QOS by default. It is the only QOS available for this partition, so there is no need to specify one. Jobs are also tagged with a smallgpu partition QOS that enforces the following policies:

- There is no expectation of job start time. These nodes are a shared resource, given as a bonus to groups that purchase high-priority access to resources on Gautschi.

- You can use up to 2 GPUs in this partition at once.

- You can have 8 jobs submitted to this partition at once.

- These jobs can run for up to 24 hours at a time.

Available QOSes: "normal"
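Each partition above allocates memory in proportion to the cores you request. To see roughly how much memory a core request buys, you can multiply by the per-core figure. The sketch below does this with a hypothetical bash helper, est_mem_gb, which is not an RCAC command. The per-core values are the approximate ones quoted for each partition, so real allocations may differ slightly. The profiling partition is left out because its jobs always use a whole node.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not an RCAC command): approximate the CPU memory (GB)
# a job receives, from the per-core figures quoted for each partition.
est_mem_gb() {
  local partition=$1 cores=$2 per_core
  case "$partition" in
    cpu)      per_core=2 ;;  # Gautschi-A: 384 GB / 192 cores
    highmem)  per_core=8 ;;  # Gautschi-B: ~1.5 TB / 192 cores
    smallgpu) per_core=3 ;;  # Gautschi-G: 384 GB / 128 cores
    ai)       per_core=9 ;;  # Gautschi-H: ~1031 GB / 112 cores
    *) echo "unknown partition: $partition" >&2; return 1 ;;
  esac
  echo $(( cores * per_core ))
}

est_mem_gb cpu 48      # 96
est_mem_gb highmem 64  # 512
est_mem_gb ai 14       # 126
```

If a job needs more memory per core than its partition provides, request more cores in that partition. If the total would exceed a standard node, consider the highmem partition.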

Job Submission Script

To submit work to a SLURM queue, you must first create a job submission file. This job submission file is essentially a simple shell script. It will set any required environment variables, load any necessary modules, create or modify files and directories, and run any applications that you need:

```bash
#!/bin/bash
# FILENAME: myjobsubmissionfile

# Loads Matlab and sets the application up
module load matlab

# Change to the directory from which you originally submitted this job.
cd $SLURM_SUBMIT_DIR

# Runs a Matlab script named 'myscript'
matlab -nodisplay -singleCompThread -r myscript
```

Once your script is prepared, you are ready to submit your job.

Job Script Environment Variables

| Name | Description |
|---|---|
| SLURM_SUBMIT_DIR | Absolute path of the current working directory when you submitted this job |
| SLURM_JOBID | Job ID number assigned to this job by the batch system |
| SLURM_JOB_NAME | Job name supplied by the user |
| SLURM_JOB_NODELIST | Names of nodes assigned to this job |
| SLURM_CLUSTER_NAME | Name of the cluster executing the job |
| SLURM_SUBMIT_HOST | Hostname of the system where you submitted this job |
| SLURM_JOB_PARTITION | Name of the partition to which you submitted this job |
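As an illustration, the job script below records these variables at the start of a job, which can help when debugging later. The account name and resource requests are placeholders taken from the examples on this page.

```bash
#!/bin/bash
#SBATCH --account=accountname
#SBATCH --partition=cpu
#SBATCH --qos=normal
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --time=00:05:00

# Log where and how this job ran, using the variables from the table above.
echo "Job ID:         $SLURM_JOBID"
echo "Job name:       $SLURM_JOB_NAME"
echo "Cluster:        $SLURM_CLUSTER_NAME"
echo "Partition:      $SLURM_JOB_PARTITION"
echo "Nodes:          $SLURM_JOB_NODELIST"
echo "Submitted from: $SLURM_SUBMIT_HOST:$SLURM_SUBMIT_DIR"

# Work in the submission directory; stop if it is unavailable.
cd "$SLURM_SUBMIT_DIR" || exit 1
```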

Submitting a Job

Once you have a job submission file, you may submit this script to SLURM using the sbatch command. SLURM will find, or wait for, available resources matching your request and run your job there.

On Gautschi, to submit jobs you need to specify the partition, account, and Quality of Service (QoS) to which you want to submit them. To familiarize yourself with the partitions and QoS levels available on Gautschi, visit Gautschi Queues and Partitions. Use the showpartitions command to check the available partitions on Gautschi, and the slist command to check your available accounts. Slurm uses the option -A or --account= to specify the batch account, -p or --partition= to select a partition, and -q or --qos= to select a QoS.

```
showpartitions
Partition statistics for cluster gautschi at Fri Dec 6 05:26:30 PM EST 2024
Partition       #Nodes      #CPU_cores    Cores_pending Job_Nodes MaxJobTime Cores Mem/Node
Name      State Total Idle  Total  Idle   Resorc Other Min Max   Day-hr:mn /node (GB)
ai        up    20    20    2240   2240   0      0     1   infin infinite  112   1031
cpu       up    336   300   64512  57600  0      0     1   infin infinite  192   386
highmem   up    6     6     1152   1152   0      0     1   infin infinite  192   1547
smallgpu  up    6     6     768    768    0      0     1   infin infinite  128   386
profiling up    2     2     384    384    0      0     1   infin infinite  192   386
```

CPU Partition

The CPU partition on Gautschi has two Quality of Service (QoS) levels: normal and standby. To submit your job to one compute node in the cpu partition with the 'normal' QoS, which has high priority:

$ sbatch --nodes=1 --ntasks=1 --partition=cpu --account=accountname --qos=normal myjobsubmissionfile

$ sbatch -N1 -n1 -p cpu -A accountname -q normal myjobsubmissionfile

To submit your job to one compute node in the cpu partition with the 'standby' QoS, which has low priority:

$ sbatch --nodes=1 --ntasks=1 --partition=cpu --account=accountname --qos=standby myjobsubmissionfile

$ sbatch -N1 -n1 -p cpu -A accountname -q standby myjobsubmissionfile

AI Partition

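The AI partition on Gautschi has two Quality of Service (QoS) levels: normal and preemptible. Each Gautschi-H node has 112 cores and eight GPUs, so the examples below request 14 tasks (one eighth of the node's cores) for one GPU. To submit your job to one compute node in the ai partition, requesting one GPU, with the 'normal' QoS, which has high priority: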

$ sbatch --nodes=1 --gpus-per-node=1 --ntasks=14 --partition=ai --account=accountname --qos=normal myjobsubmissionfile

$ sbatch -N1 --gpus-per-node=1 -n14 -p ai -A accountname -q normal myjobsubmissionfile

To submit the same request with the 'preemptible' QoS, which has low priority and may be cancelled to make room for 'normal' jobs:

$ sbatch --nodes=1 --gpus-per-node=1 --ntasks=14 --partition=ai --account=accountname --qos=preemptible myjobsubmissionfile

$ sbatch -N1 --gpus-per-node=1 -n14 -p ai -A accountname -q preemptible myjobsubmissionfile

Highmem Partition

To submit your job to a compute node in the highmem partition, you don’t need to specify the QoS name because only one QoS exists for this partition, and the default is normal. However, the highmem partition is meant for jobs whose memory requirements exceed the capacity of a standard node, so your job must request more than 48 of the node's 192 cores.
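For example, the command below requests 64 tasks on one highmem node, which is above the 48-core minimum. At roughly 8 GB per core, this corresponds to about 512 GB of memory, more than a standard Gautschi-A node's 384 GB. The task count and account name are illustrative placeholders, so adjust them to your job's actual memory needs.

```bash
sbatch --nodes=1 --ntasks=64 --partition=highmem --account=accountname myjobsubmissionfile
```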

Profiling Partition

To submit your job to a compute node in the profiling partition, you also don’t need to specify the QoS name, because only one QoS exists for this partition and the default is normal. Because jobs in this partition must use an entire node, request all 192 of the node's cores:

$ sbatch --nodes=1 --ntasks=192 --partition=profiling --account=accountname myjobsubmissionfile

$ sbatch -N1 -n192 -p profiling -A accountname myjobsubmissionfile

Smallgpu Partition

To submit your job to a compute node on the smallgpu partition, you don’t need to specify the QoS name because only one QoS exists for this partition, and the default is normal. You should request cores proportional to the number of GPUs you are using in this partition (i.e. if you only need one of the two GPUs, you should request half of the cores on the node).

$ sbatch --nodes=1 --ntasks=64 --gpus-per-node=1 --partition=smallgpu --account=accountname myjobsubmissionfile

$ sbatch -N1 -n64 --gpus-per-node=1 -p smallgpu -A accountname myjobsubmissionfile

General Information

By default, each job receives 30 minutes of wall time (clock time). If you know your job will not need more than a certain amount of time, request less than the maximum wall time, as this may allow your job to run sooner. To request 1 hour and 30 minutes of wall time:

$ sbatch -t 01:30:00 -N1 -n1 -p cpu -A accountname -q standby myjobsubmissionfile

The --nodes= or -N value indicates how many compute nodes you would like for your job, and --ntasks= or -n value indicates the number of tasks you want to run.

In some cases, you may want to request multiple nodes. To utilize multiple nodes, you will need to have a program or code that is specifically programmed to use multiple nodes such as with MPI. Simply requesting more nodes will not make your work go faster. Your code must support this ability.

To request 2 compute nodes:

$ sbatch -t 01:30:00 -N2 -n16 -p cpu -A accountname -q standby myjobsubmissionfile

By default, jobs on Gautschi will share nodes with other jobs.

If more convenient, you may also specify any command line options to sbatch from within your job submission file, using a special form of comment:

```bash
#!/bin/sh -l
# FILENAME: myjobsubmissionfile

#SBATCH --account=accountname
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --partition=cpu
#SBATCH --qos=normal
#SBATCH --time=1:30:00
#SBATCH --job-name myjobname

# Print the hostname of the compute node on which this job is running.
/bin/hostname
```

If an option is present in both your job submission file and on the command line, the option on the command line will take precedence.
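For example, suppose myjobsubmissionfile contains the directive "#SBATCH --time=1:30:00", as in the script above. Submitting it as shown below gives the job a 30-minute limit, because the command-line value overrides the directive.

```bash
# The command-line --time overrides the #SBATCH --time directive in the file.
sbatch --time=00:30:00 myjobsubmissionfile
```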

After you submit your job with sbatch, it may wait in the queue for minutes, hours, or even weeks. How long a job takes to start depends on the specific queue, the resources and time requested, and the other jobs already waiting in that queue. It is impossible to say for sure when any given job will start. For best results, request no more resources than your job requires.

Once your job is submitted, you can monitor the job status, wait for the job to complete, and check the job output.

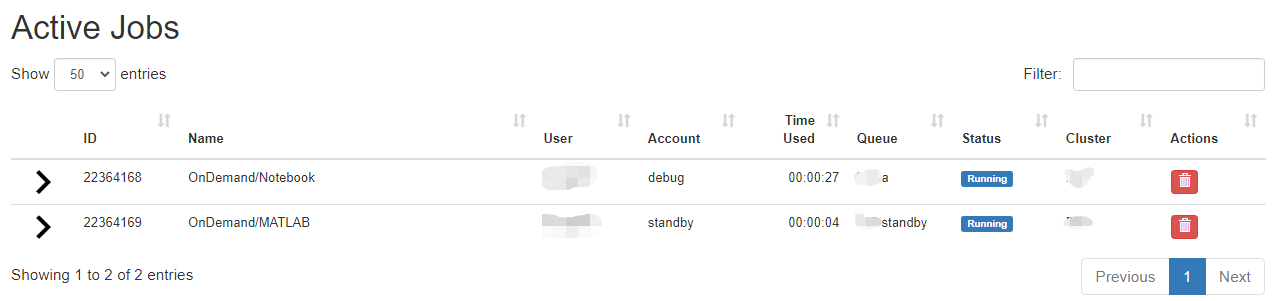

Checking Job Status

Once a job is submitted there are several commands you can use to monitor the progress of the job.

To see your jobs, use the squeue -u command and specify your username:

(Remember, in our SLURM environment a queue is referred to as an 'Account')

```
squeue -u myusername
JOBID  USER       ACCOUNT      PART QOS     NAME NODES TRES_PER_NODE CPUS TIME_LIMIT ST TIME
182792 myusername account_name cpu  standby job1 1     N/A           192  4:00:00    R  35:54
185841 myusername account_name cpu  normal  job2 1     N/A           192  4:00:00    R  36:51
185844 myusername account_name smal normal  job3 1     gres/gpu:1    64   8:00:00    R  22:11
```
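squeue also accepts standard Slurm filters that are handy here. The commands below limit the listing to your pending jobs and show Slurm's estimated start time for each one, or list every job charged to an account. The start estimate is only the scheduler's current guess and can change as other jobs start and finish. The account name is a placeholder.

```bash
# Your pending jobs, with the scheduler's current start-time estimates.
squeue -u myusername -t PENDING --start

# Every job charged to a given account (placeholder name).
squeue -A accountname
```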

To retrieve useful information about your queued or running job, use the scontrol show job command with your job's ID number. The output should look similar to the following:

```
scontrol show job 3519
JobId=3519 JobName=job.sub
   UserId=myusername GroupId=mygroup MCS_label=N/A
   Priority=1000 Nice=0 Account=myaccount QOS=normal
   JobState=RUNNING Reason=None Dependency=(null)
   Requeue=1 Restarts=0 BatchFlag=0 Reboot=0 ExitCode=0:0
   RunTime=00:00:23 TimeLimit=04:00:00 TimeMin=N/A
   SubmitTime=2025-02-24T14:31:19 EligibleTime=2025-02-24T14:31:19
   AccrueTime=2025-02-24T14:31:19
   StartTime=2025-02-24T14:32:36 EndTime=2025-02-24T18:32:36 Deadline=N/A
   PreemptEligibleTime=2025-02-24T14:32:36 PreemptTime=None
   SuspendTime=None SecsPreSuspend=0 LastSchedEval=2025-02-24T14:32:36 Scheduler=Backfill
   Partition=cpu AllocNode:Sid=login02:4061246
   ReqNodeList=(null) ExcNodeList=(null)
   NodeList=a166
   BatchHost=a166
   NumNodes=1 NumCPUs=4 NumTasks=4 CPUs/Task=1 ReqB:S:C:T=0:0:*:*
   ReqTRES=cpu=4,mem=7668M,node=1,gres/hp_cpu=4
   AllocTRES=cpu=4,mem=7668M,node=1,gres/hp_cpu=4
   Socks/Node=* NtasksPerN:B:S:C=0:0:*:* CoreSpec=*
   MinCPUsNode=1 MinMemoryCPU=1917M MinTmpDiskNode=0
   Features=(null) DelayBoot=00:00:00
   OverSubscribe=OK Contiguous=0 Licenses=(null) Network=(null)
   Command=/bin/sh
   WorkDir=/home/myusername
   TresPerJob=gres/hp_cpu=4
```

There are several useful bits of information in this output.

- JobState shows whether the job is Pending, Running, Completed, or Held.
- RunTime and TimeLimit show how long the job has run and its maximum run time.
- SubmitTime is when the job was submitted to the cluster.
- NumNodes, NumCPUs, NumTasks, and CPUs/Task show the number of nodes, CPUs, tasks, and CPUs per task allocated to the job.
- WorkDir is the job's working directory.

Checking Job Output

Once a job is submitted, and has started, it will write its standard output and standard error to files that you can read.

SLURM captures output written to standard output and standard error, which is what your program would print to the screen if you ran it interactively. Unless you specify otherwise, SLURM writes this output to a file in the directory where you submitted the job. The file is named slurm- followed by the job ID, with the extension .out, for example slurm-3509.out. By default, stdout and stderr are both written to this same file.

If your program writes its own output files, those files will be created as defined by the program. This may be in the directory where the program was run, or may be defined in a configuration or input file. You will need to check the documentation for your program for more details.

Redirecting Job Output

It is possible to redirect job output to somewhere other than the default location with the --error and --output directives:

```bash
#!/bin/bash
#SBATCH --output=/home/myusername/joboutput/myjob.out
#SBATCH --error=/home/myusername/joboutput/myjob.out

# This job prints "Hello World" to output and exits
echo "Hello World"
```
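With a fixed file name, every run overwrites the same file. Slurm's filename patterns avoid this: %x expands to the job name and %j to the job ID. The sketch below also splits standard output and standard error into separate files. Slurm does not create missing directories for these files, so create the joboutput directory (for example, with mkdir -p) before submitting. The paths are placeholders.

```bash
#!/bin/bash
#SBATCH --output=/home/myusername/joboutput/%x-%j.out
#SBATCH --error=/home/myusername/joboutput/%x-%j.err

# Written to the .out file
echo "Hello World"
# Written to the .err file
echo "Something went wrong" >&2
```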

Holding a Job

Sometimes you may want to submit a job but not have it run just yet. For example, you may want to let lab mates cut in front of you in the queue: hold your job until their jobs have started, and then release yours.

To place a hold on a job before it starts running, use the scontrol hold job command:

$ scontrol hold job myjobid

Once a job has started running, it cannot be placed on hold.

To release a hold on a job, use the scontrol release job command:

$ scontrol release job myjobid

You can find the job ID using the squeue command, as explained in the Checking Job Status section.
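You can also place the hold at submission time, so the job never has a chance to start early. sbatch's --hold option submits the job in a held state, and it stays pending until you release it with the same scontrol command as above.

```bash
# Submit the job already held; it stays pending until released.
sbatch --hold myjobsubmissionfile

# When you are ready for it to run, release it using the job ID sbatch printed.
scontrol release job myjobid
```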

Job Dependencies

Dependencies are an automated way of holding and releasing jobs. A job with a dependency is held until its condition is satisfied. Only then does it become eligible to run, and it must still wait in the queue as normal.

Job dependencies may be configured to ensure jobs start in a specified order. Jobs can be configured to run after other job state changes, such as when the job starts or the job ends.

These examples illustrate setting dependencies in several ways. Typically dependencies are set by capturing and using the job ID from the last job submitted.

To run a job after job myjobid has started:

sbatch --dependency=after:myjobid myjobsubmissionfile

To run a job after job myjobid ends without error:

sbatch --dependency=afterok:myjobid myjobsubmissionfile

To run a job after job myjobid ends with errors:

sbatch --dependency=afternotok:myjobid myjobsubmissionfile

To run a job after job myjobid ends with or without errors:

sbatch --dependency=afterany:myjobid myjobsubmissionfile

To set more complex dependencies on multiple jobs and conditions:

sbatch --dependency=after:myjobid1:myjobid2:myjobid3,afterok:myjobid4 myjobsubmissionfile
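Job IDs are usually captured from earlier submissions rather than typed by hand. Below is a minimal sketch of a three-stage pipeline. It uses sbatch's --parsable option, which prints only the job ID (followed by ";cluster" on multi-cluster setups, hence the cut). The stage scripts preprocess.sub, main.sub, and cleanup.sub are hypothetical, and the account and partition are placeholders.

```bash
#!/bin/bash
# Stage 1: submit preprocessing and keep only its job ID.
prep=$(sbatch --parsable -A accountname -p cpu preprocess.sub | cut -d';' -f1)

# Stage 2: run the main job only if preprocessing ends without error.
main=$(sbatch --parsable -A accountname -p cpu --dependency=afterok:"$prep" main.sub | cut -d';' -f1)

# Stage 3: clean up after the main job ends, whether or not it failed.
sbatch -A accountname -p cpu --dependency=afterany:"$main" cleanup.sub
```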

Canceling a Job

To stop a job before it finishes or remove it from a queue, use the scancel command:

scancel myjobid

You can find the job ID using the squeue command, as explained in the Checking Job Status section.

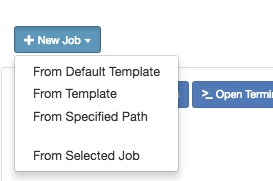

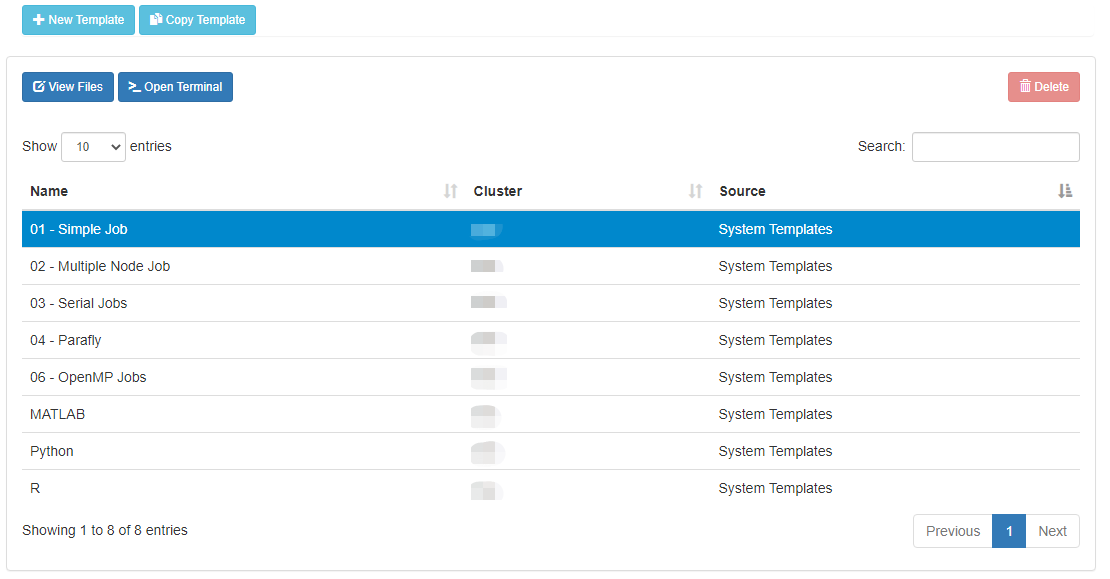

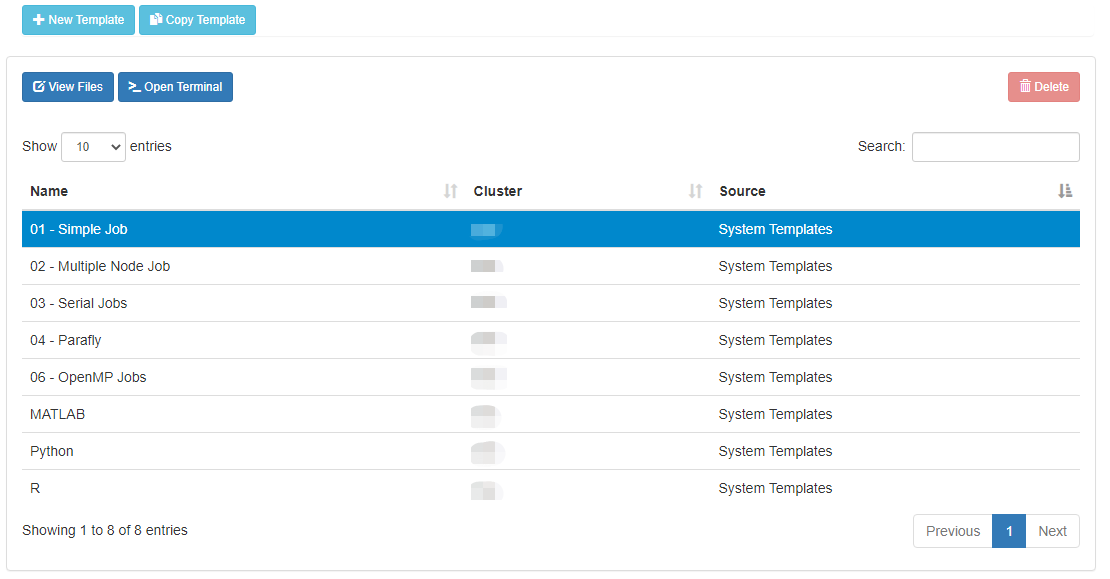

Example Jobs

A number of example jobs are available for you to look over and adapt to your own needs. The first few are generic examples, and the later ones go into specifics for particular software packages.

Generic SLURM Jobs

The following examples demonstrate the basics of SLURM jobs, and are designed to cover common job request scenarios. These example jobs will need to be modified to run your application or code.

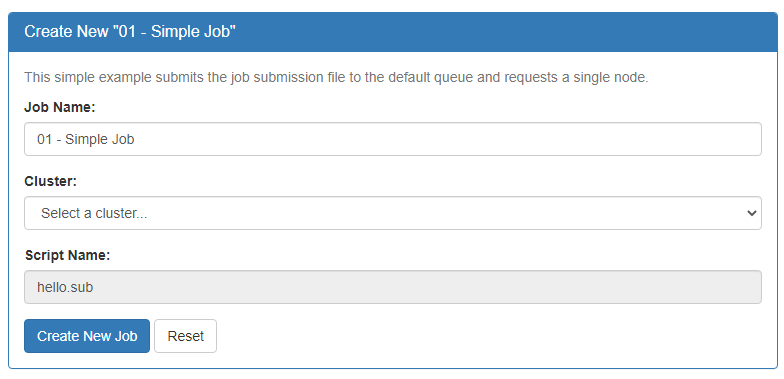

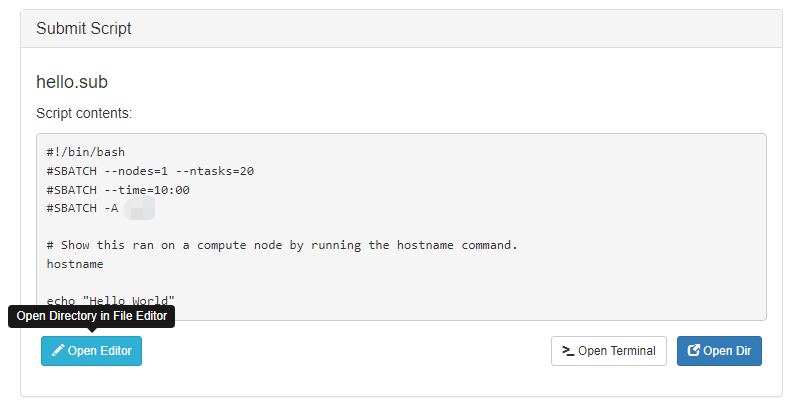

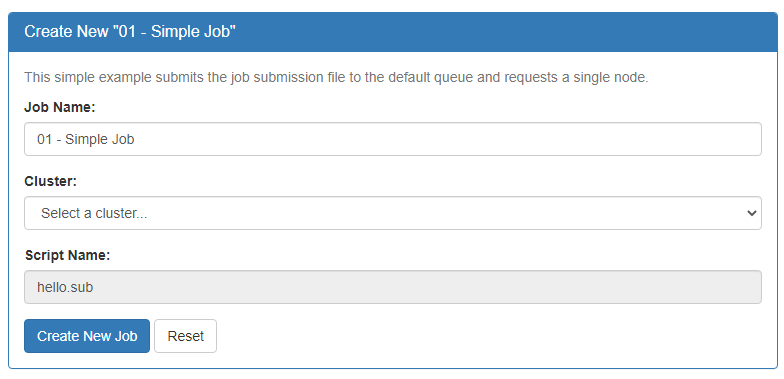

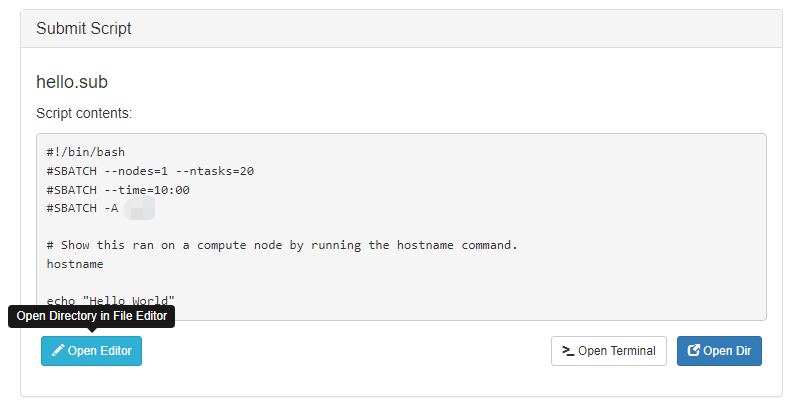

Simple Job

Every SLURM job consists of a job submission file. A job submission file contains a list of commands that run your program and a set of resource (nodes, walltime, queue) requests. The resource requests can appear in the job submission file or can be specified at submit-time as shown below.

This simple example submits the job submission file hello.sub to a partition on Gautschi and requests a single node. Replace queue-name with a partition such as cpu.

```bash
#!/bin/bash
# FILENAME: hello.sub

# Show this ran on a compute node by running the hostname command.
hostname
echo "Hello World"
```

sbatch -A myallocation -p queue-name --nodes=1 --ntasks=1 --cpus-per-task=1 --time=00:01:00 hello.sub

Submitted batch job 3521

For a real job you would replace echo "Hello World" with a command, or sequence of commands, that run your program.

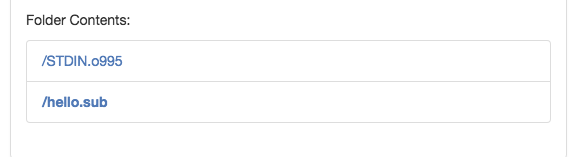

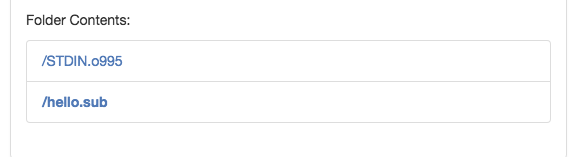

After your job finishes running, the ls command will show a new file in your directory, the .out file:

```
ls
hello.sub
slurm-3521.out
```

The file slurm-3521.out contains the output and errors your program would have written to the screen if you had typed its commands at a command prompt:

```
cat slurm-3521.out
gautschi-a001.rcac.purdue.edu
Hello World
```

You should see the hostname of the compute node your job ran on, followed by the "Hello World" line.

Multiple Node

In some cases, you may want to request multiple nodes. To utilize multiple nodes, you will need to have a program or code that is specifically programmed to use multiple nodes such as with MPI. Simply requesting more nodes will not make your work go faster. Your code must support this ability.

This example shows a request for multiple compute nodes. The job submission file contains a single command to show the names of the compute nodes allocated:

```bash
#!/bin/bash
# FILENAME: myjobsubmissionfile.sub

echo "$SLURM_JOB_NODELIST"
```

sbatch --nodes=2 --ntasks=384 --time=00:10:00 -A myallocation -p queue-name myjobsubmissionfile.sub

Compute nodes allocated:

gautschi-a[014-015]

The above example allocates a total of 384 CPU cores across 2 nodes. If your multi-node job requests fewer than each node's full 192 cores, Slurm by default does not guarantee how the total is distributed between the assigned nodes, so the cores may not be split evenly. If you need a specific arrangement of tasks and cores, use the --cpus-per-task= and/or --ntasks-per-node= flags. See the Slurm documentation or man sbatch for more options.
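For example, the sketch below uses --ntasks-per-node to place exactly 96 tasks on each of 2 nodes, instead of requesting a total task count. my_mpi_program is a placeholder for an MPI application. The sketch also assumes your MPI library can be launched with srun; whether it can depends on how the library was built, and some installations use mpirun instead.

```bash
#!/bin/bash
#SBATCH --account=myallocation
#SBATCH --partition=cpu
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=96
#SBATCH --time=00:10:00

# 2 nodes x 96 tasks per node = 192 tasks, split evenly across the nodes.
srun ./my_mpi_program
```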

Directives

So far these examples have shown submitting jobs with the resource requests on the sbatch command line such as:

sbatch -A myallocation -p queue-name --nodes=1 --time=00:01:00 hello.sub

The resource requests can also be put into the job submission file itself. Recording the resource requests in the job submission file makes the job easy to reproduce later, whereas details left in your command history are quickly lost. Arguments are specified with the #SBATCH syntax:

```bash
#!/bin/bash
# FILENAME: hello.sub
#SBATCH -A myallocation -p queue-name
#SBATCH --nodes=1 --time=00:01:00

# Show this ran on a compute node by running the hostname command.
hostname
echo "Hello World"
```

The #SBATCH directives must appear at the top of your submission file. SLURM stops parsing directives at the first line that is neither a comment nor blank, so any directive placed after a command in your script is ignored.

This job can be then submitted with:

sbatch hello.sub
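To make the placement rule concrete, the variant of hello.sub below has two directives that are honored and one that is not. Comments and blank lines before the directives are fine. The final #SBATCH line comes after the hostname command, so sbatch ignores it and the job keeps its 1-minute limit.

```bash
#!/bin/bash
# FILENAME: hello.sub
# Ordinary comments and blank lines before the directives are allowed.

#SBATCH -A myallocation -p queue-name
#SBATCH --nodes=1 --time=00:01:00

hostname
# This directive follows a command, so sbatch ignores it.
#SBATCH --time=00:10:00
echo "Hello World"
```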

Interactive Jobs

Interactive jobs are run on compute nodes, while giving you a shell to interact with. They give you the ability to type commands or use a graphical interface in the same way as if you were on a front-end login host.

To submit an interactive job, use sinteractive to run a login shell on allocated resources.

sinteractive accepts most of the same resource requests as sbatch. For example, to request a login shell in the cpu partition with 2 nodes and 384 total cores, you might do:

sinteractive -A myallocation -p cpu -N2 -n384

To quit your interactive job:

exit (or press Ctrl-D)

The above example will allocate a total of 384 CPU cores across 2 nodes. Note that if your multi-node job requests fewer than the full 192 cores per node, by default Slurm provides no guarantee about how this total is distributed among the assigned nodes (i.e., the cores may not be split evenly). If you need a specific arrangement of tasks and cores, use the --cpus-per-task= and/or --ntasks-per-node= flags. See the Slurm documentation or man salloc for more options.
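To check how Slurm actually distributed the cores, you can inspect the SLURM_JOB_CPUS_PER_NODE environment variable inside the job. Slurm documents its format as CPU_count[(xnumber_of_nodes)][,CPU_count[(xnumber_of_nodes)] ...], for example 72(x2),36. This is a minimal sketch that expands that string into one count per node; expand_cpus_per_node is a hypothetical name, and the sketch assumes the variable is present in your sinteractive shell or batch job.

```python
import os
import re
from typing import List


def expand_cpus_per_node(value: str) -> List[int]:
    """Expand a SLURM_JOB_CPUS_PER_NODE string such as '72(x2),36'
    into one CPU count per allocated node, e.g. [72, 72, 36]."""
    counts = []
    for item in value.split(","):
        match = re.fullmatch(r"\s*(\d+)(?:\(x(\d+)\))?\s*", item)
        if match is None:
            raise ValueError(f"unrecognized entry: {item!r}")
        cpus = int(match.group(1))
        repeat = int(match.group(2) or 1)  # no "(xN)" suffix means one node
        counts.extend([cpus] * repeat)
    return counts


if __name__ == "__main__":
    raw = os.environ.get("SLURM_JOB_CPUS_PER_NODE")
    if raw is None:
        print("SLURM_JOB_CPUS_PER_NODE is not set (not inside a Slurm job?)")
    else:
        print(expand_cpus_per_node(raw))
```

An uneven result such as [128, 64] shows the situation described above; requesting --ntasks-per-node makes the counts equal.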

Serial Jobs

This shows how to submit one of the serial programs compiled in the section Compiling Serial Programs.

Create a job submission file:

#!/bin/bash

# FILENAME: serial_hello.sub

./serial_hello

Submit the job:

sbatch --nodes=1 --ntasks=1 --time=00:01:00 serial_hello.sub

After the job completes, view the results in the output file, where myjobid is the job ID printed by sbatch:

cat slurm-myjobid.out

Runhost:gautschi-a009.rcac.purdue.edu

hello, world

If the job failed to run, view the error messages in the same file, slurm-myjobid.out; by default Slurm writes both standard output and standard error to it.

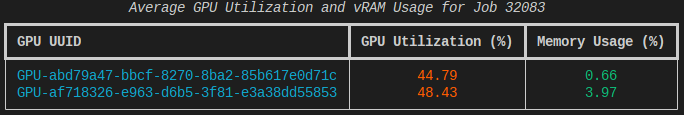

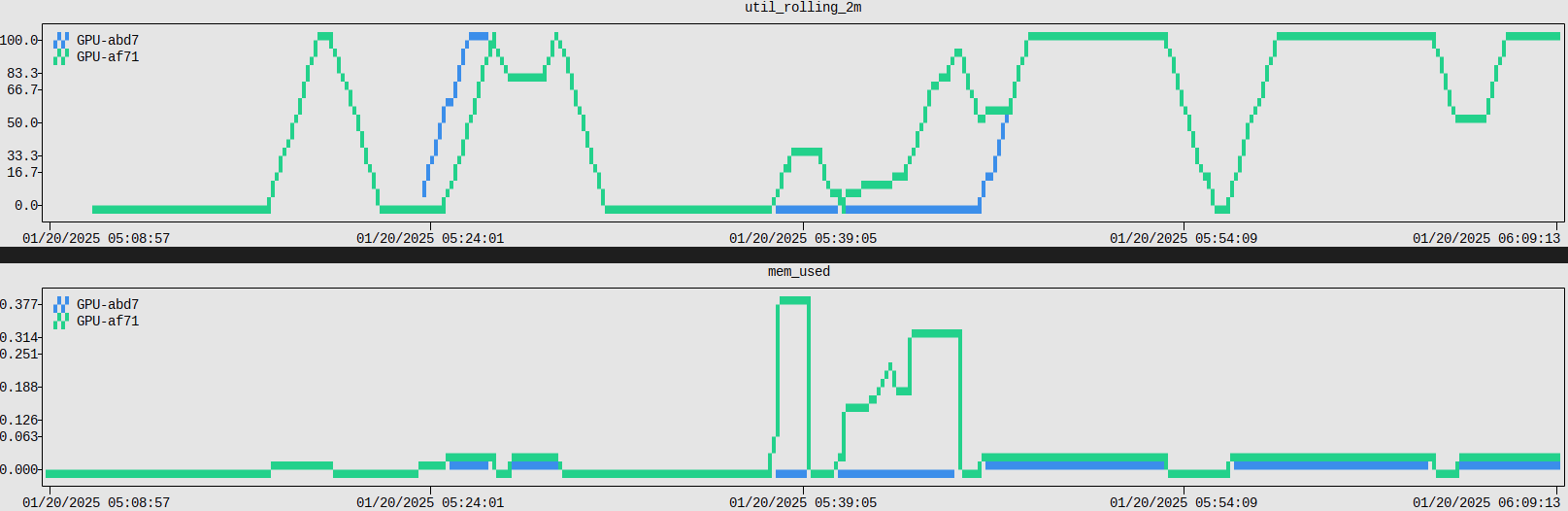

Collecting System Resource Utilization Data

Knowing the precise resource utilization an application had during a job, such as CPU load or memory, can be incredibly useful. This is especially the case when the application isn't performing as expected.

One approach is to run a program like htop during an interactive job and keep an eye on system resources. You can also get precise time-series data for the nodes associated with your job online through XDMoD. However, neither method gathers telemetry automatically, nor does either give you control over the resolution or format of the data.

A robust implementation of an HPC workload should routinely collect resource utilization data, so that it is available as a diagnostic tool if something fails.

The monitor utility is a simple command line system resource monitoring tool for gathering such telemetry and is available as a module.

module load monitor

Complete documentation is available online at resource-monitor.readthedocs.io. A full manual page is also available for reference, man monitor.

In the context of a SLURM job you will need to put this monitoring task in the background to allow the rest of your job script to proceed. Be sure to interrupt these tasks at the end of your job.

#!/bin/bash

# FILENAME: monitored_job.sh

module load monitor

# track per-core CPU load

monitor cpu percent --all-cores >cpu-percent.log &

CPU_PID=$!

# track memory usage

monitor cpu memory >cpu-memory.log &

MEM_PID=$!

# your code here

# shut down the resource monitors

kill -s INT $CPU_PID $MEM_PID

A particularly elegant solution is to start such tools in your prologue script and do the teardown in your epilogue script.
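If your job is driven by a Python script instead of bash, the same pattern (start the monitor in the background, keep its process handle, interrupt it at the end) can be sketched with the standard subprocess module. run_with_monitors is a hypothetical helper; the only assumption about monitor is that it is on your PATH after module load monitor and stops on SIGINT, as the kill -s INT line in the script implies.

```python
import signal
import subprocess
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")


def run_with_monitors(monitors: List[Tuple[List[str], str]], work: Callable[[], T]) -> T:
    """Start each (command, log_path) monitor in the background, run `work`,
    then stop every monitor with SIGINT, as `kill -s INT` does in the script."""
    running = []
    try:
        for cmd, log_path in monitors:
            log = open(log_path, "w")
            try:
                proc = subprocess.Popen(cmd, stdout=log)
            except OSError:
                log.close()
                raise
            running.append((proc, log))
        return work()
    finally:
        for proc, log in running:
            proc.send_signal(signal.SIGINT)
            try:
                proc.wait(timeout=10)
            except subprocess.TimeoutExpired:
                proc.kill()  # monitor ignored SIGINT; force it to stop
                proc.wait()
            log.close()
```

In a job you would pass entries such as (["monitor", "cpu", "memory"], "cpu-memory.log") and a work function like lambda: subprocess.run(["./serial_hello"], check=True). The try/finally ensures the monitors are stopped even if your own code raises an exception.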

For large distributed jobs spread across multiple nodes, mpiexec can be used to gather telemetry from all nodes in the job. The hostname is included in each line of output so that the data can be grouped by host. A concise way of constructing the needed list of hostnames in SLURM is to use srun hostname | sort -u.

#!/bin/bash
# FILENAME: monitored_job.sh

module load monitor

# track all CPUs (one monitor per host)
mpiexec -machinefile <(srun hostname | sort -u) \
    monitor cpu percent --all-cores >cpu-percent.log &
CPU_PID=$!

# track memory on all hosts (one monitor per host)
mpiexec -machinefile <(srun hostname | sort -u) \
    monitor cpu memory >cpu-memory.log &
MEM_PID=$!

# your code here

# shut down the resource monitors
kill -s INT $CPU_PID $MEM_PID

To get resource data in a more readily computable format, the monitor program can be told to output in CSV format with the --csv flag.

monitor cpu memory --csv >cpu-memory.csv

For a distributed job, you will need to suppress the header lines; otherwise each host will write its own header. Write the header once, then append the data from all hosts:

monitor cpu memory --csv | head -1 >cpu-memory.csv

mpiexec -machinefile <(srun hostname | sort -u) \
    monitor cpu memory --csv --no-header >>cpu-memory.csv
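Once the merged CSV exists, per-host analysis usually starts by grouping rows on the hostname field. This is a minimal sketch using Python's csv module; rows_by_host is a hypothetical helper, and the default column name hostname is an assumption, so check the header line of your cpu-memory.csv and pass the real column name. Having a single header matters here: csv.DictReader treats only the first line as the header, so repeated headers from each host would show up as data rows.

```python
import csv
from collections import defaultdict
from typing import Dict, List


def rows_by_host(csv_path: str, host_column: str = "hostname") -> Dict[str, List[dict]]:
    """Group rows of a merged monitor CSV by the value in `host_column`.

    The default column name is an assumption; check your file's header."""
    groups: Dict[str, List[dict]] = defaultdict(list)
    with open(csv_path, newline="") as handle:
        reader = csv.DictReader(handle)
        if reader.fieldnames is None or host_column not in reader.fieldnames:
            raise KeyError(f"column {host_column!r} not found in {csv_path}")
        for row in reader:
            groups[row[host_column]].append(row)
    return dict(groups)
```

For example, len(rows_by_host("cpu-memory.csv")) gives the number of hosts that reported data.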

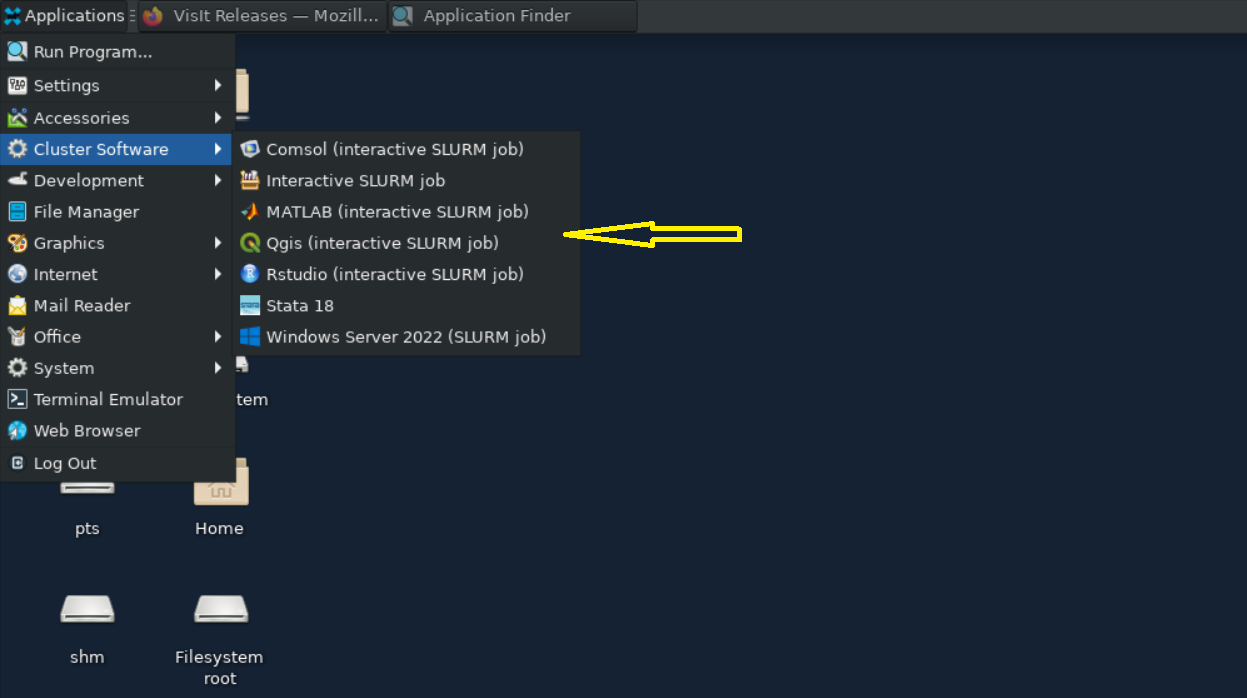

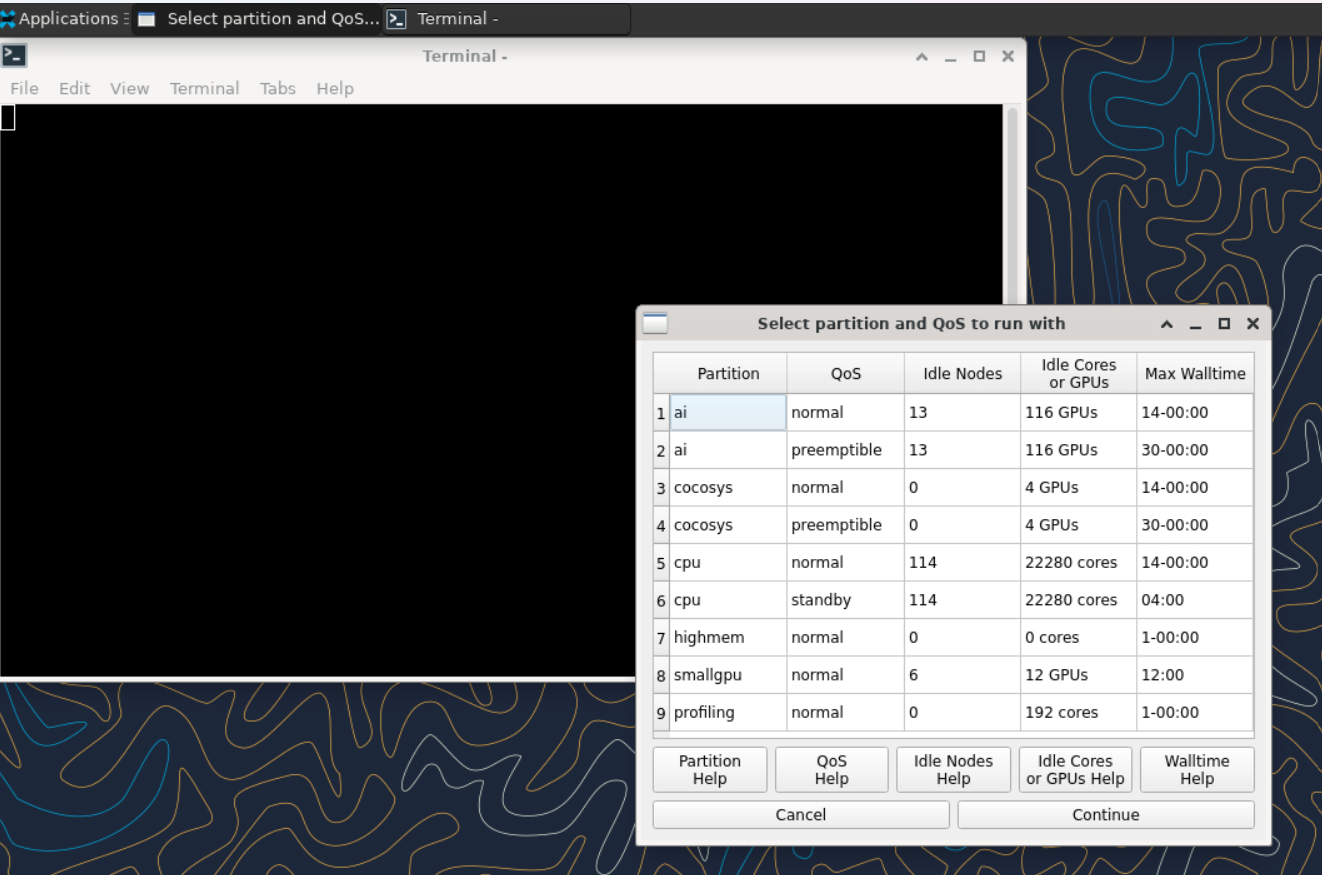

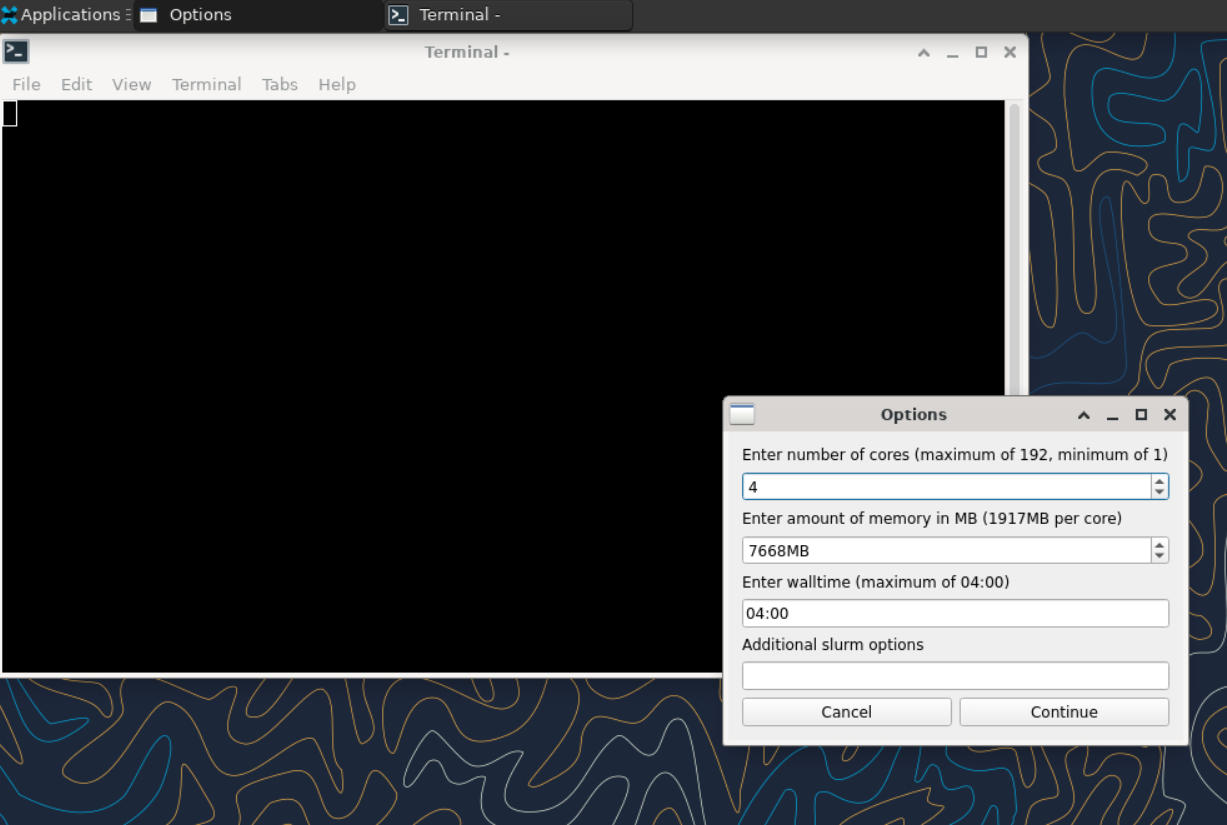

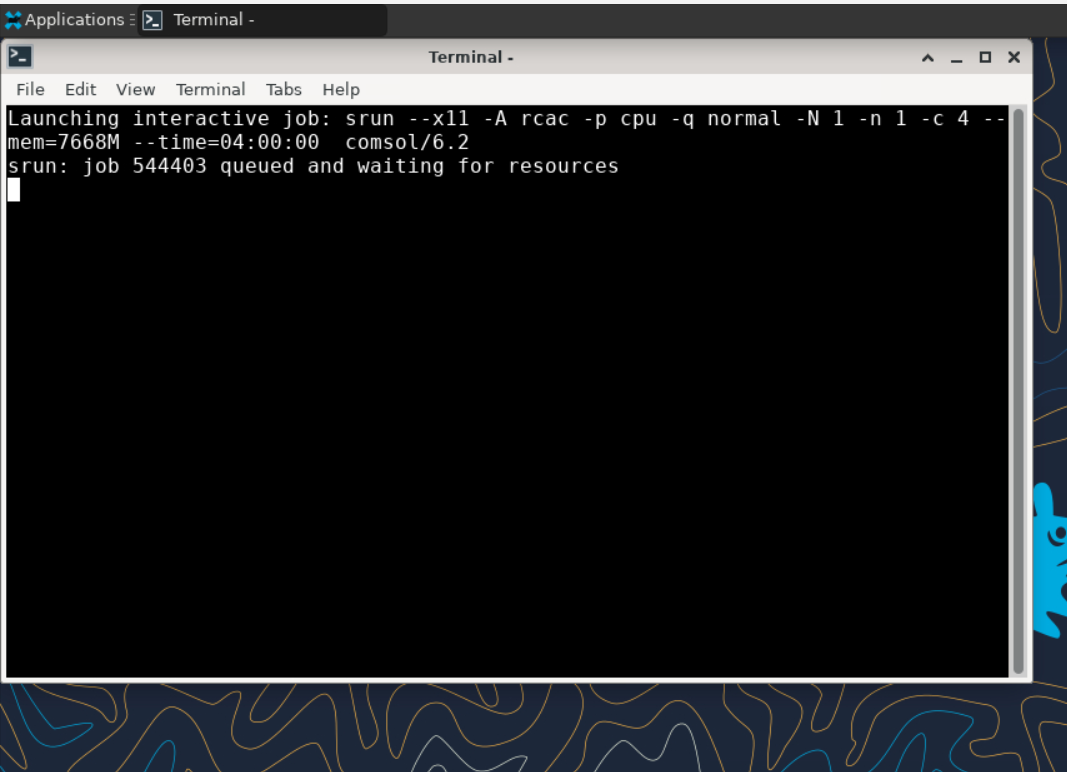

Specific Applications

The following examples demonstrate job submission files for some common real-world applications. See the Generic SLURM Examples section for more job submission examples that can be adapted for use.

Python

Notice: Python 2.7 reached end-of-life on Jan 1, 2020. Please update your code and your job scripts to use Python 3.

Python is a high-level, general-purpose, interpreted, dynamic programming language. We suggest using Anaconda, a Python distribution made for large-scale data processing, predictive analytics, and scientific computing. For example, to use the default Anaconda distribution:

$ module load conda

For a full list of available Anaconda and Python modules enter:

$ module spider conda

Example Python Jobs

This section illustrates how to submit a small Python job to a SLURM queue.

Example 1: Hello world

Prepare a Python input file with an appropriate filename, here named hello.py:

# FILENAME: hello.py

print("Hello, world!")

Prepare a job submission file with an appropriate filename, here named myjob.sub:

#!/bin/bash

# FILENAME: myjob.sub

module load conda

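python hello.py

Submit it with sbatch, adding your account, queue, and other resource requests as needed. The standard output file from this job will contain:

Hello, world!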

Example 2: Matrix multiply

Save the following script as matrix.py:

# Matrix multiplication program

x = [[3,1,4],[1,5,9],[2,6,5]]

y = [[3,5,8,9],[7,9,3,2],[3,8,4,6]]

result = [[sum(a*b for a,b in zip(x_row,y_col)) for y_col in zip(*y)] for x_row in x]

for r in result:
    print(r)

Change the last line in the job submission file above to read:

python matrix.py

The standard output file from this job will contain the following matrix:

[28, 56, 43, 53]

[65, 122, 59, 73]

[63, 104, 54, 60]
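For larger matrices, the same product is usually computed with NumPy, which is also used in the next example. A short sketch, assuming numpy is available in the loaded conda environment:

```python
import numpy as np

# Same matrices as in matrix.py
x = np.array([[3, 1, 4], [1, 5, 9], [2, 6, 5]])
y = np.array([[3, 5, 8, 9], [7, 9, 3, 2], [3, 8, 4, 6]])

result = x @ y  # matrix product: (3x3) @ (3x4) -> (3x4)

for row in result:
    print(row.tolist())  # same rows as the pure-Python version
```

The @ operator dispatches to NumPy's compiled routines instead of nested Python loops, which matters once the matrices grow beyond toy sizes.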

Example 3: Sine wave plot using numpy and matplotlib packages

Save the following script as sine.py:

import numpy as np

import matplotlib

matplotlib.use('Agg')

import matplotlib.pyplot as plt

x = np.linspace(-np.pi, np.pi, 201)

plt.plot(x, np.sin(x))

plt.xlabel('Angle [rad]')

plt.ylabel('sin(x)')

plt.axis('tight')

plt.savefig('sine.png')

Change the last line of your job submission file to python sine.py. The job will write the plot to sine.png, and its standard output and error will be empty because the script prints nothing.


Managing Environments with Conda

Conda is a package manager in Anaconda that allows you to create and manage multiple environments where you can pick and choose which packages you want to use. To use Conda you must load an Anaconda module:

$ module load conda

Many packages are pre-installed in the global environment. To see these packages:

$ conda list

To create your own custom environment:

$ conda create --name MyEnvName python=3.8 FirstPackageName SecondPackageName -y

The --name option specifies that the environment created will be named MyEnvName. You can include as many packages as you require separated by a space. Including the -y option lets you skip the prompt to install the package. By default environments are created and stored in the $HOME/.conda directory.

To create an environment at a custom location:

$ conda create --prefix=$HOME/MyEnvName python=3.8 PackageName -y

To see a list of your environments:

$ conda env list

To remove unwanted environments:

$ conda remove --name MyEnvName --all

To add packages to your environment:

$ conda install --name MyEnvName PackageNames

To remove a package from an environment:

$ conda remove --name MyEnvName PackageName

Installing packages when creating your environment, instead of one at a time, will help you avoid dependency issues.

To activate or deactivate an environment you have created:

$ source activate MyEnvName

$ source deactivate

If you created your conda environment at a custom location using the --prefix option, activate it using the full path:

$ source activate $HOME/MyEnvName

$ source deactivate

To use a custom environment inside a job, you must load the module and activate the environment in your job submission script. Add the following lines to your submission script:

module load conda

source activate MyEnvName
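If a job behaves as if your packages are missing, a quick check is whether the python being run really belongs to the activated environment. This sketch compares sys.prefix with the CONDA_PREFIX variable that conda activation sets; conda_env_is_active is a hypothetical helper you could call at the top of your own script.

```python
import os
import sys


def conda_env_is_active() -> bool:
    """Return True when this interpreter lives inside the active conda environment."""
    prefix = os.environ.get("CONDA_PREFIX")
    if not prefix:
        return False  # no environment has been activated
    return os.path.realpath(sys.prefix) == os.path.realpath(prefix)


if __name__ == "__main__":
    print("interpreter:", sys.executable)
    print("active conda env:", os.environ.get("CONDA_PREFIX", "<none>"))
    print("interpreter belongs to that env:", conda_env_is_active())
```

A False result inside a job usually means the activation lines are missing from the submission script or another python appears earlier on PATH.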


Installing Packages

Installing Python packages in an Anaconda environment is recommended. One key advantage of Anaconda is that it allows users to install unrelated packages in separate self-contained environments. Individual packages can later be reinstalled or updated without impacting others. If you are unfamiliar with Conda environments, please check our Conda Guide.

To facilitate the process of creating and using Conda environments, we support a script (conda-env-mod) that generates a module file for an environment, as well as an optional Jupyter kernel to use this environment in a JupyterHub notebook.

You must load the conda module in order to use this script.

$ module load conda

Step-by-step instructions for installing custom Python packages are presented below.

Step 1: Create a conda environment

Users can use the conda-env-mod script to create an empty conda environment. This script needs either a name or a path for the desired environment. After the environment is created, the script generates a module file for using it in the future. Please note that conda-env-mod is different from the official conda-env script and supports a limited set of subcommands. Detailed instructions for using conda-env-mod can be found with the command conda-env-mod --help.

- Example 1: Create a conda environment named mypackages in your $HOME directory.

$ conda-env-mod create -n mypackages

- Example 2: Create a conda environment named mypackages at a custom location.

$ conda-env-mod create -p /depot/mylab/apps/mypackages

Please follow the on-screen instructions while the environment is being created. After finishing, the script will print the instructions to use this environment.

... ... ...
Preparing transaction: ...working... done
Verifying transaction: ...working... done
Executing transaction: ...working... done
+------------------------------------------------------+
| To use this environment, load the following modules: |
| module load use.own                                  |
| module load conda-env/mypackages-py3.8.5             |
+------------------------------------------------------+
Your environment "mypackages" was created successfully.

Note down the module names, as you will need to load these modules every time you want to use this environment. You may also want to add the module load lines in your jobscript, if it depends on custom Python packages.
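A job that depends on custom packages can fail early and clearly if it first checks that they are importable after the module load lines. This is a minimal sketch using importlib.util.find_spec; find_missing is a hypothetical helper, and note that it takes import names, which can differ from conda package names (for example, the scikit-learn package is imported as sklearn).

```python
import importlib.util
import sys
from typing import Iterable, List


def find_missing(module_names: Iterable[str]) -> List[str]:
    """Return the import names that the current interpreter cannot find."""
    missing = []
    for name in module_names:
        try:
            found = importlib.util.find_spec(name) is not None
        except ModuleNotFoundError:  # parent package of a dotted name is absent
            found = False
        if not found:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Usage: python check_env.py numpy matplotlib
    missing = find_missing(sys.argv[1:])
    print("missing:", " ".join(missing) if missing else "none")
```

find_spec only locates a module without executing it, so the check stays fast even for heavy packages.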

By default, module files are generated in your $HOME/privatemodules directory. The location of module files can be customized by specifying the -m /path/to/modules option to conda-env-mod.

Note: The main differences between -p and -m are: 1) -p changes the location where the environment's packages are installed, while the module file stays in the $HOME/privatemodules directory as defined in use.own; 2) -m changes only the location of the module file. As a result, modules created with -m are loaded differently from those created with -p; see Example 3 for details.

- Example 3: Create a conda environment named labpackages in your group's Data Depot space and place the module file at a shared location for the group to use.

$ conda-env-mod create -p /depot/mylab/apps/labpackages -m /depot/mylab/etc/modules
... ... ...
Preparing transaction: ...working... done
Verifying transaction: ...working... done
Executing transaction: ...working... done
+-------------------------------------------------------+
| To use this environment, load the following modules:  |
| module use /depot/mylab/etc/modules                   |
| module load conda-env/labpackages-py3.8.5             |
+-------------------------------------------------------+
Your environment "labpackages" was created successfully.

If you used a custom module file location, you need to run the module use command as printed by the command output above.

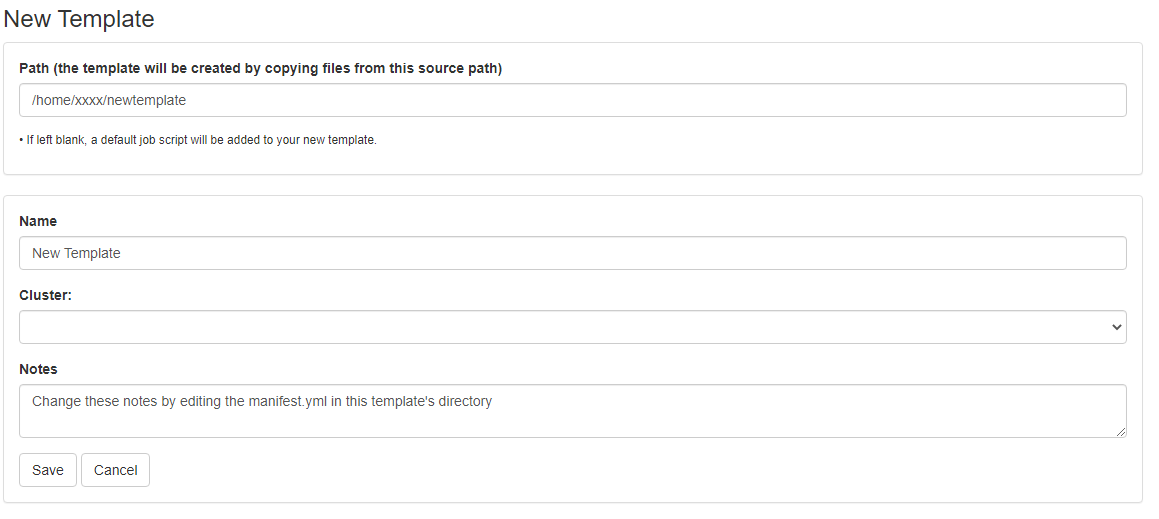

By default, only the environment and a module file are created (no Jupyter kernel). If you plan to use your environment in a JupyterHub notebook, you need to append a --jupyter flag to the above commands.