Frequently Asked Questions

Some common questions, errors, and problems are categorized below.

About Hammer

Frequently asked questions about Hammer.

Can you remove me from the Hammer mailing list?

Your subscription to the Hammer mailing list is tied to your account on Hammer. If you are no longer using your account on Hammer, you can delete it from the My Accounts page. Hover over the resource you wish to remove yourself from and click the red 'X' button. Your account and mailing list subscription will be removed overnight. Be sure to make a copy of any data you wish to keep first.

How is Hammer different from other Community Clusters?

- Hammer is optimized for loosely-coupled, high-throughput computation. The scheduler is configured to favor starting jobs quickly and ensure maximum utilization.

- The maximum job size is 8 processor cores. If you require resources with a greater degree of parallelism, please consider an alternate community cluster system optimized for high-performance, parallel computing.

- Jobs are scheduled on a whole-node basis and will not share nodes with other jobs by default. You may submit jobs that use less than one node; however, you will be allocated a whole node from your queue unless node sharing is enabled. Node sharing is enabled by adding -l naccesspolicy=singleuser to your job's requirements.

Do I need to do anything to my firewall to access Hammer?

No firewall changes are needed to access Hammer. However, to access data through Network Drives (i.e., CIFS, "Z: Drive"), you must be on a Purdue campus network or connected through VPN.

Logging In & Accounts

Frequently asked questions about logging in & accounts.

Errors

Common errors and solutions/work-arounds for them.

/usr/bin/xauth: error in locking authority file

Problem

I receive this message when logging in:

/usr/bin/xauth: error in locking authority file

Solution

Your home directory disk quota is full. You may check your quota with myquota.

You will need to free up space in your home directory.

The ncdu command is a convenient interactive tool to examine disk usage. Consider running ncdu $HOME to analyze where the bulk of the usage is. With this knowledge, you can then archive your data elsewhere (e.g. your research group's Data Depot space, or Fortress tape archive), or delete files you no longer need.

There are several common locations that tend to grow large over time and are merely cached downloads. The following are safe to delete if you see them in the output of ncdu $HOME:

/home/myusername/.local/share/Trash

/home/myusername/.cache/pip

/home/myusername/.conda/pkgs

/home/myusername/.singularity/cache
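As a minimal sketch, the snippet below prints the size of each of the cache locations listed above that actually exists in a home directory, so you can decide what to delete. The function name report_cache_usage is hypothetical and is not an RCAC-provided tool; it only reads sizes and deletes nothing.

```shell
# Hypothetical helper (not an RCAC tool): print the size of each common
# cache directory that exists under the given home directory.
report_cache_usage() {
    rcu_home="${1:-$HOME}"
    for rcu_sub in .local/share/Trash .cache/pip .conda/pkgs .singularity/cache; do
        if [ -d "$rcu_home/$rcu_sub" ]; then
            # du -sh prints one human-readable total for the directory
            du -sh "$rcu_home/$rcu_sub"
        fi
    done
}

# Check your own home directory
report_cache_usage "$HOME"
```

Once you have confirmed that a listed directory only holds cached downloads, it can be removed with rm -r followed by its path.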

My SSH connection hangs

Problem

Your console hangs while trying to connect to an RCAC server.

Solution

This can happen for various reasons. The most common reasons for hanging SSH terminals are:

- Network: If you are connected over Wi-Fi, make sure that your Internet connection is stable.

- Busy front-end server: When you connect to a cluster, you SSH to one of the front-end login nodes. Due to transient user loads, one or more of the front-ends may become unresponsive for a short while. To avoid this, try reconnecting to the cluster or wait until the login node you have connected to has reduced load.

- File system issue: If a server has issues with one or more of the file systems (home, scratch, or depot) it may freeze your terminal. To avoid this you can connect to another front-end.

If none of the suggestions above works, please contact support specifying the name of the server where your console is hung.

Thinlinc session frozen

Problem

Your Thinlinc session is frozen and you cannot launch any commands or close the session.

Solution

This can happen for various reasons. The most common reason is that you ran something memory-intensive inside that Thinlinc session on a front-end, so parts of the Thinlinc session got killed by Cgroups, and the entire session got stuck.

- If you are using a web-version Thinlinc remote desktop (inside the browser):

The web version does not have the capability to kill the existing session, only the standalone client does. Please install the standalone client and follow the steps below:

- If you are using a Thinlinc client:

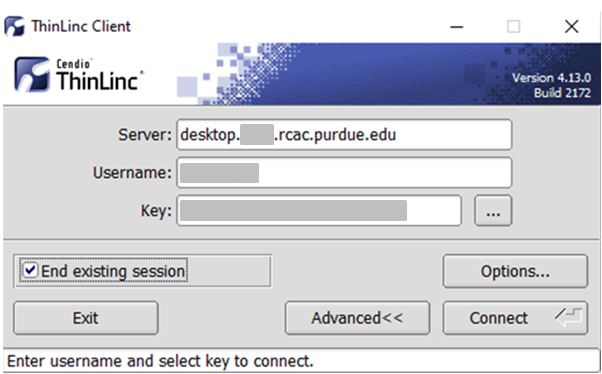

Close the ThinLinc client, reopen the client login popup, select "End existing session", and try "Connect" again.

Thinlinc session unreachable

Problem

When trying to log in to Thinlinc and reconnect to your existing session, you receive the error "Your Thinlinc session is currently unreachable".

Solution

This can happen if the login node hosting your existing remote desktop session is currently offline or down, so Thinlinc cannot reconnect to that session. Most often the session is non-recoverable at this point, so the solution is to terminate your existing Thinlinc desktop session and start a new one.

- If you are using a web-version Thinlinc remote desktop (inside the browser):

The web version does not have the capability to kill the existing session, only the standalone client does. Please install the standalone client and follow the steps below:

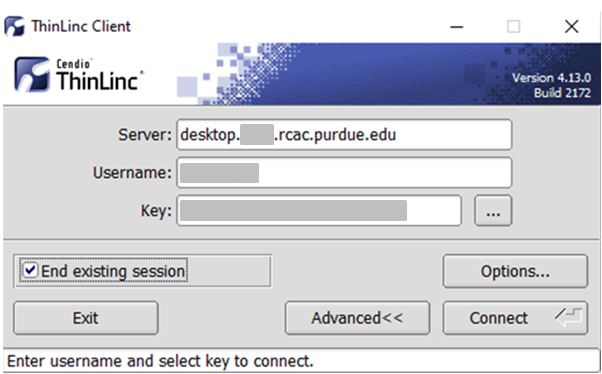

- If you are using a Thinlinc client:

Close the ThinLinc client, reopen the client login popup, select "End existing session", and try "Connect" again.

Questions

Frequently asked questions about logging in & accounts.

I worked on Hammer after I graduated/left Purdue, but can not access it anymore

Problem

You have graduated or left Purdue but continue collaboration with your Purdue colleagues. You find that your access to Purdue resources has suddenly stopped and your password is no longer accepted.

Solution

Access to all resources depends on having a valid Purdue Career Account. Expired Career Accounts are removed twice a year, during Spring and October breaks (more details at the official page). If your Career Account was purged due to expiration, you will not be able to access the resources.

To provide remote collaborators with valid Purdue credentials, the University provides a special procedure called Request for Privileges (R4P). If you need to continue your collaboration with your Purdue PI, the PI will have to submit or renew an R4P request on your behalf.

After your R4P is completed and your Career Account is restored, please note two additional necessary steps:

- Access: Restored Career Accounts by default do not have any RCAC resources enabled for them. Your PI will have to log in to the Manage Users tool and explicitly re-enable your access by un-checking and then re-checking the checkboxes for the desired queues/Unix groups resources.

- Email: Restored Career Accounts by default do not have their @purdue.edu email service enabled. While this does not preclude you from using RCAC resources, any email messages (whether generated on the clusters or sent as service announcements) would not be delivered, which may cause inconvenience or loss of compute jobs. To avoid this, we recommend setting your restored @purdue.edu email service to "Forward" (to an actual address you read). The easiest way to ensure this is to go through the Account Setup process.

Jobs

Frequently asked questions related to running jobs.

Errors

Common errors and potential solutions/workarounds for them.

cannot connect to X server / cannot open display

Problem

You receive the following message after entering a command to bring up a graphical window

cannot connect to X server cannot open display

Solution

This can happen for multiple reasons:

- Reason: Your SSH client software does not support graphical display by itself (e.g. SecureCRT or PuTTY).

- Solution: Try using a client software like Thinlinc or MobaXterm as described in the SSH X11 Forwarding guide.

- Reason: You did not enable X11 forwarding in your SSH connection.

- Solution: If you are in a Windows environment, make sure that X11 forwarding is enabled in your connection settings (e.g. in MobaXterm or PuTTY). If you are in a Linux environment, try

ssh -Y -l username hostname

- Reason: If you are trying to open a graphical window within an interactive PBS job, make sure you are using the -X option with qsub after following the previous step(s) for connecting to the front-end. Please see the example in the Interactive Jobs guide.

- Reason: If none of the above apply, make sure that you are within quota of your home directory.
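A quick way to tell whether X11 forwarding is active in your current session is to look at the DISPLAY environment variable, which SSH sets on the remote side when forwarding is enabled. The sketch below wraps that check in a hypothetical helper named check_display; it is an illustration, not an RCAC-provided command.

```shell
# Hypothetical helper: report whether an X display is configured
# for the current shell session.
check_display() {
    if [ -n "${DISPLAY:-}" ]; then
        echo "DISPLAY is set to $DISPLAY"
    else
        echo "DISPLAY is not set; reconnect with X11 forwarding (e.g. ssh -Y)"
        return 1
    fi
}

# Run the check; "|| true" keeps a script going if the display is missing
check_display || true
```

If DISPLAY is empty on the front-end, graphical programs will fail there and inside any interactive job started from that session.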

bash: command not found

Problem

You receive the following message after typing a command

bash: command not found

Solution

This means the system cannot find your command. Typically, you need to load the module that provides it.
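As a rough sketch, you can test whether a command is on your PATH before relying on it, and look for a module if it is not. The helper name check_command is hypothetical; module avail and module load are the standard commands for listing and loading modules, and the module name you need depends on the software.

```shell
# Hypothetical helper: report whether a command is on your PATH and,
# if it is not, suggest looking for a module that provides it.
check_command() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1 found at: $(command -v "$1")"
    else
        echo "$1 not found; try 'module avail' and then 'module load <name>'"
        return 1
    fi
}

# Example with a command that exists on every system
check_command ls
```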

qdel: Server could not connect to MOM 12345.hammer-adm.rcac.purdue.edu

Problem

You receive the following message after attempting to delete a job with the qdel command

qdel: Server could not connect to MOM 12345.hammer-adm.rcac.purdue.edu

Solution

This error usually indicates that at least one node running your job has stopped responding or crashed. Please forward the job ID to support, and staff can help remove the job from the queue.

bash: module command not found

Problem

You receive the following message after typing a command, e.g. module load intel

bash: module command not found

Solution

The system cannot find the module command. You need to source the modules.sh file in your script as below

source /etc/profile.d/modules.sh

or run your script in an interactive shell by using the following as its first line

#!/bin/bash -i
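If the same script runs in environments where the module command may or may not already be defined, the source step can be guarded. The sketch below is one way to do that; the function name ensure_module_command is hypothetical, and the default path is the one given in the answer above.

```shell
# Hypothetical helper: define the module command if it is missing by
# sourcing the init file (default path taken from the answer above).
ensure_module_command() {
    emc_init="${1:-/etc/profile.d/modules.sh}"
    if command -v module >/dev/null 2>&1; then
        return 0            # module is already available
    fi
    if [ -r "$emc_init" ]; then
        . "$emc_init"       # defines the module shell function
    else
        echo "cannot read $emc_init" >&2
        return 1
    fi
}

ensure_module_command || echo "module command is still unavailable"
```

Placing this near the top of a job script, before the first module load line, avoids the error without forcing an interactive shell.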

1234.hammer-adm.rcac.purdue.edu.SC: line 12: 12345 Killed

Problem

Your PBS job stopped running and you received an email with the following:

/var/spool/torque/mom_priv/jobs/1234.hammer-adm.rcac.purdue.edu.SC: line 12: 12345 Killed <command name>

Solution

This means that the node your job was running on ran out of memory to support your program or code. This may be due to your job or other jobs sharing your node(s) consuming more memory in total than is available on the node. Your program was killed by the node to preserve the operating system. There are two possible causes:

- You requested your job share node(s) with other jobs. You should request all cores of the node or request exclusive access. Either your job or one of the other jobs running on the node consumed too much memory. Requesting exclusive access will give you full control over all the memory on the node.

- Your job requires more memory than is available on the node. You should use more nodes if your job supports MPI or run a smaller dataset.

Questions

Frequently asked questions about jobs.

How do I check my job output while it is running?

Problem

After submitting your job to the cluster, you want to see the output that it generates.

Solution

There are two simple ways to do this:

- qpeek: Use the tool qpeek to check the job's output. Syntax of the command is:

qpeek <jobid>

- Redirect your output to a file: To do this you need to edit the main command in your jobscript as shown below. Please note the redirection command starting with the greater than (>) sign.

myapplication ...other arguments... > "${PBS_JOBID}.output"

On any front-end, go to the working directory of the job and scan the output file.

tail "<jobid>.output"

Make sure to replace <jobid> with an appropriate jobid.
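The redirection approach can be tried outside the scheduler with stand-ins. In the sketch below, the myapplication function and the fallback job ID are placeholders so the snippet runs anywhere, and the 2>&1 (which also captures error messages in the same file) is an optional addition that is not part of the original command.

```shell
# Stand-ins so the sketch runs outside a job; in a real job the scheduler
# sets PBS_JOBID and you would call your own program instead.
PBS_JOBID="${PBS_JOBID:-12345.demo}"
myapplication() { echo "step 1 done"; echo "a warning" >&2; }

# Work in a scratch directory standing in for the job's working directory
cd "$(mktemp -d)" || exit 1

# 2>&1 sends error messages to the same file as normal output
myapplication > "${PBS_JOBID}.output" 2>&1

# Show the most recent lines; use tail -f instead to keep following the file
tail -n 20 "${PBS_JOBID}.output"
```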

What is the "debug" queue?

The debug queue allows you to quickly start small, short, interactive jobs in order to debug code, test programs, or test configurations. You are limited to one running job at a time in the queue, and you may use up to two compute nodes for up to 30 minutes.

How can I get email alerts about my PBS job status?

Question

How can I be notified when my PBS job starts and whether it completed successfully?

Answer

Submit your job with the following command line arguments

qsub -M email_address -m bea myjobsubmissionfile

Or, include the following in your job submission file.

#PBS -M email_address

#PBS -m bae

The -m option accepts any combination of the following letters: "a", "b", and "e":

a - mail is sent when the job is aborted by the batch system.

b - mail is sent when the job begins execution.

e - mail is sent when the job terminates.

Can I extend the walltime on a job?

In some circumstances, yes. Walltime extensions must be requested of and completed by staff. Walltime extension requests will be considered on named (your advisor or research lab) queues. Standby or debug queue jobs cannot be extended.

Extension requests are at the discretion of staff based on factors such as any upcoming maintenance or resource availability. Extensions can be made past the normal maximum walltime on named queues but these jobs are subject to early termination should a conflicting maintenance downtime be scheduled.

Please be mindful of time remaining on your job when making requests and make requests at least 24 hours before the end of your job AND during business hours. We cannot guarantee jobs will be extended in time with less than 24 hours notice, after-hours, during weekends, or on a holiday.

We ask that you make accurate walltime requests during job submissions. Accurate walltimes will allow the job scheduler to efficiently and quickly schedule jobs on the cluster. Please consider that extensions can impact scheduling efficiency for all users of the cluster.

Requests can be made by contacting support. We ask that you:

- Provide numerical job IDs, cluster name, and your desired extension amount.

- Provide at least 24 hours' notice before the job will end (more if the request is made on a weekend or holiday).

- Consider making requests during business hours. We may not be able to respond in time to requests made after-hours, on a weekend, or on a holiday.

How do I know Non-uniform Memory Access (NUMA) layout on Hammer?

- You can learn about processor layout on Hammer nodes using the following command:

hammer-a003:~$ lstopo-no-graphics

- For detailed IO connectivity:

hammer-a003:~$ lstopo-no-graphics --physical --whole-io

- Please note that NUMA information is useful for advanced MPI/OpenMP/GPU optimizations. For most users, using default NUMA settings in MPI or OpenMP would give you the best performance.

Why cannot I use --mem=0 when submitting jobs?

Question

Why can't I specify --mem=0 for my job?

Answer

We no longer support requesting unlimited memory (--mem=0) because it adversely affects the way the scheduler allocates jobs and can lead to a large number of nodes being blocked from use.

In most cases we suggest relying on the default memory allocation (which is cluster-specific). If you have to request a custom amount of memory, you can do so explicitly, for example with --mem=20G.

If you want to use the entire node's memory, you can submit the job with the --exclusive option.

Data

Frequently asked questions about data and data management.

My scratch files were purged. Can I retrieve them?

Unfortunately, once files are purged, they are purged permanently and cannot be retrieved. Notices of pending purges are sent one week in advance to your Purdue email address. Be sure to regularly check your Purdue email or set up forwarding to an account you do frequently check.

Can you tell me what files were purged?

You can see a list of files removed with the command lastpurge. The command accepts a -n option to specify how many weeks/purges ago you want to look back at.

How is my Data Secured on Hammer?

Hammer is operated in line with policies, standards, and best practices as described within Secure Purdue, and specific to RCAC Resources.

Security controls for Hammer are based on ones defined in NIST cybersecurity standards.

Hammer supports research at the L1 fundamental and L2 sensitive levels. Hammer is not approved for storing data at the L3 restricted (covered by HIPAA) or L4 Export Controlled (ITAR), or any Controlled Unclassified Information (CUI).

For resources designed to support research with heightened security requirements, please look for resources within the REED+ Ecosystem.

For additional information

Log in with your Purdue Career Account.

Can I share data with outside collaborators?

Yes! Globus allows convenient sharing of data with outside collaborators. Data can be shared with collaborators' personal computers or directly with many other computing resources at other institutions. See the Globus documentation on how to share data.

Can I access Fortress from Hammer?

Yes. While Fortress directories are not directly mounted on Hammer for performance and archival protection reasons, they can be accessed from Hammer front-ends and nodes using any of the recommended methods of HSI, HTAR or Globus.

Software

Frequently asked questions about software.

Cannot use pip after loading ml-toolkit modules

Question

Pip throws an error after loading the machine learning modules. How can I fix it?

Answer

Machine learning modules (tensorflow, pytorch, opencv, etc.) include a version of pip that is newer than the one installed with Anaconda. As a result, the pip command throws an error when you try to use it.

$ pip --version

Traceback (most recent call last):

File "/apps/cent7/anaconda/5.1.0-py36/bin/pip", line 7, in <module>

from pip import main

ImportError: cannot import name 'main'

The preferred way to use pip with the machine learning modules is to invoke it via Python as shown below.

$ python -m pip --version

How can I get access to Sentaurus software?

Question

How can I get access to Sentaurus tools for micro- and nano-electronics design?

Answer

The Sentaurus software license requires a signed NDA. Please contact Dr. Mark Johnson, Director of ECE Instructional Laboratories, to complete the process.

Once the licensing process is complete and you have been added to the cae2 Unix group, you can use Sentaurus on RCAC community clusters by loading the corresponding environment module:

module load sentaurus

About Research Computing

Frequently asked questions about RCAC.

Can I get a private server from RCAC?

Question

Can I get a private (virtual or physical) server from RCAC?

Answer

Often, researchers may want a private server to run databases, web servers, or other software. RCAC currently has Geddes, a Community Composable Platform optimized for composable, cloud-like workflows that are complementary to the batch applications run on Community Clusters. Funded by the National Science Foundation under grant OAC-2018926, Geddes consists of Dell Compute nodes with two 64-core AMD Epyc 'Rome' processors (128 cores per node).

To purchase access to Geddes today, go to the Cluster Access Purchase page. Please subscribe to our Community Cluster Program Mailing List to stay informed on the latest purchasing developments or contact us (rcac-cluster-purchase@lists.purdue.edu) if you have any questions.