Anvil User Guide

Purdue University is the home of Anvil, a powerful new supercomputer that provides advanced computing capabilities to support a wide range of computational and data-intensive research spanning from traditional high-performance computing to modern artificial intelligence applications.

Overview of Anvil

Purdue University is the home of Anvil, a powerful new supercomputer that provides advanced computing capabilities to support a wide range of computational and data-intensive research spanning from traditional high-performance computing to modern artificial intelligence applications.

Anvil, which is funded by a $10 million award from the National Science Foundation, significantly increases the capacity available to the NSF's Advanced Cyberinfrastructure Coordination Ecosystem: Services & Support (ACCESS) program, which serves tens of thousands of researchers across the U.S.. Anvil enters production in 2021 and serves researchers for five years. Additional funding from the NSF supports Anvil's operations and user support.

The name "Anvil" reflects the Purdue Boilermakers' strength and workmanlike focus on producing results, and the Anvil supercomputer enables important discoveries across many different areas of science and engineering. Anvil also serves as an experiential learning laboratory for students to gain real-world experience using computing for their science, and for student interns to work with the Anvil team for construction and operation. We will be training the research computing practitioners of the future.

Anvil is built in partnership with Dell and AMD and consists of 1,000 nodes with two 64-core AMD Epyc "Milan" processors each and will deliver over 1 billion CPU core hours to ACCESS each year, with a peak performance of 5.3 petaflops. Anvil's nodes are interconnected with 100 Gbps Mellanox HDR InfiniBand. The supercomputer ecosystem also includes 32 large memory nodes, each with 1 TB of RAM, and 16 nodes each with four NVIDIA A100 Tensor Core GPUs providing 1.5 PF of single-precision performance to support machine learning and artificial intelligence applications.

Anvil is funded under NSF award number 2005632. Carol Song is the principal investigator and project director. Preston Smith, executive director of the Rosen Center for Advanced Computing, Xiao Zhu, computational scientist and senior research scientist, and Rajesh Kalyanam, data scientist, software engineer, and research scientist, are all co-PIs on the project.

Link to section 'Anvil Specifications' of 'Overview of Anvil' Anvil Specifications

All Anvil nodes have 128 processor cores, 256 GB to 1 TB of RAM, and 100 Gbps Infiniband interconnects.

| Login | Number of Nodes | Processors per Node | Cores per Node | Memory per Node |

|---|---|---|---|---|

| 8 | Two Milan CPUs @ 2.45GHz | 32 | 512 GB |

| Sub-Cluster | Number of Nodes | Processors per Node | Cores per Node | Memory per Node |

|---|---|---|---|---|

| A | 1,000 | Two Milan CPUs @ 2.45GHz | 128 | 256 GB |

| B | 32 | Two Milan CPUs @ 2.45GHz | 128 | 1 TB |

| G | 16 | Two Milan CPUs @ 2.45GHz + Four NVIDIA A100 GPUs | 128 | 512 GB |

| H | 21 | Two Intel Xeon Platinum 8468 CPUs @ 2.1GHz + Four NVIDIA H100 GPUs | 96 | 1 TB |

Anvil nodes run CentOS 8 and use Slurm (Simple Linux Utility for Resource Management) as the batch scheduler for resource and job management. The application of operating system patches will occur as security needs dictate. All nodes allow for unlimited stack usage, as well as unlimited core dump size (though disk space and server quotas may still be a limiting factor).

Link to section 'Software catalog' of 'Overview of Anvil' Software catalog

Accessing the System

Link to section 'Accounts on Anvil' of 'Accessing the System' Accounts on Anvil

Obtaining an Account

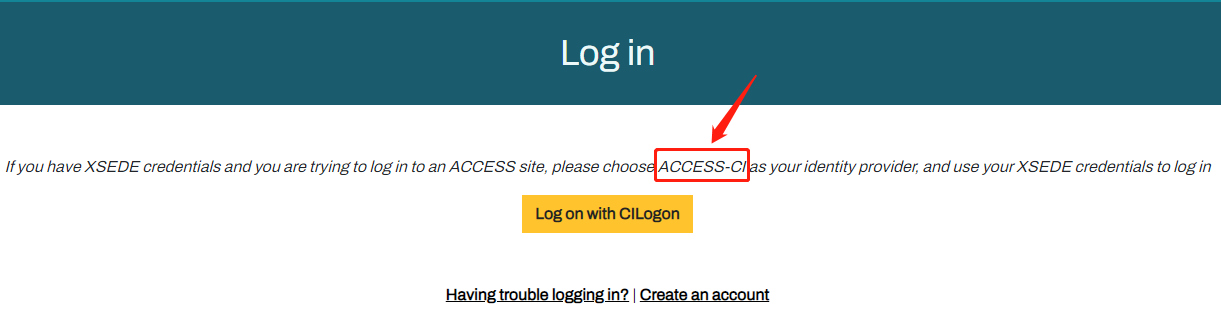

As an ACCESS computing resource, Anvil is accessible to ACCESS users who receive an allocation on the system. To obtain an account, users may submit a proposal through the ACCESS Allocation Request System.

For details on how to go about requesting an allocation, refer to How do I get onto Anvil through ACCESS.

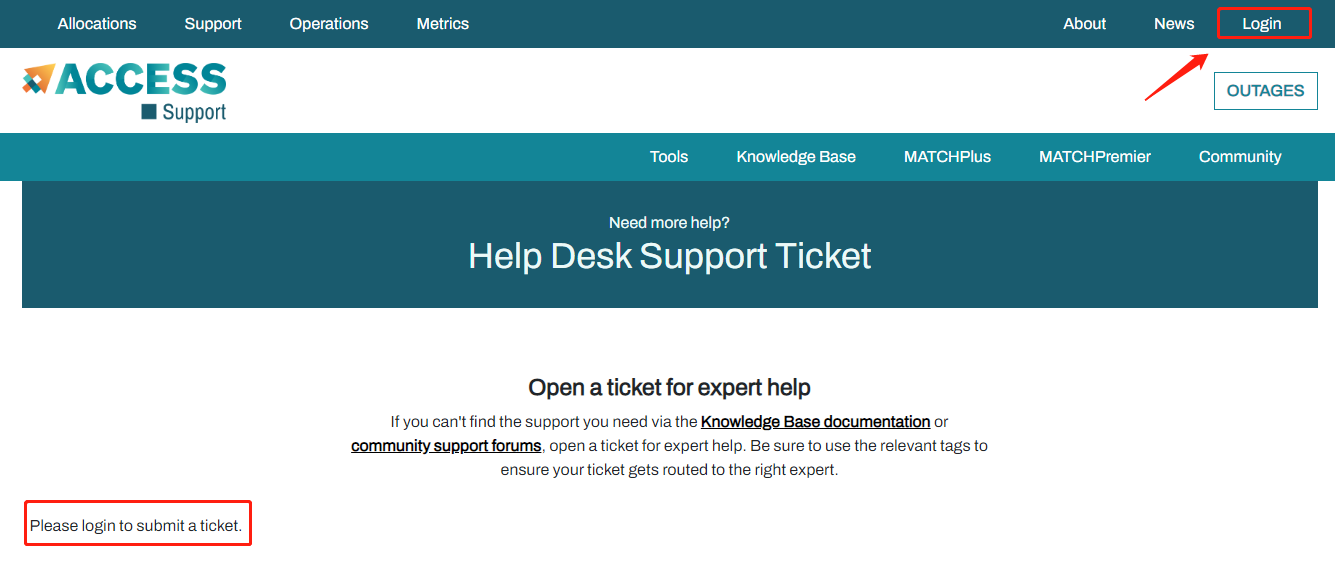

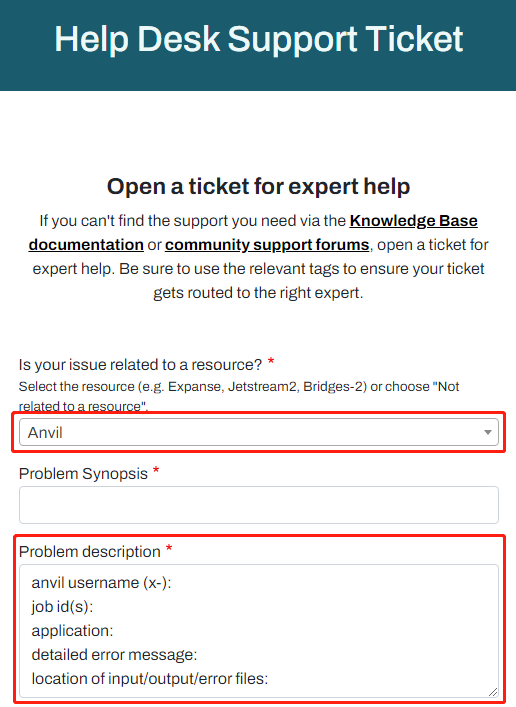

Interested parties may contact the ACCESS Help Desk for help with an Anvil proposal.

How do I get onto Anvil through ACCESS

Link to section 'What is ACCESS?' of 'How do I get onto Anvil through ACCESS' What is ACCESS?

Advanced Cyberinfrastructure Coordination Ecosystem: Services & Support (ACCESS) is an NSF-funded program that manages access to the national research cyberinfrastructure (CI) resources. Any researcher who seeks to use one of these CI resources must follow ACCESS processes to get onto these resources.

Link to section 'What resources are available via ACCESS?' of 'How do I get onto Anvil through ACCESS' What resources are available via ACCESS?

ACCESS coordinates a diverse set of resources including Anvil and other traditional HPC resources suited for resource-intensive CPU workloads, modern accelerator-based systems (e.g., GPU), as well as cloud resources. Anvil provides both CPU and GPU resources as part of ACCESS. A comprehensive list of all the ACCESS-managed resources can be found here along with their descriptions and ideal workloads: https://allocations.access-ci.org/resources

Link to section 'How do I request access to a resource?' of 'How do I get onto Anvil through ACCESS' How do I request access to a resource?

The process of getting onto these resources is broadly:

- Sign up for an ACCESS account (if you don’t have one already) at https://allocations.access-ci.org.

- Prepare an allocation request with details of your proposed computational workflows (science, software needs), resource requirements, and a short CV. See the individual “Preparing Your … Request” pages for details on what documents are required: https://allocations.access-ci.org/prepare-requests.

- Decide on which allocation tier you want to apply to (more on that below) and submit the request.

Link to section 'Which ACCESS tier should I choose?' of 'How do I get onto Anvil through ACCESS' Which ACCESS tier should I choose?

As you can gather from https://allocations.access-ci.org/project-types, there are four different tiers in ACCESS. Broadly, these tiers provide increasing computational resources with corresponding stringent documentation and resource justification requirements. Furthermore, while Explore and Discover tier requests are reviewed on a rolling basis as they are submitted, Accelerate requests will be reviewed monthly and Maximize will be reviewed twice a year. The review period reflects the level of resources provided, and Explore and Discover applications are generally reviewed within a week. An important point to note is that ACCESS does not award you time on a specific computational resource (except for the Maximize tier). Users are awarded a certain number of ACCESS credits which they then exchange for time on a particular resource. Here are some guidelines on how to choose between the tiers:- If you are a graduate student, you may apply for the Explore tier with a letter from your advisor on institutional letterhead stating that the proposed work is being performed primarily by the graduate student and is separate from other funded grants or the advisor's own research.

- If you would just like to test out a resource and gather some performance data before making a large request, Explore or Discover is again the appropriate option.

- If you would like to run simulations across multiple resources to identify the one best suited for you, Discover will provide you with sufficient credits to exchange across multiple systems.

- One way of determining the appropriate tier is to determine what the credits would translate to in terms of computational resources. The exchange calculator (https://allocations.access-ci.org/exchange_calculator) can be used to calculate what a certain number of ACCESS credits translates to in terms of “core-hours” or “GPU-hours” or “node-hours” on an ACCESS resource. For example: the maximum 400,000 ACCESS credits that you may be awarded in the Explore tier translates to ~334,000 CPU core hours or ~6000 GPU hours on Anvil. Based on the scale of simulations you would like to run, you may need to choose one tier or the other.

Link to section 'What else should I know?' of 'How do I get onto Anvil through ACCESS' What else should I know?

- You may request a separate allocation for each of your research grants and the allocation can last the duration of the grant (except for the Maximize tier which only lasts for 12 months). Allocations that do not cite a grant will last for 12 months.

- Supplements are not allowed (for Explore, Discover, and Accelerate tiers), instead you will need to move to a different tier if you require more resources.

- As noted above, the exchange rates for Anvil CPU and Anvil GPU are different so be sure to check the exchange calculator.

- Be sure to include details of the simulations you would like to run and what software you would like to use. This avoids back and forth with the reviewers and also helps Anvil staff determine if your workloads are well suited to Anvil.

- When your request is approved, you only get ACCESS credits awarded. You still need to go through the step of exchanging these credits for time on Anvil. You need not use up all your credits and may also use part of your credits for time on other ACCESS resources.

- You will also need to go to the allocations page and add any users you would like to have access to these resources. Note that they will need to sign up for ACCESS accounts as well before you can add them.

- For other questions you may have, take a look at ACCESS policies here: (https://allocations.access-ci.org/allocations-policy)

Logging In

Anvil supports the SSH (Secure Shell), ThinLinc, and Open OnDemand mechanisms for logging in. The first two of these use SSH keys. If you need help creating or uploading your SSH keys, please see the Managing SSH Public Keys page for that information.

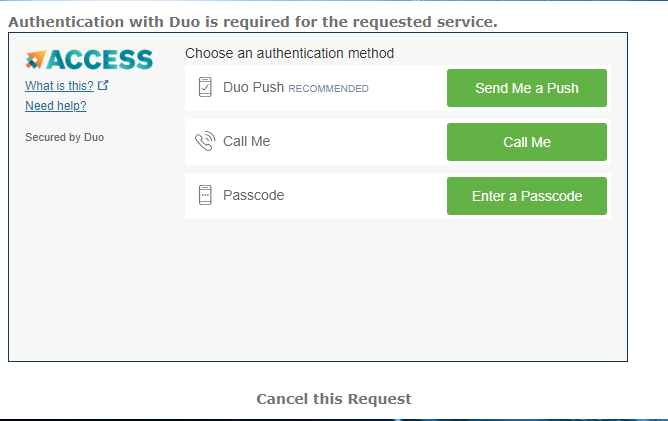

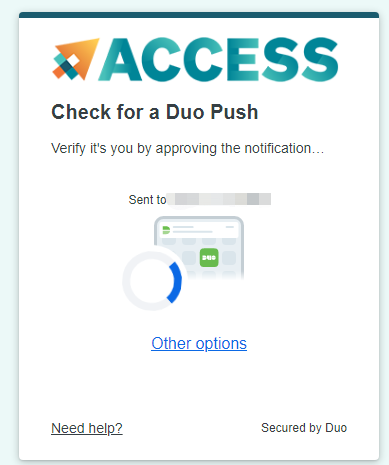

ACCESS requires that you use the ACCESS Duo service for additional authentication, you will be prompted to authenticate yourself further using Duo and your Duo client app, token, or other contact methods. Consult Manage Multi-Factor Authentication with Duo for account setup instructions.

Link to section 'With SSH' of 'Logging In' With SSH

Anvil accepts standard SSH connections with public keys-based authentication to anvil.rcac.purdue.edu using your Anvil username:

localhost$ ssh -l my-x-anvil-username anvil.rcac.purdue.edu

Please note:

- Your Anvil username is not the same as your ACCESS username (although it is derived from it). Anvil usernames look like

x-ACCESSusernameor similar, starting with anx-. - Password-based authentication is not supported on Anvil (in favor of SSH keys). There is no "Anvil password", and your ACCESS password will not be accepted by Anvil's SSH either. SSH keys can be set up from the Open OnDemand interface on Anvil

ondemand.anvil.rcac.purdue.edu. Please follow the steps in Setting up SSH keys to add your SSH key on Anvil.

When reporting SSH problems to the help desk, please execute the ssh command with the -vvv option and include the verbose output in your problem description.

Link to section 'Additional Services and Instructions' of 'Logging In' Additional Services and Instructions

Open OnDemand

Open OnDemand is an open-source HPC portal developed by the Ohio Supercomputing Center. Open OnDemand allows one to interact with HPC resources through a web browser and easily manage files, submit jobs, and interact with graphical applications directly in a browser, all with no software to install. Anvil has an instance of OnDemand available that can be accessed via ondemand.anvil.rcac.purdue.edu.

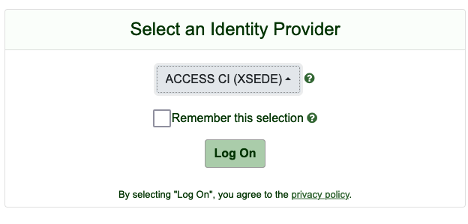

Link to section 'Logging In' of 'Open OnDemand' Logging In

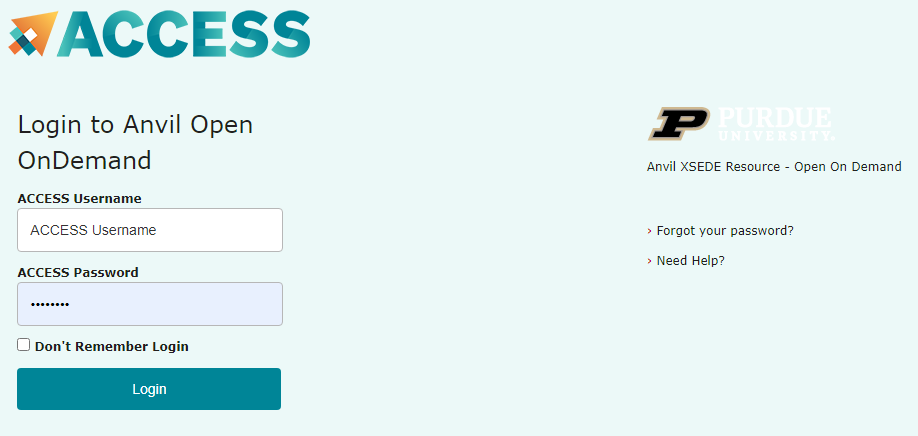

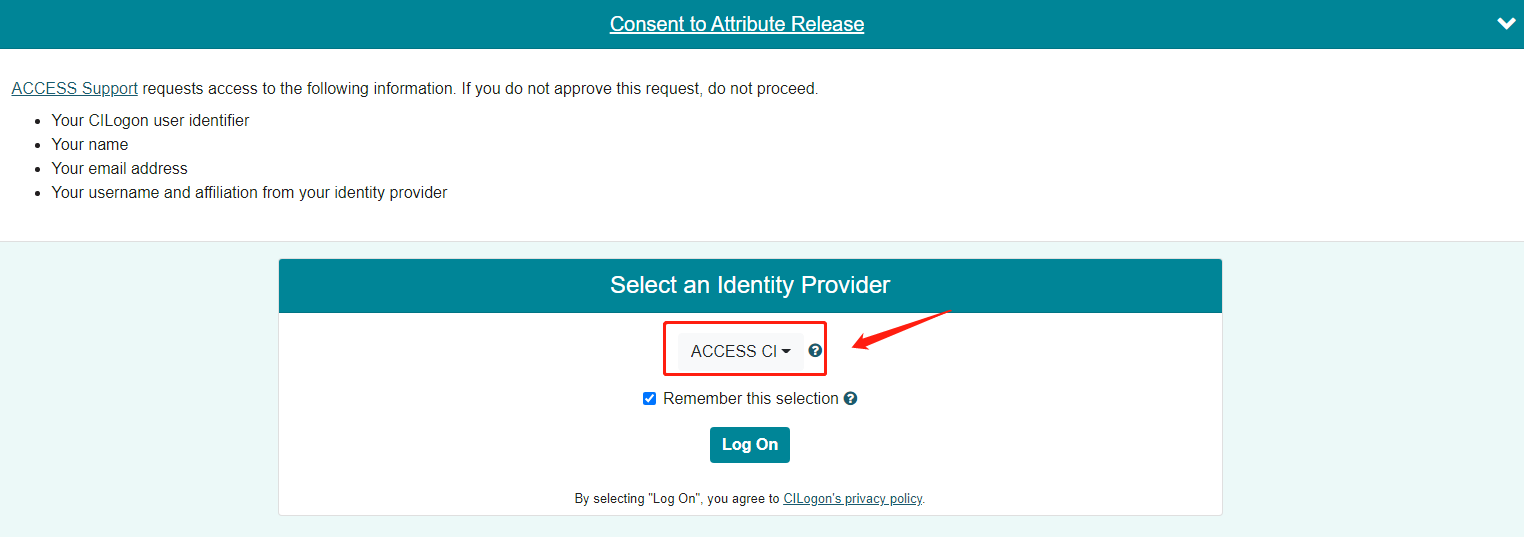

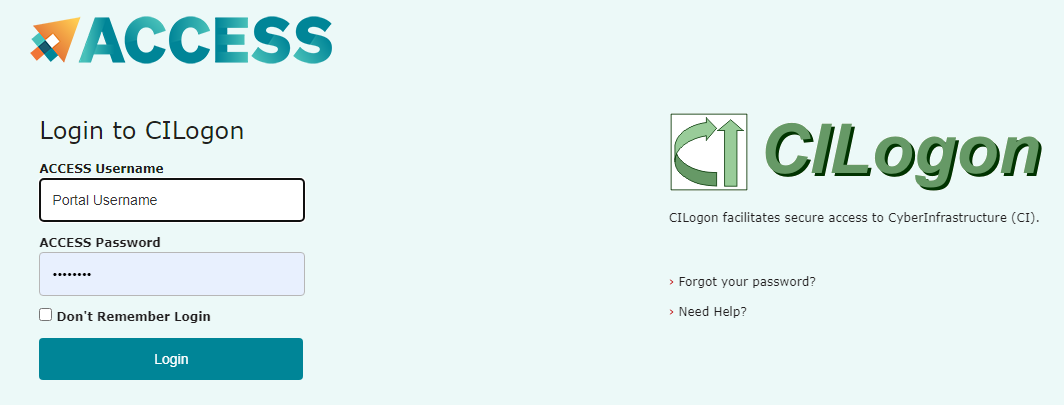

To log into the Anvil OnDemand portal:

- Navigate to ondemand.anvil.rcac.purdue.edu

- Log in using your ACCESS username and password

- ACCESS Duo authentication is required to login to OnDemand. Consult Manage Multi-Factor Authentication with Duo for account setup instructions

The Anvil team continues to refine the user interface, please reach out to us in case of any queries regarding the use of OnDemand.

SSH Keys

Link to section 'General overview' of 'SSH Keys' General overview

To connect to Anvil using SSH keys, you must follow three high-level steps:

- Generate a key pair consisting of a private and a public key on your local machine.

- Copy the public key to the cluster and append it to

$HOME/.ssh/authorized_keysfile in your account. - Test if you can ssh from your local computer to the cluster directly.

Detailed steps for different operating systems and specific SSH client software are given below.

Link to section 'Mac and Linux:' of 'SSH Keys' Mac and Linux:

-

Run

ssh-keygenin a terminal on your local machine.localhost >$ ssh-keygen Generating public/private rsa key pair. Enter file in which to save the key (localhost/.ssh/id_rsa):You may supply a filename and a passphrase for protecting your private key, but it is not mandatory. To accept the default settings, press Enter without specifying a filename.

Note: If you do not protect your private key with a passphrase, anyone with access to your computer could SSH to your account on Anvil.Created directory 'localhost/.ssh'. Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in localhost/.ssh/id_rsa. Your public key has been saved in localhost/.ssh/id_rsa.pub. The key fingerprint is: ... The key's randomart image is: ...By default, the key files will be stored in

~/.ssh/id_rsaand~/.ssh/id_rsa.pubon your local machine. -

Go to the

~/.sshfolder in your local machine andcatthe key information in theid_rsa.pub file.localhost/.ssh>$ cat id_rsa.pub ssh-rsa XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX= localhost-username@localhost -

For your first time login to Anvil, please log in to Open OnDemand ondemand.anvil.rcac.purdue.edu using your ACCESS username and password.

-

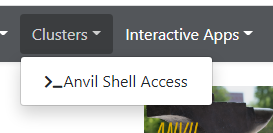

Once logged on to OnDemand, go to the

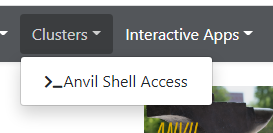

Clusterson the top toolbar. ClickAnvil Shell Accessand you will be able to see the terminal.

============================================================================= == Welcome to the Anvil Cluster == …… ============================================================================= ** DID YOU KNOW? ** …… ***************************************************************************** x-anvilusername@login04.anvil:[~] $ pwd /home/x-anvilusername -

Under the home directory on Anvil, make a

.sshdirectory usingmkdir -p ~/.sshif it does not exist.

Create a file~/.ssh/authorized_keyson the Anvil cluster and copy the contents of the public keyid_rsa.pubin your local machine into~/.ssh/authorized_keys.x-anvilusername@login04.anvil:[~] $ pwd /home/x-anvilusername x-anvilusername@login04.anvil:[~] $ cd ~/.ssh x-anvilusername@login04.anvil:[.ssh] $ vi authorized_keys # copy-paste the contents of the public key id_rsa.pub in your local machine (as shown in step 2) to authorized_keys here and save the change of authorized_keys file. Then it is all set! # -

Test the new key by SSH-ing to the server. The login should now complete without asking for a password.

localhost>$ ssh x-anvilusername@anvil.rcac.purdue.edu ============================================================================= == Welcome to the Anvil Cluster == ... ============================================================================= x-anvilusername@login06.anvil:[~] $ -

If the private key has a non-default name or location, you need to specify the key by

ssh -i my_private_key_name x-anvilusername@anvil.rcac.purdue.edu.

Link to section 'Windows:' of 'SSH Keys' Windows:

| Programs | Instructions |

|---|---|

| MobaXterm | Open a local terminal and follow Linux steps |

| Git Bash | Follow Linux steps |

| Windows 10 PowerShell | Follow Linux steps |

| Windows 10 Subsystem for Linux | Follow Linux steps |

| PuTTY | Follow steps below |

PuTTY:

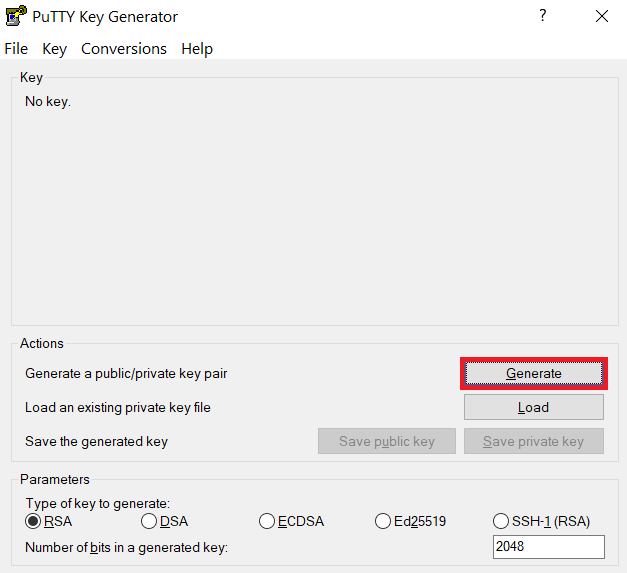

-

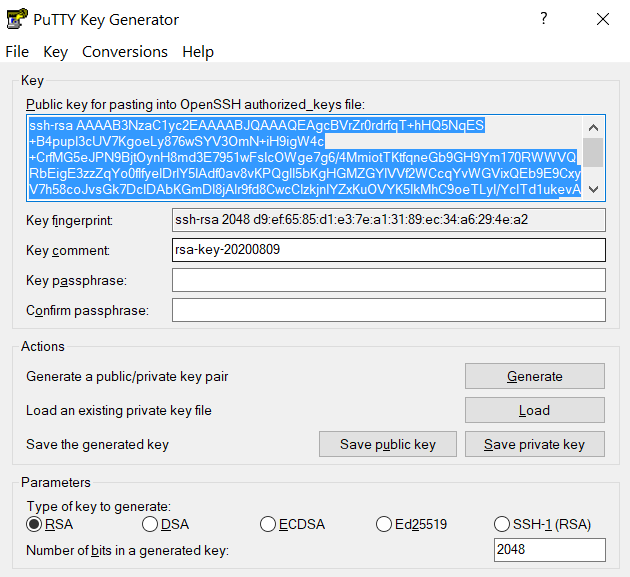

Launch PuTTYgen, keep the default key type (RSA) and length (2048-bits) and click Generate button.

The "Generate" button can be found under the "Actions" section of the PuTTY Key Generator interface. -

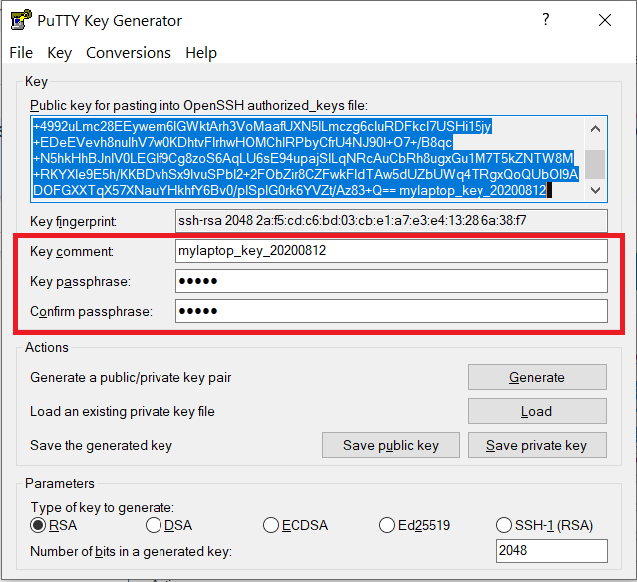

Once the key pair is generated:

Use the Save public key button to save the public key, e.g.

Documents\SSH_Keys\mylaptop_public_key.pubUse the Save private key button to save the private key, e.g.

Documents\SSH_Keys\mylaptop_private_key.ppk. When saving the private key, you can also choose a reminder comment, as well as an optional passphrase to protect your key, as shown in the image below. Note: If you do not protect your private key with a passphrase, anyone with access to your computer could SSH to your account on Anvil.

The PuTTY Key Generator form has inputs for the Key passphrase and optional reminder comment. From the menu of PuTTYgen, use the "Conversion -> Export OpenSSH key" tool to convert the private key into openssh format, e.g.

Documents\SSH_Keys\mylaptop_private_key.opensshto be used later for Thinlinc. -

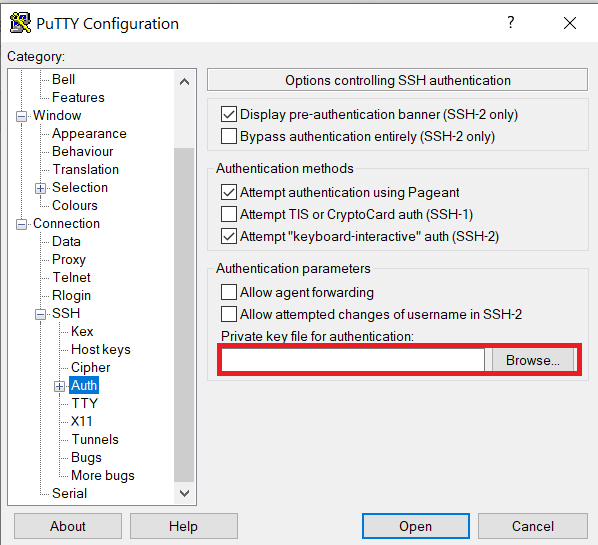

Configure PuTTY to use key-based authentication:

Launch PuTTY and navigate to "Connection -> SSH ->Auth" on the left panel, click Browse button under the "Authentication parameters" section and choose your private key, e.g. mylaptop_private_key.ppk

After clicking Connection -> SSH ->Auth panel, the "Browse" option can be found at the bottom of the resulting panel. Navigate back to "Session" on the left panel. Highlight "Default Settings" and click the "Save" button to ensure the change is in place.

-

For your first time login to Anvil, please log in to Open OnDemand ondemand.anvil.rcac.purdue.edu using your ACCESS username and password.

-

Once logged on to OnDemand, go to the

Clusterson the top toolbar. ClickAnvil Shell Accessand you will be able to see the terminal.

============================================================================= == Welcome to the Anvil Cluster == …… ============================================================================= ** DID YOU KNOW? ** …… ***************************************************************************** x-anvilusername@login04.anvil:[~] $ pwd /home/x-anvilusername -

Under the home directory on Anvil, make a

.sshdirectory usingmkdir -p ~/.sshif it does not exist.

Create a file~/.ssh/authorized_keyson the Anvil cluster and copy the contents of the public keyid_rsa.pubin your local machine into~/.ssh/authorized_keys.

and copy the contents of public key from PuTTYgen as shown below and paste it intox-anvilusername@login04.anvil:[~] $ pwd /home/x-anvilusername x-anvilusername@login04.anvil:[~] $ cd ~/.ssh x-anvilusername@login04.anvil:[.ssh] $ vi authorized_keys # copy-paste the contents of the public key id_rsa.pub in your local machine (as shown in step 2) to authorized_keys here and save the change of authorized_keys file. Then it is all set! #~/.ssh/authorized_keys. Please double-check that your text editor did not wrap or fold the pasted value (it should be one very long line).

The "Public key" will look like a long string of random letters and numbers in a text box at the top of the window. - Test by connecting to the cluster and the login should now complete without asking for a password. If you chose to protect your private key with a passphrase in step 2, you will be prompted to enter the passphrase when connecting.

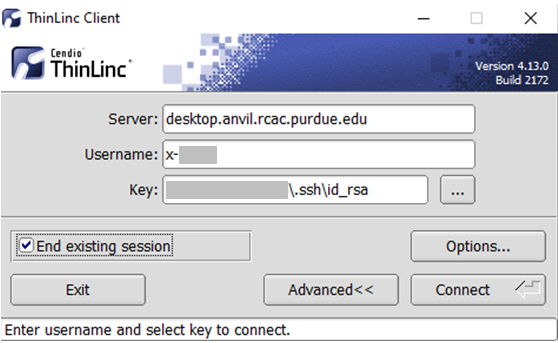

ThinLinc

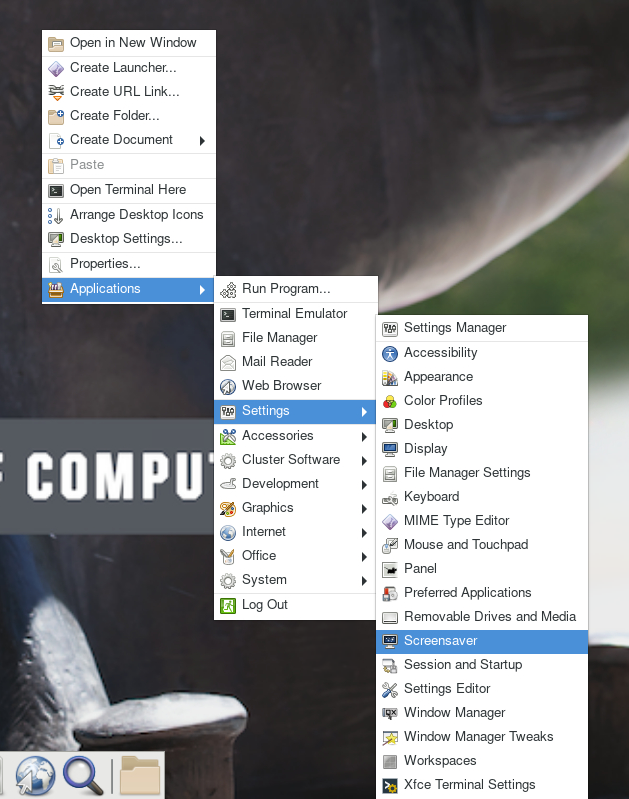

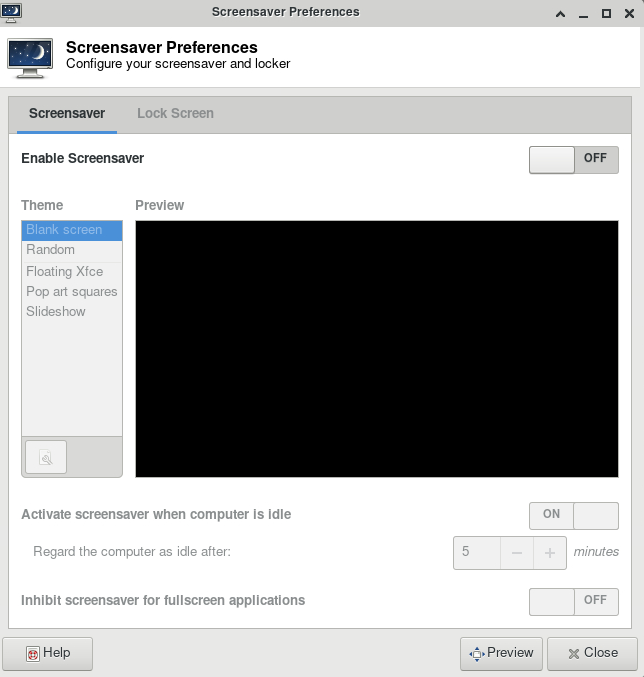

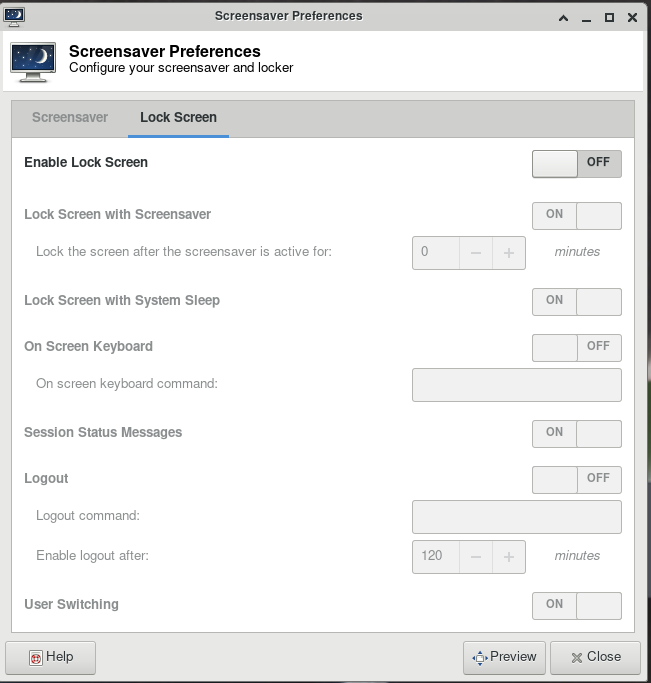

For your first time accessing Anvil using ThinLinc client, your desktop might be locked after it has been idle for more than 5 minutes. It is because in the default settings, the "screensaver" and "lock screen" are turned on. To solve this issue, please refer to the FAQs Page.

Anvil provides Cendio's ThinLinc as an alternative to running an X11 server directly on your computer. It allows you to run graphical applications or graphical interactive jobs directly on Anvil through a persistent remote graphical desktop session.

ThinLinc is a service that allows you to connect to a persistent remote graphical desktop session. This service works very well over a high latency, low bandwidth, or off-campus connection compared to running an X11 server locally. It is also very helpful for Windows users who do not have an easy to use local X11 server, as little to no setup is required on your computer.

There are two ways in which to use ThinLinc: preferably through the native client or through a web browser.

Browser-based Thinlinc access is not supported on Anvil at this moment. Please use native Thinlinc client with SSH keys.

Link to section 'Installing the ThinLinc native client' of 'ThinLinc' Installing the ThinLinc native client

The native ThinLinc client will offer the best experience especially over off-campus connections and is the recommended method for using ThinLinc. It is compatible with Windows, Mac OS X, and Linux.

- Download the ThinLinc client from the ThinLinc website.

- Start the ThinLinc client on your computer.

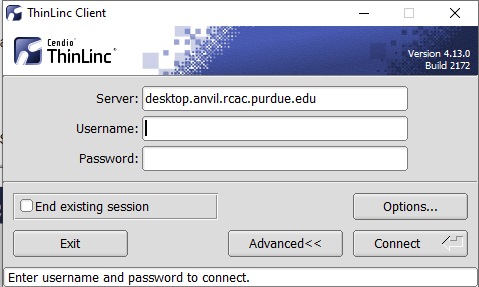

- In the client's login window, use desktop.anvil.rcac.purdue.edu as the Server and use your Anvil username

x-anvilusername. - At this moment, an SSH key is required to login to ThinLinc client. For help generating and uploading keys to the cluster, see SSH Keys section in our user guide for details.

Link to section 'Configure ThinLinc to use SSH Keys' of 'ThinLinc' Configure ThinLinc to use SSH Keys

-

To set up SSH key authentication on the ThinLinc client:

-

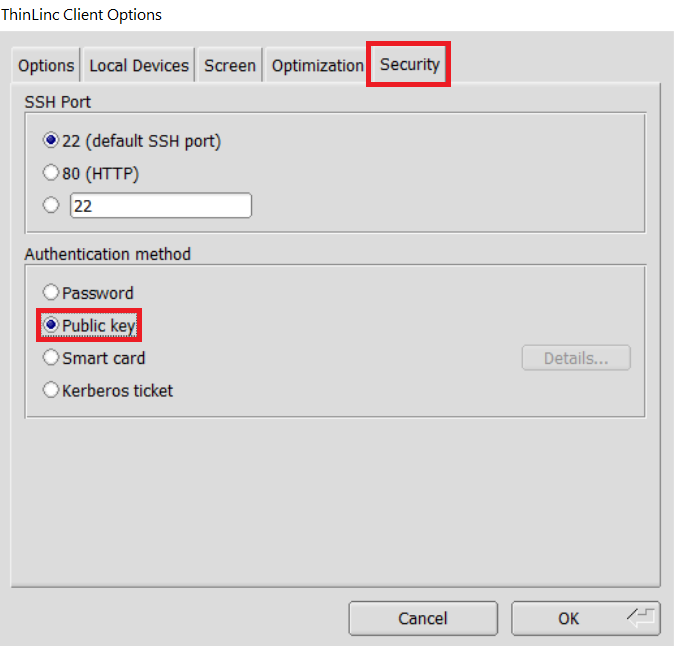

Open the Options panel, and select Public key as your authentication method on the Security tab.

The "Options..." button in the ThinLinc Client can be found towards the bottom left, above the "Connect" button. -

In the options dialog, switch to the "Security" tab and select the "Public key" radio button:

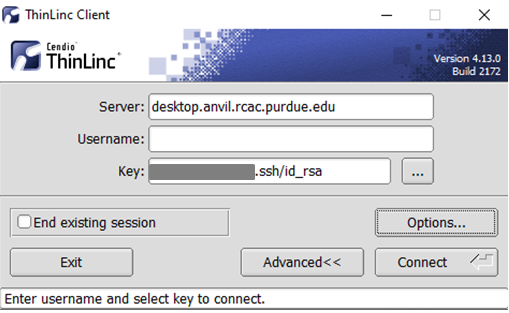

The "Security" tab found in the options dialog, will be the last of available tabs. The "Public key" option can be found in the "Authentication method" options group. - Click OK to return to the ThinLinc Client login window. You should now see a Key field in place of the Password field.

-

In the Key field, type the path to your locally stored private key or click the ... button to locate and select the key on your local system. Note: If PuTTY is used to generate the SSH Key pairs, please choose the private key in the openssh format.

The ThinLinc Client login window will now display key field instead of a password field.

-

- Click the Connect button.

- Continue to following section on connecting to Anvil from ThinLinc.

Link to section 'Connecting to Anvil from ThinLinc' of 'ThinLinc' Connecting to Anvil from ThinLinc

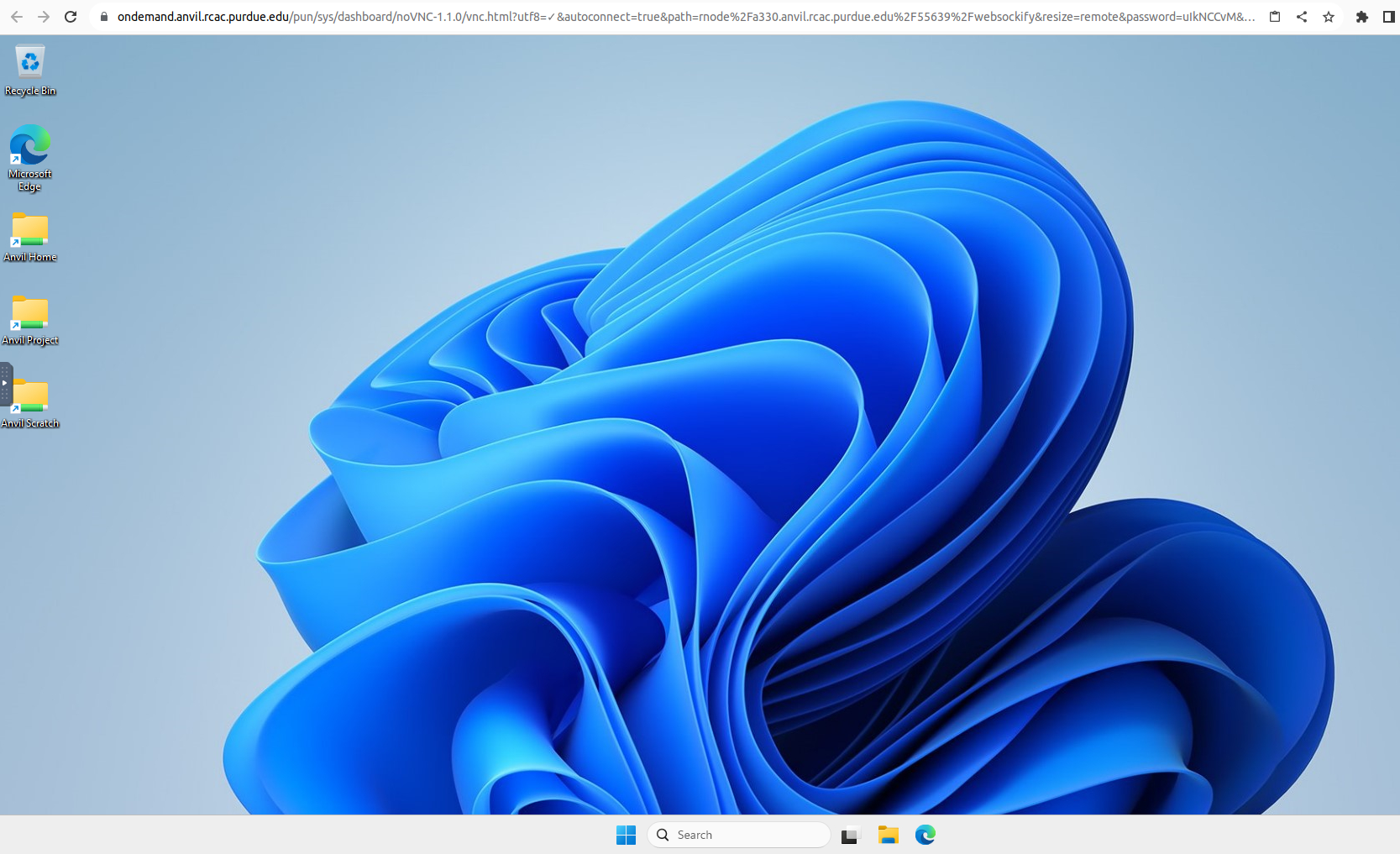

- Once logged in, you will be presented with a remote Linux desktop running directly on a cluster login node.

- Open the terminal application on the remote desktop.

- Once logged in to the Anvil login node, you may use graphical editors, debuggers, software like Matlab, or run graphical interactive jobs. For example, to test the X forwarding connection issue the following command to launch the graphical editor geany:

$ geany - This session will remain persistent even if you disconnect from the session. Any interactive jobs or applications you left running will continue running even if you are not connected to the session.

Link to section 'Tips for using ThinLinc native client' of 'ThinLinc' Tips for using ThinLinc native client

- To exit a full-screen ThinLinc session press the F8 key on your keyboard (fn + F8 key for Mac users) and click to disconnect or exit full screen.

- Full-screen mode can be disabled when connecting to a session by clicking the Options button and disabling full-screen mode from the Screen tab.

Check Allocation Usage

To keep track of the usage of the allocation by your project team, you can use mybalance:

x-anvilusername@login01:~ $ mybalance

Allocation Type SU Limit SU Usage SU Usage SU Balance

Account (account) (user)

=============== ======= ======== ========= ========= ==========

xxxxxxxxx CPU 1000.0 95.7 0.0 904.3

You can also check the allocation usage through ACCESS allocations page.

See SU accounting section for detailed description of the way SUs are charged on Anvil.

System Architecture

Link to section 'Compute Nodes' of 'System Architecture' Compute Nodes

| Attribute | Anvil CPUs | Anvil GPUs | Anvil AI |

|---|---|---|---|

| Model | AMD EPYC™ 7763 CPUs | AMD EPYC™ 7763 CPUs with 4 NVIDIA A100 GPUs | Intel Xeon Platinum 8468 CPUs with 4 NVIDIA H100 GPUs |

| CPU speed | 2.45GHz | 2.45GHz | 2.1Ghz |

| Number of nodes | 1000 | 16 | 21 |

| Cores per node | 128 | 128 | 96 |

| RAM per node | 256GB | 512GB | 1TB |

| Cache | L1d(32K), L1i(32K), L2(512K), L3(32768K) | L1d(32K), L1i(32K), L2(512K), L3(32768K) | L1d(48K), L1i(32K), L2(2048K), L3(107520K) |

| GPU memory | - | 40GB | 80GB |

| Network Interconnect | 100 Gbps Infiniband | 100 Gbps Infiniband | Dual 400Gbps Infiniband |

| Operating System | Rocky Linux 8.10 | Rocky Linux 8.10 | Rocky Linux 8.10 |

| Batch system | Slurm | Slurm | Slurm |

Link to section 'Login Nodes' of 'System Architecture' Login Nodes

| Number of Nodes | Processors per Node | Cores per Node | Memory per Node |

|---|---|---|---|

| 8 | 3rd Gen AMD EPYC™ 7543 CPU | 32 | 512 GB |

Link to section 'Specialized Nodes' of 'System Architecture' Specialized Nodes

| Sub-Cluster | Number of Nodes | Processors per Node | Cores per Node | Memory per Node |

|---|---|---|---|---|

| B | 32 | Two 3rd Gen AMD EPYC™ 7763 CPUs | 128 | 1 TB |

| G | 16 | Two 3rd Gen AMD EPYC™ 7763 CPUs + Four NVIDIA A100 GPUs | 128 | 512 GB |

| H | 21 | Dual Intel Xeon Platinum 8468 CPUs + Four NVIDIA H100 GPUs | 96 | 1 TB |

Link to section 'Network' of 'System Architecture' Network

All nodes, as well as the scratch storage system are interconnected by an oversubscribed (3:1 fat tree) HDR InfiniBand interconnect. The nominal per-node bandwidth is 100 Gbps, with message latency as low as 0.90 microseconds. The fabric is implemented as a two-stage fat tree. Nodes are directly connected to Mellanox QM8790 switches with 60 HDR100 links down to nodes and 10 links to spine switches.

Link to section 'Storage' of 'System Architecture' Storage

The Anvil local storage infrastructure provides users with their Home, Scratch, and Project areas. These file systems are mounted across all Anvil nodes and are accessible on the Anvil Globus Endpoints. In addition, Anvil Ceph offers a distributed, software-defined storage system that supports large-scale, durable, and high-throughput data access for research workflows.

The three tiers of storage are intended for different use cases and are optimized for that use. Use of data tiers for their unintended purposes is discouraged as poor performance or file system access problems may occur. These tiers have quotas in both capacity and numbers of files, so care should be taken to not exceed those. Use the 'myquota' command to see what your usage is on the various tiers.

| HOME | SCRATCH | PROJECT | |

|---|---|---|---|

| Filesystem | ZFS | GPFS | GPFS |

| Capacity | 25 GB | 100 TB | 5 TB |

| File number limit | none | 1 millions | 1 millions |

| Backups | daily snapshots | none | daily snapshots |

| Hardware |

|

Flash Tier

SAS Tier

|

|

Home is intended to hold configuration files for setting up the user's environment and some small files that are often needed to run jobs. Saving job output permanently is not really supported on this tier as the space is limited.

Scratch is intended to hold input and output data for running jobs. This tier of storage is very high performance and is very large to be able to handle a large number of jobs and large quantities of data. It is not intended for long-term storage of data, either input or output as files may only reside on Scratch for 30 days. Files older than 30 days will be eligible for an automated process which purges those files. This automated process can not be cancelled or overridden. So make provisions for moving your data to your home institution or other storage before then. New files on Scratch are written to a fast tier of NVME disk where they will reside for 7 days or if that tier is more than 90% full, at which time they are moved to a slower SAS tier for the remaining 30 days or until deleted.

CAUTION: Be aware that data on this tier is not backed up or snapshotted, so files that are accidentally erased or lost due to mechanical problems is NOT recoverable. Movement of data to a more secure tier is recommended.

Projects is intended for groups to store data that is relevant for entire groups such a common datasets used for computation or for collaboration. Allocations for this tier is by request and it is not designed to be used in actively writing job output to, but are usual for those files that are constantly in use for reading.

Anvil Ceph is intended to provide scalable, fault-tolerant, and high-throughput storage for large or persistent research data. It supports both object and block storage, making it suitable for hosting shared datasets, storing long-term research outputs, and enabling data access for containerized or cloud-integrated workflows. Ceph complements the Lustre-based storage tiers by offering durable and easily expandable storage for diverse data management needs.

| Anvil CEPH | |

|---|---|

| CPU Model | 2x Intel® Xeon® Gold 5416S Processors |

| CPU speed + cache | 2.00 GHz, 30M Cache |

| CPU Cores per node | 32 |

| Number of nodes | 12 |

| RAM per node | 256 GB |

| Network Interconnect | 100 Gbps Infiniband |

| Operating System | Rocky Linux 8.10 |

| Disks | 16 x Dell NVMe 15.36TB |

Running Jobs

Users familiar with the Linux command line may use standard job submission utilities to manage and run jobs on the Anvil compute nodes.

For GPU jobs, make sure to use --gpus-per-node argument, otherwise, your job may not run properly.

Accessing the Compute Nodes

Anvil uses the Slurm Workload Manager for job scheduling and management. With Slurm, a user requests resources and submits a job to a queue. The system takes jobs from queues, allocates the necessary compute nodes, and executes them. While users will typically SSH to an Anvil login node to access the Slurm job scheduler, they should note that Slurm should always be used to submit their work as a job rather than run computationally intensive jobs directly on a login node. All users share the login nodes, and running anything but the smallest test job will negatively impact everyone's ability to use Anvil.

Anvil is designed to serve the moderate-scale computation and data needs of the majority of ACCESS users. Users with allocations can submit to a variety of queues with varying job size and walltime limits. Separate sets of queues are utilized for the CPU, GPU, and large memory nodes. Typically, queues with shorter walltime and smaller job size limits will feature faster turnarounds. Some additional points to be aware of regarding the Anvil queues are:

- Anvil provides a debug queue for testing and debugging codes.

- Anvil supports shared-node jobs (more than one job on a single node). Many applications are serial or can only scale to a few cores. Allowing shared nodes improves job throughput, provides higher overall system utilization and allows more users to run on Anvil.

- Anvil supports long-running jobs - run times can be extended to four days for jobs using up to 16 full nodes.

- The maximum allowable job size on Anvil is 7,168 cores. To run larger jobs, submit a consulting ticket to discuss with Anvil support.

- Shared-node queues will be utilized for managing jobs on the GPU and large memory nodes.

Job Accounting

On Anvil, the CPU nodes and GPU nodes are charged separately.Link to section 'For CPU nodes' of 'Job Accounting' For CPU nodes

The charge unit for Anvil is the Service Unit (SU). This corresponds to the equivalent use of one compute core utilizing less than approximately 2G of data in memory for one hour.

Keep in mind that your charges are based on the resources that are tied up by your job and do not necessarily reflect how the resources are used.

If you explicitly request --mem-per-cpu=2G, SLURM may allocate more resources than expected, since the default memory per core on Anvil is slightly less than 2GB (approximately 1896 MB). By requesting exactly 2GB per core, SLURM may allocate additional cores to meet the memory requirement, which can lead to higher SU charges.

Charges on jobs submitted to the shared queues are based on the number of cores and the fraction of the memory requested, whichever is larger. Jobs submitted as node-exclusive will be charged for all 128 cores, whether the resources are used or not.

Jobs submitted to the large memory nodes will be charged 4 SU per compute core (4x wholenode node charge).

Link to section 'For GPU nodes' of 'Job Accounting' For GPU nodes

1 SU corresponds to the equivalent use of one GPU utilizing less than or equal to approximately 120G of data in memory for one hour.

Each GPU nodes on Anvil have 4 GPUs and all GPU nodes are shared.

Link to section 'For file system' of 'Job Accounting' For file system

Filesystem storage is not charged.

You can use mybalance command to check your current allocation usage.

Slurm Partitions (Queues)

Anvil provides different queues with varying job sizes and walltimes. There are also limits on the number of jobs queued and running on a per-user and queue basis. Queues and limits are subject to change based on the evaluation from the Early User Program.

| Queue Name | Node Type | Max Nodes per Job | Max Cores per Job | Max Duration | Max running Jobs in Queue | Max running + submitted Jobs in Queue | Charging factor |

|---|---|---|---|---|---|---|---|

| debug | regular | 2 nodes | 256 cores | 2 hrs | 1 | 2 | 1 |

| gpu-debug | gpu | 1 node | 2 gpus | 0.5 hrs | 1 | 2 | 1 |

| wholenode | regular | 16 nodes | 2,048 cores | 96 hrs | 64 | 2500 | 1 (node-exclusive) |

| wide | regular | 56 nodes | 7,168 cores | 12 hrs | 5 | 10 | 1 (node-exclusive) |

| shared | regular | 1 node | 128 cores | 96 hrs | 1280 cores | - | 1 |

| highmem | large-memory | 1 node | 128 cores | 48 hrs | 2 | 4 | 4 |

| gpu | gpu | - | - | 48 hrs | - | - | 1 |

| ai | ai | - | - | 48 hrs | - | - | 1 |

For gpu and ai queue: max of 12 GPU in use per user and max of 32 GPU in use per allocation.

Make sure to specify the desired partition when submitting your jobs (e.g. -p wholenode). If you do not specify one, the job will be directed into the default partition (shared).

If the partition is node-exclusive (e.g. the wholenode and wide queues), even if you ask for 1 core in your job submission script, your job will get allocated an entire node and would not share this node with any other jobs. Hence, it will be charged for 128 cores' worth and squeue command would show it as 128 cores, too. See SU accounting for more details.

Link to section 'Useful tools' of 'Slurm Partitions (Queues)' Useful tools

- To display all Slurm partitions and their current usage, type

showpartitionsat the command line.x-anvilusername@login03.anvil:[~] $ showpartitions Partition statistics for cluster anvil at CURRENTTIME Partition #Nodes #CPU_cores Cores_pending Job_Nodes MaxJobTime Cores Mem/Node Name State Total Idle Total Idle Resorc Other Min Max Day-hr:mn /node (GB) wholenode up 750 0 96000 512 0 92280 1 infin infinite 128 257 standard up 750 0 96000 512 0 6753 1 infin infinite 128 257 shared:* up 250 97 32000 16395 0 4997 1 infin infinite 128 257 wide up 746 0 95488 512 0 24576 1 infin infinite 128 257 highmem up 32 19 4096 2504 0 0 1 infin infinite 128 1031 debug up 17 0 2176 113 0 128 1 infin infinite 128 257 gpu up 16 0 2048 1371 0 228 1 infin infinite 128 515 ai up 21 18 2016 1814 0 0 1 infin infinite 96 1031 gpu-debug up 16 0 2048 1371 0 0 1 infin infinite 128 515 benchmarking up 1048 116 134144 20782 0 0 1 infin infinite 128 257+ profiling up 4 0 512 0 0 0 1 infin infinite 128 257 azure up 8 0 16 0 0 0 1 infin infinite 2 7 - To show the list of available constraint feature names for different node types, type

sfeaturesat the command line.x-anvilusername@login03.anvil:[~] $ sfeatures NODELIST CPUS MEMORY AVAIL_FEATURES GRES a[000-999] 128 257400 A,a (null) b[000-031] 128 1031400 B,b,testing (null) g[000-015] 128 515400 G,g,A100 gpu:4 h[000-020] 96 1031000 H,h,H100 gpu:4 login[00-07] 32 500000 login (null) ondemand 32 90000 (null) (null) z[000-007] 2 7684 Z,z,power_azure (null)

Batch Jobs

Link to section 'Job Submission Script' of 'Batch Jobs' Job Submission Script

To submit work to a Slurm queue, you must first create a job submission file. This job submission file is essentially a simple shell script. It will set any required environment variables, load any necessary modules, create or modify files and directories, and run any applications that you need:

#!/bin/sh -l

# FILENAME: myjobsubmissionfile

# Loads Matlab and sets the application up

module load matlab

# Change to the directory from which you originally submitted this job.

cd $SLURM_SUBMIT_DIR

# Runs a Matlab script named 'myscript'

matlab -nodisplay -singleCompThread -r myscript

The standard Slurm environment variables that can be used in the job submission file are listed in the table below:

| Name | Description |

|---|---|

| SLURM_SUBMIT_DIR | Absolute path of the current working directory when you submitted this job |

| SLURM_JOBID | Job ID number assigned to this job by the batch system |

| SLURM_JOB_NAME | Job name supplied by the user |

| SLURM_JOB_NODELIST | Names of nodes assigned to this job |

| SLURM_SUBMIT_HOST | Hostname of the system where you submitted this job |

| SLURM_JOB_PARTITION | Name of the original queue to which you submitted this job |

Once your script is prepared, you are ready to submit your job.

Link to section 'Submitting a Job' of 'Batch Jobs' Submitting a Job

Once you have a job submission file, you may submit this script to SLURM using the sbatch command. Slurm will find, or wait for, available resources matching your request and run your job there.

To submit your job to one compute node with one task:

$ sbatch --nodes=1 --ntasks=1 myjobsubmissionfile

By default, each job receives 30 minutes of wall time, or clock time. If you know that your job will not need more than a certain amount of time to run, request less than the maximum wall time, as this may allow your job to run sooner. To request the 1 hour and 30 minutes of wall time:

$ sbatch -t 1:30:00 --nodes=1 --ntasks=1 myjobsubmissionfile

Each compute node in Anvil has 128 processor cores. In some cases, you may want to request multiple nodes. To utilize multiple nodes, you will need to have a program or code that is specifically programmed to use multiple nodes such as with MPI. Simply requesting more nodes will not make your work go faster. Your code must utilize all the cores to support this ability. To request 2 compute nodes with 256 tasks:

$ sbatch --nodes=2 --ntasks=256 myjobsubmissionfile

If more convenient, you may also specify any command line options to sbatch from within your job submission file, using a special form of comment:

#!/bin/sh -l

# FILENAME: myjobsubmissionfile

#SBATCH -A myallocation

#SBATCH -p queue-name # the default queue is "shared" queue

#SBATCH --nodes=1

#SBATCH --ntasks=1

#SBATCH --time=1:30:00

#SBATCH --job-name myjobname

module purge # Unload all loaded modules and reset everything to original state.

module load ...

...

module list # List currently loaded modules.

# Print the hostname of the compute node on which this job is running.

hostname

If an option is present in both your job submission file and on the command line, the option on the command line will take precedence.

After you submit your job with sbatch, it may wait in the queue for minutes, hours, or even days. How long it takes for a job to start depends on the specific queue, the available resources, and time requested, and other jobs that are already waiting in that queue. It is impossible to say for sure when any given job will start. For best results, request no more resources than your job requires.

Once your job is submitted, you can monitor the job status, wait for the job to complete, and check the job output.

Link to section 'Checking Job Status' of 'Batch Jobs' Checking Job Status

Once a job is submitted there are several commands you can use to monitor the progress of the job. To see your jobs, use the squeue -u command and specify your username.

$ squeue -u myusername

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

188 wholenode job1 myusername R 0:14 2 a[010-011]

189 wholenode job2 myusername R 0:15 1 a012

To retrieve useful information about your queued or running job, use the scontrol show job command with your job's ID number.

$ scontrol show job 189

JobId=189 JobName=myjobname

UserId=myusername GroupId=mygroup MCS_label=N/A

Priority=103076 Nice=0 Account=myacct QOS=normal

JobState=RUNNING Reason=None Dependency=(null)

Requeue=1 Restarts=0 BatchFlag=0 Reboot=0 ExitCode=0:0

RunTime=00:01:28 TimeLimit=00:30:00 TimeMin=N/A

SubmitTime=2021-10-04T14:59:52 EligibleTime=2021-10-04T14:59:52

AccrueTime=Unknown

StartTime=2021-10-04T14:59:52 EndTime=2021-10-04T15:29:52 Deadline=N/A

SuspendTime=None SecsPreSuspend=0 LastSchedEval=2021-10-04T14:59:52 Scheduler=Main

Partition=wholenode AllocNode:Sid=login05:1202865

ReqNodeList=(null) ExcNodeList=(null)

NodeList=a010

BatchHost=a010

NumNodes=1 NumCPUs=1 NumTasks=1 CPUs/Task=1 ReqB:S:C:T=0:0:*:*

TRES=cpu=1,mem=257526M,node=1,billing=1

Socks/Node=* NtasksPerN:B:S:C=0:0:*:* CoreSpec=*

MinCPUsNode=1 MinMemoryNode=257526M MinTmpDiskNode=0

Features=(null) DelayBoot=00:00:00

OverSubscribe=OK Contiguous=0 Licenses=(null) Network=(null)

Command=(null)

WorkDir=/home/myusername/jobdir

Power=

JobStatelets you know if the job is Pending, Running, Completed, or Held.RunTime and TimeLimitwill show how long the job has run and its maximum time.SubmitTimeis when the job was submitted to the cluster.- The job's number of Nodes, Tasks, Cores (CPUs) and CPUs per Task are shown.

WorkDiris the job's working directory.StdOutandStderrare the locations of stdout and stderr of the job, respectively.Reasonwill show why aPENDINGjob isn't running.

You can check the predicted wait time for a queued job by running wait_time -j {your_job_id}

For historic (completed) jobs, you can use the jobinfo command. While not as detailed as scontrol output, it can also report information on jobs that are no longer active.

Link to section 'Checking Job Output' of 'Batch Jobs' Checking Job Output

Once a job is submitted, and has started, it will write its standard output and standard error to files that you can read.

SLURM catches output written to standard output and standard error - what would be printed to your screen if you ran your program interactively. Unless you specified otherwise, SLURM will put the output in the directory where you submitted the job in a file named slurm- followed by the job id, with the extension out. For example slurm-3509.out. Note that both stdout and stderr will be written into the same file, unless you specify otherwise.

If your program writes its own output files, those files will be created as defined by the program. This may be in the directory where the program was run, or may be defined in a configuration or input file. You will need to check the documentation for your program for more details.

Link to section 'Redirecting Job Output' of 'Batch Jobs' Redirecting Job Output

It is possible to redirect job output to somewhere other than the default location with the --error and --output directives:

#! /bin/sh -l

#SBATCH --output=/path/myjob.out

#SBATCH --error=/path/myjob.out

# This job prints "Hello World" to output and exits

echo "Hello World"

Link to section 'Holding a Job' of 'Batch Jobs' Holding a Job

Sometimes you may want to submit a job but not have it run just yet. You may be wanting to allow lab mates to cut in front of you in the queue - so hold the job until their jobs have started, and then release yours.

To place a hold on a job before it starts running, use the scontrol hold job command:

$ scontrol hold job myjobid

Once a job has started running it can not be placed on hold.

To release a hold on a job, use the scontrol release job command:

$ scontrol release job myjobid

Link to section 'Job Dependencies' of 'Batch Jobs' Job Dependencies

Dependencies are an automated way of holding and releasing jobs. Jobs with a dependency are held until the condition is satisfied. Once the condition is satisfied jobs only then become eligible to run and must still queue as normal.

Job dependencies may be configured to ensure jobs start in a specified order. Jobs can be configured to run after other job state changes, such as when the job starts or the job ends.

These examples illustrate setting dependencies in several ways. Typically dependencies are set by capturing and using the job ID from the last job submitted.

To run a job after job myjobid has started:

$ sbatch --dependency=after:myjobid myjobsubmissionfile

To run a job after job myjobid ends without error:

$ sbatch --dependency=afterok:myjobid myjobsubmissionfile

To run a job after job myjobid ends with errors:

$ sbatch --dependency=afternotok:myjobid myjobsubmissionfile

To run a job after job myjobid ends with or without errors:

$ sbatch --dependency=afterany:myjobid myjobsubmissionfile

To set more complex dependencies on multiple jobs and conditions:

$ sbatch --dependency=after:myjobid1:myjobid2:myjobid3,afterok:myjobid4 myjobsubmissionfile

Link to section 'Canceling a Job' of 'Batch Jobs' Canceling a Job

To stop a job before it finishes or remove it from a queue, use the scancel command:

$ scancel myjobid

Interactive Jobs

In addition to the ThinLinc and OnDemand interfaces, users can also choose to run interactive jobs on compute nodes to obtain a shell that they can interact with. This gives users the ability to type commands or use a graphical interface as if they were on a login node.

To submit an interactive job, use sinteractive to run a login shell on allocated resources.

sinteractive accepts most of the same resource requests as sbatch, so to request a login shell in the compute queue while allocating 2 nodes and 256 total cores, you might do:

$ sinteractive -p wholenode -N 2 -n 256 -A oneofyourallocations

You can check the predicted wait time for a queued job by running wait_time -j {your_job_id}

To quit your interactive job:

exit or Ctrl-D

Example Jobs

A number of example jobs are available for you to look over and adapt to your own needs. The first few are generic examples, and latter ones go into specifics for particular software packages.

Generic SLURM Jobs

The following examples demonstrate the basics of SLURM jobs, and are designed to cover common job request scenarios. These example jobs will need to be modified to run your application or code.

Serial job in shared queue

This shows an example of a job submission file of the serial programs:

#!/bin/bash

# FILENAME: myjobsubmissionfile

#SBATCH -A myallocation # Allocation name

#SBATCH --nodes=1 # Total # of nodes (must be 1 for serial job)

#SBATCH --ntasks=1 # Total # of MPI tasks (should be 1 for serial job)

#SBATCH --time=1:30:00 # Total run time limit (hh:mm:ss)

#SBATCH -J myjobname # Job name

#SBATCH -o myjob.o%j # Name of stdout output file

#SBATCH -e myjob.e%j # Name of stderr error file

#SBATCH -p shared # Queue (partition) name

#SBATCH --mail-user=useremailaddress

#SBATCH --mail-type=all # Send email to above address at begin and end of job

# Manage processing environment, load compilers and applications.

module purge

module load compilername

module load applicationname

module list

# Launch serial code

./myexecutablefiles

If you would like to submit one serial job at a time, using shared queue will only charge 1 core, instead of charging 128 cores for wholenode queue.

MPI job in wholenode queue

An MPI job is a set of processes that take advantage of multiple compute nodes by communicating with each other. OpenMPI, Intel MPI (IMPI), and MVAPICH2 are implementations of the MPI standard.

This shows an example of a job submission file of the MPI programs:

#!/bin/bash

# FILENAME: myjobsubmissionfile

#SBATCH -A myallocation # Allocation name

#SBATCH --nodes=2 # Total # of nodes

#SBATCH --ntasks=256 # Total # of MPI tasks

#SBATCH --time=1:30:00 # Total run time limit (hh:mm:ss)

#SBATCH -J myjobname # Job name

#SBATCH -o myjob.o%j # Name of stdout output file

#SBATCH -e myjob.e%j # Name of stderr error file

#SBATCH -p wholenode # Queue (partition) name

#SBATCH --mail-user=useremailaddress

#SBATCH--mail-type=all # Send email to above address at begin and end of job

# Manage processing environment, load compilers and applications.

module purge

module load compilername

module load mpilibrary

module load applicationname

module list

# Launch MPI code

mpirun -np $SLURM_NTASKS ./myexecutablefiles

SLURM can run an MPI program with the srun command. The number of processes is requested with the -n option. If you do not specify the -n option, it will default to the total number of processor cores you request from SLURM.

If the code is built with OpenMPI, it can be run with a simple srun -n command. If it is built with Intel IMPI, then you also need to add the --mpi=pmi2 option: srun --mpi=pmi2 -n 256 ./mycode.exe in this example.

Invoking an MPI program on Anvil with ./myexecutablefiles is typically wrong, since this will use only one MPI process and defeat the purpose of using MPI. Unless that is what you want (rarely the case), you should use srun which is the Slurm analog of mpirun or mpiexec, or use mpirun or mpiexec to invoke an MPI program.

OpenMP job in wholenode queue

A shared-memory job is a single process that takes advantage of a multi-core processor and its shared memory to achieve parallelization.

When running OpenMP programs, all threads must be on the same compute node to take advantage of shared memory. The threads cannot communicate between nodes.

To run an OpenMP program, set the environment variable OMP_NUM_THREADS to the desired number of threads. This should almost always be equal to the number of cores on a compute node. You may want to set to another appropriate value if you are running several processes in parallel in a single job or node.

This example shows how to submit an OpenMP program, this job asked for 2 MPI tasks, each with 64 OpenMP threads for a total of 128 CPU-cores:

#!/bin/bash

# FILENAME: myjobsubmissionfile

#SBATCH -A myallocation # Allocation name

#SBATCH --nodes=1 # Total # of nodes (must be 1 for OpenMP job)

#SBATCH --ntasks-per-node=2 # Total # of MPI tasks per node

#SBATCH --cpus-per-task=64 # cpu-cores per task (default value is 1, >1 for multi-threaded tasks)

#SBATCH --time=1:30:00 # Total run time limit (hh:mm:ss)

#SBATCH -J myjobname # Job name

#SBATCH -o myjob.o%j # Name of stdout output file

#SBATCH -e myjob.e%j # Name of stderr error file

#SBATCH -p wholenode # Queue (partition) name

#SBATCH --mail-user=useremailaddress

#SBATCH --mail-type=all # Send email to above address at begin and end of job

# Manage processing environment, load compilers and applications.

module purge

module load compilername

module load applicationname

module list

# Set thread count (default value is 1).

export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK

# Launch OpenMP code

./myexecutablefiles

The ntasks x cpus-per-task should equal to or less than the total number of CPU cores on a node.

If an OpenMP program uses a lot of memory and 128 threads use all of the memory of the compute node, use fewer processor cores (OpenMP threads) on that compute node.

Hybrid job in wholenode queue

A hybrid program combines both MPI and shared-memory to take advantage of compute clusters with multi-core compute nodes. Libraries for OpenMPI, Intel MPI (IMPI), and MVAPICH2 and compilers which include OpenMP for C, C++, and Fortran are available.

This example shows how to submit a hybrid program, this job asked for 4 MPI tasks (with 2 MPI tasks per node), each with 64 OpenMP threads for a total of 256 CPU-cores:

#!/bin/bash

# FILENAME: myjobsubmissionfile

#SBATCH -A myallocation # Allocation name

#SBATCH --nodes=2 # Total # of nodes

#SBATCH --ntasks-per-node=2 # Total # of MPI tasks per node

#SBATCH --cpus-per-task=64 # cpu-cores per task (default value is 1, >1 for multi-threaded tasks)

#SBATCH --time=1:30:00 # Total run time limit (hh:mm:ss)

#SBATCH -J myjobname # Job name

#SBATCH -o myjob.o%j # Name of stdout output file

#SBATCH -e myjob.e%j # Name of stderr error file

#SBATCH -p wholenode # Queue (partition) name

#SBATCH --mail-user=useremailaddress

#SBATCH --mail-type=all # Send email at begin and end of job

# Manage processing environment, load compilers and applications.

module purge

module load compilername

module load mpilibrary

module load applicationname

module list

# Set thread count (default value is 1).

export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK

# Launch MPI code

mpirun -np $SLURM_NTASKS ./myexecutablefiles

The ntasks x cpus-per-task should equal to or less than the total number of CPU cores on a node.

GPU job in GPU queue

The Anvil cluster nodes contain GPUs that support CUDA and OpenCL. See the detailed hardware overview for the specifics on the GPUs in Anvil or use sfeatures command to see the detailed hardware overview..

Link to section 'How to use Slurm to submit a SINGLE-node GPU program:' of 'GPU job in GPU queue' How to use Slurm to submit a SINGLE-node GPU program:

#!/bin/bash

# FILENAME: myjobsubmissionfile

#SBATCH -A myGPUallocation # allocation name

#SBATCH --nodes=1 # Total # of nodes

#SBATCH --ntasks-per-node=1 # Number of MPI ranks per node (one rank per GPU)

#SBATCH --gpus-per-node=1 # Number of GPUs per node

#SBATCH --time=1:30:00 # Total run time limit (hh:mm:ss)

#SBATCH -J myjobname # Job name

#SBATCH -o myjob.o%j # Name of stdout output file

#SBATCH -e myjob.e%j # Name of stderr error file

#SBATCH -p gpu # Queue (partition) name

#SBATCH --mail-user=useremailaddress

#SBATCH --mail-type=all # Send email to above address at begin and end of job

# Manage processing environment, load compilers, and applications.

module purge

module load modtree/gpu

module load applicationname

module list

# Launch GPU code

./myexecutablefiles

Link to section 'How to use Slurm to submit a MULTI-node GPU program:' of 'GPU job in GPU queue' How to use Slurm to submit a MULTI-node GPU program:

#!/bin/bash

# FILENAME: myjobsubmissionfile

#SBATCH -A myGPUallocation # allocation name

#SBATCH --nodes=2 # Total # of nodes

#SBATCH --ntasks-per-node=4 # Number of MPI ranks per node (one rank per GPU)

#SBATCH --gpus-per-node=4 # Number of GPUs per node

#SBATCH --time=1:30:00 # Total run time limit (hh:mm:ss)

#SBATCH -J myjobname # Job name

#SBATCH -o myjob.o%j # Name of stdout output file

#SBATCH -e myjob.e%j # Name of stderr error file

#SBATCH -p gpu # Queue (partition) name

#SBATCH --mail-user=useremailaddress

#SBATCH --mail-type=all # Send email to above address at begin and end of job

# Manage processing environment, load compilers, and applications.

module purge

module load modtree/gpu

module load applicationname

module list

# Launch GPU code

mpirun -np $SLURM_NTASKS ./myexecutablefiles

Make sure to use --gpus-per-node command, otherwise, your job may not run properly.

NGC GPU container job in GPU queue

Link to section 'What is NGC?' of 'NGC GPU container job in GPU queue' What is NGC?

Nvidia GPU Cloud (NGC) is a GPU-accelerated cloud platform optimized for deep learning and scientific computing. NGC offers a comprehensive catalogue of GPU-accelerated containers, so the application runs quickly and reliably in the high-performance computing environment. Anvil team deployed NGC to extend the cluster capabilities and to enable powerful software and deliver the fastest results. By utilizing Singularity and NGC, users can focus on building lean models, producing optimal solutions, and gathering faster insights. For more information, please visit https://www.nvidia.com/en-us/gpu-cloud and NGC software catalog.

Link to section ' Getting Started ' of 'NGC GPU container job in GPU queue' Getting Started

Users can download containers from the NGC software catalog and run them directly using Singularity instructions from the corresponding container’s catalog page.

In addition, a subset of pre-downloaded NGC containers wrapped into convenient software modules are provided. These modules wrap underlying complexity and provide the same commands that are expected from non-containerized versions of each application.

On Anvil, type the command below to see the lists of NGC containers we deployed.

$ module load modtree/gpu$ module load ngc$ module avail

Once module loaded ngc, you can run your code as with normal non-containerized applications. This section illustrates how to use SLURM to submit a job with a containerized NGC program.

#!/bin/bash

# FILENAME: myjobsubmissionfile

#SBATCH -A myallocation # allocation name

#SBATCH --nodes=1 # Total # of nodes

#SBATCH --ntasks-per-node=1 # Number of MPI ranks per node (one rank per GPU)

#SBATCH --gres=gpu:1 # Number of GPUs per node

#SBATCH --time=1:30:00 # Total run time limit (hh:mm:ss)

#SBATCH -J myjobname # Job name

#SBATCH -o myjob.o%j # Name of stdout output file

#SBATCH -e myjob.e%j # Name of stderr error file

#SBATCH -p gpu # Queue (partition) name

#SBATCH --mail-user=useremailaddress

#SBATCH --mail-type=all # Send email to above address at begin and end of job

# Manage processing environment, load compilers, container, and applications.

module purge

module load modtree/gpu

module load ngc

module load applicationname

module list

# Launch GPU code

myexecutablefiles

BioContainers Collection

Link to section 'What is BioContainers?' of 'BioContainers Collection' What is BioContainers?

The BioContainers project came from the idea of using the containers-based technologies such as Docker or rkt for bioinformatics software. Having a common and controllable environment for running software could help to deal with some of the current problems during software development and distribution. BioContainers is a community-driven project that provides the infrastructure and basic guidelines to create, manage and distribute bioinformatics containers with a special focus on omics fields such as proteomics, genomics, transcriptomics, and metabolomics. For more information, please visit BioContainers project.

Link to section ' Getting Started ' of 'BioContainers Collection' Getting Started

Users can download bioinformatic containers from the BioContainers.pro and run them directly using Singularity instructions from the corresponding container’s catalog page.

Detailed Singularity user guide is available at: sylabs.io/guides/3.8/user-guideIn addition, Anvil team provides a subset of pre-downloaded biocontainers wrapped into convenient software modules. These modules wrap underlying complexity and provide the same commands that are expected from non-containerized versions of each application.

On Anvil, type the command below to see the lists of biocontainers we deployed.

$ module purge$ module load modtree/cpu$ module load biocontainers$ module avail

Once module loaded biocontainers, you can run your code as with normal non-containerized applications. This section illustrates how to use SLURM to submit a job with a biocontainers program.

#!/bin/bash

# FILENAME: myjobsubmissionfile

#SBATCH -A myallocation # allocation name

#SBATCH --nodes=1 # Total # of nodes

#SBATCH --ntasks-per-node=1 # Number of MPI ranks per node

#SBATCH --time=1:30:00 # Total run time limit (hh:mm:ss)

#SBATCH -J myjobname # Job name

#SBATCH -o myjob.o%j # Name of stdout output file

#SBATCH -e myjob.e%j # Name of stderr error file

#SBATCH -p wholenode # Queue (partition) name

#SBATCH --mail-user=useremailaddress

#SBATCH --mail-type=all # Send email to above address at begin and end of job

# Manage processing environment, load compilers, container, and applications.

module purge

module load modtree/cpu

module load biocontainers

module load applicationname

module list

# Launch code

./myexecutablefiles Monitoring Resources

Knowing the precise resource utilization an application had during a job, such as CPU load or memory, can be incredibly useful. This is especially the case when the application isn't performing as expected.

One approach is to run a program like htop during an interactive job and keep an eye on system resources. You can get precise time-series data from nodes associated with your job using XDmod as well, online. But these methods don't gather telemetry in an automated fashion, nor do they give you control over the resolution or format of the data.

As a matter of course, a robust implementation of some HPC workload would include resource utilization data as a diagnostic tool in the event of some failure.

The monitor utility is a simple command line system resource monitoring tool for gathering such telemetry and is available as a module.

module load monitor

Complete documentation is available online at resource-monitor.readthedocs.io. A full manual page is also available for reference, man monitor.

In the context of a SLURM job you will need to put this monitoring task in the background to allow the rest of your job script to proceed. Be sure to interrupt these tasks at the end of your job.

#!/bin/bash

# FILENAME: monitored_job.sh

module load monitor

# track CPU load

monitor cpu percent >cpu-percent.log &

CPU_PID=$!

# track GPU load if any

monitor gpu percent >gpu-percent.log &

GPU_PID=$!

# your code here

# shut down the resource monitors

kill -s INT $CPU_PID $GPU_PID

A particularly elegant solution would be to include such tools in your prologue script and have the tear down in your epilogue script.

For large distributed jobs spread across multiple nodes, mpiexec can be used to gather telemetry from all nodes in the job. The hostname is included in each line of output so that data can be grouped as such. A concise way of constructing the needed list of hostnames in SLURM is to simply use srun hostname | sort -u.

#!/bin/bash

# FILENAME: monitored_job.sh

module load monitor

# track all CPUs (one monitor per host)

mpiexec -machinefile <(srun hostname | sort -u) \

monitor cpu percent --all-cores >cpu-percent.log &

CPU_PID=$!

# track all GPUs if any (one monitor per host)

mpiexec -machinefile <(srun hostname | sort -u) \

monitor gpu percent >gpu-percent.log &

GPU_PID=$!

# your code here

# shut down the resource monitors

kill -s INT $CPU_PID $GPU_PID

To get resource data in a more readily computable format, the monitor program can be told to output in CSV format with the --csv flag.

monitor cpu memory --csv >cpu-memory.csv

Or for GPU

monitor gpu memory --csv >gpu-memory.csv

For a distributed job you will need to suppress the header lines otherwise one will be created by each host.

monitor cpu memory --csv | head -1 >cpu-memory.csv

mpiexec -machinefile <(srun hostname | sort -u) \

monitor cpu memory --csv --no-header >>cpu-memory.csv

Or for GPU

monitor gpu memory --csv | head -1 >gpu-memory.csv

mpiexec -machinefile <(srun hostname | sort -u) \

monitor gpu memory --csv --no-header >>gpu-memory.csv

Specific Applications

The following examples demonstrate job submission files for some common real-world applications.

See the Generic SLURM Examples section for more examples on job submissions that can be adapted for use.

Python

Python is a high-level, general-purpose, interpreted, dynamic programming language. We suggest using Anaconda which is a Python distribution made for large-scale data processing, predictive analytics, and scientific computing. For example, to use the default Anaconda distribution:

$ module load anaconda

For a full list of available Anaconda and Python modules enter:

$ module spider anaconda

Example Python Jobs

This section illustrates how to submit a small Python job to a PBS queue.

Link to section 'Example 1: Hello world' of 'Example Python Jobs' Example 1: Hello world

Prepare a Python input file with an appropriate filename, here named myjob.in:

# FILENAME: hello.py

import string, sys

print "Hello, world!"

Prepare a job submission file with an appropriate filename, here named myjob.sub:

#!/bin/bash

# FILENAME: myjob.sub

module load anaconda

python hello.py

Basic knowledge about Batch Jobs.

Hello, world!Link to section 'Example 2: Matrix multiply' of 'Example Python Jobs' Example 2: Matrix multiply

Save the following script as matrix.py:

# Matrix multiplication program

x = [[3,1,4],[1,5,9],[2,6,5]]

y = [[3,5,8,9],[7,9,3,2],[3,8,4,6]]

result = [[sum(a*b for a,b in zip(x_row,y_col)) for y_col in zip(*y)] for x_row in x]

for r in result:

print(r)

Change the last line in the job submission file above to read:

python matrix.pyThe standard output file from this job will result in the following matrix:

[28, 56, 43, 53]

[65, 122, 59, 73]

[63, 104, 54, 60]

Link to section 'Example 3: Sine wave plot using numpy and matplotlib packages' of 'Example Python Jobs' Example 3: Sine wave plot using numpy and matplotlib packages

Save the following script as sine.py:

import numpy as np

import matplotlib

matplotlib.use('Agg')

import matplotlib.pylab as plt

x = np.linspace(-np.pi, np.pi, 201)

plt.plot(x, np.sin(x))

plt.xlabel('Angle [rad]')

plt.ylabel('sin(x)')

plt.axis('tight')

plt.savefig('sine.png')

Change your job submission file to submit this script and the job will output a png file and blank standard output and error files.

For more information about Python:

Installing Packages

We recommend installing Python packages in an Anaconda environment. One key advantage of Anaconda is that it allows users to install unrelated packages in separate self-contained environments. Individual packages can later be reinstalled or updated without impacting others.

To facilitate the process of creating and using Conda environments, we support a script (conda-env-mod) that generates a module file for an environment, as well as an optional Jupyter kernel to use this environment in a Jupyter.

You must load one of the conda modules in order to use this script.

$ module load conda

Step-by-step instructions for installing custom Python packages are presented below.

Link to section 'Step 1: Create a conda environment' of 'Installing Packages' Step 1: Create a conda environment

Users can use the conda-env-mod script to create an empty conda environment. This script needs either a name or a path for the desired environment. After the environment is created, it generates a module file for using it in future. Please note that conda-env-mod is different from the official conda-env script and supports a limited set of subcommands. Detailed instructions for using conda-env-mod can be found with the command conda-env-mod --help.

-

Example 1: Create a conda environment named mypackages in user's home directory.

$ conda-env-mod create -n mypackages -yIncluding the -y option lets you skip the prompt to install the package.

-

Example 2: Create a conda environment named mypackages at a custom location.

$ conda-env-mod create -p $PROJECT/apps/mypackages -yPlease follow the on-screen instructions while the environment is being created. After finishing, the script will print the instructions to use this environment.

... ... ... Preparing transaction: ...working... done Verifying transaction: ...working... done Executing transaction: ...working... done +---------------------------------------------------------------+ | To use this environment, load the following modules: | | module use $HOME/privatemodules | | module load conda-env/mypackages-py3.8.8 | | (then standard 'conda install' / 'pip install' / run scripts) | +---------------------------------------------------------------+ Your environment "mypackages" was created successfully.

Note down the module names, as you will need to load these modules every time you want to use this environment. You may also want to add the module load lines in your jobscript, if it depends on custom Python packages.

By default, module files are generated in your $HOME/privatemodules directory. The location of module files can be customized by specifying the -m /path/to/modules option.

- Example 3: Create a conda environment named labpackages in your group's $PROJECT folder and place the module file at a shared location for the group to use.

$ conda-env-mod create -p $PROJECT/apps/mypackages -m $PROJECT/etc/modules ... ... ... Preparing transaction: ...working... done Verifying transaction: ...working... done Executing transaction: ...working... done +----------------------------------------------------------------+ | To use this environment, load the following modules: | | module use /anvil/projects/x-mylab/etc/modules | | module load conda-env/mypackages-py3.8.8 | | (then standard 'conda install' / 'pip install' / run scripts) | +----------------------------------------------------------------+ Your environment "labpackages" was created successfully.

If you used a custom module file location, you need to run the module use command as printed by the script.

By default, only the environment and a module file are created (no Jupyter kernel). If you plan to use your environment in a Jupyter, you need to append a --jupyter flag to the above commands.

- Example 4: Create a Jupyter-enabled conda environment named labpackages in your group's $PROJECT folder and place the module file at a shared location for the group to use.

$ conda-env-mod create -p $PROJECT/apps/mypackages/labpackages -m $PROJECT/etc/modules --jupyter ... ... ... Jupyter kernel created: "Python (My labpackages Kernel)" ... ... ... Your environment "labpackages" was created successfully.

Link to section 'Step 2: Load the conda environment' of 'Installing Packages' Step 2: Load the conda environment

-

The following instructions assume that you have used conda-env-mod to create an environment named mypackages (Examples 1 or 2 above). If you used conda create instead, please use conda activate mypackages.

$ module use $HOME/privatemodules $ module load conda-env/mypackages-py3.8.8Note that the conda-env module name includes the Python version that it supports (Python 3.8.8 in this example). This is same as the Python version in the anaconda module.

-

If you used a custom module file location (Example 3 above), please use module use to load the conda-env module.

$ module use /anvil/projects/x-mylab/etc/modules $ module load conda-env/mypackages-py3.8.8

Link to section 'Step 3: Install packages' of 'Installing Packages' Step 3: Install packages

Now you can install custom packages in the environment using either conda install or pip install.

Link to section 'Installing with conda' of 'Installing Packages' Installing with conda

-

Example 1: Install OpenCV (open-source computer vision library) using conda.

$ conda install opencv -

Example 2: Install a specific version of OpenCV using conda.

$ conda install opencv=3.1.0

Link to section 'Installing with pip' of 'Installing Packages' Installing with pip

-

Example 4: Install mpi4py using pip.

$ pip install mpi4py -

Example 5: Install a specific version of mpi4py using pip.

$ pip install mpi4py==3.0.3Follow the on-screen instructions while the packages are being installed. If installation is successful, please proceed to the next section to test the packages.

Note: Do NOT run Pip with the --user argument, as that will install packages in a different location.

Link to section 'Step 4: Test the installed packages' of 'Installing Packages' Step 4: Test the installed packages

To use the installed Python packages, you must load the module for your conda environment. If you have not loaded the conda-env module, please do so following the instructions at the end of Step 1.

$ module use $HOME/privatemodules

$ module load conda-env/mypackages-py3.8.8

- Example 1: Test that OpenCV is available.

$ python -c "import cv2; print(cv2.__version__)" - Example 2: Test that mpi4py is available.

$ python -c "import mpi4py; print(mpi4py.__version__)"

If the commands are finished without errors, then the installed packages can be used in your program.

Link to section 'Additional capabilities of conda-env-mod' of 'Installing Packages' Additional capabilities of conda-env-mod

The conda-env-mod tool is intended to facilitate the creation of a minimal Anaconda environment, matching module file, and optionally a Jupyter kernel. Once created, the environment can then be accessed via familiar module load command, tuned and expanded as necessary. Additionally, the script provides several auxiliary functions to help manage environments, module files, and Jupyter kernels.

General usage for the tool adheres to the following pattern:

$ conda-env-mod help

$ conda-env-mod [optional arguments]

where required arguments are one of

- -n|--name ENV_NAME (name of the environment)

- -p|--prefix ENV_PATH (location of the environment)

and optional arguments further modify behavior for specific actions (e.g. -m to specify alternative location for generated module file).

Given a required name or prefix for an environment, the conda-env-mod script supports the following subcommands:

- create - to create a new environment, its corresponding module file and optional Jupyter kernel.

- delete - to delete existing environment along with its module file and Jupyter kernel.

- module - to generate just the module file for a given existing environment.

- kernel - to generate just the Jupyter kernel for a given existing environment (note that the environment has to be created with a --jupyter option).

- help - to display script usage help.

Using these subcommands, you can iteratively fine-tune your environments, module files and Jupyter kernels, as well as delete and re-create them with ease. Below we cover several commonly occurring scenarios.

Link to section 'Generating module file for an existing environment' of 'Installing Packages' Generating module file for an existing environment

If you already have an existing configured Anaconda environment and want to generate a module file for it, follow appropriate examples from Step 1 above, but use the module subcommand instead of the create one. E.g.

$ conda-env-mod module -n mypackages

and follow printed instructions on how to load this module. With an optional --jupyter flag, a Jupyter kernel will also be generated.

Note that if you intend to proceed with a Jupyter kernel generation (via the --jupyter flag or a kernel subcommand later), you will have to ensure that your environment has ipython and ipykernel packages installed into it. To avoid this and other related complications, we highly recommend making a fresh environment using a suitable conda-env-mod create .... --jupyter command instead.

Link to section 'Generating Jupyter kernel for an existing environment' of 'Installing Packages' Generating Jupyter kernel for an existing environment

If you already have an existing configured Anaconda environment and want to generate a Jupyter kernel file for it, you can use the kernel subcommand. E.g.

$ conda-env-mod kernel -n mypackages

This will add a "Python (My mypackages Kernel)" item to the dropdown list of available kernels upon your next time use Jupyter.

Note that generated Jupiter kernels are always personal (i.e. each user has to make their own, even for shared environments). Note also that you (or the creator of the shared environment) will have to ensure that your environment has ipython and ipykernel packages installed into it.