Bell User Guide

Bell is a Community Cluster optimized for communities running traditional, tightly-coupled science and engineering applications.

Overview of Bell

Bell is a Community Cluster optimized for communities running traditional, tightly-coupled science and engineering applications. Bell was built through a partnership with Dell and AMD over the summer of 2020. Bell consists of Dell compute nodes with two 64-core AMD EPYC 7662 "Rome" processors (128 cores per node) and 256 GB of memory. All nodes have a 100 Gbps HDR InfiniBand interconnect and a 6-year warranty.

Bell access is offered on the basis of each 64-core Rome processor, or a half-node share. To purchase access to Bell today, go to the Cluster Access Purchase page. Please subscribe to our Community Cluster Program Mailing List to stay informed on the latest purchasing developments or contact us via email at rcac-cluster-purchase@lists.purdue.edu if you have any questions.

Bell Namesake

Bell is named in honor of Clara Bell Sessions, minority advocate and Professor and Director of Continuing Education of Nursing. More information about her life and impact on Purdue is available in a Biography of Bell.

Bell Specifications

All Bell compute nodes have 128 processor cores and 100 Gbps InfiniBand interconnects.

| Front-Ends | Number of Nodes | Processors per Node | Cores per Node | Memory per Node | Retires in |
|---|---|---|---|---|---|
| | 8 | One Rome CPU @ 2.0GHz | 32 | 512 GB | 2026 |

| Sub-Cluster | Number of Nodes | Processors per Node | Cores per Node | Memory per Node | Retires in |
|---|---|---|---|---|---|
| A | 448 | Two Rome CPUs @ 2.0GHz | 128 | 256 GB | 2026 |
| B | 8 | Two Rome CPUs @ 2.0GHz | 128 | 1 TB | 2026 |
| G | 4 | Two Rome CPUs @ 2.0GHz, two MI50 AMD GPUs (32GB) | 128 | 256 GB | 2026 |
| G | 1 | Two Cascade Lake CPUs @ 2.90 GHz, six MI50 AMD GPUs (32GB) | 48 | 384 GB | 2026 |

Bell nodes run Rocky Linux 8 and use Slurm (Simple Linux Utility for Resource Management) as the batch scheduler for resource and job management. Operating system patches are applied as security needs dictate. All nodes allow for unlimited stack usage, as well as unlimited core dump size (though disk space and server quotas may still be a limiting factor).

On Bell, the following compiler and message-passing library are recommended for parallel code:

- GCC 9.3.0

- OpenMPI

This compiler and library are loaded by default. To load the recommended set again:

$ module load rcac

To verify what you loaded:

$ module list

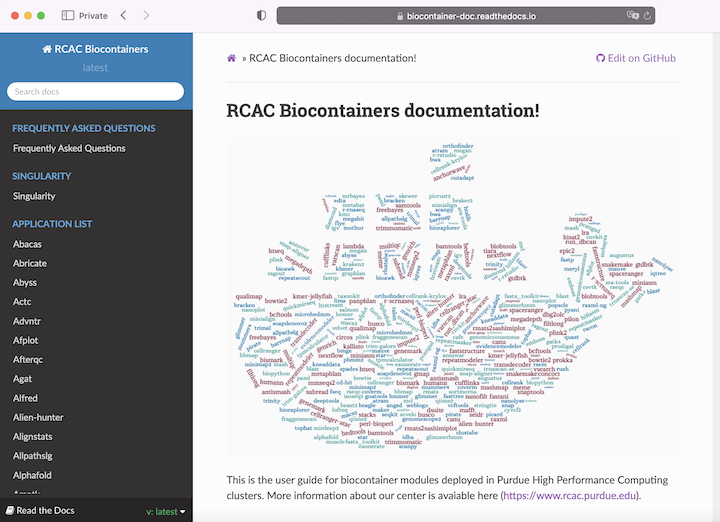
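To confirm from a script that the recommended toolchain is reachable on your PATH, a check along the following lines may help. This is a minimal sketch: the helper name report_tool is illustrative, and gcc and mpicc are assumed names for the compiler and the MPI compiler wrapper that the default modules provide.

```shell
# Report whether a command is available on PATH (hypothetical helper).
report_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1: found at $(command -v "$1")"
    else
        echo "$1: not found (try: module load rcac)"
    fi
}

# gcc and mpicc are assumed names for the compiler and the MPI wrapper.
for tool in gcc mpicc; do
    report_tool "$tool"
done
```

If either tool is reported as missing, reloading the recommended set with module load rcac and checking module list again is a reasonable first step.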

Software catalog

Clara Bell Sessions

Clara Bell Sessions was a nursing professor and director of continuing education in nursing at Purdue University. While at Purdue, she helped establish the Minority Student Nurses' Association (MSNA) and Minority Faculty Fellows program. Bell attended Indiana State University where she earned her bachelor of science in nursing, followed by Indiana University where she earned her master's and doctoral degrees in their School of Education.

In 1981, Purdue University hired Bell as a professor of nursing and she later became the director of continuing education in nursing. Bell was dedicated to improving the experience for minority students and faculty at Purdue. She was integral in the creation of the Minority Student Nurses' Association (MSNA), now Diversity in Nursing Association, and the Minority Faculty Fellows program, the precursor of the Office of Diversity and Multicultural Affairs.

Bell was also active in national organizations. In 1992 and 1993, she co-chaired the National Congress of Black Faculty Council on Research and Education, served as a cabinet member on the Human Rights Committee of the American Nurses Association, and was a charter member of the Association of Black Nursing Faculty in Higher Education. She earned numerous service awards for her work, including service awards from the Indiana Diabetes Association, Indiana State Nurses Association's board of directors, Delta Omicron Chapter of the Nursing Honor Society of Sigma Theta Tau, and Indiana Department of Aging and Community Services.

Clara Bell Sessions died on March 3, 1996 in Terre Haute, Indiana. After her death, the Black Caucus of Faculty and Staff created the annual Clara E. Bell Academic Achievement Award for the senior in nursing or health sciences with the highest grade point average. In 2013, she was posthumously awarded the Title IX Distinguished Service Award for her contributions to gender equity in education.

Citations

Archives and Special Collections. (2020, July 28). Bell, Clara E., 1934-. Purdue University. Retrieved from: https://archives.lib.purdue.edu/agents/people/3085

Klink, A. (2020, February 4). Clara E. Bell was a trailblazing African American professor of nursing. Purdue University. Retrieved from: https://www.purdue.edu/hhs/news/2020/02/clara-e-bell-was-a-trailblazing-african-american-professor-of-nursing%EF%BB%BF/

Lythgoe, D. (2001-2020). Clara E. Stewart. The Lost Creek Settlement of Vigo County, Indiana. Retrieved from: https://www.lost-creek.org/genealogy/getperson.php?personID=I72&tree=tree2

Purdue Today. (2013, April 19). Purdue Today presenting profiles on Title IX service awardees. Purdue University. Retrieved from: https://www.purdue.edu/newsroom/purduetoday/releases/2013/Q2/purdue-today-presenting-profiles-on-title-ix-service-awardees.html

Accounts on Bell

Obtaining an Account

To obtain an account, you must be part of a research group which has purchased access to Bell. Refer to the Accounts / Access page for more details on how to request access.

Outside Collaborators

A valid Purdue Career Account is required for access to any resource. If you do not currently have a valid Purdue Career Account you must have a current Purdue faculty or staff member file a Request for Privileges (R4P) before you can proceed.

Purchasing Nodes

RCAC operates a significant shared cluster computing infrastructure developed over several years through focused acquisitions using funds from grants, faculty startup packages, and institutional sources. These "community clusters" are now at the foundation of Purdue's research cyberinfrastructure.

We strongly encourage any Purdue faculty or staff with computational needs to join this growing community and enjoy the enormous benefits this shared infrastructure provides:

- Peace of Mind

RCAC system administrators take care of security patches, attempted hacks, operating system upgrades, and hardware repair so faculty and graduate students can concentrate on research.

- Low Overhead

RCAC data centers provide infrastructure such as networking, racks, floor space, cooling, and power.

- Cost Effective

RCAC works with vendors to obtain the best price for computing resources by pooling funds from different disciplines to leverage greater group purchasing power.

Through the Community Cluster Program, Purdue affiliates have invested several million dollars in computational and storage resources from Q4 2006 to the present with great success in both the research accomplished and the money saved on equipment purchases.

For more information or to purchase access to our latest cluster today, see the Purchase page. Have questions? Contact us at rcac-cluster-purchase@lists.purdue.edu to discuss.

Logging In

To submit jobs on Bell, log in to the submission host bell.rcac.purdue.edu via SSH. This submission host is actually 7 front-end hosts: bell-fe00 through bell-fe06. The login process randomly assigns one of these front-ends to each login to bell.rcac.purdue.edu.

Purdue Login

SSH

- SSH to the cluster as usual.

- When asked for a password, type your password followed by ",push".

- Your Purdue Duo client will receive a notification to approve the login.

ThinLinc

- When asked for a password, type your password followed by ",push".

- Your Purdue Duo client will receive a notification to approve the login.

- The native ThinLinc client will prompt for Duo approval twice due to the way ThinLinc works.

- The native ThinLinc client also supports key-based authentication.

Jupyterhub

The Jupyterhub service has been discontinued in favor of Open OnDemand Jupyter sessions. More information can be found here:

Passwords

Bell supports either Purdue two-factor authentication (Purdue Login) or SSH keys.

SSH Client Software

Secure Shell or SSH is a way of establishing a secure connection between two computers. It uses public-key cryptography to authenticate the user with the remote computer and to establish a secure connection. Its usual function involves logging in to a remote machine and executing commands. There are many SSH clients available for all operating systems:

Linux / Solaris / AIX / HP-UX / Unix:

- The ssh command is pre-installed. Log in using ssh myusername@bell.rcac.purdue.edu from a terminal.

Microsoft Windows:

- MobaXterm is a small, easy to use, full-featured SSH client. It includes X11 support for remote displays, SFTP capabilities, and limited SSH authentication forwarding for keys.

Mac OS X:

- The ssh command is pre-installed. You may start a local terminal window from "Applications->Utilities". Log in by typing the command ssh myusername@bell.rcac.purdue.edu.

When prompted for a password, enter your Purdue Career Account password followed by ",push". Your Purdue Duo client will then receive a notification to approve the login.

SSH Keys

General overview

To connect to Bell using SSH keys, you must follow three high-level steps:

- Generate a key pair consisting of a private and a public key on your local machine.

- Copy the public key to the cluster and append it to the $HOME/.ssh/authorized_keys file in your account.

- Test if you can ssh from your local computer to the cluster without using your Purdue password.

Detailed steps for different operating systems and specific SSH client software are given below.

Mac and Linux:

- Run ssh-keygen in a terminal on your local machine. You may supply a filename and a passphrase for protecting your private key, but it is not mandatory. To accept the default settings, press Enter without specifying a filename.

  Note: If you do not protect your private key with a passphrase, anyone with access to your computer could SSH to your account on Bell.

- By default, the key files will be stored in ~/.ssh/id_rsa and ~/.ssh/id_rsa.pub on your local machine.

- Copy the contents of the public key into $HOME/.ssh/authorized_keys on the cluster with the following command. When asked for a password, type your password followed by ",push". Your Purdue Duo client will receive a notification to approve the login.

  $ ssh-copy-id -i ~/.ssh/id_rsa.pub myusername@bell.rcac.purdue.edu

  Note: use your actual Purdue account user name.

  If your system does not have the ssh-copy-id command, use this instead:

  $ cat ~/.ssh/id_rsa.pub | ssh myusername@bell.rcac.purdue.edu "mkdir -p ~/.ssh && chmod 700 ~/.ssh && cat >> ~/.ssh/authorized_keys"

- Test the new key by SSH-ing to the server. The login should now complete without asking for a password.

- If the private key has a non-default name or location, you need to specify the key with the -i option:

  $ ssh -i my_private_key_name myusername@bell.rcac.purdue.edu
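If you use a key with a non-default name regularly, an entry in your local OpenSSH client configuration can save retyping the -i option. The sketch below writes such an entry to a demonstration file rather than to ~/.ssh/config itself. The alias bell, the demo file name, and the key path are illustrative assumptions, and myusername stands for your own Purdue user name.

```shell
# Write a sample host entry to a demo file; review it before copying
# the entry into ~/.ssh/config on your local machine.
demo_config="${TMPDIR:-/tmp}/ssh_config.demo"

cat > "$demo_config" <<'EOF'
# "bell" is an arbitrary alias chosen for this example.
Host bell
    HostName bell.rcac.purdue.edu
    User myusername
    # Hypothetical key location; use the path of your own private key.
    IdentityFile ~/.ssh/my_private_key_name
EOF

# Keep the file private to your account.
chmod 600 "$demo_config"
cat "$demo_config"
```

With an equivalent entry in ~/.ssh/config, typing ssh bell would stand in for the longer command with -i and the full host name.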

Windows:

| Programs | Instructions |
|---|---|
| MobaXterm | Open a local terminal and follow Linux steps |
| Git Bash | Follow Linux steps |
| Windows 10 PowerShell | Follow Linux steps |
| Windows 10 Subsystem for Linux | Follow Linux steps |
| PuTTY | Follow steps below |

PuTTY:

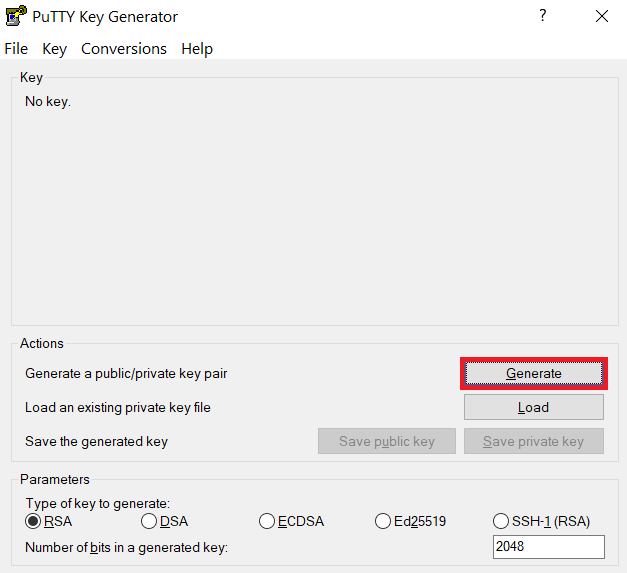

-

Launch PuTTYgen, keep the default key type (RSA) and length (2048-bits) and click Generate button.

The "Generate" button can be found under the "Actions" section of the PuTTY Key Generator interface. -

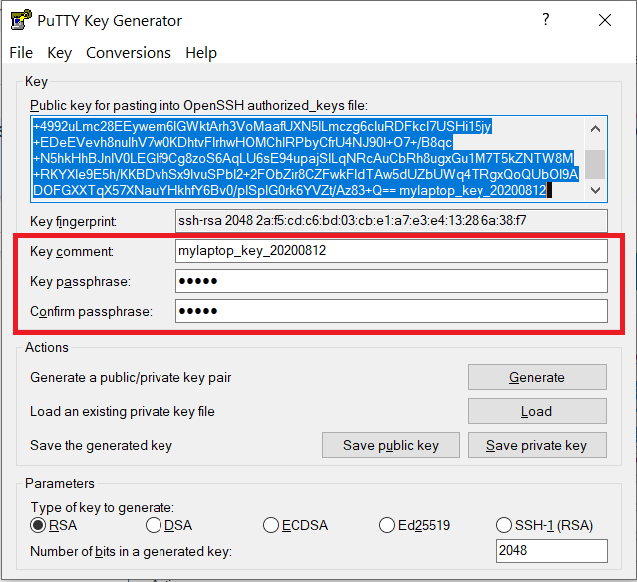

Once the key pair is generated:

Use the Save public key button to save the public key, e.g.

Documents\SSH_Keys\mylaptop_public_key.pubUse the Save private key button to save the private key, e.g.

Documents\SSH_Keys\mylaptop_private_key.ppk. When saving the private key, you can also choose a reminder comment, as well as an optional passphrase to protect your key, as shown in the image below. Note: If you do not protect your private key with a passphrase, anyone with access to your computer could SSH to your account on Bell.

The PuTTY Key Generator form has inputs for the Key passphrase and optional reminder comment. From the menu of PuTTYgen, use the "Conversion -> Export OpenSSH key" tool to convert the private key into openssh format, e.g.

Documents\SSH_Keys\mylaptop_private_key.opensshto be used later for Thinlinc. -

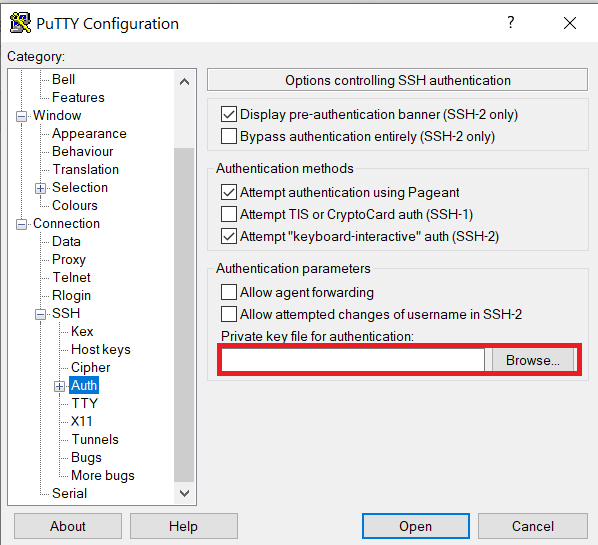

Configure PuTTY to use key-based authentication:

Launch PuTTY and navigate to "Connection -> SSH ->Auth" on the left panel, click Browse button under the "Authentication parameters" section and choose your private key, e.g. mylaptop_private_key.ppk

After clicking Connection -> SSH ->Auth panel, the "Browse" option can be found at the bottom of the resulting panel. Navigate back to "Session" on the left panel. Highlight "Default Settings" and click the "Save" button to ensure the change in place.

-

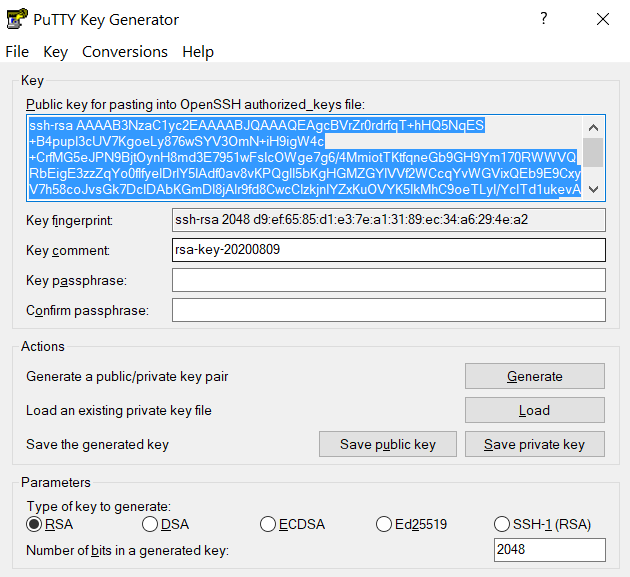

Connect to the cluster. When asked for a password, type your password followed by "

,push". Your Purdue Duo client will receive a notification to approve the login. Copy the contents of public key from PuTTYgen as shown below and paste it into$HOME/.ssh/authorized_keys. Please double-check that your text editor did not wrap or fold the pasted value (it should be one very long line).

The "Public key" will look like a long string of random letters and numbers in a text box at the top of the window. - Test by connecting to the cluster. If successful, you will not be prompted for a password or receive a Duo notification. If you protected your private key with a passphrase in step 2, you will instead be prompted to enter your chosen passphrase when connecting.

SSH X11 Forwarding

SSH supports tunneling of X11 (X-Windows). If you have an X11 server running on your local machine, you may use X11 applications on remote systems and have their graphical displays appear on your local machine. These X11 connections are tunneled and encrypted automatically by your SSH client.

Installing an X11 Server

To use X11, you will need to have a local X11 server running on your personal machine. Both free and commercial X11 servers are available for various operating systems.

Linux / Solaris / AIX / HP-UX / Unix:

- An X11 server is at the core of all graphical sessions. If you are logged in to a graphical environment on these operating systems, you are already running an X11 server.

- ThinLinc is an alternative to running an X11 server directly on your Linux computer. ThinLinc is a service that allows you to connect to a persistent remote graphical desktop session.

Microsoft Windows:

- ThinLinc is an alternative to running an X11 server directly on your Windows computer. ThinLinc is a service that allows you to connect to a persistent remote graphical desktop session.

- MobaXterm is a small, easy to use, full-featured SSH client. It includes X11 support for remote displays, SFTP capabilities, and limited SSH authentication forwarding for keys.

Mac OS X:

- X11 is available as an optional install on the Mac OS X install disks prior to 10.7/Lion. Run the installer, select the X11 option, and follow the instructions. For 10.7+ please download XQuartz.

- ThinLinc is an alternative to running an X11 server directly on your Mac computer. ThinLinc is a service that allows you to connect to a persistent remote graphical desktop session.

Enabling X11 Forwarding in your SSH Client

Once you are running an X11 server, you will need to enable X11 forwarding/tunneling in your SSH client:

- ssh: X11 tunneling should be enabled by default. To be certain it is enabled, you may use ssh -Y.

- MobaXterm: Select "New session" and "SSH." Under "Advanced SSH Settings" check the box for X11 Forwarding.

SSH will set the remote environment variable $DISPLAY to "localhost:XX.YY" when this is working correctly. If you had previously set your $DISPLAY environment variable to your local IP or hostname, you must remove any set/export/setenv of this variable from your login scripts. The environment variable $DISPLAY must be left as SSH sets it, which is to a random local port address. Setting $DISPLAY to an IP or hostname will not work.
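A quick way to see which of these situations applies is to inspect $DISPLAY after logging in. The following is a minimal sketch; the helper name check_display and the three labels it prints are illustrative, and it only looks at the shape of the value rather than testing the X11 connection itself.

```shell
# Classify a DISPLAY value: SSH-forwarded values look like localhost:10.0.
check_display() {
    case "$1" in
        "")          echo "unset" ;;
        localhost:*) echo "forwarded" ;;
        *)           echo "other" ;;
    esac
}

status=$(check_display "${DISPLAY:-}")
case "$status" in
    forwarded) echo "X11 forwarding looks active: DISPLAY=$DISPLAY" ;;
    unset)     echo "DISPLAY is not set; reconnect with ssh -Y" ;;
    other)     echo "DISPLAY=$DISPLAY does not look SSH-forwarded; check your login scripts" ;;
esac
```

A value classified as "other" inside an SSH session usually points to a login script that overrides the variable.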

ThinLinc

RCAC provides Cendio's ThinLinc as an alternative to running an X11 server directly on your computer. It allows you to run graphical applications or graphical interactive jobs directly on Bell through a persistent remote graphical desktop session.

ThinLinc is a service that allows you to connect to a persistent remote graphical desktop session. This service works very well over a high latency, low bandwidth, or off-campus connection compared to running an X11 server locally. It is also very helpful for Windows users who do not have an easy to use local X11 server, as little to no set up is required on your computer.

There are two ways in which to use ThinLinc: preferably through the native client or through a web browser.

Installing the ThinLinc native client

The native ThinLinc client will offer the best experience especially over off-campus connections and is the recommended method for using ThinLinc. It is compatible with Windows, Mac OS X, and Linux.

- Download the ThinLinc client from the ThinLinc website.

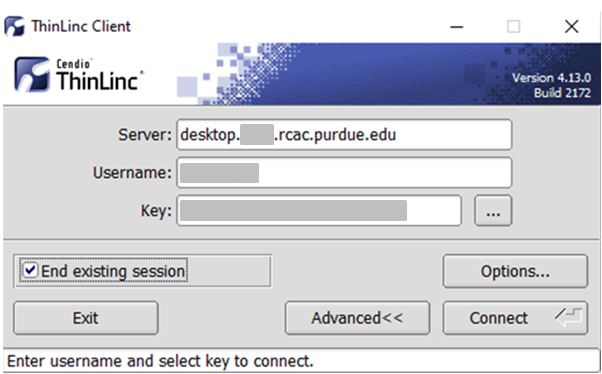

- Start the ThinLinc client on your computer.

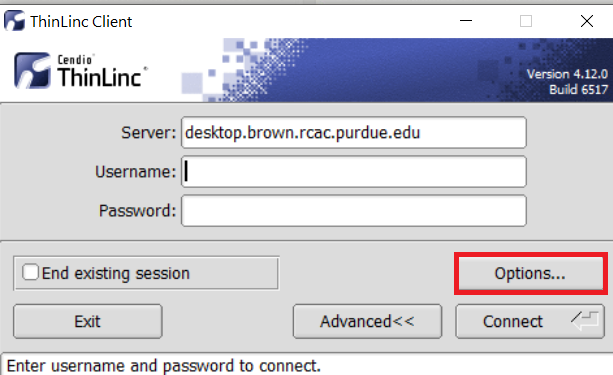

- In the client's login window, use desktop.bell.rcac.purdue.edu as the Server. Use your Purdue Career Account username and password, but append ",push" to your password.

- Click the Connect button.

- Your Purdue Login Duo will receive a notification to approve your login.

- Continue to the following section on connecting to Bell from ThinLinc.

Using ThinLinc through your web browser

The ThinLinc service can be accessed from your web browser as a convenient alternative to installing the native client. This option requires no setup and is a good choice on computers where you do not have privileges to install software. All that is required is an up-to-date web browser. Older versions of Internet Explorer may not work.

- Open a web browser and navigate to desktop.bell.rcac.purdue.edu.

- Log in with your Purdue Career Account username and password, but append ",push" to your password.

- You may safely proceed past any warning messages from your browser.

- Your Purdue Login Duo will receive a notification to approve your login.

- Continue to the following section on connecting to Bell from ThinLinc.

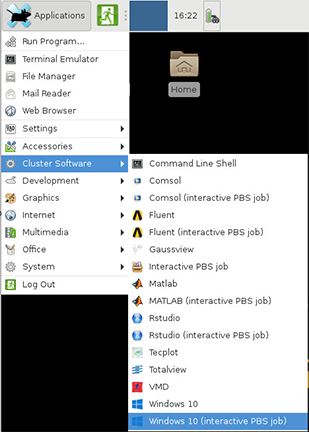

Connecting to Bell from ThinLinc

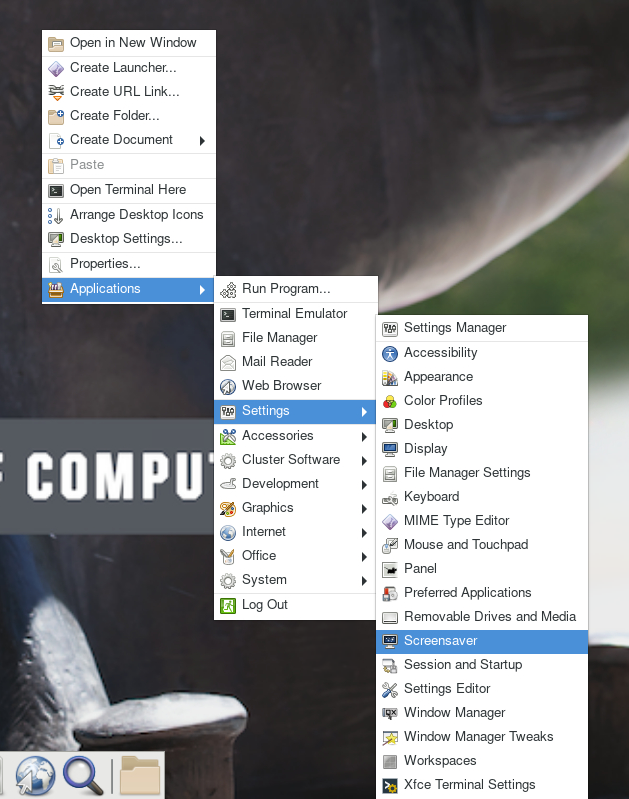

- Once logged in, you will be presented with a remote Linux desktop running directly on a cluster front-end.

- Open the terminal application on the remote desktop.

- Once logged in to the Bell head node, you may use graphical editors, debuggers, software like Matlab, or run graphical interactive jobs. For example, to test the X forwarding connection, issue the following command to launch the graphical editor gedit:

  $ gedit

- This session will remain persistent even if you disconnect from the session. Any interactive jobs or applications you left running will continue running even if you are not connected to the session.

Tips for using ThinLinc native client

- To exit a full screen ThinLinc session press the F8 key on your keyboard (fn + F8 key for Mac users) and click to disconnect or exit full screen.

- Full screen mode can be disabled when connecting to a session by clicking the Options button and disabling full screen mode from the Screen tab.

Configure ThinLinc to use SSH Keys

- The web client does NOT support public-key authentication.

-

ThinLinc native client supports the use of an SSH key pair. For help generating and uploading keys to the cluster, see SSH Keys section in our user guide for details.

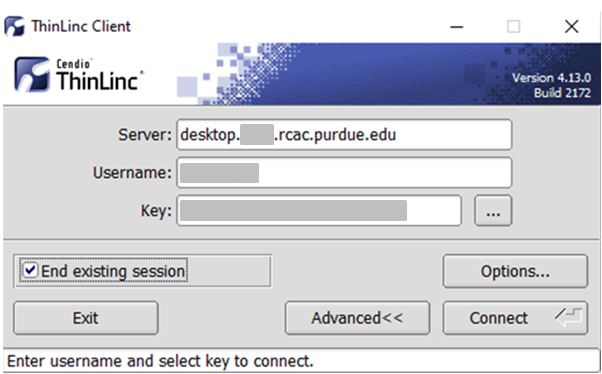

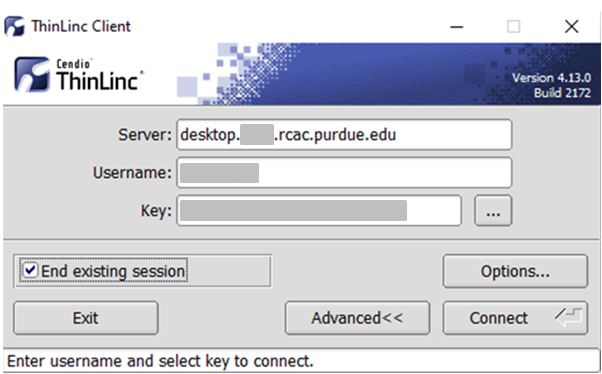

To set up SSH key authentication on the ThinLinc client:

-

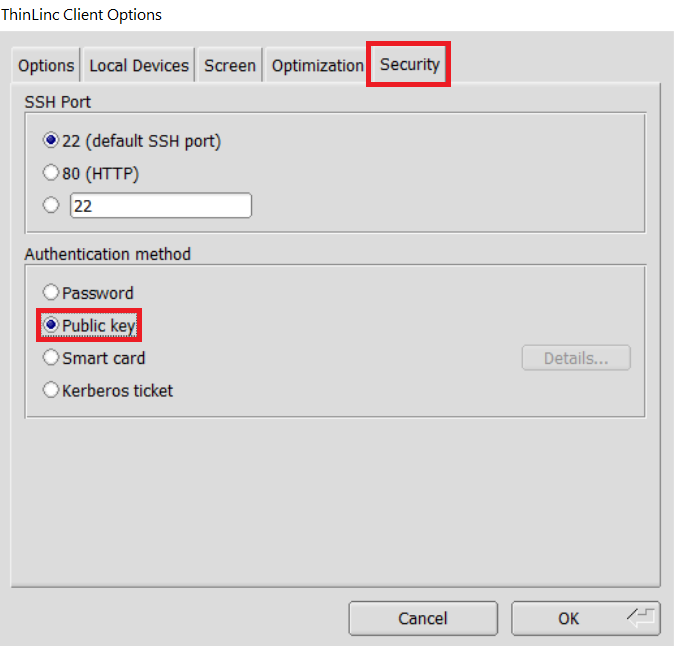

Open the Options panel, and select Public key as your authentication method on the Security tab.

The "Options..." button in the ThinLinc Client can be found towards the bottom left, above the "Connect" button. -

In the options dialog, switch to the "Security" tab and select the "Public key" radio button:

The "Security" tab found in the options dialog, will be the last of available tabs. The "Public key" option can be found in the "Authentication method" options group. - Click OK to return to the ThinLinc Client login window. You should now see a Key field in place of the Password field.

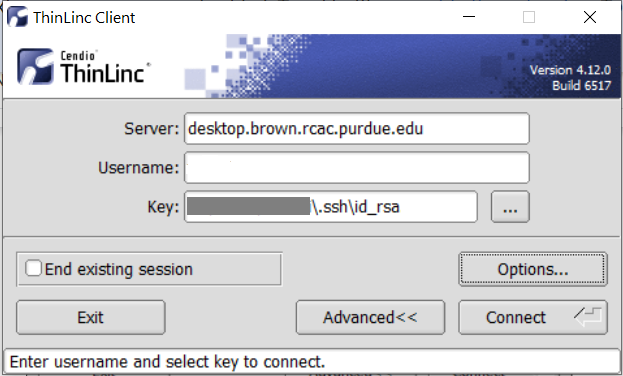

-

In the Key field, type the path to your locally stored private key or click the ... button to locate and select the key on your local system. Note: If PuTTY is used to generate the SSH Key pairs, please choose the private key in the openssh format.

The ThinLinc Client login window will now display key field instead of a password field.

-

RStudio Server

After Aug 22, 2020, the workbench cluster will NOT support RStudio Server. Please see how to launch RStudio on Bell.

File Storage and Transfer

Learn more about file storage and transfer for Bell.

Archive and Compression

There are several options for archiving and compressing groups of files or directories. The most commonly used options are:

tar

See the official documentation for tar for more information.

Saves many files together into a single archive file, and restores individual files from the archive. Includes automatic archive compression/decompression options and special features for incremental and full backups.

Examples:

(list contents of archive somefile.tar)

$ tar tvf somefile.tar

(extract contents of somefile.tar)

$ tar xvf somefile.tar

(extract contents of gzipped archive somefile.tar.gz)

$ tar xzvf somefile.tar.gz

(extract contents of bzip2 archive somefile.tar.bz2)

$ tar xjvf somefile.tar.bz2

(archive all ".c" files in current directory into one archive file)

$ tar cvf somefile.tar *.c

(archive and gzip-compress all files in a directory into one archive file)

$ tar czvf somefile.tar.gz somedirectory/

(archive and bzip2-compress all files in a directory into one archive file)

$ tar cjvf somefile.tar.bz2 somedirectory/

Other arguments for tar can be explored by using the man tar command.
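The commands above can be combined into a quick round trip that confirms an archive restores exactly what went into it. This is a minimal sketch that works in a throwaway directory; the file names a.txt and b.txt and the restore directory are placeholders chosen for illustration.

```shell
# Work in a throwaway directory so none of your real files are touched.
workdir=$(mktemp -d)
mkdir -p "$workdir/somedirectory" "$workdir/restore"
echo "alpha" > "$workdir/somedirectory/a.txt"
echo "beta"  > "$workdir/somedirectory/b.txt"

# Create a gzip-compressed archive; -C makes member paths relative to $workdir.
tar czf "$workdir/somefile.tar.gz" -C "$workdir" somedirectory

# List the contents without extracting.
tar tzf "$workdir/somefile.tar.gz"

# Extract into a separate directory and compare against the original.
tar xzf "$workdir/somefile.tar.gz" -C "$workdir/restore"
if diff -r "$workdir/somedirectory" "$workdir/restore/somedirectory"; then
    roundtrip="ok"
else
    roundtrip="mismatch"
fi
echo "round trip: $roundtrip"
```

Checking an archive this way before deleting the originals is a cheap safeguard, especially for data headed to long-term storage.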

gzip

The standard compression system for all GNU software.

Examples:

(compress file somefile - also removes uncompressed file)

$ gzip somefile

(uncompress file somefile.gz - also removes compressed file)

$ gunzip somefile.gz
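Because gzip removes the uncompressed file by default, it can be useful to write the compressed data to a separate file instead. The sketch below shows one way to do that with the -c option, using a generated sample file in a temporary directory; the file contents are arbitrary.

```shell
workdir=$(mktemp -d)

# Generate a small, highly compressible sample file.
i=0
while [ "$i" -lt 1000 ]; do
    echo "sample line"
    i=$((i + 1))
done > "$workdir/somefile"

# -c writes the compressed data to stdout, so the original file is kept.
gzip -c "$workdir/somefile" > "$workdir/somefile.gz"

# Verify the compressed file's integrity, then compare sizes in bytes.
gzip -t "$workdir/somefile.gz" && echo "integrity check passed"
orig_size=$(wc -c < "$workdir/somefile")
gz_size=$(wc -c < "$workdir/somefile.gz")
echo "original: $orig_size bytes, compressed: $gz_size bytes"
```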

bzip2

See the official documentation for bzip2 for more information.

Strong, lossless data compressor based on the Burrows-Wheeler transform. Stronger compression than gzip.

Examples:

(compress file somefile - also removes uncompressed file)

$ bzip2 somefile

(uncompress file somefile.bz2 - also removes compressed file)

$ bunzip2 somefile.bz2

There are several other, less commonly used, options available as well:

- zip

- 7zip

- xz

Storage Environment Variables

Several environment variables are automatically defined for you to help you manage your storage. Use environment variables instead of actual paths whenever possible to avoid problems if the specific paths to any of these change.

| Name | Description |
|---|---|
| HOME | /home/myusername |
| PWD | path to your current directory |
| RCAC_SCRATCH | /scratch/bell/myusername |

By convention, environment variable names are all uppercase. You may use them on the command line or in any scripts in place of and in combination with hard-coded values:

$ ls $HOME

...

$ ls $RCAC_SCRATCH/myproject

...

To find the value of any environment variable:

$ echo $RCAC_SCRATCH

/scratch/bell/myusername

To list the values of all environment variables:

$ env

USER=myusername

HOME=/home/myusername

RCAC_SCRATCH=/scratch/bell/myusername

...

You may create or overwrite an environment variable. To pass (export) the value of a variable in bash:

$ export MYPROJECT=$RCAC_SCRATCH/myproject

To assign a value to an environment variable in either tcsh or csh:

$ setenv MYPROJECT value
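In a bash script, the same idea might look like the following minimal sketch. The fallback to a temporary directory is an assumption added so the snippet also runs on machines where $RCAC_SCRATCH is not defined, and myproject is a placeholder directory name.

```shell
# Use $RCAC_SCRATCH when it is defined (as on Bell); otherwise fall back to
# a temporary directory so this sketch also runs elsewhere.
scratch_root="${RCAC_SCRATCH:-$(mktemp -d)}"

# "myproject" is a placeholder directory name.
export MYPROJECT="$scratch_root/myproject"
mkdir -p "$MYPROJECT"

echo "Project directory: $MYPROJECT"
```

Building paths from the variable rather than from a literal /scratch/bell/... string keeps the script working if the underlying path changes.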

Storage Options

File storage options on RCAC systems include long-term storage (home directories, depot, Fortress) and short-term storage (scratch directories, /tmp directory). Each option has different performance and intended uses, and some options vary from system to system as well. Daily snapshots of home directories are provided for a limited time for accidental deletion recovery. Scratch directories and temporary storage are not backed up and old files are regularly purged from scratch and /tmp directories. More details about each storage option appear below.

Home Directory

Home directories are provided for long-term file storage. Each user has one home directory. You should use your home directory for storing important program files, scripts, input data sets, critical results, and frequently used files. You should store infrequently used files on Fortress. Your home directory becomes your current working directory, by default, when you log in.

Your home directory physically resides on a dedicated storage system accessible only from Bell. To find the path to your home directory, first log in, then immediately enter the following:

$ pwd

/home/myusername

Or from any subdirectory:

$ echo $HOME

/home/myusername

Please note that your Bell home directory and its contents are exclusive to the Bell cluster, including its front-end hosts and compute nodes. This home directory is not available on any other RCAC machine. There is no automatic copying or synchronization between home directories, but at your discretion you can manually copy all or parts of your main home directory to Bell using one of the suggested methods.

Your home directory has a quota limiting the total size of files you may store within. For more information, refer to the Storage Quotas / Limits Section.

Lost File Recovery

Nightly snapshots for 7 days, weekly snapshots for 4 weeks, and monthly snapshots for 3 months are kept. This means you will find snapshots from the last 7 nights, the last 4 Sundays, and the first day of each of the last 3 months. Files are available going back between two and three months, depending on how long ago the most recent first of the month was. Snapshots beyond this are not kept. For additional security, you should store another copy of your files on more permanent storage, such as the Fortress HPSS Archive.

Performance

Your home directory is medium-performance, non-purged space suitable for tasks like sharing data, editing files, developing and building software, and many other uses.

Your home directory is not designed or intended for use as high-performance working space for running data-intensive jobs with heavy I/O demands.

Long-Term Storage

Long-term Storage or Permanent Storage is available to users on the High Performance Storage System (HPSS) archive, called Fortress. Program files, data files, and any other files which are not used often, but which must be saved, can be put in permanent storage. Fortress currently has over 10PB of capacity.

For more information about Fortress, how it works, user guides, and how to obtain an account:

Scratch Space

Scratch directories are provided for short-term file storage only. The quota of your scratch directory is much greater than the quota of your home directory. You should use your scratch directory for storing temporary input files which your job reads or for writing temporary output files which you may examine after execution of your job. You should use your home directory and Fortress for longer-term storage or for holding critical results. The hsi and htar commands provide easy-to-use interfaces into the archive and can be used to copy files into the archive interactively or even automatically at the end of your regular job submission scripts.

Files in scratch directories are not recoverable. Files in scratch directories are not backed up. If you accidentally delete a file, a disk crashes, or old files are purged, they cannot be restored.

Files in scratch directories that have not been accessed or had their content modified in 30 days are purged. Owners of these files receive a notice via email one week before removal. Be sure to regularly check your Purdue email account or set up mail forwarding to an email account you do regularly check. For more information, please refer to our Scratch File Purging Policy.
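To get an early look at files that may be approaching the purge window, a find command along these lines can help. This is a sketch, not the purge tool itself: the helper name list_stale_files and the 23-day threshold are illustrative choices, and the fallback to a temporary directory exists only so the snippet runs where $RCAC_SCRATCH is not defined. Walking a very large directory tree this way can take a while.

```shell
# Directory to scan: $RCAC_SCRATCH on Bell, or a throwaway directory elsewhere.
scan_dir="${RCAC_SCRATCH:-$(mktemp -d)}"

# Flag files a little before the 30-day window; 23 is an arbitrary choice.
days=23

# -atime/-mtime +N match files last accessed/modified more than N days ago.
# Both tests must hold, mirroring "neither accessed nor modified".
list_stale_files() {
    find "$1" -type f -atime +"$2" -mtime +"$2" -print
}

list_stale_files "$scan_dir" "$days"
```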

All users may access scratch directories on Bell. To find the path to your scratch directory:

$ findscratch

/scratch/bell/myusername

The value of variable $RCAC_SCRATCH is your scratch directory path. Use this variable in any scripts. Your actual scratch directory path may change without warning, but this variable will remain current.

$ echo $RCAC_SCRATCH

/scratch/bell/myusername

Scratch directories are specific to each cluster: only the /scratch/bell directory is available on Bell front-end and compute nodes. No other scratch directories are available on Bell.

Your scratch directory has a quota capping the total size and number of files you may store in it. For more information, refer to the section Storage Quotas / Limits.

Performance

Your scratch directory is located on a high-performance, large-capacity parallel filesystem engineered to provide work-area storage optimized for a wide variety of job types. It is designed to perform well with data-intensive computations, while scaling well to large numbers of simultaneous connections.

/tmp Directory

/tmp directories are provided for short-term file storage only. Each front-end and compute node has a /tmp directory. Your program may write temporary data to the /tmp directory of the compute node on which it is running. That data is available for as long as your program is active. Once your program terminates, that temporary data is no longer available. When used properly, /tmp may provide faster local storage to an active process than any other storage option. You should use your home directory and Fortress for longer-term storage or for holding critical results.

The /tmp directory is not backed up, and the system removes files from /tmp whenever space is low or whenever the node needs a reboot. In the event of a disk crash or file purge, files in /tmp are not recoverable. You should copy any important files to more permanent storage.
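One way to follow this advice is to give each run a private directory under /tmp and copy its output to longer-term storage before finishing. The sketch below assumes a hypothetical helper named run_in_tmp; the destination directory and the command to run are whatever you pass in.

```shell
#!/bin/bash
# Hypothetical helper: run a command inside a private directory in /tmp,
# then copy whatever it produced to a longer-lived destination directory.
run_in_tmp() {
    local dest="$1"
    shift
    local work rc=0
    work=$(mktemp -d /tmp/job.XXXXXX) || return 1
    # Run in a subshell so the caller's working directory is unchanged;
    # remember the command's exit status instead of aborting.
    ( cd "$work" && "$@" ) || rc=$?
    # Copy results out before cleaning up: /tmp contents are not recoverable.
    if mkdir -p "$dest" && cp -pr "$work"/. "$dest"/; then
        rm -rf "$work"
    else
        echo "copy failed; results left in $work" >&2
        return 1
    fi
    return "$rc"
}
```

A call such as run_in_tmp "$RCAC_SCRATCH/results" ./myprogram (with myprogram standing in for your own executable) keeps the temporary files local to the node while the program runs and returns the program's own exit status.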

Storage Quota / Limits

Some limits are imposed on your disk usage on research systems. A quota is implemented on each filesystem. Each filesystem (home directory, scratch directory, etc.) may have a different limit. If you exceed the quota, you will not be able to save new files or new data to the filesystem until you delete or move data to long-term storage.

Checking Quota

To check the current quotas of your home and scratch directories, check the My Quota page or use the myquota command:

$ myquota

Type Filesystem Size Limit Use Files Limit Use

==============================================================================

home myusername 5.0GB 25.0GB 20% - - -

scratch bell 220.7GB 100.0TB 0.22% 8k 2,000k 0.43%

The columns are as follows:

- Type: indicates home or scratch directory or your depot space.

- Filesystem: name of storage option.

- Size: sum of file sizes in bytes.

- Limit: allowed maximum on sum of file sizes in bytes.

- Use: percentage of file-size limit currently in use.

- Files: number of files and directories (not the size).

- Limit: allowed maximum on number of files and directories. It is possible, though unlikely, to reach this limit and not the file-size limit if you create a large number of very small files.

- Use: percentage of file-number limit currently in use.

If you find that you have reached your quota in either your home directory or your scratch directory, obtain estimates of your disk usage. Find the top-level directories which have a high disk usage, then study the subdirectories to discover where the heaviest usage lies.

To see in a human-readable format an estimate of the disk usage of your top-level directories in your home directory:

$ du -h --max-depth=1 $HOME

32K /home/myusername/mysubdirectory_1

529M /home/myusername/mysubdirectory_2

608K /home/myusername/mysubdirectory_3

The second directory is the largest of the three, so run du on it next to see which of its subdirectories account for the usage.

To see in a human-readable format an estimate of the disk usage of your top-level directories in your scratch file directory:

$ du -h --max-depth=1 $RCAC_SCRATCH

160K /scratch/bell/myusername

This strategy can be very helpful in figuring out the location of your largest usage. Move unneeded files and directories to long-term storage to free space in your home and scratch directories.
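The same search can be sketched as two small helpers: one lists the largest immediate subdirectories first, the other counts files and directories, which is what the file-number limit measures. The function names largest_dirs and count_entries are illustrative. Sizes are reported in kilobytes so that a plain numeric sort works, and -d 1 is the short form of --max-depth=1.

```shell
#!/bin/bash
# Hypothetical helper: show a directory and its immediate subdirectories,
# largest first. Arguments: directory (default $HOME), number of lines (default 10).
largest_dirs() {
    local dir="${1:-$HOME}"
    local count="${2:-10}"
    du -k -d 1 "$dir" 2>/dev/null | sort -rn | head -n "$count"
}

# Hypothetical helper: count files and directories at or below a directory,
# including the directory itself.
count_entries() {
    find "${1:-$HOME}" | wc -l | tr -d ' '
}
```

The first line printed by largest_dirs is the total for the directory itself; the lines after it are the subdirectories worth inspecting next.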

Increasing Quota

Home Directory

If you find you need additional disk space in your home directory, please consider archiving and compressing old files and moving them to long-term storage on the Fortress HPSS Archive, or purchasing Data Depot space for long-term storage. Unfortunately, it is not possible to increase your home directory quota beyond its current level.

Scratch Space

If you find you need additional disk space in your scratch space, please first consider archiving and compressing old files and moving them to long-term storage on the Fortress HPSS Archive. If you are unable to do so, you may ask for a quota increase by contacting support.

Sharing Files from Bell

Bell supports several methods for file sharing. Use the links below to learn more about these methods.

Sharing Data with Globus

Data on any RCAC resource can be shared with other users within Purdue or with collaborators at other institutions. Globus allows convenient sharing of data with outside collaborators. Data can be shared with collaborators' personal computers or directly with many other computing resources at other institutions.

To share files, log in to https://transfer.rcac.purdue.edu, navigate to the endpoint (collection) of your choice, and follow the instructions in the Globus documentation on how to share data.

See also RCAC Globus presentation.

File Transfer

Bell supports several methods for file transfer. Use the links below to learn more about these methods.

SCP

SCP (Secure CoPy) is a simple way of transferring files between two machines that use the SSH protocol. SCP is available as a protocol choice in some graphical file transfer programs and also as a command line program on most Linux, Unix, and Mac OS X systems. SCP can copy single files, but will also recursively copy directory contents if given a directory name.

Since Aug 17, 2020, the community clusters no longer support password-based authentication for login. Methods that can be used include two-factor authentication (Purdue Login) or SSH keys. If you do not have SSH keys installed, you will need to type your Purdue Login response into SCP's "Password" prompt.

Command-line usage:

You can transfer files both to and from Bell while initiating an SCP session on either some other computer or on Bell (in other words, directionality of connection and directionality of data flow are independent from each other). The scp command appears somewhat similar to the familiar cp command, with an extra user@host:file syntax to denote files and directories on a remote host. Either Bell or another computer can be a remote.

- Example: Initiating SCP session on some other computer (i.e. you are on some other computer, connecting to Bell):

(transfer TO Bell)

(Individual files)

$ scp sourcefile myusername@bell.rcac.purdue.edu:somedir/destinationfile

$ scp sourcefile myusername@bell.rcac.purdue.edu:somedir/

(Recursive directory copy)

$ scp -pr sourcedirectory/ myusername@bell.rcac.purdue.edu:somedir/

(transfer FROM Bell)

(Individual files)

$ scp myusername@bell.rcac.purdue.edu:somedir/sourcefile destinationfile

$ scp myusername@bell.rcac.purdue.edu:somedir/sourcefile somedir/

(Recursive directory copy)

$ scp -pr myusername@bell.rcac.purdue.edu:sourcedirectory somedir/

The -p flag is optional. When used, it will cause the transfer to preserve file attributes and permissions. The -r flag is required for recursive transfers of entire directories.

- Example: Initiating SCP session on Bell (i.e. you are on Bell, connecting to some other computer):

(transfer TO Bell)

(Individual files)

$ scp myusername@another.computer.example.com:sourcefile somedir/destinationfile

$ scp myusername@another.computer.example.com:sourcefile somedir/

(Recursive directory copy)

$ scp -pr myusername@another.computer.example.com:sourcedirectory/ somedir/

(transfer FROM Bell)

(Individual files)

$ scp somedir/sourcefile myusername@another.computer.example.com:destinationfile

$ scp somedir/sourcefile myusername@another.computer.example.com:somedir/

(Recursive directory copy)

$ scp -pr sourcedirectory myusername@another.computer.example.com:somedir/

The -p flag is optional. When used, it will cause the transfer to preserve file attributes and permissions. The -r flag is required for recursive transfers of entire directories.

Software (SCP clients)

Linux and other Unix-like systems:

- The scp command-line program should already be installed.

Microsoft Windows:

- MobaXterm: free, full-featured, graphical Windows SSH, SCP, and SFTP client.

- Command-line scp program can be installed as part of Windows Subsystem for Linux (WSL), or Git-Bash.

Mac OS X:

- The scp command-line program should already be installed. You may start a local terminal window from "Applications->Utilities".

- Cyberduck is a full-featured and free graphical SFTP and SCP client.

Globus

Globus, previously known as Globus Online, is a powerful and easy-to-use file transfer service for transferring files virtually anywhere. It works within RCAC's various research storage systems; it connects between RCAC and remote research sites running Globus; and it connects research systems to personal systems. You may use Globus to connect to your home, scratch, and Fortress storage directories. Since Globus is web-based, it works on any operating system that is connected to the internet. The Globus Personal client is available on Windows, Linux, and Mac OS X. It is primarily used as a graphical means of transfer but it can also be used over the command line.

Globus Web:

- Navigate to http://transfer.rcac.purdue.edu

- Click "Proceed" to log in with your Purdue Career Account.

- On your first login it will ask to make a connection to a Globus account. Accept the conditions.

- Now you are at the main screen. Click "File Transfer" which will bring you to a two-panel interface (if you only see one panel, you can use selector in the top-right corner to switch the view).

- You will need to select one collection and file path on one side as the source, and the second collection on the other as the destination. This can be one of several Purdue endpoints, or another University, or even your personal computer (see Personal Client section below).

The RCAC collections are as follows. A search for "Purdue" will give you several suggested results you can choose from, or you can give a more specific search.

- Home Directory storage: "Purdue Research Computing - Home Directories"; you can also start typing "Purdue" and "Home Directories" and it will suggest appropriate matches.

- Weber scratch storage: "Purdue Weber Cluster"; you can also start typing "Purdue" and "Weber" and it will suggest appropriate matches. From here you will need to navigate into the first letter of your username, and then into your username.

- Research Data Depot: "Purdue Research Computing - Data Depot", a search for "Depot" should provide appropriate matches to choose from.

- Fortress: "Purdue Fortress HPSS Archive", a search for "Fortress" should provide appropriate matches to choose from.

From here, select a file or folder in either side of the two-pane window, and then use the arrows in the top-middle of the interface to instruct Globus to move files from one side to the other. You can transfer files in either direction. You will receive an email once the transfer is completed.

Globus Personal Client setup:

Globus Connect Personal is a small software tool you can install to make your own computer a Globus endpoint on its own. It is useful if you need to transfer files via Globus to and from your computer directly.

- On the "Collections" page from earlier, click "Get Globus Connect Personal" or download a version for your operating system from the Globus Connect Personal page.

- Name this particular personal system and follow the setup prompts to create your Globus Connect Personal endpoint.

- Your personal system is now available as a collection within the Globus transfer interface.

Globus Command Line:

Globus supports a command-line interface, allowing advanced automation of your transfers.

To use the recommended standalone Globus CLI application (the globus command):

- First time use: issue the globus login command and follow instructions for initial login.

- Commands for interfacing with the CLI can be found via Using the Command Line Interface, as well as the Globus CLI Examples pages.
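As a rough sketch of the kind of automation this enables, the wrapper below submits a recursive directory transfer between two collections. It assumes the standalone globus CLI is installed and that you have already run globus login. The function name globus_copy_dir and the label text are illustrative. Collection IDs are not listed here; they can be looked up with a search such as globus endpoint search "Purdue".

```shell
#!/bin/bash
# Hypothetical wrapper around the `globus transfer` command.
# Arguments: source collection ID, source path, destination collection ID,
# destination path. The IDs are placeholders you must look up yourself.
globus_copy_dir() {
    local src_id="$1" src_path="$2" dst_id="$3" dst_path="$4"
    globus transfer --recursive --label "scripted copy" \
        "${src_id}:${src_path}" "${dst_id}:${dst_path}"
}
```

The transfer runs asynchronously on the Globus service, so the command returns as soon as the task is submitted rather than when the copy completes.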

Sharing Data with Outside Collaborators

Globus allows convenient sharing of data with outside collaborators. Data can be shared with collaborators' personal computers or directly with many other computing resources at other institutions. See the Globus documentation on how to share data.

For links to more information, please see Globus Support page and RCAC Globus presentation.

Windows Network Drive / SMB

SMB (Server Message Block), also known as CIFS, is an easy-to-use file transfer protocol that is useful for transferring files between RCAC systems and a desktop or laptop. You may use SMB to connect to your home, scratch, and Fortress storage directories. The SMB protocol is available on Windows, Linux, and Mac OS X. It is primarily used as a graphical means of transfer, but it can also be used over the command line.

Note: to access Bell through SMB file sharing, you must be on a Purdue campus network or connected through VPN.

Windows:

- Windows 7: Click Windows menu > Computer, then click Map Network Drive in the top bar

- Windows 8 & 10: Tap the Windows key, type computer, select This PC, click Computer > Map Network Drive in the top bar

- Windows 11: Tap the Windows key, type File Explorer, select This PC, click Computer > Map Network Drive in the top bar

- In the folder location, enter the following information and click Finish:

- To access your Bell home directory, enter \\home.bell.rcac.purdue.edu\bell-home.

- To access your scratch space on Bell, enter \\scratch.bell.rcac.purdue.edu\bell-scratch. Once mapped, you will be able to navigate to your scratch directory.

- Note: Use your career account login name and password when prompted. (You will not need to add ",push" nor use your Purdue Duo client.)

- Your home or scratch directory should now be mounted as a drive in the Computer window.

- If you have issues mapping the drive, check Credential Manager.

- In the Search bar, type "Credential Manager".

- Inside Credential Manager, click "Windows Credentials" and "Add New Credential".

- Servername: \\home.bell.rcac.purdue.edu

- Username: boilerad\PurdueLogin

- Then save it and try mapping again.

- If there is already an entry for the server you are trying to map, delete it.

Mac OS X:

- In the Finder, click Go > Connect to Server

- In the Server Address enter the following information and click Connect:

- To access your Bell home directory, enter smb://home.bell.rcac.purdue.edu/bell-home.

- To access your scratch space on Bell, enter smb://scratch.bell.rcac.purdue.edu/bell-scratch. Once mapped, you will be able to navigate to your scratch directory.

- Note: Use your career account login name and password when prompted. (You will not need to add ",push" nor use your Purdue Duo client.)

- Your home or scratch directory should now be mounted as a drive in the Computer window.

Linux:

- There are several graphical methods to connect in Linux depending on your desktop environment. Once you find out how to connect to a network server on your desktop environment, choose the Samba/SMB protocol and adapt the information from the Mac OS X section to connect.

- If you would like access via Samba on the command line, you may install smbclient, which will give you FTP-like access and can be used as shown below. For all the possible ways to connect, look at the Mac OS X instructions.

smbclient //home.bell.rcac.purdue.edu/bell-home -U boilerad/myusername

smbclient //scratch.bell.rcac.purdue.edu/bell-scratch -U boilerad/myusername

- Note: Use your career account login name and password when prompted. (You will not need to add ",push" nor use your Purdue Duo client.)

FTP / SFTP

FTP is not supported on any research systems because it does not allow for secure transmission of data. Use SFTP instead, as described below.

SFTP (Secure File Transfer Protocol) is a reliable way of transferring files between two machines. SFTP is available as a protocol choice in some graphical file transfer programs and also as a command-line program on most Linux, Unix, and Mac OS X systems. SFTP has more features than SCP and allows for other operations on remote files, remote directory listing, and resuming interrupted transfers. Command-line SFTP cannot recursively copy directory contents; to do so, try using SCP or a graphical SFTP client.

Since Aug 17, 2020, the community clusters no longer support password-based authentication for login. Methods that can be used include two-factor authentication (Purdue Login) or SSH keys. If you do not have SSH keys installed, you will need to type your Purdue Login response into SFTP's "Password" prompt.

Command-line usage

You can transfer files both to and from Bell while initiating an SFTP session on either some other computer or on Bell (in other words, directionality of connection and directionality of data flow are independent from each other). Once the connection is established, you use put or get subcommands between "local" and "remote" computers. Either Bell or another computer can be a remote.

- Example: Initiating SFTP session on some other computer (i.e. you are on another computer, connecting to Bell):

$ sftp myusername@bell.rcac.purdue.edu

(transfer TO Bell)

sftp> put sourcefile somedir/destinationfile

sftp> put -P sourcefile somedir/

(transfer FROM Bell)

sftp> get sourcefile somedir/destinationfile

sftp> get -P sourcefile somedir/

sftp> exit

The -P flag is optional. When used, it will cause the transfer to preserve file attributes and permissions.

- Example: Initiating SFTP session on Bell (i.e. you are on Bell, connecting to some other computer):

$ sftp myusername@another.computer.example.com

(transfer TO Bell)

sftp> get sourcefile somedir/destinationfile

sftp> get -P sourcefile somedir/

(transfer FROM Bell)

sftp> put sourcefile somedir/destinationfile

sftp> put -P sourcefile somedir/

sftp> exit

The -P flag is optional. When used, it will cause the transfer to preserve file attributes and permissions.

Software (SFTP clients)

Linux and other Unix-like systems:

- The sftp command-line program should already be installed.

Microsoft Windows:

- MobaXterm: free, full-featured, graphical Windows SSH, SCP, and SFTP client.

- Command-line sftp program can be installed as part of Windows Subsystem for Linux (WSL), or Git-Bash.

Mac OS X:

- The sftp command-line program should already be installed. You may start a local terminal window from "Applications->Utilities".

- Cyberduck is a full-featured and free graphical SFTP and SCP client.

Copying files from Purdue IT research computing home directory to Bell

The Bell home directory and its contents are specific to the Bell cluster, and are not available on other RCAC machines. For people having access to other Community Clusters and Bell, there is no automatic copying or synchronization between main and Bell home directories. At your discretion, you can manually copy all or parts of your main research computing home to Bell using one of the methods described below.

Please note that copying may fail if your research computing home directory is larger than your Bell home directory quota. Please check usage and limits before proceeding!
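The myquota command remains the authoritative check, but the comparison itself can be sketched with du. The helper name fits_in_quota is illustrative. It must be run where the source home directory is mounted, and the limit is passed in kilobytes. Treating the 25 GB home quota as 25 x 1024 x 1024 = 26214400 KB is an assumption about how the quota is counted.

```shell
#!/bin/bash
# Hypothetical helper: succeed if a directory's disk usage is at or below a
# limit. Arguments: directory, limit in kilobytes.
fits_in_quota() {
    local dir="$1" limit_kb="$2"
    local used_kb
    # du -sk prints "<kilobytes><TAB><path>"; keep only the number.
    used_kb=$(du -sk "$dir" | cut -f1) || return 2
    echo "using ${used_kb} KB of ${limit_kb} KB allowed" >&2
    [ "$used_kb" -le "$limit_kb" ]
}
```

For example, fits_in_quota "$HOME" 26214400 && echo "should fit" reports whether the current home directory is within that assumed limit.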

Complete copy

For your convenience, a custom tool copy-rcac-home is provided to simplify at-will duplication of your main research computing home directory into Bell. The tool performs a complete 1-to-1 copy using rsync -auH (with exception of a narrow subset of system-specific service files).

To use the tool, simply type copy-rcac-home in a terminal window on a Bell front-end or compute node:

$ copy-rcac-home

This script will copy entire contents of your main RCAC

home directory into your Bell cluster's $HOME.

Note: copying may fail if the size of your RCAC home directory

is larger than your quota on the Bell one (25GB).

BEFORE PROCEEDING, please run 'myquota' command on another

cluster to see your usage there and judge whether it would fit!

Would you like to proceed? [Y/n]:

At this stage answering yes will proceed with copying, or you can respond with a no (or Ctrl-C) to cancel. See copy-rcac-home --help for more details on the tool.

Partial copy

Desired parts (or whole) of your research computing home directories can be copied to Bell via any of the home directories' supported transfer methods, such as SCP, SFTP, rsync, or Globus.

- Example: recursive copying of a subdirectory from RCAC home directory into Bell home using scp.

(if you are on Bell, use other cluster name for the remote part)

$ scp -pr myothercluster.rcac.purdue.edu:somedirectory/ ~/

(if you are on another cluster, use Bell for the remote part)

$ scp -pr somedirectory/ myusername@bell.rcac.purdue.edu:~/

- Example: copying using Globus.

Search collections for "Purdue Research Computing - Home Directories" and "Purdue Bell Cluster - Home" endpoints, respectively, then transfer desired files and/or directories as usual.

Lost File Recovery

Bell is protected against accidental file deletion through a series of snapshots taken every night just after midnight. Each snapshot provides the state of your files at the time the snapshot was taken. It does so by storing only the files which have changed between snapshots. A file that has not changed between snapshots is only stored once but will appear in every snapshot. This is an efficient method of providing snapshots because the snapshot system does not have to store multiple copies of every file.

These snapshots are kept for a limited time at various intervals. RCAC keeps nightly snapshots for 7 days, weekly snapshots for 4 weeks, and monthly snapshots for 3 months. This means you will find snapshots from the last 7 nights, the last 4 Sundays, and the first day of each of the last 3 months. Files are available going back between two and three months, depending on how long ago the most recent first of the month was. Snapshots beyond this are not kept.

Only files which have been saved during an overnight snapshot are recoverable. If you lose a file the same day you created it, the file is not recoverable because the snapshot system has not had a chance to save the file.

Snapshots are not a substitute for regular backups. It is the responsibility of the researchers to back up any important data to the Fortress Archive. Bell does protect against hardware failures or physical disasters through other means; however, these other means are also not substitutes for backups.

Files in scratch directories are not recoverable. Files in scratch directories are not backed up. If you accidentally delete a file, a disk crashes, or old files are purged, they cannot be restored.

Bell offers several ways for researchers to access snapshots of their files.

flost

If you know when you lost the file, the easiest way is to use the flost command. This tool is available from any RCAC resource. If you do not have access to a compute cluster, any Data Depot user may use an SSH client to connect to bell.rcac.purdue.edu and run this command.

To run the tool you will need to specify the location where the lost file was with the -w argument:

$ flost -w /depot/mylab

Replace mylab with the name of your lab's Bell directory. If you know more specifically where the lost file was you may provide the full path to that directory.

This tool will prompt you for the date on which you lost the file or would like to recover the file from. If the tool finds an appropriate snapshot it will provide instructions on how to search for and recover the file.

If you are not sure what date you lost the file, you may try entering different dates into flost to find the file, or you may manually browse the snapshots as described below.

Manual Browsing

You may also search through the snapshots by hand on the Bell filesystem if you are not sure what date you lost the file or would like to browse by hand. Snapshots can be browsed from any RCAC resource. If you do not have access to a compute cluster, any Bell user may use an SSH client to connect to bell.rcac.purdue.edu and browse from there. The snapshots are located at /depot/.snapshots on these resources.

You can also mount the snapshot directory over Samba (or SMB, CIFS) on Windows or Mac OS X. Mount (or map) the snapshot directory in the same way as you did for your main Bell space substituting the server name and path for \\datadepot.rcac.purdue.edu\depot\.winsnaps (Windows) or smb://datadepot.rcac.purdue.edu/depot/.winsnaps (Mac OS X).

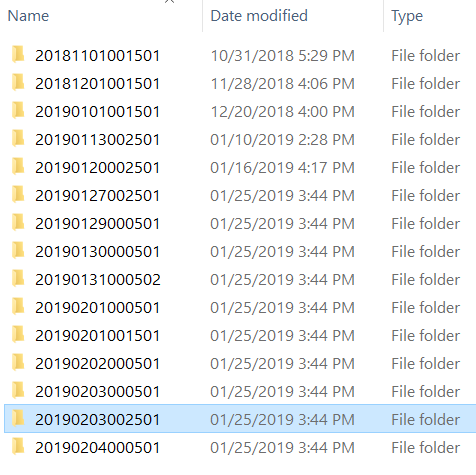

Once connected to the snapshot directory through SSH or Samba, you will see something similar to this:

| SSH to bell.rcac.purdue.edu | Samba mount on datadepot.rcac.purdue.edu |

|---|---|


Each of these directories is a snapshot of the entire Bell filesystem at the timestamp encoded into the directory name. The format for this timestamp is year, two digits for month, two digits for day, followed by the time of the day.

You may cd into any of these directories where you will find the entire Bell filesystem. Use cd to continue into your lab's Bell space and then you may browse the snapshot as normal.

If you are browsing these directories over a Samba network drive you can simply drag and drop the files over into your live Data Depot folder.

Once you find the file you are looking for, use cp to copy the file back into your lab's live Bell space. Do not attempt to modify files directly in the snapshot directories.
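The copy-back step can be sketched as a small helper that refuses to overwrite a file already present in the live directory, so a newer version is never clobbered by accident. The function name restore_from_snapshot is illustrative, and any snapshot path you pass to it must be a real snapshot directory name found by browsing as described above.

```shell
#!/bin/bash
# Hypothetical helper: copy one file out of a snapshot into a live directory,
# refusing to overwrite a file that already exists there.
# Arguments: path to the file inside the snapshot, live destination directory.
restore_from_snapshot() {
    local snapshot_file="$1" live_dir="$2"
    local target
    target="$live_dir/$(basename "$snapshot_file")"
    if [ -e "$target" ]; then
        echo "refusing to overwrite $target" >&2
        return 1
    fi
    # -p preserves the timestamps and permissions recorded in the snapshot.
    cp -p "$snapshot_file" "$target"
}
```

If the helper refuses, rename or move the existing live file first, then run it again.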

Windows

If you use Bell through "network drives" on Windows you may recover lost files directly from within Windows:

- Open the folder that contained the lost file.

- Right click inside the window and select "Properties".

- Click on the "Previous Versions" tab.

- A list of snapshots will be displayed.

- Select the snapshot from which you wish to restore.

- In the new window, locate the file you wish to restore.

- Simply drag the file or folder to its correct location.

In the "Previous Versions" window the list contains two columns. The first column is the timestamp on which the snapshot was taken. The second column is the date on which the selected file or folder was last modified in that snapshot. This may give you some extra clues to which snapshot contains the version of the file you are looking for.

Mac OS X

Mac OS X does not provide any way to access the Bell snapshots directly. To access the snapshots there are two options: browse the snapshots by hand through a network drive mount or use an automated command-line based tool.

To browse the snapshots by hand, follow the directions outlined in the Manual Browsing section.

To use the automated command-line tool, log into a compute cluster or into the host bell.rcac.purdue.edu (which is available to all Bell users) and use the flost tool. On Mac OS X you can use the built-in SSH terminal application to connect.

- Open the Applications folder from Finder.

- Navigate to the Utilities folder.

- Double click the Terminal application to open it.

- Type the following command when the terminal opens.

$ ssh myusername@bell.rcac.purdue.edu

Replace myusername with your Purdue career account username and provide your password when prompted.

Once logged in use the flost tool as described above. The tool will guide you through the process and show you the commands necessary to retrieve your lost file.

Gateway (Open OnDemand)

Bell's Gateway is an instance of Open OnDemand, an open-source HPC portal developed by the Ohio Supercomputer Center. Open OnDemand allows one to interact with HPC resources through a web browser and easily manage files, submit jobs, and interact with graphical applications directly in a browser, all with no software to install. Bell has an instance of OnDemand available that can be accessed via gateway.bell.rcac.purdue.edu.

Logging In

To log into Gateway:

- Navigate to gateway.bell.rcac.purdue.edu

- Log in using your Career account username and Purdue Login Duo client.

On the splash page you will see a quota usage report. If you are over 90% on any of your quotas a warning will be displayed. This information will update every 10-15 minutes while you are active on Gateway.

Apps

There are a number of built-in apps in Gateway that can be accessed from the top menu bar. Below are links to documentation on each app.

Interactive Apps

There are several interactive apps available through Gateway that can be accessed through the Interactive Apps dropdown menu. These apps are provided with a basic node and software configuration as a 'quick-launch' option to get your work up and running quickly. For simplicity, minimal options are provided - these apps are not intended for complex configuration/customization scenarios.

After you submit an interactive app to the queue, Gateway will track and manage the session. Once it starts, you may connect and disconnect from the session in your browser, leaving the job running while you log out of your browser.

Each of the available apps is documented through the following links.

Compute Node Desktop

The Compute Node Desktop app will launch a graphical desktop session on a compute node. This is similar to using ThinLinc; however, this gives you a desktop directly on a compute node instead of on a front-end. This app is useful if you would like to run a custom application, or an application not directly available as an interactive app, inside Gateway.

To launch a desktop session on a compute node, select the Bell Compute Desktop app. From the submit form, select from the available options: the queue to which you wish to submit and the number of wallclock hours you wish to have the job running. There is also a checkbox that enables a notification to your email when the job starts.

After the interactive job is submitted you will be taken to your list of active interactive app sessions. You can monitor the status of the job from here until it starts, or if you enabled the email notification, watch your Purdue email for the notification the job has started.

Once it is indicated the job has started you can connect to the desktop with the "Launch noVNC in New Tab" button. The session will be terminated after the wallclock hours you specified have elapsed or you terminate the session early with the "Delete" button from the list of sessions. Deleting the session when you are finished will free up queue resources for your lab mates and other users on the system.

Jupyter Notebook

The Notebook app will launch a Notebook session on a compute node and allow you to connect directly to it in a web browser.

To launch a Notebook session on a compute node, select the Notebook app. From the submit form, select from the available options:

- Queue: This is a dropdown menu from which you can select a queue from all of the queues to which you have permission to submit.

- Walltime: This is a field which expects a number and represents how many hours you want to keep the session running. Note that this value should not exceed the maximum value given next to the selected queue name from the queue dropdown menu.

- Number of Cores/GPUs: This is a field which expects a number and represents the number of resources your session is requesting. Note that the amount of memory allocated for your session is proportional to the number of cores or GPUs that you request for your job, so if your session is running out of memory, consider increasing this value.

- Use Jupyter Lab: This is a checkbox which, when checked, will run Jupyter Lab instead of Jupyter Notebook. Both of these applications are interfaces to Jupyter, and you can launch Jupyter notebooks from within Jupyter Lab. Jupyter Notebook is more "barebones" while Jupyter Lab has additional features such as the ability to interact with additional file types.

- E-mail Notice: This is a checkbox which, when checked, will send a notification to your Purdue e-mail once the scheduler has found resources to dedicate to your session and the session is ready.

After the interactive job is submitted you will be taken to your list of active interactive app sessions. You can monitor the status of the job from here until it starts, or if you enabled the email notification, watch your Purdue email for the notification the job has started.

Once the job has started, you can connect to the session with the "Connect to Jupyter" button. Once connected, you can create new notebooks, selecting from the Anaconda versions currently available as modules and any personally created Notebook kernels.

Often you will want to use one of your existing Anaconda environments within your Jupyter session so that libraries specific to your workflow are available. To do so, the Anaconda environment you want to use must contain the Python packages "IPyKernel" and "IPython", both of which are required by Jupyter. When you create a Jupyter session, Open OnDemand will check through your existing Anaconda environments and create a Jupyter kernel for any Anaconda environment that contains these two packages, and you will be able to select that kernel from within the application.
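As a quick check before launching a session, the following minimal sketch reports which of the two required packages cannot be imported from the currently active environment. It assumes the import names `ipykernel` and `IPython`. The helper name `missing_packages` is hypothetical, and this is not necessarily how Open OnDemand performs its own check.

```python
import importlib.util

# Import names assumed for the two packages Jupyter needs.
REQUIRED = ("ipykernel", "IPython")


def missing_packages(names=REQUIRED):
    """Return the names from `names` that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing from this environment:", ", ".join(missing))
    else:
        print("Both required packages are importable.")
```

Run it with the Python interpreter of the environment you intend to use. If it lists a package, install that package into the environment before starting a new Jupyter session.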

The session will be terminated after the number of hours you specified have elapsed or you terminate the session early with the "Delete" button from the list of sessions. Deleting the session when you are finished will free up queue resources for your lab mates and other users on the system.

MATLAB

The MATLAB app will launch a MATLAB session on a compute node and allow you to connect directly to it in a web browser.

To launch a MATLAB session on a compute node, select the MATLAB app. From the submit form, select from the available options: the version of MATLAB you are interested in running, the queue to which you wish to submit, and the number of wallclock hours you wish to have the job running. There is also a checkbox that enables an email notification when the job starts.

After the interactive job is submitted you will be taken to your list of active interactive app sessions. You can monitor the status of the job from here until it starts, or if you enabled the email notification, watch your Purdue email for the notification the job has started.

Once it is indicated the job has started you can connect to the desktop with the "Launch noVNC in New Tab" button. The session will be terminated after the wallclock hours you specified have elapsed or you terminate the session early with the "Delete" button from the list of sessions. Deleting the session when you are finished will free up queue resources for your lab mates and other users on the system.

RStudio Server

The RStudio app will launch an RStudio session on a compute node and allow you to connect directly to it in a web browser.

To launch an RStudio session on a compute node, select the RStudio app. From the submit form, select from the available options: the queue to which you wish to submit and the number of wallclock hours you wish to have the job running. There is also a checkbox that enables an email notification when the job starts.

After the interactive job is submitted you will be taken to your list of active interactive app sessions. You can monitor the status of the job from here until it starts, or if you enabled the email notification, watch your Purdue email for the notification the job has started.

Once the job has started, you can connect to the session with the "Connect to RStudio Server" button. The session will be terminated after the wallclock hours you specified have elapsed or you terminate the session early with the "Delete" button from the list of sessions. Deleting the session when you are finished will free up queue resources for your lab mates and other users on the system.

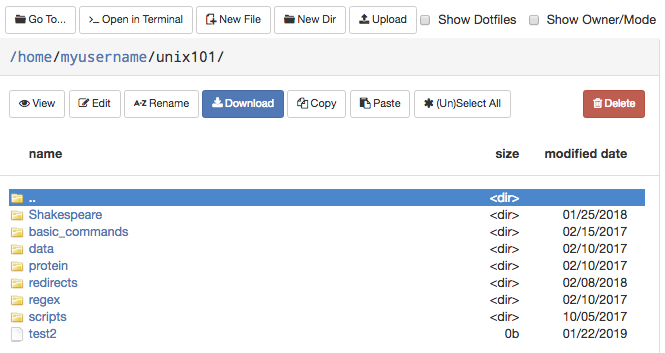

Files

The Files app lets you access your files in your Home Directory, Scratch, and Data Depot spaces. You can create, manage, and delete files and directories from your web browser. Navigate by double-clicking on folders in the file explorer or by using the file tree on the left.

On the top row, there are buttons to:

- Go To: directly input a directory to navigate to

- Open in Terminal: launches the Shell app and navigates you to the current directory in the terminal

- New File: creates a new, empty file

- New Dir: creates a new, empty directory

- Upload: upload a file from your computer

Note: File uploads from your browser are limited to 100 GB per file. Be mindful that uploads over a few gigabytes may be unreliable through your browser, especially from off-campus connections. For very large files or off-campus transfers alternative methods such as Globus are highly recommended.

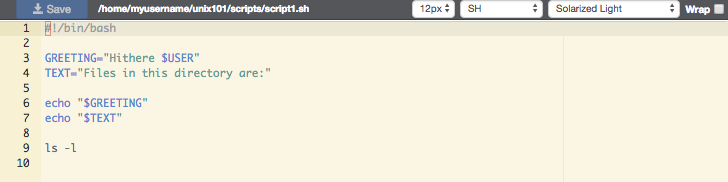

The second row of buttons lets you perform typical file management operations. The Edit button will open files in a fully fledged browser based text editor - it features syntax highlighting and vim and Emacs key bindings.

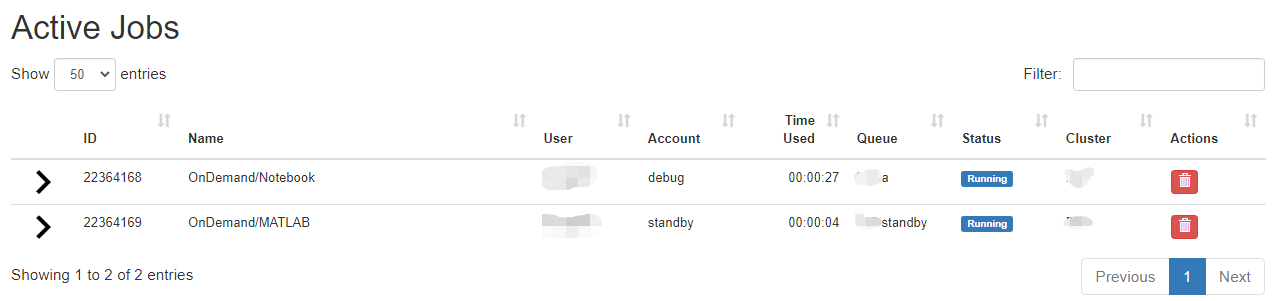

Jobs

There are two apps under the Jobs apps: Active Jobs and Job Composer. These are detailed below.

Active Jobs

This shows you active SLURM jobs currently on the cluster. The default view shows your own current jobs, similar to the output of squeue -u rices (where rices is an example username). The button labeled "Your Jobs" in the upper right lets you select different filters by queue (account). All accounts output by slist will appear here. The arrow on the left-hand side expands the full job details.
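The same listing can be requested from a front-end terminal. The sketch below only assembles the command line and does not run it. `squeue_command` is a hypothetical helper, and it relies on the standard squeue options `-u` (user) and `-A` (account).

```python
import getpass
import shlex


def squeue_command(user=None, account=None):
    """Build an squeue command listing jobs for one user, optionally one account."""
    cmd = ["squeue", "-u", user or getpass.getuser()]
    if account:
        cmd += ["-A", account]
    return cmd


if __name__ == "__main__":
    # Print the command rather than run it, so this also works off-cluster.
    print(shlex.join(squeue_command(user="myusername", account="standby")))
```

Filtering by account here corresponds to choosing a queue filter in the Active Jobs app.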

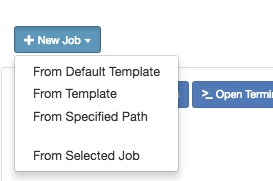

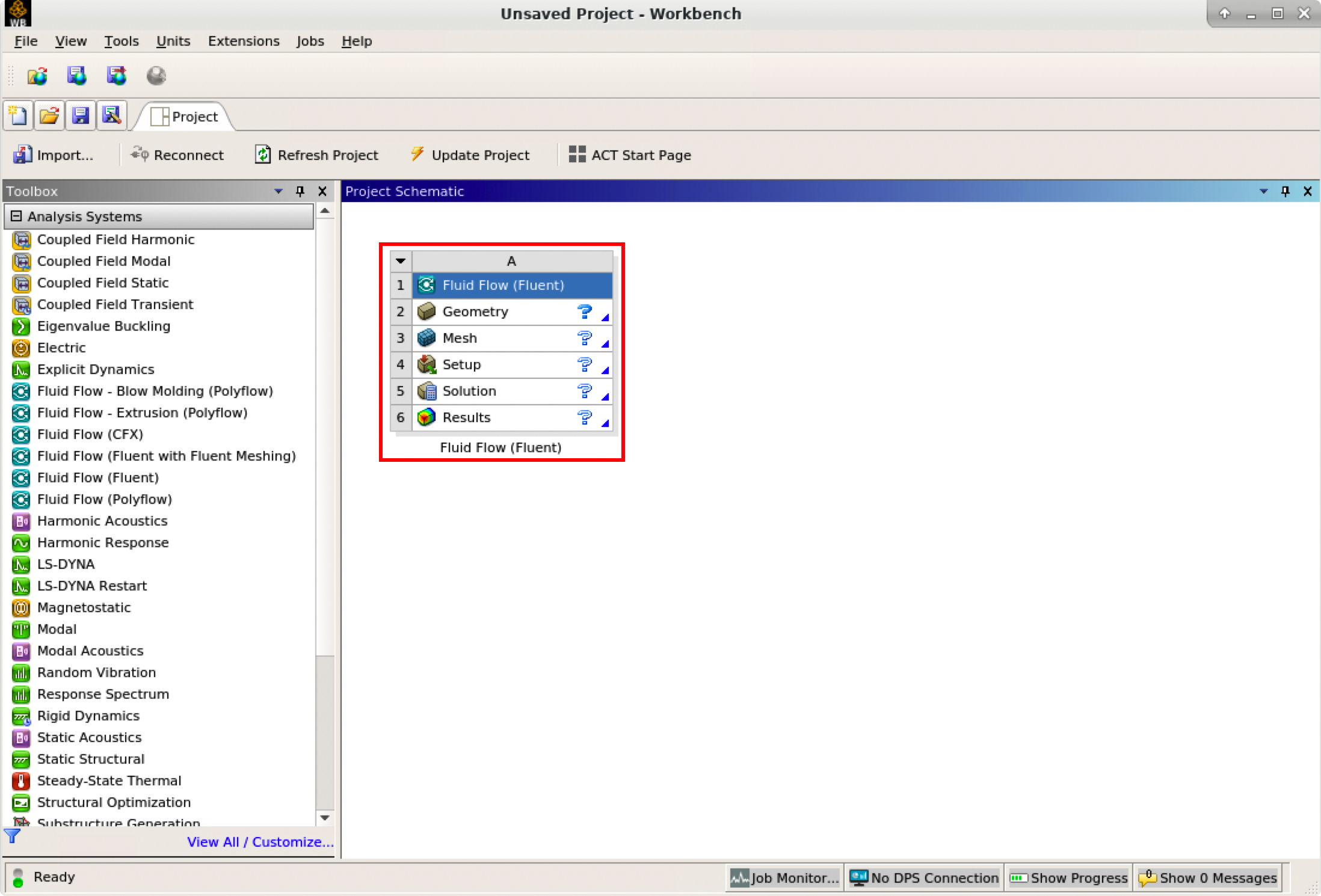

Job Composer

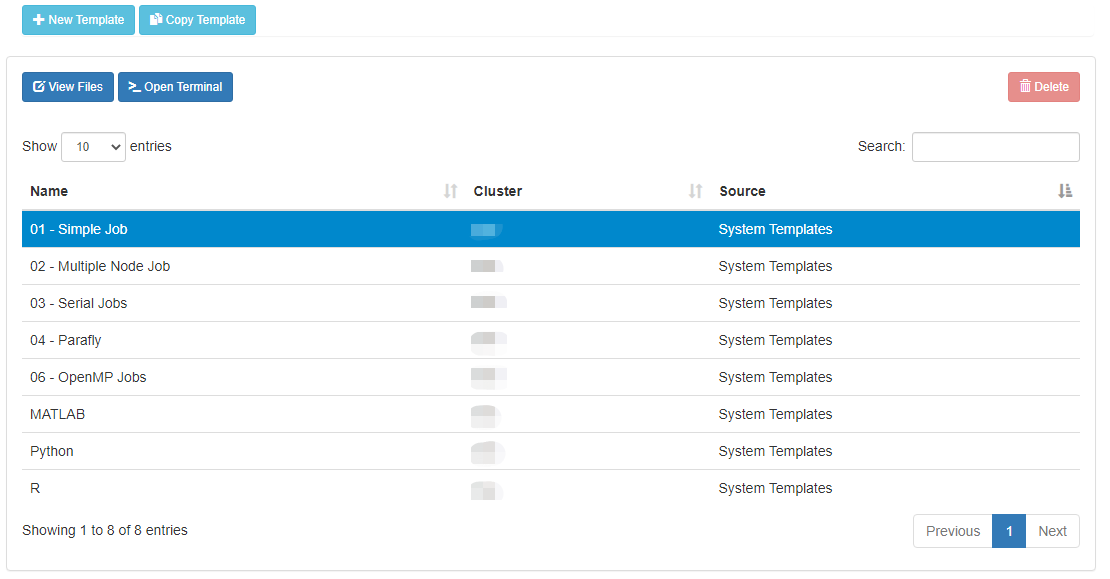

The Job Composer app allows you to create and submit jobs to the cluster. You can select from pre-defined templates (most of these are taken from the User Guide examples) or you can create your own templates for frequently used workflows.

Creating Job from Existing Template

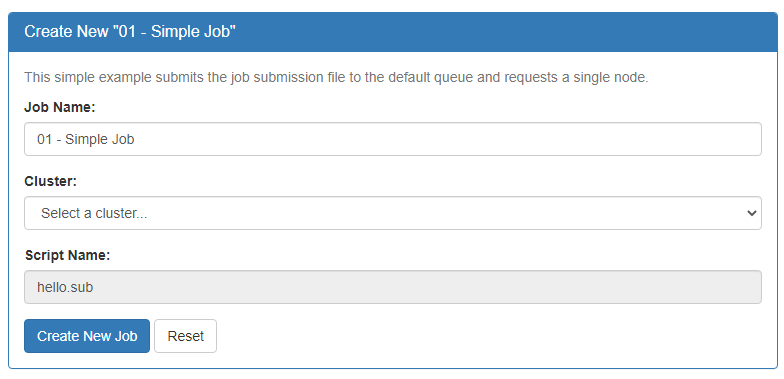

Click the "New Job" menu, then select "From Template":

Then select from one of the available templates.

Click 'Create New Job' in the second pane.

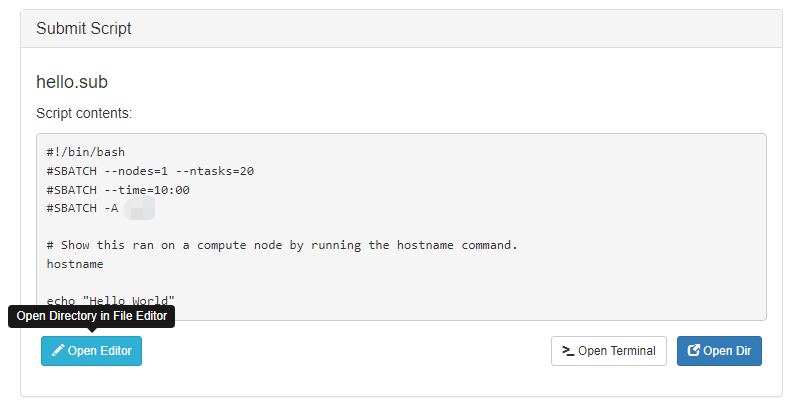

Your new job should be selected in your list of jobs. In the 'Submit Script' pane you can see the job script that was generated, with an 'Open Editor' link to open the script in the built-in editor. Open the file in the editor and edit the script as necessary. By default the job will specify the standby queue; change this as appropriate, along with the node and walltime requests.

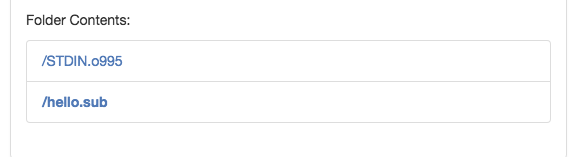

When you are finished with editing the job and are ready to submit, click the green 'Submit' button at the top of the job list. You can monitor progress from here or from the Active Jobs app. Once completed, you should see the output files appear:

Clicking on one of the output files will open it in the file editor for your viewing.

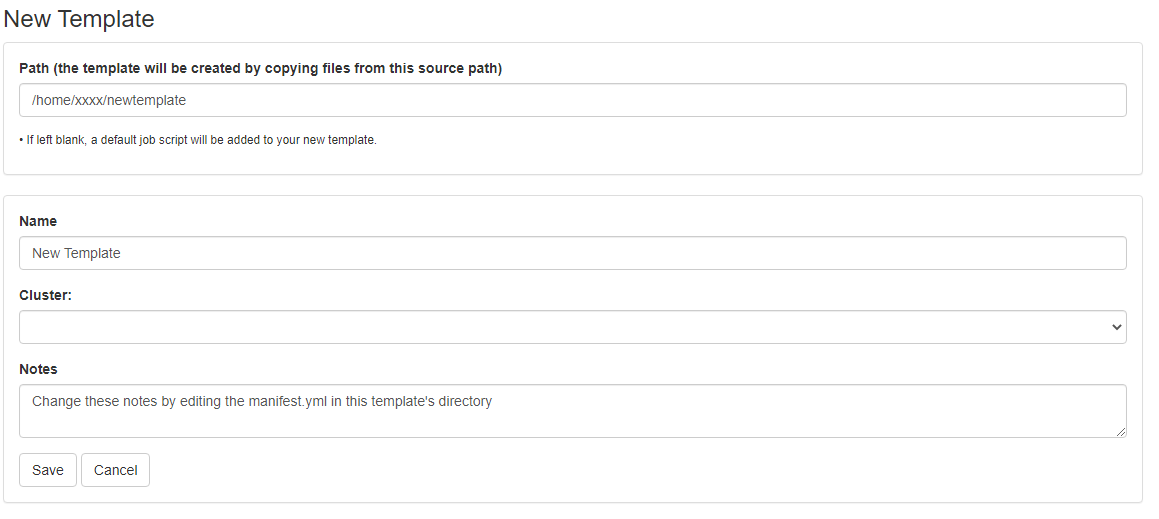

Creating New Template

First, prepare a template directory containing a template submission script along with any input files. Then, to import the job into the Job Composer app, click the 'Create New Template' button. Fill in the directory containing your template job script and files in the first box. Give it an appropriate name and notes.

This template will now appear in your list of templates to choose from when composing jobs. You can now go create and submit a job from this new template.
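Preparing the template directory can itself be scripted. The sketch below writes a minimal submission script into a new directory. The directory name, the script name `job.sh`, and the resource requests are all placeholder assumptions, and the `standby` account simply mirrors the default queue mentioned earlier.

```python
from pathlib import Path

# Placeholder job script; adjust account, nodes, and walltime for real work.
JOB_SCRIPT = """#!/bin/bash
#SBATCH --account=standby
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --time=00:30:00
#SBATCH --job-name=template_example

echo "Running on $(hostname)"
"""


def make_template(directory):
    """Create `directory` with a job script inside and return the script path."""
    target = Path(directory)
    target.mkdir(parents=True, exist_ok=True)
    script = target / "job.sh"
    script.write_text(JOB_SCRIPT)
    return script


if __name__ == "__main__":
    print("Wrote", make_template("my_template"))
```

Any input files the job needs can be copied into the same directory before you point 'Create New Template' at it.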

Cluster Tools

The Cluster Tools menu contains cluster utilities. At the moment, only a terminal app is provided. Additional apps may be developed and provided in the future.

Shell Access

Launching the Shell app will provide you with a web-based terminal session on the cluster front-end. This is equivalent to using a standalone SSH client to connect to bell.rcac.purdue.edu, where you are connected to one of several front-ends. The normal acceptable front-end use policy applies to access through the web app. X11 Forwarding is not supported. Use of one of the interactive apps is recommended for graphical applications.

Software

Environment module

Software catalog

Compiling Source Code

Documentation on compiling source code on Bell.

Compiling Serial Programs

A serial program is a single process which executes as a sequential stream of instructions on one processor core. Compilers capable of serial programming are available for C, C++, and versions of Fortran.

Here are a few sample serial programs:

- serial_hello.f

- serial_hello.f90

- serial_hello.f95

- serial_hello.c

To load a compiler, enter one of the following:

$ module load intel

$ module load gcc

| Language | Intel Compiler | GNU Compiler |
|---|---|---|
| Fortran 77 | | |
| Fortran 90 | | |
| Fortran 95 | | |
| C | | |
| C++ | | |

The Intel and GNU compilers will not output anything for a successful compilation. Also, the Intel compiler does not recognize the suffix ".f95".
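To make the "no output means success" behavior concrete, here is a minimal sketch that builds and runs a GNU compile command for a source file. It assumes the usual GNU driver names (`gcc`, `g++`, `gfortran`) are on your path after `module load gcc`. The helper names are hypothetical, and the file names follow the sample programs listed above.

```python
import subprocess
from pathlib import Path

# GNU compiler drivers by source suffix (assumed available after `module load gcc`).
GNU_COMPILERS = {
    ".c": "gcc",
    ".cpp": "g++",
    ".f": "gfortran",
    ".f90": "gfortran",
    ".f95": "gfortran",
}


def compile_command(source):
    """Return the GNU command that compiles `source` into a same-named executable."""
    path = Path(source)
    compiler = GNU_COMPILERS[path.suffix]
    return [compiler, str(path), "-o", str(path.with_suffix(""))]


def compile_source(source):
    """Run the compiler; a silent run with return code 0 means success."""
    result = subprocess.run(compile_command(source), capture_output=True, text=True)
    return result.returncode == 0, result.stderr


if __name__ == "__main__":
    print(" ".join(compile_command("serial_hello.c")))
```

Checking the return code rather than the printed output is the reliable test, since a successful compilation prints nothing.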

Compiling MPI Programs

OpenMPI and Intel MPI (IMPI) are implementations of the Message-Passing Interface (MPI) standard. Libraries for these MPI implementations and compilers for C, C++, and Fortran are available on all clusters.

| Language | Header Files |
|---|---|
| Fortran 77 | |
| Fortran 90 | |
| Fortran 95 | |
| C | |
| C++ | |

Here are a few sample programs using MPI:

To see the available MPI libraries:

$ module avail openmpi

$ module avail impi

| Language | Intel MPI | OpenMPI |
|---|---|---|
| Fortran 77 | | |
| Fortran 90 | | |
| Fortran 95 | | |
| C | | |
| C++ | | |

The Intel and GNU compilers will not output anything for a successful compilation. Also, the Intel compiler does not recognize the suffix ".f95".

Here is some more documentation from other sources on the MPI libraries:

Compiling OpenMP Programs

All compilers installed on Bell include OpenMP functionality for C, C++, and Fortran. An OpenMP program is a single process that takes advantage of a multi-core processor and its shared memory to achieve a form of parallel computing called multithreading. It distributes the work of a process over processor cores in a single compute node without the need for MPI communications.

| Language | Header Files |
|---|---|
| Fortran 77 | |
| Fortran 90 | |
| Fortran 95 | |
| C | |
| C++ | |

Sample programs illustrate task parallelism of OpenMP:

A sample program illustrates loop-level (data) parallelism of OpenMP:

To load a compiler, enter one of the following:

$ module load intel

$ module load gcc

| Language | Intel Compiler | GNU Compiler |
|---|---|---|
| Fortran 77 | | |
| Fortran 90 | | |
| Fortran 95 | | |
| C | | |
| C++ | | |

The Intel and GNU compilers will not output anything for a successful compilation. Also, the Intel compiler does not recognize the suffix ".f95".

Here is some more documentation from other sources on OpenMP:

Compiling Hybrid Programs

A hybrid program combines MPI with shared-memory parallelism (OpenMP) to take advantage of compute clusters with multi-core compute nodes. Libraries for OpenMPI and Intel MPI (IMPI) and compilers which include OpenMP for C, C++, and Fortran are available.

| Language | Header Files |
|---|---|
| Fortran 77 | |
| Fortran 90 | |
| Fortran 95 | |
| C | |
| C++ | |

A few examples illustrate hybrid programs with task parallelism of OpenMP:

This example illustrates a hybrid program with loop-level (data) parallelism of OpenMP:

To see the available MPI libraries:

$ module avail impi

$ module avail openmpi

The following tables illustrate how to compile your hybrid (MPI/OpenMP) program. Any compiler flags accepted by Intel ifort/icc compilers are compatible with their respective MPI compiler.

| Language | Command |
|---|---|
| Fortran 77 | |
| Fortran 90 | |
| Fortran 95 | |
| C | |
| C++ | |

| Language | Command |

|---|---|

| Fortran 77 |

|

| Fortran 90 |

|

| Fortran 95 |

|