Biocontainers

Link to section 'What is BioContainers' of 'Biocontainers' What is BioContainers

The BioContainers project came from the idea of using the containers-based technologies such as Docker or rkt for bioinformatics software. Having a common and controllable environment for running software could help to deal with some of the current problems during software development and distribution. BioContainers is a community-driven project that provides the infrastructure and basic guidelines to create, manage and distribute bioinformatics containers with a special focus on omics fields such as proteomics, genomics, transcriptomics and metabolomics. . For more information, please visit BioContainers project.

Link to section 'Deployed Applications' of 'Biocontainers' Deployed Applications

- abacas

- abismal

- abpoa

- abricate

- abyss

- actc

- adapterremoval

- advntr

- afplot

- afterqc

- agat

- agfusion

- alfred

- alien-hunter

- alignstats

- allpathslg

- alphafold

- amptk

- ananse

- anchorwave

- angsd

- annogesic

- annovar

- antismash

- anvio

- any2fasta

- arcs

- asgal

- aspera-connect

- assembly-stats

- atac-seq-pipeline

- ataqv

- atram

- atropos

- augur

- augustus

- bactopia

- bali-phy

- bamgineer

- bamliquidator

- bam-readcount

- bamsurgeon

- bamtools

- bamutil

- barrnap

- basenji

- bayescan

- bazam

- bbmap

- bbtools

- bcftools

- bcl2fastq

- beagle

- beast2

- bedops

- bedtools

- bioawk

- biobambam

- bioconvert

- biopython

- bismark

- blasr

- blast

- blobtools

- bmge

- bowtie

- bowtie2

- bracken

- braker2

- brass

- breseq

- busco

- bustools

- bwa

- bwameth

- cactus

- cafe

- canu

- ccs

- cdbtools

- cd-hit

- cegma

- cellbender

- cellphonedb

- cellranger

- cellranger-arc

- cellranger-atac

- cellranger-dna

- cellrank

- cellrank-krylov

- cellsnp-lite

- celltypist

- centrifuge

- cfsan-snp-pipeline

- checkm-genome

- chewbbaca

- chopper

- chromap

- cicero

- circexplorer2

- circlator

- circompara2

- circos

- ciri2

- ciriquant

- clair3

- clairvoyante

- clearcnv

- clever-toolkit

- clonalframeml

- clust

- clustalw

- cnvkit

- cnvnator

- coinfinder

- concoct

- control-freec

- cooler

- coverm

- cramino

- crisprcasfinder

- crispresso2

- crispritz

- crossmap

- cross_match

- csvtk

- cufflinks

- cutadapt

- cuttlefish

- cyvcf2

- das_tool

- dbg2olc

- deconseq

- deepbgc

- deepconsensus

- deepsignal2

- deeptools

- deepvariant

- delly

- dendropy

- diamond

- dnaio

- dragonflye

- drep

- dropest

- drop-seq

- dsuite

- easysfs

- edta

- eggnog-mapper

- emboss

- ensembl-vep

- epic2

- evidencemodeler

- exonerate

- expansionhunter

- fasta3

- fastani

- fastp

- fastqc

- fastq_pair

- fastq-scan

- fastspar

- faststructure

- fasttree

- fastx_toolkit

- filtlong

- flye

- fraggenescan

- fraggenescanrs

- freebayes

- freyja

- fseq

- funannotate

- fwdpy11

- gadma

- gambit

- gamma

- gangstr

- gapfiller

- gatk

- gatk4

- gemma

- gemoma

- genemark

- genemarks-2

- genmap

- genomedata

- genomepy

- genomescope2

- genomicconsensus

- genrich

- getorganelle

- gfaffix

- gfastats

- gfatools

- gffcompare

- gffread

- gffutils

- gimmemotifs

- glimmer

- glimmerhmm

- glnexus

- gmap

- goatools

- graphlan

- graphmap

- gridss

- gseapy

- gtdbtk

- gubbins

- guppy

- hail

- hap.py

- helen

- hicexplorer

- hic-pro

- hifiasm

- hisat2

- hmmer

- homer

- how_are_we_stranded_here

- htseq

- htslib

- htstream

- humann

- hyphy

- idba

- igv

- impute2

- infernal

- instrain

- intarna

- interproscan

- iqtree

- isoquant

- isoseq3

- ivar

- jcvi

- kaiju

- kakscalculator2

- kallisto

- khmer

- kissde

- kissplice

- kissplice2refgenome

- kma

- kmc

- kmergenie

- kmer-jellyfish

- kneaddata

- kover

- kraken2

- krakentools

- lambda

- last

- lastz

- ldhat

- ldjump

- ldsc

- liftoff

- liftofftools

- lima

- links

- lofreq

- longphase

- longqc

- lra

- ltr_finder

- ltrpred

- lumpy-sv

- lyveset

- macrel

- macs2

- macs3

- mafft

- mageck

- magicblast

- maker

- manta

- mapcaller

- marginpolish

- mash

- mashmap

- mashtree

- masurca

- mauve

- maxbin2

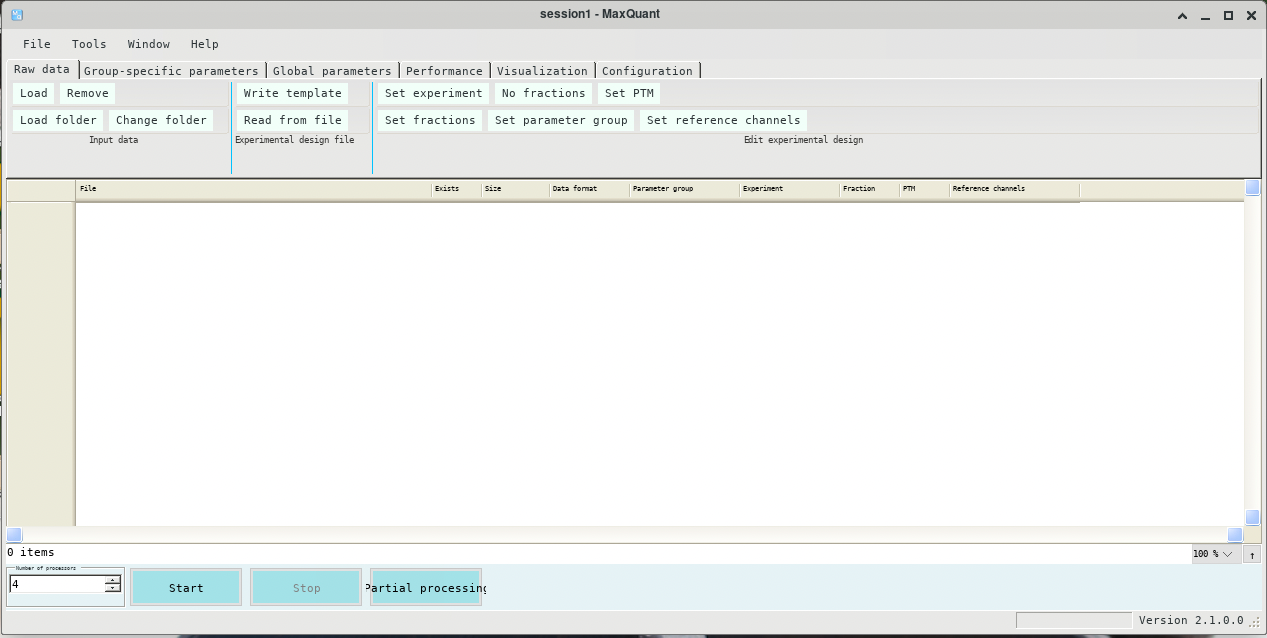

- maxquant

- mcl

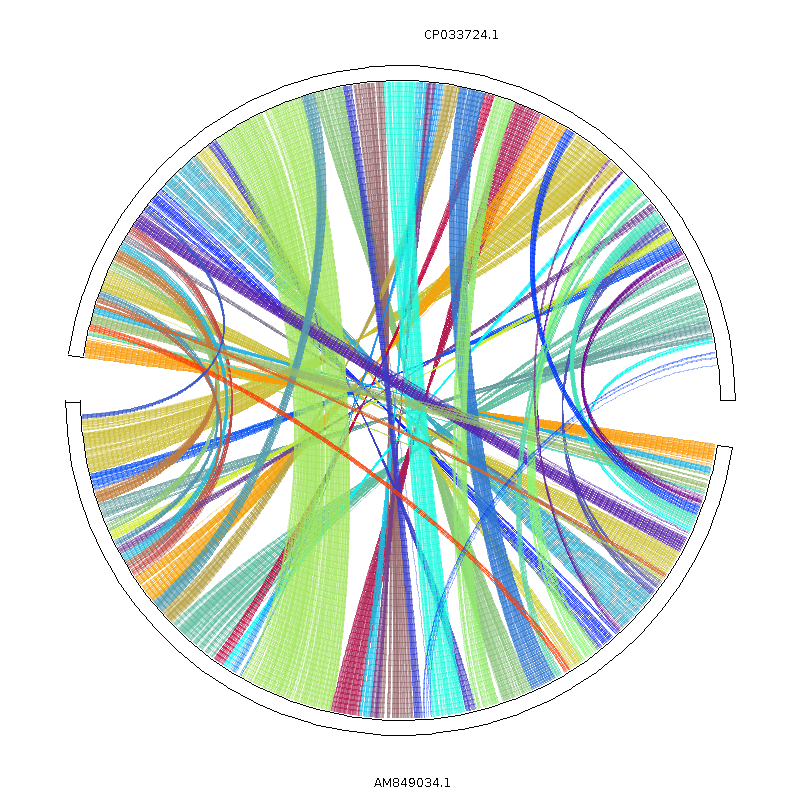

- mcscanx

- medaka

- megadepth

- megahit

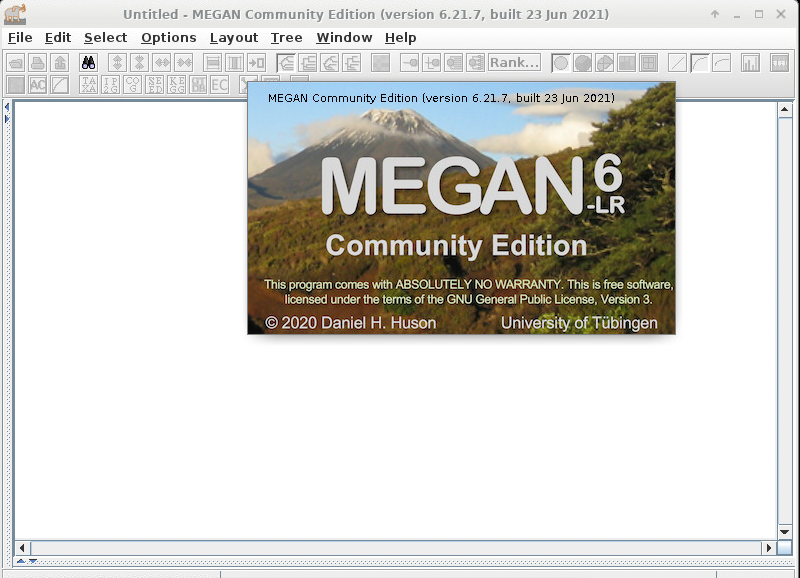

- megan

- meme

- memes

- meraculous

- merqury

- meryl

- metabat

- metachip

- metaphlan

- metaseq

- methyldackel

- metilene

- mhm2

- microbedmm

- minialign

- miniasm

- minimap2

- minipolish

- miniprot

- mirdeep2

- mirtop

- mitofinder

- mlst

- mmseqs2

- mob_suite

- modbam2bed

- modeltest-ng

- momi

- mothur

- motus

- mrbayes

- multiqc

- mummer4

- muscle

- mutmap

- mykrobe

- n50

- nanofilt

- nanolyse

- nanoplot

- nanopolish

- ncbi-amrfinderplus

- ncbi-datasets

- ncbi-genome-download

- ncbi-table2asn

- neusomatic

- nextalign

- nextclade

- nextflow

- ngs-bits

- ngsld

- ngsutils

- orthofinder

- paml

- panacota

- panaroo

- pandaseq

- pandora

- pangolin

- panphlan

- parallel-fastq-dump

- parliament2

- parsnp

- pasta

- pbmm2

- pbptyper

- pcangsd

- peakranger

- pepper_deepvariant

- perl-bioperl

- phast

- phd2fasta

- phg

- phipack

- phrap

- phred

- phylosuite

- picard

- picrust2

- pilon

- pindel

- pirate

- piscem

- pixy

- plasmidfinder

- platon

- platypus

- plink

- plink2

- plotsr

- pomoxis

- poppunk

- popscle

- pplacer

- prinseq

- prodigal

- prokka

- proteinortho

- prothint

- pullseq

- purge_dups

- pvactools

- pyani

- pybedtools

- pybigwig

- pychopper

- pycoqc

- pyensembl

- pyfaidx

- pygenometracks

- pygenomeviz

- pyranges

- pysam

- pyvcf3

- qiime2

- qtlseq

- qualimap

- quast

- quickmirseq

- r

- racon

- ragout

- ragtag

- rapmap

- rasusa

- raven-assembler

- raxml

- raxml-ng

- reapr

- rebaler

- reciprocal_smallest_distance

- recycler

- regtools

- repeatmasker

- repeatmodeler

- repeatscout

- resfinder

- revbayes

- rmats

- rmats2sashimiplot

- rnaindel

- rnapeg

- rnaquast

- roary

- r-rnaseq

- r-rstudio

- r-scrnaseq

- rsem

- rseqc

- run_dbcan

- rush

- sage

- salmon

- sambamba

- samblaster

- samclip

- samplot

- samtools

- scanpy

- scarches

- scgen

- scirpy

- scvelo

- scvi-tools

- segalign

- seidr

- sepp

- seqcode

- seqkit

- seqyclean

- shapeit4

- shapeit5

- shasta

- shigeifinder

- shorah

- shortstack

- shovill

- sicer

- sicer2

- signalp4

- signalp6

- simug

- singlem

- ska

- skewer

- slamdunk

- smoove

- snakemake

- snap

- snap-aligner

- snaptools

- snippy

- snp-dists

- snpeff

- snpgenie

- snphylo

- snpsift

- snp-sites

- soapdenovo2

- sortmerna

- souporcell

- sourmash

- spaceranger

- spades

- sprod

- squeezemeta

- squid

- sra-tools

- srst2

- stacks

- star

- staramr

- starfusion

- stream

- stringdecomposer

- stringtie

- strique

- structure

- subread

- survivor

- svaba

- svtools

- svtyper

- swat

- syri

- talon

- targetp

- tassel

- taxonkit

- t-coffee

- tetranscripts

- tiara

- tigmint

- tobias

- tombo

- tophat

- tpmcalculator

- transabyss

- transdecoder

- transrate

- transvar

- trax

- treetime

- trimal

- trim-galore

- trimmomatic

- trinity

- trinotate

- trnascan-se

- trtools

- trust4

- trycycler

- ucsc_genome_toolkit

- unicycler

- usefulaf

- vadr

- vardict-java

- varlociraptor

- varscan

- vartrix

- vatools

- vcf2maf

- vcf2phylip

- vcf2tsvpy

- vcf-kit

- vcftools

- velocyto.py

- velvet

- veryfasttree

- vg

- viennarna

- vsearch

- weblogo

- whatshap

- wiggletools

- winnowmap

- wtdbg

abacas

Link to section 'Introduction' of 'abacas' Introduction

Abacas is a tool for algorithm based automatic contiguation of assembled sequences.

For more information, please check its website: https://biocontainers.pro/tools/abacas and its home page: http://abacas.sourceforge.net.

Link to section 'Versions' of 'abacas' Versions

- 1.3.1

Link to section 'Commands' of 'abacas' Commands

- abacas.pl

- abacas.1.3.1.pl

Link to section 'Module' of 'abacas' Module

You can load the modules by:

module load biocontainers

module load abacas

Link to section 'Example job' of 'abacas' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Abacas on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=abacas

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers abacas

abacas.pl -r cmm.fasta -q Cm.contigs.fasta -p nucmer -o out_prefix

abismal

Link to section 'Introduction' of 'abismal' Introduction

Another Bisulfite Mapping Algorithm (abismal) is a read mapping program for bisulfite sequencing in DNA methylation studies.

BioContainers: https://biocontainers.pro/tools/abismal

Home page: https://github.com/smithlabcode/abismal

Link to section 'Versions' of 'abismal' Versions

- 3.0.0

Link to section 'Commands' of 'abismal' Commands

- abismal

- abismalidx

- simreads

Link to section 'Module' of 'abismal' Module

You can load the modules by:

module load biocontainers

module load abismal

Link to section 'Example job' of 'abismal' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run abismal on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=abismal

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers abismal

abismalidx ~/.local/share/genomes/hg38/hg38.fa hg38

abpoa

Link to section 'Introduction' of 'abpoa' Introduction

abPOA: adaptive banded Partial Order Alignment

Home page: https://github.com/yangao07/abPOA

Link to section 'Versions' of 'abpoa' Versions

- 1.4.1

Link to section 'Commands' of 'abpoa' Commands

- abpoa

Link to section 'Module' of 'abpoa' Module

You can load the modules by:

module load biocontainers

module load abpoa

Link to section 'Example job' of 'abpoa' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run abpoa on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=abpoa

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers abpoa

abpoa seq.fa > cons.fa

abricate

Link to section 'Introduction' of 'abricate' Introduction

Abricate is a tool for mass screening of contigs for antimicrobial resistance or virulence genes.

For more information, please check its website: https://biocontainers.pro/tools/abricate and its home page on Github.

Link to section 'Versions' of 'abricate' Versions

- 1.0.1

Link to section 'Commands' of 'abricate' Commands

- abricate

Link to section 'Module' of 'abricate' Module

You can load the modules by:

module load biocontainers

module load abricate

Link to section 'Example job' of 'abricate' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Abricate on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 8

#SBATCH --job-name=abricate

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers abricate

abricate --threads 8 *.fastaabyss

Link to section 'Introduction' of 'abyss' Introduction

ABySS is a de novo sequence assembler intended for short paired-end reads and genomes of all sizes.

For more information, please check its website: https://biocontainers.pro/tools/abyss and its home page on Github.

Link to section 'Versions' of 'abyss' Versions

- 2.3.2

- 2.3.4

- 2.3.8

Link to section 'Commands' of 'abyss' Commands

- ABYSS

- ABYSS-P

- AdjList

- Consensus

- DAssembler

- DistanceEst

- DistanceEst-ssq

- KAligner

- MergeContigs

- MergePaths

- Overlap

- ParseAligns

- PathConsensus

- PathOverlap

- PopBubbles

- SimpleGraph

- abyss-align

- abyss-bloom

- abyss-bloom-dbg

- abyss-bowtie

- abyss-bowtie2

- abyss-bwa

- abyss-bwamem

- abyss-bwasw

- abyss-db-txt

- abyss-dida

- abyss-fac

- abyss-fatoagp

- abyss-filtergraph

- abyss-fixmate

- abyss-fixmate-ssq

- abyss-gapfill

- abyss-gc

- abyss-index

- abyss-junction

- abyss-kaligner

- abyss-layout

- abyss-longseqdist

- abyss-map

- abyss-map-ssq

- abyss-mergepairs

- abyss-overlap

- abyss-paired-dbg

- abyss-paired-dbg-mpi

- abyss-pe

- abyss-rresolver-short

- abyss-samtoafg

- abyss-scaffold

- abyss-sealer

- abyss-stack-size

- abyss-tabtomd

- abyss-todot

- abyss-tofastq

- konnector

- logcounter

Link to section 'Module' of 'abyss' Module

You can load the modules by:

module load biocontainers

module load abyss

Link to section 'Example job' of 'abyss' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run abyss on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 4

#SBATCH --job-name=abyss

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers abyss

abyss-pe np=4 k=25 name=test B=1G \

in='test-data/reads1.fastq test-data/reads2.fastq'

actc

Link to section 'Introduction' of 'actc' Introduction

Actc is used to align subreads to ccs reads.

Home page: https://github.com/PacificBiosciences/actc

Link to section 'Versions' of 'actc' Versions

- 0.2.0

Link to section 'Commands' of 'actc' Commands

- actc

Link to section 'Module' of 'actc' Module

You can load the modules by:

module load biocontainers

module load actc

Link to section 'Example job' of 'actc' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run actc on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=actc

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers actc

actc subreads.bam ccs.bam subreads_to_ccs.bam

adapterremoval

Link to section 'Introduction' of 'adapterremoval' Introduction

AdapterRemoval searches for and removes adapter sequences from High-Throughput Sequencing (HTS) data and (optionally) trims low quality bases from the 3' end of reads following adapter removal. AdapterRemoval can analyze both single end and paired end data, and can be used to merge overlapping paired-ended reads into (longer) consensus sequences. Additionally, AdapterRemoval can construct a consensus adapter sequence for paired-ended reads, if which this information is not available.

BioContainers: https://biocontainers.pro/tools/adapterremoval

Home page: https://github.com/MikkelSchubert/adapterremoval

Link to section 'Versions' of 'adapterremoval' Versions

- 2.3.3

Link to section 'Commands' of 'adapterremoval' Commands

- AdapterRemoval

Link to section 'Module' of 'adapterremoval' Module

You can load the modules by:

module load biocontainers

module load adapterremoval

Link to section 'Example job' of 'adapterremoval' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run adapterremoval on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=adapterremoval

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers adapterremoval

AdapterRemoval --file1 input_1.fastq --file2 input_2.fastq

advntr

Link to section 'Introduction' of 'advntr' Introduction

Advntr is a tool for genotyping Variable Number Tandem Repeats (VNTR) from sequence data.

For more information, please check its website: https://biocontainers.pro/tools/advntr and its home page on Github.

Link to section 'Versions' of 'advntr' Versions

- 1.4.0

- 1.5.0

Link to section 'Commands' of 'advntr' Commands

- advntr

Link to section 'Module' of 'advntr' Module

You can load the modules by:

module load biocontainers

module load advntr

Link to section 'Example job' of 'advntr' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Advntr on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=advntr

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers advntr

advntr addmodel -r chr21.fa -p CGCGGGGCGGGG -s 45196324 -e 45196360 -c chr21

advntr genotype --vntr_id 1 --alignment_file CSTB_2_5_testdata.bam --working_directory working_dirafplot

Link to section 'Introduction' of 'afplot' Introduction

Afplot is a tool to plot allele frequencies in VCF files.

For more information, please check its website: https://biocontainers.pro/tools/afplot and its home page on Github.

Link to section 'Versions' of 'afplot' Versions

- 0.2.1

Link to section 'Commands' of 'afplot' Commands

- afplot

Link to section 'Module' of 'afplot' Module

You can load the modules by:

module load biocontainers

module load afplot

Link to section 'Example job' of 'afplot' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run afplot on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=afplot

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers afplot

afplot whole-genome histogram -v my_vcf.gz -l my_label -s my_sample -o mysample.histogram.png

afterqc

Link to section 'Introduction' of 'afterqc' Introduction

Afterqc is a tool for quality control of FASTQ data produced by HiSeq 2000/2500/3000/4000, Nextseq 500/550, MiniSeq, and Illumina 1.8 or newer.

For more information, please check its website: https://biocontainers.pro/tools/afterqc and its home page on Github.

Link to section 'Versions' of 'afterqc' Versions

- 0.9.7

Link to section 'Commands' of 'afterqc' Commands

- after.py

Link to section 'Module' of 'afterqc' Module

You can load the modules by:

module load biocontainers

module load afterqc

Link to section 'Example job' of 'afterqc' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run blobtools on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=afterqc

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers afterqc

after.py -1 SRR11941281_1.fastq.paired.fq -2 SRR11941281_2.fastq.paired.fq

agat

Link to section 'Introduction' of 'agat' Introduction

Agat is a suite of tools to handle gene annotations in any GTF/GFF format.

For more information, please check its website: https://biocontainers.pro/tools/agat and its home page on Github.

Link to section 'Versions' of 'agat' Versions

- 0.8.1

Link to section 'Commands' of 'agat' Commands

- agat_convert_bed2gff.pl

- agat_convert_embl2gff.pl

- agat_convert_genscan2gff.pl

- agat_convert_mfannot2gff.pl

- agat_convert_minimap2_bam2gff.pl

- agat_convert_sp_gff2bed.pl

- agat_convert_sp_gff2gtf.pl

- agat_convert_sp_gff2tsv.pl

- agat_convert_sp_gff2zff.pl

- agat_convert_sp_gxf2gxf.pl

- agat_sp_Prokka_inferNameFromAttributes.pl

- agat_sp_add_introns.pl

- agat_sp_add_start_and_stop.pl

- agat_sp_alignment_output_style.pl

- agat_sp_clipN_seqExtremities_and_fixCoordinates.pl

- agat_sp_compare_two_BUSCOs.pl

- agat_sp_compare_two_annotations.pl

- agat_sp_complement_annotations.pl

- agat_sp_ensembl_output_style.pl

- agat_sp_extract_attributes.pl

- agat_sp_extract_sequences.pl

- agat_sp_filter_by_ORF_size.pl

- agat_sp_filter_by_locus_distance.pl

- agat_sp_filter_by_mrnaBlastValue.pl

- agat_sp_filter_feature_by_attribute_presence.pl

- agat_sp_filter_feature_by_attribute_value.pl

- agat_sp_filter_feature_from_keep_list.pl

- agat_sp_filter_feature_from_kill_list.pl

- agat_sp_filter_gene_by_intron_numbers.pl

- agat_sp_filter_gene_by_length.pl

- agat_sp_filter_incomplete_gene_coding_models.pl

- agat_sp_filter_record_by_coordinates.pl

- agat_sp_fix_cds_phases.pl

- agat_sp_fix_features_locations_duplicated.pl

- agat_sp_fix_fusion.pl

- agat_sp_fix_longest_ORF.pl

- agat_sp_fix_overlaping_genes.pl

- agat_sp_fix_small_exon_from_extremities.pl

- agat_sp_flag_premature_stop_codons.pl

- agat_sp_flag_short_introns.pl

- agat_sp_functional_statistics.pl

- agat_sp_keep_longest_isoform.pl

- agat_sp_kraken_assess_liftover.pl

- agat_sp_list_short_introns.pl

- agat_sp_load_function_from_protein_align.pl

- agat_sp_manage_IDs.pl

- agat_sp_manage_UTRs.pl

- agat_sp_manage_attributes.pl

- agat_sp_manage_functional_annotation.pl

- agat_sp_manage_introns.pl

- agat_sp_merge_annotations.pl

- agat_sp_prokka_fix_fragmented_gene_annotations.pl

- agat_sp_sensitivity_specificity.pl

- agat_sp_separate_by_record_type.pl

- agat_sp_statistics.pl

- agat_sp_webApollo_compliant.pl

- agat_sq_add_attributes_from_tsv.pl

- agat_sq_add_hash_tag.pl

- agat_sq_add_locus_tag.pl

- agat_sq_count_attributes.pl

- agat_sq_filter_feature_from_fasta.pl

- agat_sq_list_attributes.pl

- agat_sq_manage_IDs.pl

- agat_sq_manage_attributes.pl

- agat_sq_mask.pl

- agat_sq_remove_redundant_entries.pl

- agat_sq_repeats_analyzer.pl

- agat_sq_rfam_analyzer.pl

- agat_sq_split.pl

- agat_sq_stat_basic.pl

Link to section 'Module' of 'agat' Module

You can load the modules by:

module load biocontainers

module load agat

Link to section 'Example job' of 'agat' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Agat on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=agat

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers agat

agat_convert_sp_gff2bed.pl --gff genes.gff -o genes.bedagfusion

Link to section 'Introduction' of 'agfusion' Introduction

AGFusion (pronounced 'A G Fusion') is a python package for annotating gene fusions from the human or mouse genomes.

Docker hub: https://hub.docker.com/r/mgibio/agfusion

Home page: https://github.com/murphycj/AGFusion

Link to section 'Versions' of 'agfusion' Versions

- 1.3.11

Link to section 'Commands' of 'agfusion' Commands

- agfusion

Link to section 'Module' of 'agfusion' Module

You can load the modules by:

module load biocontainers

module load agfusion

Link to section 'Example job' of 'agfusion' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run agfusion on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=agfusion

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers agfusion

alfred

Link to section 'Introduction' of 'alfred' Introduction

Alfred is an efficient and versatile command-line application that computes multi-sample quality control metrics in a read-group aware manner.

For more information, please check its website: https://biocontainers.pro/tools/alfred and its home page on Github.

Link to section 'Versions' of 'alfred' Versions

- 0.2.5

- 0.2.6

Link to section 'Commands' of 'alfred' Commands

- alfred

Link to section 'Module' of 'alfred' Module

You can load the modules by:

module load biocontainers

module load alfred

Link to section 'Example job' of 'alfred' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Alfred on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=alfred

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers alfred

alfred qc -r genome.fasta -o qc.tsv.gz sorted.bamalien-hunter

Link to section 'Introduction' of 'alien-hunter' Introduction

Alien-hunter is an application for the prediction of putative Horizontal Gene Transfer (HGT) events with the implementation of Interpolated Variable Order Motifs (IVOMs).

For more information, please check its website: https://biocontainers.pro/tools/alien-hunter.

Link to section 'Versions' of 'alien-hunter' Versions

- 1.7.7

Link to section 'Commands' of 'alien-hunter' Commands

- alien_hunter

Link to section 'Module' of 'alien-hunter' Module

You can load the modules by:

module load biocontainers

module load alien_hunter

Link to section 'Example job' of 'alien-hunter' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Alien_hunter on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=alien_hunter

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers alien_hunter

alien_hunter genome.fasta output

alignstats

Link to section 'Introduction' of 'alignstats' Introduction

AlignStats produces various alignment, whole genome coverage, and capture coverage metrics for sequence alignment files in SAM, BAM, and CRAM format.

BioContainers: https://biocontainers.pro/tools/alignstats

Home page: https://github.com/jfarek/alignstats

Link to section 'Versions' of 'alignstats' Versions

- 0.9.1

Link to section 'Commands' of 'alignstats' Commands

- alignstats

Link to section 'Module' of 'alignstats' Module

You can load the modules by:

module load biocontainers

module load alignstats

Link to section 'Example job' of 'alignstats' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run alignstats on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=alignstats

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers alignstats

alignstats -C -i input.bam -o report.txt

allpathslg

Link to section 'Introduction' of 'allpathslg' Introduction

Allpathslg is a whole-genome shotgun assembler that can generate high-quality genome assemblies using short reads.

For more information, please check its website: https://biocontainers.pro/tools/allpathslg and its home page: https://bioinformaticshome.com/tools/wga/descriptions/Allpaths-LG.html.

Link to section 'Versions' of 'allpathslg' Versions

- 52488

Link to section 'Commands' of 'allpathslg' Commands

- PrepareAllPathsInputs.pl

- RunAllPathsLG

- CacheLibs.pl

- Fasta2Fastb

Link to section 'Module' of 'allpathslg' Module

You can load the modules by:

module load biocontainers

module load allpathslg

Link to section 'Example job' of 'allpathslg' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Allpathslg on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 24

#SBATCH --job-name=allpathslg

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers allpathslg

PrepareAllPathsInputs.pl \

DATA_DIR=data \

PLOIDY=1 \

IN_GROUPS_CSV=in_groups.csv\

IN_LIBS_CSV=in_libs.csv\

OVERWRITE=True\

RunAllPathsLG PRE=allpathlg REFERENCE_NAME=test.genome \

DATA_SUBDIR=data RUN=myrun TARGETS=standard \

SUBDIR=test OVERWRITE=True

alphafold

Link to section 'Introduction' of 'alphafold' Introduction

Alphafold is a protein structure prediction tool developed by DeepMind (Google). It uses a novel machine learning approach to predict 3D protein structures from primary sequences alone. The source code is available on Github. It has been deployed in all RCAC clusters, supporting both CPU and GPU.

It also relies on a huge database. The full database ( 2.2TB) has been downloaded and setup for users.

Protein struction prediction by alphafold is performed in the following steps:

- Search the amino acid sequence in uniref90 database by jackhmmer (using CPU)

- Search the amino acid sequence in mgnify database by jackhmmer (using CPU)

- Search the amino acid sequence in pdb70 database (for monomers) or pdb_seqres database (for multimers) by hhsearch (using CPU)

- Search the amino acid sequence in bfd database and uniclust30 (updated to uniref30 since v2.3.0) database by hhblits (using CPU)

- Search structure templates in pdb_mmcif database (using CPU)

- Search the amino acid sequence in uniprot database (for multimers) by jackhmmer (using CPU)

- Predict 3D structure by machine learning (using CPU or GPU)

- Structure optimisation with OpenMM (using CPU or GPU)

Link to section 'Versions' of 'alphafold' Versions

- 2.1.1

- 2.2.0

- 2.2.3

- 2.3.0

- 2.3.1

- 2.3.2

Link to section 'Commands' of 'alphafold' Commands

run_alphafold.sh

Link to section 'Module' of 'alphafold' Module

You can load the modules by:

module load biocontainers

module load alphafold

Link to section 'Usage' of 'alphafold' Usage

The usage of Alphafold on our cluster is very straightford, users can create a flagfile containing the database path information:

run_alphafold.sh --flagfile=full_db.ff --fasta_paths=XX --output_dir=XX ...

Users can check its detailed user guide in its Github.

Link to section 'full_db.ff' of 'alphafold' full_db.ff

Example contents of full_db.ff:

--db_preset=full_dbs

--bfd_database_path=/depot/itap/datasets/alphafold/db/bfd/bfd_metaclust_clu_complete_id30_c90_final_seq.sorted_opt

--data_dir=/depot/itap/datasets/alphafold/db/

--uniref90_database_path=/depot/itap/datasets/alphafold/db/uniref90/uniref90.fasta

--mgnify_database_path=/depot/itap/datasets/alphafold/db/mgnify/mgy_clusters_2018_12.fa

--uniclust30_database_path=/depot/itap/datasets/alphafold/db/uniclust30/uniclust30_2018_08/uniclust30_2018_08

--pdb70_database_path=/depot/itap/datasets/alphafold/db/pdb70/pdb70

--template_mmcif_dir=/depot/itap/datasets/alphafold/db/pdb_mmcif/mmcif_files

--max_template_date=2022-01-29

--obsolete_pdbs_path=/depot/itap/datasets/alphafold/db/pdb_mmcif/obsolete.dat

--hhblits_binary_path=/usr/bin/hhblits

--hhsearch_binary_path=/usr/bin/hhsearch

--jackhmmer_binary_path=/usr/bin/jackhmmer

--kalign_binary_path=/usr/bin/kalign

Since Version v2.2.0, the AlphaFold-Multimer model parameters has been updated. The updated full database is stored in depot/itap/datasets/alphafold/db_20221014. For ACCESS Anvil, the database is stored in /anvil/datasets/alphafold/db_20221014. Users need to update the flagfile using the updated database:

run_alphafold.sh --flagfile=full_db_20221014.ff --fasta_paths=XX --output_dir=XX ...

Link to section 'full_db_20221014.ff (for alphafold v2)' of 'alphafold' full_db_20221014.ff (for alphafold v2)

Example contents of full_db_20221014.ff (For ACCESS Anvil, please change depot/itap to anvil):

--db_preset=full_dbs

--bfd_database_path=/depot/itap/datasets/alphafold/db_20221014/bfd/bfd_metaclust_clu_complete_id30_c90_final_seq.sorted_opt

--data_dir=/depot/itap/datasets/alphafold/db_20221014/

--uniref90_database_path=/depot/itap/datasets/alphafold/db_20221014/uniref90/uniref90.fasta

--mgnify_database_path=/depot/itap/datasets/alphafold/db_20221014/mgnify/mgy_clusters_2018_12.fa

--uniclust30_database_path=/depot/itap/datasets/alphafold/db_20221014/uniclust30/uniclust30_2018_08/uniclust30_2018_08

--pdb_seqres_database_path=/depot/itap/datasets/alphafold/db_20221014/pdb_seqres/pdb_seqres.txt

--uniprot_database_path=/depot/itap/datasets/alphafold/db_20221014/uniprot/uniprot.fasta

--template_mmcif_dir=/depot/itap/datasets/alphafold/db_20221014/pdb_mmcif/mmcif_files

--obsolete_pdbs_path=/depot/itap/datasets/alphafold/db_20221014/pdb_mmcif/obsolete.dat

--hhblits_binary_path=/usr/bin/hhblits

--hhsearch_binary_path=/usr/bin/hhsearch

--jackhmmer_binary_path=/usr/bin/jackhmmer

--kalign_binary_path=/usr/bin/kalign

Since Version v2.3.0, the AlphaFold-Multimer model parameters has been updated. The updated full database is stored in depot/itap/datasets/alphafold/db_20230311. For ACCESS Anvil, the database is stored in /anvil/datasets/alphafold/db_20230311. Users need to update the flagfile using the updated database:

run_alphafold.sh --flagfile=full_db_20230311.ff --fasta_paths=XX --output_dir=XX ...

Since Version v2.3.0, uniclust30_database_path has been changed to uniref30_database_path.

Link to section 'full_db_20230311.ff (for alphafold v3)' of 'alphafold' full_db_20230311.ff (for alphafold v3)

Example contents of full_db_20230311.ff for monomer (For ACCESS Anvil, please change depot/itap to anvil):

--db_preset=full_dbs

--bfd_database_path=/depot/itap/datasets/alphafold/db_20230311/bfd/bfd_metaclust_clu_complete_id30_c90_final_seq.sorted_opt

--data_dir=/depot/itap/datasets/alphafold/db_20230311/

--uniref90_database_path=/depot/itap/datasets/alphafold/db_20230311/uniref90/uniref90.fasta

--mgnify_database_path=/depot/itap/datasets/alphafold/db_20230311/mgnify/mgy_clusters_2022_05.fa

--uniref30_database_path=/depot/itap/datasets/alphafold/db_20230311/uniref30/UniRef30_2021_03

--pdb70_database_path=/depot/itap/datasets/alphafold/db_20230311/pdb70/pdb70

--template_mmcif_dir=/depot/itap/datasets/alphafold/db_20230311/pdb_mmcif/mmcif_files

--obsolete_pdbs_path=/depot/itap/datasets/alphafold/db_20230311/pdb_mmcif/obsolete.dat

--hhblits_binary_path=/usr/bin/hhblits

--hhsearch_binary_path=/usr/bin/hhsearch

--jackhmmer_binary_path=/usr/bin/jackhmmer

--kalign_binary_path=/usr/bin/kalign

Example contents of full_db_20230311.ff for multimer (For ACCESS Anvil, please change depot/itap to anvil):

--db_preset=full_dbs

--bfd_database_path=/depot/itap/datasets/alphafold/db_20230311/bfd/bfd_metaclust_clu_complete_id30_c90_final_seq.sorted_opt

--data_dir=/depot/itap/datasets/alphafold/db_20230311/

--uniref90_database_path=/depot/itap/datasets/alphafold/db_20230311/uniref90/uniref90.fasta

--mgnify_database_path=/depot/itap/datasets/alphafold/db_20230311/mgnify/mgy_clusters_2022_05.fa

--uniref30_database_path=/depot/itap/datasets/alphafold/db_20230311/uniref30/UniRef30_2021_03

--pdb_seqres_database_path=/depot/itap/datasets/alphafold/db_20230311/pdb_seqres/pdb_seqres.txt

--uniprot_database_path=/depot/itap/datasets/alphafold/db_20230311/uniprot/uniprot.fasta

--template_mmcif_dir=/depot/itap/datasets/alphafold/db_20230311/pdb_mmcif/mmcif_files

--obsolete_pdbs_path=/depot/itap/datasets/alphafold/db_20230311/pdb_mmcif/obsolete.dat

--hhblits_binary_path=/usr/bin/hhblits

--hhsearch_binary_path=/usr/bin/hhsearch

--jackhmmer_binary_path=/usr/bin/jackhmmer

--kalign_binary_path=/usr/bin/kalign

Link to section 'Example job using CPU' of 'alphafold' Example job using CPU

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

Notice that since version 2.2.0, the parameter --use_gpu_relax=False is required.

To run alphafold using CPU:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 20:00:00

#SBATCH -N 1

#SBATCH -n 24

#SBATCH --job-name=alphafold

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers alphafold/2.3.1

run_alphafold.sh --flagfile=full_db_20230311.ff \

--fasta_paths=sample.fasta --max_template_date=2022-02-01 \

--output_dir=af2_full_out --model_preset=monomer \

--use_gpu_relax=False

Link to section 'Example job using GPU' of 'alphafold' Example job using GPU

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

Notice that since version 2.2.0, the parameter --use_gpu_relax=True is required.

To run alphafold using GPU:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 20:00:00

#SBATCH -N 1

#SBATCH -n 11

#SBATCH --gres=gpu:1

#SBATCH --job-name=alphafold

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers alphafold/2.3.1

run_alphafold.sh --flagfile=full_db_20230311.ff \

--fasta_paths=sample.fasta --max_template_date=2022-02-01 \

--output_dir=af2_full_out --model_preset=monomer \

--use_gpu_relax=True

amptk

Link to section 'Introduction' of 'amptk' Introduction

Amptk is a series of scripts to process NGS amplicon data using USEARCH and VSEARCH, it can also be used to process any NGS amplicon data and includes databases setup for analysis of fungal ITS, fungal LSU, bacterial 16S, and insect COI amplicons.

For more information, please check its website: https://biocontainers.pro/tools/amptk and its home page on Github.

Link to section 'Versions' of 'amptk' Versions

- 1.5.4

Link to section 'Commands' of 'amptk' Commands

- amptk

Link to section 'Module' of 'amptk' Module

You can load the modules by:

module load biocontainers

module load amptk

Link to section 'Example job' of 'amptk' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Amptk on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 4

#SBATCH --job-name=amptk

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers amptk

amptk illumina -i test_data/illumina_test_data -o miseq -f fITS7 -r ITS4 --cpus 4

ananse

Link to section 'Introduction' of 'ananse' Introduction

ANANSE is a computational approach to infer enhancer-based gene regulatory networks (GRNs) and to identify key transcription factors between two GRNs.

BioContainers: https://biocontainers.pro/tools/ananse

Home page: https://github.com/vanheeringen-lab/ANANSE

Link to section 'Versions' of 'ananse' Versions

- 0.4.0

Link to section 'Commands' of 'ananse' Commands

- ananse

Link to section 'Module' of 'ananse' Module

You can load the modules by:

module load biocontainers

module load ananse

Link to section 'Example job' of 'ananse' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run ananse on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=ananse

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers ananse

mkdir -p ANANSE.REMAP.model.v1.0

wget https://zenodo.org/record/4768075/files/ANANSE.REMAP.model.v1.0.tgz

tar xvzf ANANSE.REMAP.model.v1.0.tgz -C ANANSE.REMAP.model.v1.0

rm ANANSE.REMAP.model.v1.0.tgz

wget https://zenodo.org/record/4769814/files/ANANSE_example_data.tgz

tar xvzf ANANSE_example_data.tgz

rm ANANSE_example_data.tgz

ananse binding -H ANANSE_example_data/H3K27ac/fibroblast*bam -A ANANSE_example_data/ATAC/fibroblast*bam -R ANANSE.REMAP.model.v1.0/ -o fibroblast.binding

ananse binding -H ANANSE_example_data/H3K27ac/heart*bam -A ANANSE_example_data/ATAC/heart*bam -R ANANSE.REMAP.model.v1.0/ -o heart.binding

ananse network -b fibroblast.binding/binding.h5 -e ANANSE_example_data/RNAseq/fibroblast*TPM.txt -n 4 -o fibroblast.network.txt

ananse network -b heart.binding/binding.h5 -e ANANSE_example_data/RNAseq/heart*TPM.txt -n 4 -o heart.network.txt

ananse influence -s fibroblast.network.txt -t heart.network.txt -d ANANSE_example_data/RNAseq/fibroblast2heart_degenes.csv -p -o fibroblast2heart.influence.txt

anchorwave

Link to section 'Introduction' of 'anchorwave' Introduction

Anchorwave is used for sensitive alignment of genomes with high sequence diversity, extensive structural polymorphism and whole-genome duplication variation.

For more information, please check its website: https://biocontainers.pro/tools/anchorwave and its home page on Github.

Link to section 'Versions' of 'anchorwave' Versions

- 1.0.1

- 1.1.1

Link to section 'Commands' of 'anchorwave' Commands

- anchorwave

- gmap_build

- gmap

- minimap2

Link to section 'Module' of 'anchorwave' Module

You can load the modules by:

module load biocontainers

module load anchorwave

Link to section 'Example job' of 'anchorwave' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Anchorwave on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 4

#SBATCH --job-name=anchorwave

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers anchorwave

anchorwave gff2seq -i Zea_mays.AGPv4.34.gff3 -r Zea_mays.AGPv4.dna.toplevel.fa -o cds.fa

angsd

ANGSD is a software for analyzing next generation sequencing data. Detailed usage can be found here: http://www.popgen.dk/angsd/index.php/ANGSD.

Link to section 'Versions' of 'angsd' Versions

- 0.935

- 0.937

- 0.939

- 0.940

Link to section 'Commands' of 'angsd' Commands

- angsd

- realSFS

- msToGlf

- thetaStat

- supersim

Link to section 'Module' of 'angsd' Module

You can load the modules by:

module load biocontainers

module load angsd/0.937

Link to section 'Example job' of 'angsd' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run angsd on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 20:00:00

#SBATCH -N 1

#SBATCH -n 24

#SBATCH --job-name=angsd

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers angsd/0.937

angsd -b bam.filelist -GL 1 -doMajorMinor 1 -doMaf 2 -P 5 -minMapQ 30 -minQ 20 -minMaf 0.05

annogesic

Link to section 'Introduction' of 'annogesic' Introduction

ANNOgesic is the swiss army knife for RNA-Seq based annotation of bacterial/archaeal genomes.

Docker hub: https://hub.docker.com/r/silasysh/annogesic

Home page: https://github.com/Sung-Huan/ANNOgesic

Link to section 'Versions' of 'annogesic' Versions

- 1.1.0

Link to section 'Commands' of 'annogesic' Commands

- annogesic

Link to section 'Module' of 'annogesic' Module

You can load the modules by:

module load biocontainers

module load annogesic

Link to section 'Example job' of 'annogesic' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run annogesic on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=annogesic

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers annogesic

ANNOGESIC_FOLDER=ANNOgesic

annogesic \

update_genome_fasta \

-c $ANNOGESIC_FOLDER/input/references/fasta_files/NC_009839.1.fa \

-m $ANNOGESIC_FOLDER/input/mutation_tables/mutation.csv \

-u NC_test.1 \

-pj $ANNOGESIC_FOLDER

annovar

Link to section 'Introduction' of 'annovar' Introduction

ANNOVAR is an efficient software tool to utilize update-to-date information to functionally annotate genetic variants detected from diverse genomes (including human genome hg18, hg19, hg38, as well as mouse, worm, fly, yeast and many others).

For more information, please check its website: https://annovar.openbioinformatics.org/en/latest/.

Link to section 'Versions' of 'annovar' Versions

- 2022-01-13

Link to section 'Commands' of 'annovar' Commands

- annotate_variation.pl

- coding_change.pl

- convert2annovar.pl

- retrieve_seq_from_fasta.pl

- table_annovar.pl

- variants_reduction.pl

Link to section 'Module' of 'annovar' Module

You can load the modules by:

module load biocontainers

module load annovar

Link to section 'Example job' of 'annovar' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run ANNOVAR on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 4

#SBATCH --job-name=annovar

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers annovar

annotate_variation.pl --buildver hg19 --downdb seq humandb/hg19_seq

convert2annovar.pl -format region -seqdir humandb/hg19_seq/ chr1:2000001-2000003

antismash

Link to section 'Introduction' of 'antismash' Introduction

Antismash Antismash allows the rapid genome-wide identification, annotation and analysis of secondary metabolite biosynthesis gene clusters in bacterial and fungal genomes.

For more information, please check its website: https://biocontainers.pro/tools/antismash and its home page: https://docs.antismash.secondarymetabolites.org.

Link to section 'Versions' of 'antismash' Versions

- 5.1.2

- 6.0.1

- 6.1.0

Link to section 'Commands' of 'antismash' Commands

- antismash

Link to section 'Module' of 'antismash' Module

You can load the modules by:

module load biocontainers

module load antismash

Link to section 'Example job' of 'antismash' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Antismash on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 4

#SBATCH --job-name=antismash

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers antismash

antismash --cb-general --cb-knownclusters --cb-subclusters --asf --pfam2go --smcog-trees seq.gbk

anvio

Link to section 'Introduction' of 'anvio' Introduction

Anvio is an analysis and visualization platform for 'omics data.

For more information, please check its website: https://biocontainers.pro/tools/anvio and its home page on Github.

Link to section 'Versions' of 'anvio' Versions

- 7.0

- 7.1_main

- 7.1_structure

Link to section 'Commands' of 'anvio' Commands

- anvi-analyze-synteny

- anvi-cluster-contigs

- anvi-compute-ani

- anvi-compute-completeness

- anvi-compute-functional-enrichment

- anvi-compute-gene-cluster-homogeneity

- anvi-compute-genome-similarity

- anvi-convert-trnaseq-database

- anvi-db-info

- anvi-delete-collection

- anvi-delete-hmms

- anvi-delete-misc-data

- anvi-delete-state

- anvi-dereplicate-genomes

- anvi-display-contigs-stats

- anvi-display-metabolism

- anvi-display-pan

- anvi-display-structure

- anvi-estimate-genome-completeness

- anvi-estimate-genome-taxonomy

- anvi-estimate-metabolism

- anvi-estimate-scg-taxonomy

- anvi-estimate-trna-taxonomy

- anvi-experimental-organization

- anvi-export-collection

- anvi-export-contigs

- anvi-export-functions

- anvi-export-gene-calls

- anvi-export-gene-coverage-and-detection

- anvi-export-items-order

- anvi-export-locus

- anvi-export-misc-data

- anvi-export-splits-and-coverages

- anvi-export-splits-taxonomy

- anvi-export-state

- anvi-export-structures

- anvi-export-table

- anvi-gen-contigs-database

- anvi-gen-fixation-index-matrix

- anvi-gen-gene-consensus-sequences

- anvi-gen-gene-level-stats-databases

- anvi-gen-genomes-storage

- anvi-gen-network

- anvi-gen-phylogenomic-tree

- anvi-gen-structure-database

- anvi-gen-variability-matrix

- anvi-gen-variability-network

- anvi-gen-variability-profile

- anvi-get-aa-counts

- anvi-get-codon-frequencies

- anvi-get-enriched-functions-per-pan-group

- anvi-get-sequences-for-gene-calls

- anvi-get-sequences-for-gene-clusters

- anvi-get-sequences-for-hmm-hits

- anvi-get-short-reads-from-bam

- anvi-get-short-reads-mapping-to-a-gene

- anvi-get-split-coverages

- anvi-help

- anvi-import-collection

- anvi-import-functions

- anvi-import-items-order

- anvi-import-misc-data

- anvi-import-state

- anvi-import-taxonomy-for-genes

- anvi-import-taxonomy-for-layers

- anvi-init-bam

- anvi-inspect

- anvi-interactive

- anvi-matrix-to-newick

- anvi-mcg-classifier

- anvi-merge

- anvi-merge-bins

- anvi-meta-pan-genome

- anvi-migrate

- anvi-oligotype-linkmers

- anvi-pan-genome

- anvi-profile

- anvi-push

- anvi-refine

- anvi-rename-bins

- anvi-report-linkmers

- anvi-run-hmms

- anvi-run-interacdome

- anvi-run-kegg-kofams

- anvi-run-ncbi-cogs

- anvi-run-pfams

- anvi-run-scg-taxonomy

- anvi-run-trna-taxonomy

- anvi-run-workflow

- anvi-scan-trnas

- anvi-script-add-default-collection

- anvi-script-augustus-output-to-external-gene-calls

- anvi-script-calculate-pn-ps-ratio

- anvi-script-checkm-tree-to-interactive

- anvi-script-compute-ani-for-fasta

- anvi-script-enrichment-stats

- anvi-script-estimate-genome-size

- anvi-script-filter-fasta-by-blast

- anvi-script-fix-homopolymer-indels

- anvi-script-gen-CPR-classifier

- anvi-script-gen-distribution-of-genes-in-a-bin

- anvi-script-gen-help-pages

- anvi-script-gen-hmm-hits-matrix-across-genomes

- anvi-script-gen-programs-network

- anvi-script-gen-programs-vignette

- anvi-script-gen-pseudo-paired-reads-from-fastq

- anvi-script-gen-scg-domain-classifier

- anvi-script-gen-short-reads

- anvi-script-gen_stats_for_single_copy_genes.R

- anvi-script-gen_stats_for_single_copy_genes.py

- anvi-script-gen_stats_for_single_copy_genes.sh

- anvi-script-get-collection-info

- anvi-script-get-coverage-from-bam

- anvi-script-get-hmm-hits-per-gene-call

- anvi-script-get-primer-matches

- anvi-script-merge-collections

- anvi-script-pfam-accessions-to-hmms-directory

- anvi-script-predict-CPR-genomes

- anvi-script-process-genbank

- anvi-script-process-genbank-metadata

- anvi-script-reformat-fasta

- anvi-script-run-eggnog-mapper

- anvi-script-snvs-to-interactive

- anvi-script-tabulate

- anvi-script-transpose-matrix

- anvi-script-variability-to-vcf

- anvi-script-visualize-split-coverages

- anvi-search-functions

- anvi-self-test

- anvi-setup-interacdome

- anvi-setup-kegg-kofams

- anvi-setup-ncbi-cogs

- anvi-setup-pdb-database

- anvi-setup-pfams

- anvi-setup-scg-taxonomy

- anvi-setup-trna-taxonomy

- anvi-show-collections-and-bins

- anvi-show-misc-data

- anvi-split

- anvi-summarize

- anvi-trnaseq

- anvi-update-db-description

- anvi-update-structure-database

- anvi-upgrade

Link to section 'Module' of 'anvio' Module

You can load the modules by:

module load biocontainers

module load anvio

Link to section 'Example job' of 'anvio' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Anvio on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 8

#SBATCH --job-name=anvio

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers anvio

anvi-script-reformat-fasta assembly.fa -o contigs.fa -l 1000 --simplify-names --seq-type NT

anvi-gen-contigs-database -f contigs.fa -o contigs.db -n 'An example contigs database' --num-threads 8

anvi-display-contigs-stats contigs.db

anvi-setup-ncbi-cogs --cog-data-dir $PWD --num-threads 8 --just-do-it --reset

anvi-run-ncbi-cogs -c contigs.db --cog-data-dir COG20 --num-threads 8

any2fasta

Link to section 'Introduction' of 'any2fasta' Introduction

Any2fasta can convert various sequence formats to FASTA.

BioContainers: https://biocontainers.pro/tools/any2fasta

Home page: https://github.com/tseemann/any2fasta

Link to section 'Versions' of 'any2fasta' Versions

- 0.4.2

Link to section 'Commands' of 'any2fasta' Commands

- any2fasta

Link to section 'Module' of 'any2fasta' Module

You can load the modules by:

module load biocontainers

module load any2fasta

Link to section 'Example job' of 'any2fasta' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run any2fasta on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=any2fasta

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers any2fasta

any2fasta input.gff > out.fasta

arcs

Link to section 'Introduction' of 'arcs' Introduction

ARCS is a tool for scaffolding genome sequence assemblies using linked or long read sequencing data.

Home page: https://github.com/bcgsc/arcs

Link to section 'Versions' of 'arcs' Versions

- 1.2.4

Link to section 'Commands' of 'arcs' Commands

- arcs

- arcs-make

Link to section 'Module' of 'arcs' Module

You can load the modules by:

module load biocontainers

module load arcs

Link to section 'Example job' of 'arcs' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run arcs on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=arcs

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers arcs

asgal

Link to section 'Introduction' of 'asgal' Introduction

ASGAL (Alternative Splicing Graph ALigner) is a tool for detecting the alternative splicing events expressed in a RNA-Seq sample with respect to a gene annotation.

Docker hub: https://hub.docker.com/r/algolab/asgal and its home page on Github.

Link to section 'Versions' of 'asgal' Versions

- 1.1.7

Link to section 'Commands' of 'asgal' Commands

- asgal

Link to section 'Module' of 'asgal' Module

You can load the modules by:

module load biocontainers

module load asgal

Link to section 'Example job' of 'asgal' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run ASGAL on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=asgal

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers asgal

asgal -g input/genome.fa \

-a input/annotation.gtf \

-s input/sample_1.fa -o outputFolder

assembly-stats

Link to section 'Introduction' of 'assembly-stats' Introduction

Assembly-stats is a tool to get assembly statistics from FASTA and FASTQ files.

For more information, please check its website: https://biocontainers.pro/tools/assembly-stats and its home page on Github.

Link to section 'Versions' of 'assembly-stats' Versions

- 1.0.1

Link to section 'Commands' of 'assembly-stats' Commands

- assembly-stats

Link to section 'Module' of 'assembly-stats' Module

You can load the modules by:

module load biocontainers

module load assembly-stats

Link to section 'Example job' of 'assembly-stats' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Assembly-stats on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 00:10:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=assembly-stats

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers assembly-stats

assembly-stats seq.fasta

atac-seq-pipeline

Link to section 'Introduction' of 'atac-seq-pipeline' Introduction

The ENCODE ATAC-seq pipeline is used for quality control and statistical signal processing of short-read sequencing data, producing alignments and measures of enrichment. It was developed by Anshul Kundaje's lab at Stanford University.

Docker hub: https://hub.docker.com/r/encodedcc/atac-seq-pipeline

Home page: https://www.encodeproject.org/atac-seq/

Link to section 'Versions' of 'atac-seq-pipeline' Versions

- 2.1.3

Link to section 'Commands' of 'atac-seq-pipeline' Commands

- 10x_bam2fastq

- SAMstats

- SAMstatsParallel

- ace2sam

- aggregate_scores_in_intervals.py

- align_print_template.py

- alignmentSieve

- annotate.py

- annotateBed

- axt_extract_ranges.py

- axt_to_fasta.py

- axt_to_lav.py

- axt_to_maf.py

- bamCompare

- bamCoverage

- bamPEFragmentSize

- bamToBed

- bamToFastq

- bed12ToBed6

- bedToBam

- bedToIgv

- bed_bigwig_profile.py

- bed_build_windows.py

- bed_complement.py

- bed_count_by_interval.py

- bed_count_overlapping.py

- bed_coverage.py

- bed_coverage_by_interval.py

- bed_diff_basewise_summary.py

- bed_extend_to.py

- bed_intersect.py

- bed_intersect_basewise.py

- bed_merge_overlapping.py

- bed_rand_intersect.py

- bed_subtract_basewise.py

- bedpeToBam

- bedtools

- bigwigCompare

- blast2sam.pl

- bnMapper.py

- bowtie2sam.pl

- bwa

- chardetect

- closestBed

- clusterBed

- complementBed

- compress

- computeGCBias

- computeMatrix

- computeMatrixOperations

- correctGCBias

- coverageBed

- createDiff

- cutadapt

- cygdb

- cython

- cythonize

- deeptools

- div_snp_table_chr.py

- download_metaseq_example_data.py

- estimateReadFiltering

- estimateScaleFactor

- expandCols

- export2sam.pl

- faidx

- fastaFromBed

- find_in_sorted_file.py

- flankBed

- gene_fourfold_sites.py

- genomeCoverageBed

- getOverlap

- getSeq_genome_wN

- getSeq_genome_woN

- get_objgraph

- get_scores_in_intervals.py

- gffutils-cli

- groupBy

- gsl-config

- gsl-histogram

- gsl-randist

- idr

- int_seqs_to_char_strings.py

- interpolate_sam.pl

- intersectBed

- intersection_matrix.py

- interval_count_intersections.py

- interval_join.py

- intron_exon_reads.py

- jsondiff

- lav_to_axt.py

- lav_to_maf.py

- line_select.py

- linksBed

- lzop_build_offset_table.py

- mMK_bitset.py

- macs2

- maf_build_index.py

- maf_chop.py

- maf_chunk.py

- maf_col_counts.py

- maf_col_counts_all.py

- maf_count.py

- maf_covered_ranges.py

- maf_covered_regions.py

- maf_div_sites.py

- maf_drop_overlapping.py

- maf_extract_chrom_ranges.py

- maf_extract_ranges.py

- maf_extract_ranges_indexed.py

- maf_filter.py

- maf_filter_max_wc.py

- maf_gap_frequency.py

- maf_gc_content.py

- maf_interval_alignibility.py

- maf_limit_to_species.py

- maf_mapping_word_frequency.py

- maf_mask_cpg.py

- maf_mean_length_ungapped_piece.py

- maf_percent_columns_matching.py

- maf_percent_identity.py

- maf_print_chroms.py

- maf_print_scores.py

- maf_randomize.py

- maf_region_coverage_by_src.py

- maf_select.py

- maf_shuffle_columns.py

- maf_species_in_all_files.py

- maf_split_by_src.py

- maf_thread_for_species.py

- maf_tile.py

- maf_tile_2.py

- maf_tile_2bit.py

- maf_to_axt.py

- maf_to_concat_fasta.py

- maf_to_fasta.py

- maf_to_int_seqs.py

- maf_translate_chars.py

- maf_truncate.py

- maf_word_frequency.py

- makeBAM.sh

- makeDiff.sh

- makeFastq.sh

- make_unique

- makepBAM_genome.sh

- makepBAM_transcriptome.sh

- mapBed

- maq2sam-long

- maq2sam-short

- maskFastaFromBed

- mask_quality.py

- mergeBed

- metaseq-cli

- multiBamCov

- multiBamSummary

- multiBigwigSummary

- multiIntersectBed

- nib_chrom_intervals_to_fasta.py

- nib_intervals_to_fasta.py

- nib_length.py

- novo2sam.pl

- nucBed

- one_field_per_line.py

- out_to_chain.py

- pairToBed

- pairToPair

- pbam2bam

- pbam_mapped_transcriptome

- pbt_plotting_example.py

- peak_pie.py

- plot-bamstats

- plotCorrelation

- plotCoverage

- plotEnrichment

- plotFingerprint

- plotHeatmap

- plotPCA

- plotProfile

- prefix_lines.py

- pretty_table.py

- print_unique

- psl2sam.pl

- py.test

- pybabel

- pybedtools

- pygmentize

- pytest

- python-argcomplete-check-easy-install-script

- python-argcomplete-tcsh

- qv_to_bqv.py

- randomBed

- random_lines.py

- register-python-argcomplete

- sam2vcf.pl

- samtools

- samtools.pl

- seq_cache_populate.pl

- shiftBed

- shuffleBed

- slopBed

- soap2sam.pl

- sortBed

- speedtest.py

- subtractBed

- table_add_column.py

- table_filter.py

- tagBam

- tfloc_summary.py

- ucsc_gene_table_to_intervals.py

- undill

- unionBedGraphs

- varfilter.py

- venn_gchart.py

- venn_mpl.py

- wgsim

- wgsim_eval.pl

- wiggle_to_array_tree.py

- wiggle_to_binned_array.py

- wiggle_to_chr_binned_array.py

- wiggle_to_simple.py

- windowBed

- windowMaker

- zoom2sam.pl

Link to section 'Module' of 'atac-seq-pipeline' Module

You can load the modules by:

module load biocontainers

module load atac-seq-pipeline

Link to section 'Example job' of 'atac-seq-pipeline' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run atac-seq-pipeline on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=atac-seq-pipeline

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers atac-seq-pipeline

ataqv

Link to section 'Introduction' of 'ataqv' Introduction

Ataqv is a toolkit for measuring and comparing ATAC-seq results, made in the Parker lab at the University of Michigan.

For more information, please check its website: https://biocontainers.pro/tools/ataqv and its home page on Github.

Link to section 'Versions' of 'ataqv' Versions

- 1.3.0

Link to section 'Commands' of 'ataqv' Commands

- ataqv

Link to section 'Module' of 'ataqv' Module

You can load the modules by:

module load biocontainers

module load ataqv

Link to section 'Example job' of 'ataqv' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Ataqv on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 1

#SBATCH --job-name=ataqv

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers ataqv

ataqv --peak-file sample_1_peaks.broadPeak \

--name sample_1 --metrics-file sample_1.ataqv.json.gz \

--excluded-region-file hg19.blacklist.bed.gz \

--tss-file hg19.tss.refseq.bed.gz \

--ignore-read-groups human sample_1.md.bam \

> sample_1.ataqv.out

ataqv --peak-file sample_2_peaks.broadPeak \

--name sample_2 --metrics-file sample_2.ataqv.json.gz \

--excluded-region-file hg19.blacklist.bed.gz \

--tss-file hg19.tss.refseq.bed.gz \

--ignore-read-groups human sample_2.md.bam \

> sample_2.ataqv.out

ataqv --peak-file sample_3_peaks.broadPeak \

--name sample_3 --metrics-file sample_3.ataqv.json.gz \

--excluded-region-file hg19.blacklist.bed.gz \

--tss-file hg19.tss.refseq.bed.gz \

--ignore-read-groups human sample_3.md.bam \

> sample_3.ataqv.out

mkarv my_fantastic_experiment sample_1.ataqv.json.gz sample_2.ataqv.json.gz sample_3.ataqv.json.gz

atram

aTRAM (automated target restricted assembly method) is an iterative assembler that performs reference-guided local de novo assemblies using a variety of available methods.

Detailed usage can be found here: https://bioinformaticshome.com/tools/wga/descriptions/aTRAM.html

Link to section 'Versions' of 'atram' Versions

- 2.4.3

Link to section 'Commands' of 'atram' Commands

- atram.py

- atram_preprocessor.py

- atram_stitcher.py

Link to section 'Module' of 'atram' Module

You can load the modules by:

module load biocontainers

module load atram/2.4.3

Link to section 'Example job' of 'atram' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run aTRAM on our our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 20:00:00

#SBATCH -N 1

#SBATCH -n 24

#SBATCH --job-name=atram

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers atram/2.4.3a

atram_preprocessor.py --blast-db=atram_db \

--end-1=data/tutorial_end_1.fasta.gz \

--end-2=data/tutorial_end_2.fasta.gz \

--gzip

atram.py --query=tutorial-query.pep.fasta \

--blast-db=atram_db \

--output=output \

--assembler=velvet

atropos

Link to section 'Introduction' of 'atropos' Introduction

Atropos is a tool for specific, sensitive, and speedy trimming of NGS reads.

For more information, please check its website: https://biocontainers.pro/tools/atropos and its home page on Github.

Link to section 'Versions' of 'atropos' Versions

- 1.1.17

- 1.1.31

Link to section 'Commands' of 'atropos' Commands

- atropos

Link to section 'Module' of 'atropos' Module

You can load the modules by:

module load biocontainers

module load atropos

Link to section 'Example job' of 'atropos' Example job

Using #!/bin/sh -l as shebang in the slurm job script will cause the failure of some biocontainer modules. Please use #!/bin/bash instead.

To run Atropos on our clusters:

#!/bin/bash

#SBATCH -A myallocation # Allocation name

#SBATCH -t 1:00:00

#SBATCH -N 1

#SBATCH -n 4

#SBATCH --job-name=atropos

#SBATCH --mail-type=FAIL,BEGIN,END

#SBATCH --error=%x-%J-%u.err

#SBATCH --output=%x-%J-%u.out

module --force purge

ml biocontainers atropos

atropos --threads 4 \

-a AGATCGGAAGAGCACACGTCTGAACTCCAGTCACGAGTTA \

-o trimmed1.fq.gz -p trimmed2.fq.gz \

-pe1 SRR13176582_1.fastq -pe2 SRR13176582_2.fastq

augur

Link to section 'Introduction' of 'augur' Introduction

Augur is the bioinformatics toolkit we use to track evolution from sequence and serological data.

For more information, please check its website: https://biocontainers.pro/tools/augur and its home page on Github.

Link to section 'Versions' of 'augur' Versions

- 14.0.0

- 15.0.0

Link to section 'Commands' of 'augur' Commands

- augur

Link to section 'Module' of 'augur' Module

You can load the modules by:

module load biocontainers

module load augur